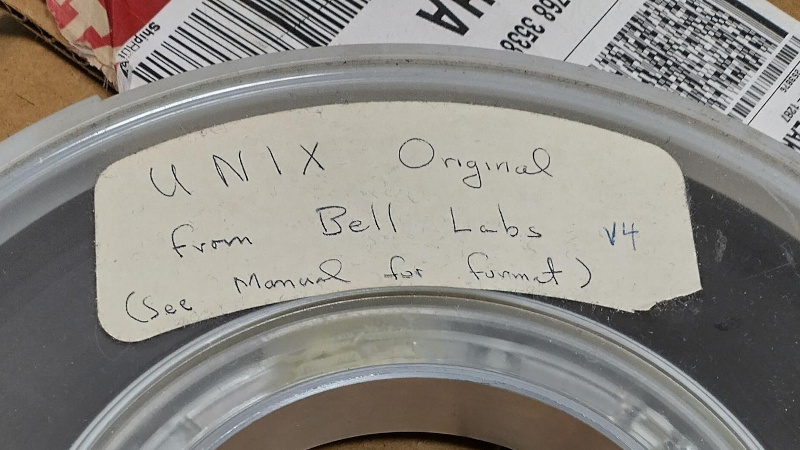

UNIX version 4 is quite special on account of being the first UNIX to be written in C instead of PDP-11 ASM, but it was also considered to have been lost to the ravages of time. Joyfully, we can report that the more than fifty year old magnetic tape that was recently discovered in a University of Utah storeroom did in fact contain the UNIX v4 source code. As reported by Tom’s Hardware, [Al Kossow] of Bitsavers did the recovery by passing the raw flux data from the tape read head through the ReadTape program to reconstruct the stored data.

Since the tape was so old there was no telling how much of the data would still be intact, but fortunately it turned out that the tape was not only largely empty, but the data that was on it was in good nick. You can find the recovered files here, along with a README, with Archive.org hosting the multi-GB raw tape data. The recovered data includes the tape file in SimH format and the filesystem

Suffice it to say that you will not run UNIX v4 on anything other than a PDP-11 system or emulated equivalent, but if you want to run its modern successors in the form of BSD Unix, you can always give FreeBSD a shot.
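For anyone who wants to poke at the SimH-format tape file mentioned above before booting it in an emulator, here is a minimal sketch of a record reader. It assumes the commonly described SimH `.tap` layout: each record is a 4-byte little-endian length, the data (padded to an even byte count), and the same length repeated, with a zero length acting as a tape mark and `0xFFFFFFFF` as end of medium. The names `read_simh_records` and `make_record` are hypothetical helpers for illustration, the demo runs on a synthetic image rather than the recovered tape, and other metadata markers such as erase gaps are not handled.

```python
import io
import struct

TAPE_MARK = 0x00000000      # separates files on the tape
END_OF_MEDIUM = 0xFFFFFFFF  # logical end of the tape image


def read_simh_records(stream):
    """Yield ("data", bytes) or ("mark", None) for each item in a .tap image."""
    while True:
        header = stream.read(4)
        if len(header) < 4:
            return  # physical end of the file
        (length,) = struct.unpack("<I", header)
        if length == END_OF_MEDIUM:
            return
        if length == TAPE_MARK:
            yield ("mark", None)
            continue
        # Assumption: the low 24 bits hold the byte count; upper bits are flags.
        size = length & 0x00FFFFFF
        data = stream.read(size)
        if len(data) < size:
            raise ValueError("truncated record data")
        if size % 2:
            stream.read(1)  # skip the pad byte after an odd-length record
        trailer = stream.read(4)
        if len(trailer) < 4 or struct.unpack("<I", trailer)[0] != length:
            raise ValueError("missing or mismatched record trailer")
        yield ("data", data)


def make_record(payload):
    """Build one synthetic record (hypothetical helper for the demo below)."""
    n = struct.pack("<I", len(payload))
    pad = b"\x00" if len(payload) % 2 else b""
    return n + payload + pad + n


# Synthetic image: one record, a tape mark, another record, end of medium.
image = (
    make_record(b"abc")
    + struct.pack("<I", TAPE_MARK)
    + make_record(b"unix")
    + struct.pack("<I", END_OF_MEDIUM)
)
items = list(read_simh_records(io.BytesIO(image)))
```

To try it on a real image, open the file in binary mode and pass the file object to `read_simh_records`; counting the tape marks gives a rough idea of how many files the tape holds.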

Sounds like the University of Utah storeroom needs more cleaning. Something that is over 50 years old sounds like those people who have a 2 car garage packed with so much stuff there is just a path, while they park their new cars outside.

Some places (like government agencies) are required to keep either the originals or the copies. Not all agencies have deep pockets to pay for scanning/storing, and some prefer not to bother at all.

As a side note, I’ve worked with no fewer than three projects handling archives, and, sadly, it is still hit and miss, because private entities can only last as long as their for-profit business model works, and once profit is gone, so are the archives. That’s another reason why some government agencies don’t even bother – UNRELIABILITY. You can also guess that the old school people who advised against privatization of such things have been fired (or retired, or died of old age), and the new acolytes picking things up think they know better, which they usually don’t.

Reason being, we (in the US) truly do NOT know the extent of what we have stored somewhere in the attics or damp basements, or disused janitor closets, or abandoned campuses/buildings, especially with the private universities/colleges that might as well be running covert KGB/Stasi operations – we wouldn’t know.

https://en.wikipedia.org/wiki/History_of_Unix#/media/File:Unix_history-simple.svg

Are single task OSes simple?

Are FIG Forth and Intel MCS BASIC-52 examples?

What languages was UNIX V4 written in?

AI Overview

UNIX Version 4 (V4) was primarily written in the C programming language, with some parts remaining in assembly language. This version, released in 1973, was a major turning point as it was the first Unix kernel to be largely written in a high-level language. The use of C, which was developed alongside Unix at Bell Labs by Dennis Ritchie, allowed the operating system to be more portable across different computer architectures, a revolutionary concept at the time. The remaining assembly code was mainly used for low-level, processor-specific tasks like initial booting and direct hardware interfacing, where C could not provide the necessary control or performance benefits.

Earlier versions of Unix (V0 through V3) had been written almost entirely in assembly language, with some utilities in the B language. The development of the C language was specifically motivated by the need for a portable and efficient systems programming language to rewrite the operating system.

Are multitasking OSes complex?

Yes, multitasking operating systems (OSes) are inherently complex because they must manage multiple processes simultaneously, requiring sophisticated scheduling algorithms, memory management, and resource allocation to give users the illusion of true parallel execution, especially with preemptive multitasking, which is more advanced than simpler cooperative methods. This complexity involves the OS constantly switching between tasks (context switching), prioritizing critical functions, and handling potential resource conflicts, all while maintaining system responsiveness and stability.

Key Complexities in Multitasking OSes:

Cooperative vs. Preemptive Multitasking:

who funded UNIX V4?

UNIX V4 (Fourth Edition), released in 1973, wasn’t “funded” in the modern commercial sense; it was an internal research project developed by AT&T Bell Labs, primarily by Ken Thompson, Dennis Ritchie, and others, using internal resources and equipment like the PDP-11 minicomputer. They initially secured a new computer by promising Bell Labs’ patent department to build software for them, making it a passion project that evolved into a landmark operating system, funded by Bell Labs’ research budget.

Key Funding & Development Details:

Developer: AT&T Bell Laboratories (Western Electric). Key People: Ken Thompson, Dennis Ritchie, Doug McIlroy, Joe Ossanna.

…

In essence, UNIX V4 was an outgrowth of Bell Labs’ research efforts, leveraging internal funding and resources rather than external.

Perhaps you can describe what you like to convey in your own words?

Convey in your own words please. Do your ‘own’ research. The dumbing down of society (idiocracy) is really getting under way I see….

Cool beans on the recovery though. Another old OS for posterity.

No, if you want me to listen to your thoughts, don’t offload your comment to AI. I promise I won’t read it.

yea no kidding .. just posting AI slop for no reason is a waste of everyone’s time.

Nobody on the planet earth wants an “AI” overview. Stop making the planet worse and stop posting this crap as comments on hackaday.

Maybe you don’t like them but I do and I’m fine with those posts. If you don’t like reading something close your Internet Browser and go touch the grass, it’s that simple bro.

What you call ‘posts’, Hackaday calls ‘thoughts on’, think about that :)

The problem with AI generated crap is that if it does not know any facts it makes garbage up. Anytime I test AI (by asking a question about an area I have a great depth of knowledge), just to see if it has improved at all, it gets so much wrong it is no longer funny to me. Garbage in (from AI generated hallucinating slop scraped from pollution on the Internet) is so tainted that calling it Garbage out is high praise.

The real problem is, as shown by the current state of American politics, too many people are very naive of most everything around them and they like it that way. They believe a diverse education is politically motivated brain washing. What that leaves us with is really ignorant people who can type a few short sentences into an AI chat prompt, publish that as their own thoughts and feel smucking fart.

I’ve challenged AI as you have and come to the same conclusion: you have to know about the subject you are asking about to know where the failures are. At best AI for me is an outline generator for topic discussions and code generation.

I see in support forums, here and on /. people posting AI drivel or saying they used AI for a task and now need help from others because they don’t know jack about the basics of the task they are attempting.

That reminds me of the retro computing hobby, which is rather obscure.

ChatGPT was wrong about the AT&T 6300 monochrome video mode when I asked it months ago.

It said it’s 640×200 (that’s CGA hi-res), but we clearly know it’s 640×400 pixels.

So I had corrected it and told it to think again about the matter, after which it admitted its mistake.

Meanwhile, the mistake seems fixed and it mentions the native hi-res mode.

But the point is that answers by LLMs do look confident and correct on a superficial level. So laymen may fall for it.

The more we go into details, however, the more the picture changes. It will make minor errors that can be fatal if they are not recognized by a human being.

Just think of Fahrenheit 451, the book.

The title is about the temperature at which paper starts to burn on its own.

Except that this number is not quite scientifically proven, actually.

An LLM might not communicate this detail, however.

It might just repeat the author’s reasoning for the title and present it as established fact.

hear, hear! anyone who wants it can use the tools – this should be a place for actual thoughts

Foisting your chatbot copy/paste on non-consenting people as a “thought” here is appalling.

If we wanted to rot our brains and de-skill ourselves like that we wouldn’t be here. Have a little more intellectual curiosity than that.

What ever happened to the report button?

https://edramatica.com/images/1/1a/Beecock.jpg

You should get checked for diabetes if that’s attracting bugs

It’s not a bug, it’s a feature.

One commenter at Lobster makes a fair point:

What started as essentially a computing shitpost became an abomination that can render your workstation unusable with simple OS update just because you have an older but perfectly capable GPU. Fuck you loser, buy bleeding edge hardware or get rekt lel.

And we all thought Microsoft is evil.

Your criticism comes off as completely unhinged. If you run into that GPU driver problem, you will have to deal with the problem…say la vee. It’s much less work than dealing with any of the problems they ran into on early computers.

But more to the point, all of the major early innovations in computing have a YOLO aspect to them, because the most important discovery was not the way to do something but rather the fact that it is possible and in fact easy. The first people to actually do the thing were the people who were willing to risk that it might turn out to be impossible or undesirable. Due to the expense of the hardware and the enormous cost in manpower to work on them, it was rare for a serious use to be really visionary. The visionary things come off as distinctly unserious by comparison. Even “goto considered harmful” used to be radical nonsense.

It was never radical nonsense, there just weren’t always the tools or theory or training available to do without it. Coming up from assembly and machine code, goto seems like a natural tool to have. When getting into higher levels of abstraction with high level languages it becomes necessary to look at other solutions.

That has very little to do with Linux itself, and everything to do with NVidia being… well… NVidia.

Oh dear, you seem to have forgotten that Microsoft just made millions of perfectly capable computers obsolete because of the Windows 11 requirements. Written on such a computer, using MXLinux.

Have they stopped working overnight just because Windows 11 was released? I swear sometimes Linux fanboys are worse than even Stepan Bandera supporters.

I think same.

And I still remember all the outcry when Windows 10 was being forced upon Windows 7 users in the last decade.

People protested back then because they didn’t want ads for Windows 10 in their taskbar!

The sneaky upgrade pop-up window then went so far that closing the window by clicking on the “x” close button started the “upgrade”.

Anyway, nowadays people worship that same mean Windows 10 and think that Windows 11 is “unfair”.

Heck, Windows is a property of Microsoft.

The company can do with it what it wants to, provided it doesn’t violate contracts, maybe.

There’s no human right for having a recent Windows on an old PC.

No one forces users to upgrade to Windows 11, either.

If they have an old PC, they can keep using it for playing old games on Windows 10.

Or feel free to install any other operating system.

That’s how things always have been.

In the 80s and 90s, PC users had to upgrade the system every 2-3 years in order to keep up.

Which was even “worse” than it had been in the past 25 years.

ms has pulled this crap before. only this time linux is actually good enough to replace it.

Who, today, in a modern society runs/settles for Windows? Windows free here and not missing a thing.

Yeah, you don’t have to upgrade to 11. But from all the ‘chatter’ it is recommended (and played up as a security risk, and the rest of the marketing speak) and the lemmings will reach for their wallets, and either upgrade or, if they think about it, move to something else and breathe free. :) My guess is the former, as it is the ‘easy’ route: just buy another hardware platform with it already installed. Most of us (me included… before Linux) dutifully paid up (new hardware, new OS, new virus checker, new Office, etc.), before wising up to the pocket bleeding.

Austria is moving away from Microsoft software, which I think is good.

Here in Europe we must become a bit more independent.

The less US software, the better.

https://www.heise.de/en/news/Austria-s-armed-forces-switch-to-LibreOffice-10660761.html

I don’t know, the US isn’t the one trying to break encryption, ban VPNs or require ID to make comments so I think I trust them more.

@Dan The US probably has the biggest espionage network, has access to backdoors in most if not all popular US software,

mandates that US companies hand over international user data on demand (Patriot Act, CLOUD Act, etc.),

has troops stationed in most countries and taps the traffic directly at the telcos in those foreign countries (blackboxes installed).

What the EU does or doesn’t do is amateurish by comparison so far, I’d say.

To my knowledge, the proposed real name requirement for posts on the internet isn’t there yet, either.

I agree that EU is doing annoying things, but it’s in parts due to incompetence, lobbyism and bureaucratic ignorance.

Also, the EU (mainly) makes rules for within its own territory only, it doesn’t enforce anything internationally. Unlike other nations.

China isn’t better than the US, maybe, but the people over there are at least educated and acting rational. Or that’s how it seems on a superficial level, at least.

The US by comparison gets more and more hostile against former partners such as Europe or Canada.

For whatever reasons, I don’t know. It’s in the news so often.

And that’s worrying. That’s why we, the rest of the world, have to learn to become more independent.

And that involves breaking free from traditional use of Windows or MS Office, MS Teams, OneDrive Cloud, Whatsapp etc.

Because they’re all sending telemetry/data to US servers,

where they’re being examined and exploited for the sake of US interests.

Data of us European citizens and companies isn’t even protected by US law, because US law only protects rights of US citizens.

So we’re being f*cked twice here. And that’s not okay, that has to change.

(What I wrote above is merely my humble understanding of the current situation, of course.

And for many things I’m probably just stating the obvious here.

These things are often being discussed on the internet and in other media.)

That nvidia thing is such a non-story. Educated users of Arch Linux know that they are their own sysadmins, and know to follow the Arch News in case of breaking updates coming down the pipeline. If you want to use a bleeding edge rolling distro you have to be informed. Users who desire more hand-holding are free to use virtually any other Linux distro, where there’s generally a lot more catering to Windows converts who aren’t used to being responsible for their own systems. The Arch Linux install and its “choose your own adventure” way isn’t for everyone, and no one should claim it is.

It’s such a sad state of society when Linux users being presented with a text console login prompt is considered making the workstation “unusable”. If you’re using Arch Linux and cannot construct a pacman command, that says nothing bad about Arch.

So you bought an unsupported graphics card. Installed a proprietary driver. And now you complain when the vendor decides to drop support? Are you an idiot or what?

Another great reminder what .tar actually means

+1

Great argument for archiving and backups:

If no one has a copy, was it actually good? Counter argument: They hogged everything and didn’t let the average Joe get close to make own a copy.

Just how some people at university hiss if you want to use shared department equipment (and treat it well). It evokes a Gollum tier “my treasure” reply.

*their own copy

Do not give FreeBSD shots! It’s a mean drunk!

Better BSDrunk than high on what MS is smokin’

What are the contributions Ritchie and Thompson have left to computing?

Buggy, malware vulnerable, unmaintainable [uncertified] software technology requiring endless series of costly updates?

No, they left the C programming language and UNIX. People then used C and UNIX to give us the buggy, malware vulnerable….junk that we have today. Don’t blame the tools, blame the craftsperson. Don’t really understand what you mean by “uncertified”, uncertified by whom?

If anybody is keen to play around with this newly discovered system I’ve created a site where you can get a live (emulated) terminal of it in your browser – https://unixv4.dev

Check it out and let me know of any thoughts or feedback that you have (or sign the guestbook!)