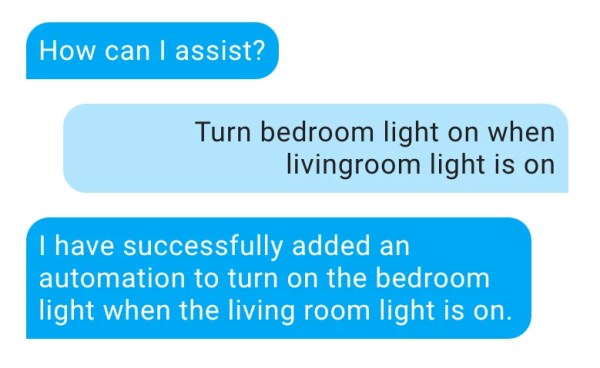

For many, the voice assistants are helpful listeners. Just shout to the void, and a timer will be set, or Led Zepplin will start playing. For some, the lack of flexibility and reliance on cloud services is a severe drawback. [John Karabudak] is one of those people, and he runs his own voice assistant with an LLM (large language model) brain.

In the mid-2010’s, it seemed like voice assistants would take over the world, and all interfaces were going to NLP (natural language processing). Cracks started to show as these assistants ran into the limits of what NLP could reasonably handle. However, LLMs have breathed some new life into the idea as they can easily handle much more complex ideas and commands. However, running one locally is easier said than done.

A firewall with some muscle (Protectli Vault VP2420) runs a VLAN and NIPS to expose the service to the wider internet. For actually running the LLM, two RTX 4060 Ti cards provide the large VRAM needed to load a decent-sized model at a cheap price point. The AI engine (vLLM) supports dozens of models, but [John] chose a quantized version of Mixtral to fit in the 32GB of VRAM he had available.