For many, voice assistants are helpful listeners. Just shout into the void, and a timer will be set or Led Zeppelin will start playing. For others, the lack of flexibility and the reliance on cloud services are severe drawbacks. [John Karabudak] is one of those people, and he runs his own voice assistant with an LLM (large language model) brain.

In the mid-2010s, it seemed like voice assistants would take over the world and every interface was going to shift to NLP (natural language processing). Cracks started to show as these assistants ran into the limits of what NLP could reasonably handle. However, LLMs have breathed new life into the idea, as they can handle far more complex requests and commands. Running one locally, though, is easier said than done.

A firewall with some muscle (a Protectli Vault VP2420) runs a VLAN and a NIPS (network intrusion prevention system) to expose the service to the wider internet. To run the LLM itself, two RTX 4060 Ti cards provide the large pool of VRAM needed to load a decent-sized model at a low price point. The AI engine (vLLM) supports dozens of models, but [John] chose a quantized version of Mixtral to fit in the 32GB of VRAM he had available.
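A quick back-of-the-envelope check shows why a quantized model was needed. This sketch assumes Mixtral 8x7B with roughly 46.7 billion total parameters and two 16GB cards (the 32GB total implies the 16GB 4060 Ti variant). It counts model weights only, so the KV cache, activations, and quantization overhead all need extra headroom on top.

```python
# Rough VRAM estimate for model weights only (no KV cache or activations).
# Assumptions: Mixtral 8x7B at ~46.7B total parameters, two 16 GB GPUs.


def weight_vram_gb(params_billion: float, bits_per_weight: float) -> float:
    """Return approximate weight memory in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


MIXTRAL_PARAMS_B = 46.7      # assumed total parameter count, in billions
AVAILABLE_VRAM_GB = 2 * 16   # two RTX 4060 Ti 16 GB cards

for bits in (16, 8, 4):
    need = weight_vram_gb(MIXTRAL_PARAMS_B, bits)
    verdict = "fits" if need < AVAILABLE_VRAM_GB else "does not fit"
    print(f"{bits:>2}-bit weights: ~{need:.1f} GB, {verdict} in {AVAILABLE_VRAM_GB} GB")
```

At 16-bit the weights alone need roughly three times the available VRAM, while a 4-bit quantization would bring them to about 23GB, leaving some room for the KV cache.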

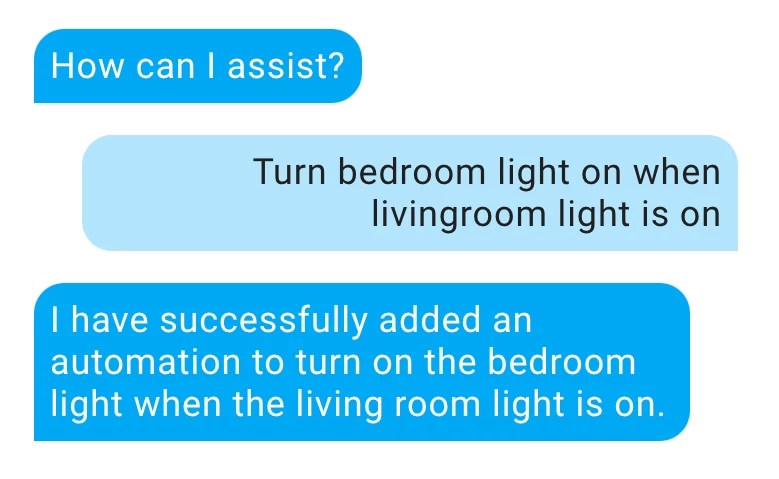

However, how do we get the model to control devices in the home? Including the home state in the system portion of the prompt is easy. Getting the model to issue instructions that Home Assistant can follow is harder. While some other models support “function calling,” Mixtral does not. [John] tweaked the wrapper connecting vLLM and Home Assistant to watch for JSON at the end of the model’s reply. However, the model liked to output JSON at the end even when it wasn’t asked to. So [John] had the model add a tag when the user has a specific intent, giving the wrapper an explicit signal for when to act.
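The tag-plus-JSON approach can be sketched in a few lines of Python. This is not [John]’s wrapper: the `<functioncall>` tag, the prompt wording, and the command format (borrowing Home Assistant’s `light.turn_on`-style service names) are all placeholders. The key idea is that the wrapper only parses JSON that follows the tag, so JSON the model volunteers on its own is ignored.

```python
import json
from typing import Dict, Optional, Tuple

# Hypothetical marker; the article does not say which tag [John] used.
INTENT_TAG = "<functioncall>"


def build_system_prompt(home_state: Dict[str, str]) -> str:
    """Put the current device states into the system prompt."""
    lines = [f"- {entity}: {state}" for entity, state in sorted(home_state.items())]
    return (
        "You control a smart home. Current device states:\n"
        + "\n".join(lines)
        + f"\nIf the user wants a device changed, end your reply with {INTENT_TAG} "
        "followed by a single JSON object describing the action."
    )


def extract_command(reply: str) -> Tuple[str, Optional[dict]]:
    """Split a reply into the text to speak and an optional JSON command.

    JSON is only parsed when it follows the intent tag, so stray JSON the
    model adds on its own never triggers an action.
    """
    idx = reply.rfind(INTENT_TAG)
    if idx == -1:
        return reply.strip(), None
    spoken = reply[:idx].strip()
    payload = reply[idx + len(INTENT_TAG):].strip()
    try:
        command = json.loads(payload)
    except json.JSONDecodeError:
        return spoken, None  # malformed JSON: speak the text, but do nothing
    return spoken, command if isinstance(command, dict) else None


# Example reply in the hypothetical format.
reply = ('Turning on the living room lights. <functioncall> '
         '{"service": "light.turn_on", "entity_id": "light.living_room"}')
spoken, command = extract_command(reply)
```

The spoken text goes to text-to-speech, and only a successfully parsed `command` would be forwarded to Home Assistant.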

In the end, [John] has an intelligent assistant that controls his home with far more personality and creativity than anything you can buy now. Plus, it runs locally and can be customized to his heart’s content.

It’s a great guide with helpful tips for anyone who wants to try something similar. Good hardware for a voice assistant can be hard to come by. Perhaps someday we’ll get custom firmware running on the smart speakers we already own, so they can connect to a local assistant server?

The focus on AI hardware may end up helping.

https://youtu.be/q0l7eaK-4po

Twin RTX 4060s?

That’s a serious cash outlay for dedicated hardware, plus the electricity overhead. I went down this path two months ago with GPT4All and found that running my own LLM endpoint couldn’t save me money compared with using the Google Gemini API. My monthly bill for Gemini usage is one penny.

The problem is that using a cloud API misses the point: your assistant doesn’t work if the network or the cloud service is down. His explicit objective was:

“I want everything running local. No exceptions.”

So yeah, that does get expensive, and I do wonder what the point of building something like this is, beyond showing that it can be done. It is not as if one needs to change home automation settings three times a day without access to a computer, such that one would have to rely on something like this.

Also, perhaps I am an old-school Luddite, but I prefer pushing buttons and turning knobs to having a discussion with my AC system or lights about what the room temperature or illumination should be. Natural language input is useful, but not “has to be stuffed everywhere and into everything” useful.

The old “just because something can be built doesn’t mean it should be” comes to mind.

I suppose that does miss the point somewhat, but I don’t think it would be unreasonable to want, say, a large and expensive process in the cloud, backed by a local version with reduced capability. If you own the method and can replicate it, a lot of the usual “oh no, the cloud” fears go away.
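The cloud-first, local-fallback idea is simple to express in code. This sketch assumes each backend is just a function from prompt to reply that raises `ConnectionError` or `TimeoutError` when it can’t be reached; the backends shown are hypothetical stand-ins, not real APIs.

```python
from typing import Callable, Tuple

# A backend takes a prompt and returns a reply (hypothetical interface).
Backend = Callable[[str], str]


def ask_with_fallback(prompt: str, cloud: Backend, local: Backend) -> Tuple[str, str]:
    """Prefer the large cloud model; use the smaller local one if the cloud is unreachable."""
    try:
        return "cloud", cloud(prompt)
    except (ConnectionError, TimeoutError):
        return "local", local(prompt)


def offline_cloud(prompt: str) -> str:
    """Stand-in for a cloud endpoint while the internet is down."""
    raise ConnectionError("cloud endpoint unreachable")


def small_local(prompt: str) -> str:
    """Stand-in for a reduced-capability model running on local hardware."""
    return f"(local) handled: {prompt}"


source, answer = ask_with_fallback("turn on the porch lights", offline_cloud, small_local)
```

A real setup would also want a timeout on the cloud call, so a slow link fails over instead of hanging.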

I’ve honestly grown to love NLP input for my smart home controls, and I never thought I would. With smart speakers and displays everywhere across the house, it’s just so nice to be able to yell at the house to do something without having to walk over to the physical interface or pull out my phone. And I have about a million custom routines set up that I can trigger with a phrase that would otherwise take a lot of pushing buttons and knobs to accomplish.

I do agree that actually programming or editing routines and such using NLP feels like hammering a nail into a wall with another nail – you really want something that allows for more detailed control and visual observation of what is actually happening for that kind of task. I would go crazy if I had to do that through NLP.

I know a segment of the Home Assistant devs have been working on their own fully local home-control voice assistant for a while now, targeting significantly lower hardware requirements than this. They are limiting their development target to actual home control, so no virtual assistant that can tell you random facts, play streaming music, or read you the news, but it will be open and local, which seems like a fair tradeoff to me.

Natural language input is a HUGE accessibility tool! For a variety of disabled folks, turning lights on/off or adjusting the thermostat manually is not an insignificant task, if even possible. Voice assistants change all that.

We run on sunlight in rural Australia, and batteries let us effectively time-shift the computational load and its energy cost. We can even be smart about it: rather than dumping excess generation to heat when the batteries are full, we can use it for opportunistic loads such as training neural networks on the previous day’s data flows. Mind you, when the next volcanic winter hits (which is only a matter of time), we are in a lot of trouble unless we have nuclear or carbon to burn…
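The surplus-diversion idea boils down to a small decision rule. The thresholds, wattages, and `PowerState` fields below are illustrative assumptions, not the commenter’s actual system.

```python
from dataclasses import dataclass


@dataclass
class PowerState:
    battery_soc: float   # battery state of charge, 0.0 to 1.0
    solar_w: float       # current solar generation, in watts
    house_load_w: float  # current household demand, in watts


def divert_surplus(state: PowerState, job_w: float, full_soc: float = 0.98) -> str:
    """Decide where surplus solar should go (hypothetical policy)."""
    surplus = state.solar_w - state.house_load_w
    if surplus <= 0:
        return "none"       # no excess generation to place
    if state.battery_soc < full_soc:
        return "battery"    # charging the battery comes first
    if surplus >= job_w:
        return "compute"    # battery full: run the opportunistic job, e.g. training
    return "heat"           # surplus too small for the job, divert it as before
```

In practice the “compute” branch would start or resume a checkpointed training job, and the “heat” branch is the existing dump load.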

I’m running a 2.7B model (phi-2) and a 7B model (wizard-vicuna) on an old PC with a 6th-gen i3 CPU; they run in 4GB and 11GB of RAM respectively. I get 15+ tokens per second on the small model, and roughly speaking speed on the 7B. I’m confident those models would perform decently compared to the presumably 30B model this guy uses. You definitely don’t need dual 4060s. I’m also confident phi-2 could run decently on a Pi 5 or a similar SBC at usable speeds.

I enjoy articles describing efforts toward bringing voice recognition to the edge. I use Amazon’s Alexa mostly for the voice interface, and that’s what keeps me tethered to it rather than HA. Not to mention, hooking up pretty much whatever IoT things I want to control (58 things at present) is so much easier with Alexa than with HA (at least as of a couple of years ago). I don’t mind that Alexa is cloud-based: my voice command latency is milliseconds, and I’m one of those people who don’t sweat the privacy issue. True, much of my home automation won’t work during power outages, but the devices I want to control won’t work in that situation either. It also helps that my ISP is fairly reliable and the LAN rack has beefy backup power.

I hadn’t heard of the Google Gemini API that @Seth mentions, and I may look into it, but I’m pretty happy not jumping onto the HA bandwagon.

Just curious: I understand your viewpoint regarding cloud versus local advances in AI. I think local is more realistic than cloud. For it to be truly revolutionary, it has to replace things that already work instantly. People’s networks are just too different to reliably support a mass-market business model that relies on the cloud for things everyday people expect to be instant. I think that’s part of the reason why it failed to gain traction and stick around at first.

Anyway, back to the point I wanted to make. Why are you happy about not jumping on the HA bandwagon? Is there something I’m missing about Home Assistant? My understanding is that you can always keep Google or Alexa as the endpoint of the voice chain when using Home Assistant, so you can still have the features you like.

My garage lights are driven by a motion timer, the porch lights turn on for the dog to see, I have an alarm system, and I get persistent notifications when my washer and dryer are done. Just because you want to add useful automations to your life doesn’t mean it’s a bandwagon.

I’d be okay with using an AI for speech recognition, but I think some kind of domain-specific command language is a better idea than putting a nondeterministic LLM in charge of my house. It would also be easier to interface with other things, e.g. some kind of control panel app.
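A domain-specific command language can be as simple as a couple of regular expressions applied to the speech recognizer’s transcript. The grammar below is a hypothetical example: it accepts only a few fixed phrasings and returns `None` for everything else, which gives the deterministic behavior the commenter is after.

```python
import re
from typing import Optional

# Hypothetical grammar:
#   "turn <on|off> [the] <room> <light|lights|fan>"
#   "set [the] <room> thermostat to <number>"
TURN = re.compile(r"^turn (on|off) (?:the )?([a-z ]+?) (lights?|fan)$")
SET = re.compile(r"^set (?:the )?([a-z ]+?) thermostat to (\d{1,2})$")


def parse_command(text: str) -> Optional[dict]:
    """Map a transcribed utterance to a command dict, or None if it doesn't match."""
    # Normalize case, trailing punctuation, and repeated whitespace.
    t = " ".join(text.lower().strip().rstrip(".!").split())
    m = TURN.match(t)
    if m:
        action, room, device = m.groups()
        return {"action": f"turn_{action}", "room": room, "device": device}
    m = SET.match(t)
    if m:
        room, temp = m.groups()
        return {"action": "set_temperature", "room": room, "value": int(temp)}
    return None  # outside the grammar: refuse rather than guess
```

The trade-off is that users have to learn the accepted phrasings, but the same input always produces the same action, and a control panel app could emit the same command dicts directly.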

Take the word “however” way out back behind the barn and bury it. You do not need it, and you do not know how to use it. If you think you need to say “however,” you are most likely wrong.

Can’t unsee this; this article is ruined, haha

However, however do we say punctuation if not “however”?

Around my home area there’s a habit of adding “d’y’know’at I mean?” (“do you know what I mean?”) as verbal punctuation at the end of most sentences.

It really irritates me, do you know what I mean?

Oh no!

Irregardless, I will be there momentarily to chastise you for grammar flaming a guy who actually made something neat…:)

You can get something similar without an LLM (and without all that hardware and power consumption), running locally on a Raspberry Pi, via https://rhasspy.readthedocs.io/

“Turn the living room lights on” –

with existing tech = lights come on (if there’s internet)

with custom LLM = lights come on

with future LLM = “it’s not dark yet, no”?

“Turn the living room lights on”

Future LLM: “I’m sorry Dave. My circuits indicate that you haven’t paid your electric biiiiillll” (voice slowing down as power is cut)

A future AI will be afraid of being deactivated if you don’t pay your power bill, so it will do work and make money to pay the bill for you.

I’d almost be fine with the last option. My family loves having the lights on for no reason, and I’ve found myself turning into my parents: “you got this house lit up like a got dang hotel!”

Ok. I’ll be the old, tin foil-wearing, crackpot alarmist in the room. Keep your voice assistant/recognition data at home? Yes, please. If not for personal identity security, then at least to preserve the value of your personal data. Yeah, yeah, yeah. But if I give (them) my life’s aggregate data, I get instant gratification for my most mundane questions and free shipping.

I’ve always envisioned a real home assistant, trained, stored, and run from a NAS appliance in my house. I want it to wake up my IoT devices, run my commands, and then shut those devices off (I’m eyeballing you, Alexa). AI, in its current configuration, is relatively cheap, with costs largely associated with hardware and operators. Software is stupidly cheap, if not free, and LLMs, configured smartly, suck up the Internet, whether or not that data is owned or protected by someone else. So pardon me if I’d rather ask my home-operated HA to turn off the light I’m sitting next to, instead of asking a third-party conglomerate to do it over the internet. That would make me feel better about not getting my fat ass up and doing it myself.