For those who were alive and conscious before the modern Internet, there were in fact things that went “viral” and became cultural phenomena for one reason or another. Although they didn’t spread as quickly or get forgotten as fast, things like Beanie Babies or greeting a friend with an exaggerated “Whassup?” could all be considered viral hits of the pre-Internet era.

Another offline hit from the late 90s was the Billy Bass, an absurdist bit of physical comedy in the form of a talking, mock-taxidermied fish. Back then it could only recite a few canned lines, but modern hardware can give it a whole new life.

This project comes to us from [Cian], who gutted the fish’s original electronics and rebuilt it as a smart voice assistant around modern components, starting with an ESP32-S3. This chip has enough power to detect custom “wake words” that activate the assistant, relay the conversation to and from a more powerful computer, handle audio input and output, and drive the fish’s head and tail motors. These motors and the speaker are the only original components remaining. The new hardware, including an amplifier for the speaker, is mounted on a custom 3D-printed backplate.
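
The article doesn’t describe the firmware’s internals, but the job list above (wake word detection, relaying speech to a server, playing back the reply, driving the motors) maps naturally onto a small state machine. The Python sketch below is purely hypothetical: the state names, the event names (`wake_word`, `reply_ready`, `timeout`, `playback_done`), and the head/tail choreography are assumptions for illustration, not [Cian]’s code. Real firmware would also have to handle audio streaming and the motor drivers, which are left out here.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()       # waiting for the wake word
    LISTENING = auto()  # streaming microphone audio to the server (assumed)
    SPEAKING = auto()   # playing the server's reply through the speaker (assumed)


# Hypothetical event names; the real firmware's events are not described.
TRANSITIONS = {
    (State.IDLE, "wake_word"): State.LISTENING,
    (State.LISTENING, "reply_ready"): State.SPEAKING,
    (State.LISTENING, "timeout"): State.IDLE,
    (State.SPEAKING, "playback_done"): State.IDLE,
}


def next_state(state, event):
    """Return the next state; events that don't apply leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def motor_commands(state):
    """Assumed mapping from state to (head_on, tail_on) motor outputs."""
    if state is State.LISTENING:
        return (True, False)  # turn the head toward the user while listening
    if state is State.SPEAKING:
        return (True, True)   # head out and tail flapping while talking
    return (False, False)     # both motors off when idle


def run(events, state=State.IDLE):
    """Feed a sequence of events through the machine, recording state and motors."""
    history = []
    for event in events:
        state = next_state(state, event)
        history.append((state, motor_commands(state)))
    return history


if __name__ == "__main__":
    for state, (head, tail) in run(["wake_word", "reply_ready", "playback_done"]):
        print(state.name, head, tail)
    # LISTENING True False
    # SPEAKING True True
    # IDLE False False
```

Keeping the transitions in a lookup table means stray events (say, a second wake word while the fish is already talking) are simply ignored rather than interrupting playback, which is one reasonable choice among several.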

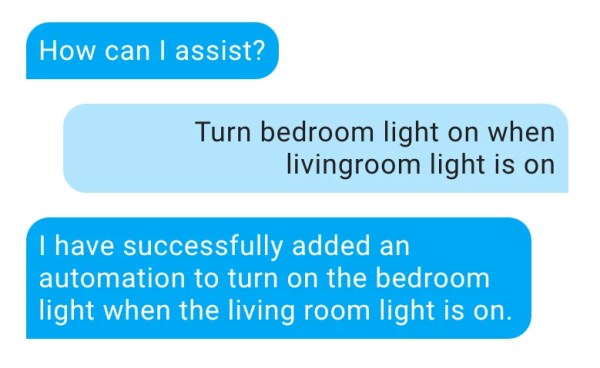

After some testing and troubleshooting, the augmented Billy was ready to listen for commands and converse with the user much as Amazon’s Alexa or any other smart speaker would. [Cian] built it to work with Home Assistant, though, so it’s far more open and easier to recreate for anyone who still has one of these pieces of 90s kitsch in a box somewhere.

Perhaps unsurprisingly, these talking fish have been the basis of plenty of hacks since their original release, such as this one from a few years ago that improves the fish’s singing ability, or this one from 2005 that brings Linux to one.

The build is based on an ESP32-LyraT development board. Unlike most dev boards, this one has two onboard 3-watt audio outputs and microphones, making it perfect for a build like this one. The LyraT was paired with some NeoPixel LEDs and a pair of Dayton Audio 1.5″ speakers so it can interact with the user both audibly and visually.