If a picture is worth a thousand words, a video must be worth millions. However, computers still aren’t very good at analyzing video. Machine vision software like OpenCV can do certain tasks like facial recognition quite well. But current software isn’t good at determining the physical nature of the objects being filmed. [Abe Davis, Justin G. Chen, and Fredo Durand] are members of the MIT Computer Science and Artificial Intelligence Laboratory. They’re working toward a method of determining the structure of an object based upon the object’s motion in a video.

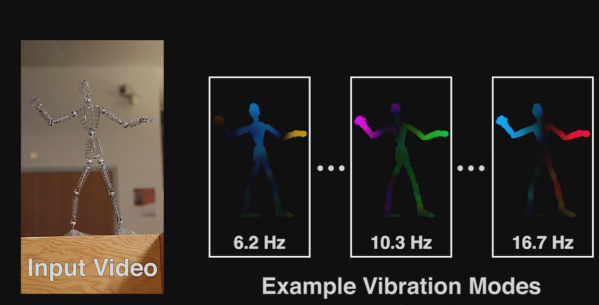

The technique relies on vibrations which can be captured by a typical 30 or 60 Frames Per Second (fps) camera. Here’s how it works: A locked down camera is used to image an object. The object is moved due to wind, or someone banging on it, or any other mechanical means. This movement is captured on video. The team’s software then analyzes the video to see exactly where the object moved, and how much it moved. Complex objects can have many vibration modes. The wire frame figure used in the video is a great example. The hands of the figure will vibrate more than the figure’s feet. The software uses this information to construct a rudimentary model of the object being filmed. It then allows the user to interact with the object by clicking and dragging with a mouse. Dragging the hands will produce more movement than dragging the feet.

The results aren’t perfect – they remind us of computer animated objects from just a few years ago. However, this is very promising. These aren’t textured wire frames created in 3D modeling software. The models and skeletons were created automatically using software analysis. The team’s research paper (PDF link) contains all the details of their research. Check it out, and check out the video after the break.