The HTC Vive is the clear winner of the oncoming VR war, and is ready to enter the hallowed halls of beloved consumer electronics behind the Apple Watch, Smart Home devices, the 3Com Audrey, and Microsoft’s MSN TV. This means there are going to be a lot of Vives on the secondhand market very soon, opening the doors to some interesting repurposing of some very cool hardware.

[Trammell Hudson] has been messing around with the Vive’s Lighthouse – the IR emitting cube that gives the Vive its sense of direction. There’s nothing really special about this simple box, and it can indeed be used to give any microcontroller project an orientation sensor.

The Vive’s Lighthouse is an exceptionally cool piece of tech that uses multiple scanning IR laser diodes and a bank of LEDs to let the Vive sense its own orientation. It does this by alternately flashing the LEDs and sweeping the lasers from left to right and from top to bottom. The relevant measurements that can be determined from two Lighthouses are the horizontal angle from the first lighthouse, the vertical angle from the first lighthouse, and the horizontal angle from the second lighthouse. That’s all you need to orient the Vive in 3D space.
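Each angle comes from timing. The receiver measures the delay between the LED flash and the moment the sweeping laser crosses the sensor, and converts that delay into degrees. The sketch below shows only this conversion. It assumes the Lighthouse 1.0 rotor spins at 60 Hz. `CENTER_US` is a hypothetical calibration constant for the delay at which the sweep crosses the Lighthouse's center line. Neither value comes from the article.

```python
ROTOR_HZ = 60.0                      # assumed rotor speed (Lighthouse 1.0)
DEG_PER_US = 360.0 * ROTOR_HZ / 1e6  # degrees swept per microsecond (~0.0216)
CENTER_US = 4000.0                   # hypothetical sync-to-center delay; calibrate per unit


def sweep_angle_deg(sync_to_hit_us: float) -> float:
    """Angle of the sensor off the Lighthouse's center line, in degrees.

    sync_to_hit_us: time from the start of the sync flash to the laser hit.
    """
    return (sync_to_hit_us - CENTER_US) * DEG_PER_US
```

For example, `sweep_angle_deg(5000.0)` gives about 21.6 degrees. Under these assumptions, each microsecond of timer error costs roughly 0.02 degrees.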

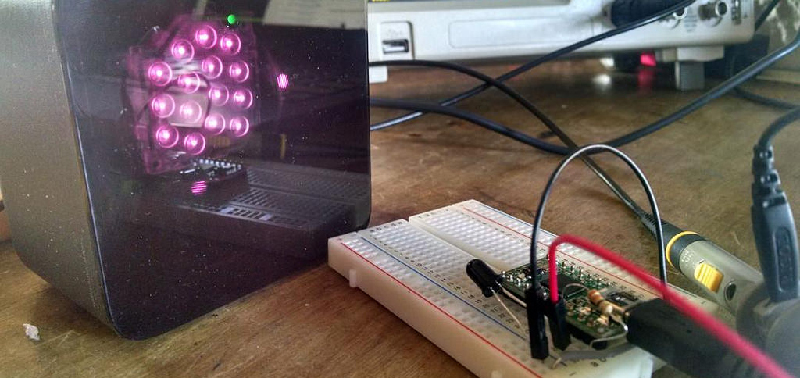

To get a simple microcontroller to do the same trick, [Trammell] is using a fast phototransistor with a 120° field of view. This setup only works out to about a meter away from the Lighthouses, but that’s enough for testing.

[Trammell] is working on a Lighthouse library for the Arduino and ESP8266, and so far, everything works. He’s able to get the angle of a breadboard to a Lighthouse with just a little bit of code. This is a great enabling build that is going to allow a lot of people to build some very cool stuff, and we can’t wait to see what happens next.
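The article doesn't show Trammell's code, so what follows is a hypothetical sketch only. It relies on community reverse-engineering of Lighthouse 1.0, which reports two things:

- Sync flashes are much longer than laser hits.
- The sync length (roughly 62.5 µs plus steps of about 10.4 µs) encodes skip, data, and axis bits.

The decoder assumes that bit layout, plus a 50 µs cutoff between sync and sweep pulses, a 60 Hz rotor, and a hypothetical `CENTER_US` constant. `PulseDecoder` and `feed` are illustrative names, not Trammell's library API.

```python
from typing import Optional, Tuple

ROTOR_HZ = 60.0                      # assumed rotor speed
DEG_PER_US = 360.0 * ROTOR_HZ / 1e6  # degrees swept per microsecond
CENTER_US = 4000.0                   # hypothetical sync-to-center delay; calibrate
SYNC_BASE_US = 62.5                  # assumed shortest sync flash
SYNC_STEP_US = 500.0 / 48.0          # assumed ~10.4 us per encoded step
SWEEP_MAX_US = 50.0                  # assumed: shorter pulses are laser hits


class PulseDecoder:
    """Hypothetical decoder turning (start, width) pulses into (axis, angle)."""

    def __init__(self) -> None:
        self.sync_start_us: Optional[float] = None
        self.axis = 0

    def feed(self, start_us: float, width_us: float) -> Optional[Tuple[int, float]]:
        if width_us < SWEEP_MAX_US:
            # Laser hit: time it from the last sync of the sweeping Lighthouse.
            if self.sync_start_us is None:
                return None
            delta_us = start_us - self.sync_start_us
            return self.axis, (delta_us - CENTER_US) * DEG_PER_US
        # Sync flash: length encodes skip (bit 2), data (bit 1), axis (bit 0).
        code = max(0, min(7, round((width_us - SYNC_BASE_US) / SYNC_STEP_US)))
        if not (code >> 2) & 1:  # ignore flashes from a Lighthouse that skips
            self.sync_start_us = start_us
            self.axis = code & 1
        return None  # the data bit is ignored in this sketch
```

In a real build, an interrupt handler would timestamp the phototransistor's rising and falling edges and pass each pulse to `feed`.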

This is relevant to my interests!

He should contact Valve; it’s no secret they intended the Vive for tinkering like this, and if you mail them your current work I’m sure they would be happy to send you some of the phototransistors they actually use.

It’s probably not the transistor that limits the sensitivity but the lack of any optical filter. Without a filter, the transistor gathers all the background light, which lowers the signal-to-noise ratio. If you add a good bandpass IR filter that matches the exact wavelength of the IR LEDs, it will cut off most of the ambient light noise and only let the signal through.

I run an IR camera conversion business. I have a lot of scrap IR longpass glass of several wavelengths. If anyone wants some glass to test this let me know.

Floppy disks (if you can find them) are also great for passing IR but not visible light. Pieces cut from old floppies can be used as IR optical filters. I have used them with great success in the past.

EDIT: I just noticed that the linked article DOES use floppy disk IR filters. Also, I just noticed that I can edit my posts?

Nope. Although it appeared to be changing the existing post when I replied, it actually duplicated it when I pressed the POST button. A glitch in the reply system…

The thing is that (as far as I’m aware) each of the sensors (which are a photodiode plus ‘some components’) handles each of the signals, so they do have a way. They’re mentioned and shown in this video: https://youtu.be/QLBxz7djQvc (see 7:40 if you don’t care about the rest)

OK, HaD editors …

First, this hack only gives *position*; it can’t provide orientation in space. For that you need at least 5 sensors in a calibrated constellation if using a single Lighthouse, or 3 if using two.
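The comment above says one sensor seen by two Lighthouses yields only a position. A minimal sketch of that position fix follows. Each Lighthouse's horizontal and vertical angles define a ray, and the estimate is the midpoint of the shortest segment between the two rays.

The sketch makes several assumptions:

- Both Lighthouses have known positions and the same orientation, looking along +z.
- Angles are in radians, measured from each center line, and stay within ±90°.

This uses four angles, one more than the minimum of three, which helps absorb noise. A real system would also need each base station's pose.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def ray_direction(h_rad: float, v_rad: float) -> Vec:
    # Assumed convention: Lighthouse looks along +z; h sweeps in x, v sweeps in y.
    return (math.tan(h_rad), math.tan(v_rad), 1.0)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate(p1: Vec, h1: float, v1: float, p2: Vec, h2: float, v2: float) -> Vec:
    """Midpoint of the shortest segment between the two Lighthouse rays."""
    d1, d2 = ray_direction(h1, v1), ray_direction(h2, v2)
    w0 = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel")
    s = (b * e - c * d) / denom  # parameter of closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of closest point on ray 2
    q1 = [p + s * k for p, k in zip(p1, d1)]
    q2 = [p + t * k for p, k in zip(p2, d2)]
    return tuple((u + w) / 2.0 for u, w in zip(q1, q2))
```

Orientation still needs several sensors in a known constellation, an IMU, or both.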

Actual Vive wands use an internal IMU for orientation, though. Whether they use the Lighthouse data to correct the orientation estimates is not really known, but they apparently do that for the position estimate. They get the position by integrating the accelerometer data twice (which gives a very low-latency measurement at ~1000Hz) and correct the quickly accumulating errors using the Lighthouse tracker, which runs much slower (~100Hz).
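The comment notes that Valve's actual fusion isn't public, so this is not Valve's method. It is a generic 1D alpha-beta observer illustrating the idea described: double-integrate a 1000 Hz accelerometer and correct the drift with 100 Hz optical fixes.

The assumptions are:

- The object is at rest at x = 0.
- The accelerometer reports only a constant bias.
- The optical fix is noise-free.
- The gains `alpha=0.5` and `beta=0.1` are hypothetical.

```python
def track(n_steps: int, accel_bias: float, dt: float = 0.001, fix_every: int = 10,
          alpha: float = 0.5, beta: float = 0.1, use_fixes: bool = True) -> float:
    """Final 1D position estimate for an object that is really at rest at x = 0."""
    x = v = 0.0
    fix_period = dt * fix_every           # 10 ms between optical fixes (~100 Hz)
    for i in range(1, n_steps + 1):
        a = accel_bias                    # true acceleration is 0; IMU reports only its bias
        v += a * dt                       # first integration: velocity
        x += v * dt                       # second integration: position
        if use_fixes and i % fix_every == 0:
            z = 0.0                       # optical fix (noise-free in this toy example)
            r = z - x                     # residual between optical and inertial position
            x += alpha * r                # pull position toward the fix
            v += (beta / fix_period) * r  # bleed off accumulated velocity error
    return x
```

The results differ sharply after two seconds with a 0.1 m/s² bias. Without fixes, `track(2000, 0.1, use_fixes=False)` drifts to about 0.2 m. With fixes, `track(2000, 0.1)` stays below a millimetre.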

Second, the sensor is known: the real Vive wands use BPW43 photodiodes. The most likely reason for using a photodiode instead of a phototransistor is that the laser light from the lighthouses is modulated at around 2 MHz. This helps reject interference from other light sources and potentially allows more systems to work in the same space by transmitting data over the light signal. Phototransistors may not be fast enough for this.
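The "fast enough" argument can be quantified with the usual single-pole rule of thumb: a 10-90% rise time t_r corresponds to a -3 dB bandwidth of about 0.35 / t_r. The sketch below applies that approximation. The 2 MHz figure comes from the comment above. The 5 µs rise time in the usage note is a hypothetical example value, not a number from any specific datasheet.

```python
def max_rise_time_s(bandwidth_hz: float) -> float:
    """Longest 10-90% rise time that still gives the requested -3 dB bandwidth."""
    return 0.35 / bandwidth_hz  # single-pole approximation: t_r = 2.2*tau, f = 1/(2*pi*tau)


def bandwidth_hz(rise_time_s: float) -> float:
    """Approximate -3 dB bandwidth implied by a 10-90% rise time."""
    return 0.35 / rise_time_s
```

Following a ~2 MHz carrier needs a rise time of roughly 175 ns (`max_rise_time_s(2e6)`). A part with a hypothetical 5 µs rise time gives only about 70 kHz of bandwidth (`bandwidth_hz(5e-6)`).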

The range problem the poster has is because he has connected the phototransistor directly to the MCU, with no amplification, signal filtering, nothing. So no wonder it performs very poorly and works only when very close to the LightHouse. The optical filter is not needed, because the phototransistor he is using has an IR pass one built-in (dark plastic casing is the filter).

The actual hardware (or at least the older, less integrated versions of it) has been posted by Alan Yates from Valve and is a lot more complex, needing several transistors plus a comparator for each photodiode. Or one can use 2 opamps, as they did in one of the very early prototypes, but that is going to be bandwidth-limited. The current retail version uses a custom ASIC instead, to allow for miniaturization and to reduce costs.

This is the opamp-based sensor amplifier:

https://pbs.twimg.com/media/Cl2SiwJUYAAhd8l.jpg:large

And this is the early discrete one:

https://pbs.twimg.com/media/CfA7HtZVIAAtoRK.jpg:large

I don’t understand the first paragraph. Is it sarcasm? What’s wrong with the Vive that it’s going to flop like the other stuff (the other stuff are flops, right?)

Benchoff is farming for clicks again. Just ignore him. (see @Jan Ciger’s post above)

He hasn’t written an article in the past half year that didn’t have a line that was inflammatory, completely incorrect, or displayed a hint of any fundamental engineering knowledge.

E.g. his previous article on the Monoprice 3D printer, with blatantly false info about Cura:

“The standard Buddha from [kim]. This print exhibits Z banding, but that’s an issue with the slicer (Cura), and not the printer”

This is completely false, as many Cura users like myself and others can attest to.

I mean this in the nicest way Mr. Benchoff, but maybe you should change your last name from Benchoff to Fuckoff.

<3

Kwtoska is just being unnecessarily inflammatory again. Just ignore him. He hasn’t written a comment in the past year that wasn’t inflammatory, or completely incorrect.

See here, where he criticizes (incorrectly) the grammar of a post. Or here, where he accuses me of censorship (the fact that I allow him to post should be enough evidence against that).

Kwtoska is just another member of my fan club. It’s like any fandom: no one hates the source material more than the most fervent fans.

I’ll give you an example: I love Star Trek, but hate the terrible episodes of Voyager. I hate Brannon and Braga for the missed opportunities of Enterprise. The Next Generation movies shouldn’t be called Star Trek, and I don’t know what the Abrams movies are. There’s so much I hate about Star Trek, but there’s no way I could hate it this much without loving it. My fan club is just like any fandom, and I’m more than happy to have people accuse me of terrible things – those people are my biggest fans.

And as for [Jan]’s objections above, let me go through the process of writing this post. Somebody built something, then wrote up how they built it. I wrote a summary of this that is completely accurate in reference to the source material. Suddenly, it’s my problem the original project doesn’t use an opamp, or the correct photodiode.

In any event, if you don’t like what I write, you don’t have to read it. Taking responsibility for your actions may seem a bit strange, but trust me: it really is the way to go here.

Ignorance, not malice: it really is a good saying.

Maybe the rest of the editors should just repeat whatever you do, #MakeHaDGreatAgain

Is it weird that I heard a certain candidate’s voice saying that?

#MakeHaDGreatAgain

Thank you Brian for the years of great FREE entertainment and information and project ideas. Despite the ravings of your most loyal fans and other comment trolls (that seem to be getting louder here every day), I truly enjoy reading your articles and those of the other HaD editors.

I went from being irritated at the dumb comments to being angry at J.J. Abrams.

Lol, good job!

I totally understand that you take the negative comments to heart, Brian, but frankly, Matt B. isn’t the only one with this question:

> I don’t understand the first paragraph. Is it sarcasm?

Mind shedding some light onto this? To me, the Vive is the clear winner of the currently available sets, just like you say. How does that relate to all those failed consumer electronics devices that you mention?

I totally second this. I thought that comment was earnest and I had the exact same question. I was hoping for a real answer?

Sorry, I should have been clearer – my objection was to your claim that the device can provide orientation in space – which it obviously can’t. Even the original article doesn’t make that claim. The only time the orientation is mentioned is at the very end, where the original author explains the math that would be needed, but he has never built it.

So to quote:

“[Trammell Hudson] has been messing around with the Vive’s Lighthouse – the IR emitting cube that gives the Vive its sense of direction. There’s nothing really special about this simple box, and it can indeed be used to give any microcontroller project an orientation sensor.”

That is obviously incorrect and not what the original article says at all.

“The relevant measurements that can be determined from two Lighthouses are the horizontal angle from the first lighthouse, the vertical angle from the first lighthouse, and the horizontal angle from the second lighthouse. That’s all you need to orient the Vive in 3D space.”

That is patently incorrect as well.

To be able to determine orientation, you need multiple sensors (photodiodes or phototransistors) in a *known constellation* – i.e. a set of calibrated 3D points. Which is actually why Valve discourages people from opening the Vive controllers or headset because it will disturb the calibration. Alternatively you need an IMU. Or both, as Vive controllers actually use. A single optical sensor is not sufficient, period.

The rest of my comment was aimed at the commenters that were speculating about the poor performance, not really at you. I should have been clearer about that.

And the first paragraph – I am not quite sure what you are implying by that. That Vive is a market flop? With 70k-100k sold so far according to the recent Steam statistics? Considering it has been on sale for only a few months and costs almost $1000, that is a pretty respectable result, IMHO.

Cool. This operates just like the aircraft VOR navigation systems in use since 1950 or so, except that the VOR systems require no moving parts.

It does not. VOR uses two phase-shifted signals to encode the beam direction, and the plane decodes the azimuth to the ground station from that, not by actually measuring angles. Oh, and the original VORs *did* use moving parts: the antenna rotated, much like a radar antenna. Today’s VORs use phased arrays instead to make the beam rotate.

Lighthouse is more similar to the Nikon iGPS system: http://www.nikonmetrology.com/Products/Large-Volume-Applications/iGPS/iGPS/(key_features)

However, iGPS doesn’t use receiver constellations like Lighthouse uses, so to get a position fix, each receiver must be hit by multiple beacons. Also that system is meant for factory floor (e.g. navigating robots transporting material), so orientation is less important.

All the VORs I ever used (since 1983 or so) had phased array antennas. I don’t know when mechanical ones were phased out.

As for similarities:

Neither system “measures angles”. Both measure only time delays between a reference and a rotating signal.

VOR uses a phase reference (the FM signal), just like the sync burst from the Lighthouse.

VOR measures the delay to the peak of the rotating AM signal (the phase), just like the lighthouse measures the delay to the rotating laser pulse.

Both VOR and Lighthouse use that measured delay to determine the receiver azimuth (bearing) *from* the source. (not the azimuth *to* the source, as you state).

Both a VOR receiver and a Vive require bearing measurements from at least two sources to determine its position in space.

A Lighthouse adds elevation, and a Vive adds a fixed receiver constellation to compute pose, but the basic operating principle is the same: Both VOR and the Lighthouse/Vive determine their bearing from a fixed ground station and use multiple bearings to determine their location.

Also: I do note that the Vive system does not require multiple source locations to compute position: its multiple receivers on the headset allow it to determine its location in space from a single Lighthouse, akin to a VOR receiver taking a few bearing measurements from different locations in flight.

So much negative criticism here directed at the author of this article [Brian Benchoff] as well as the author of the source [Trammell Hudson]. If I had done this and read these comments, I would avoid being featured on Hackaday again.

I say props to both and take the comments with a grain of salt.

I think the negative comments were mainly aimed at the author of the HaD article, not at Trammell Hudson, who has published the original thing.

BPW43 has a peak wavelength of 900nm. This most likely means that LightHouse also uses 900nm LEDs. This in turn means that if you connect LightHouse to your car you could *sometimes* jam police lidars. Heck, with the Vive wand + LightHouse and a custom firmware you have your own Lidar jammer. All hardware is there…

Nah, the lidar light is modulated. It’d be about as effective as trying to jam wifi using a bluetooth device.

Exactly. Or it’s pulsed, anyway; maybe not actually “modulated” in the usual sense.

Consider that your average car already has two directional emitters totalling 110 watts, with a peak emission near 900 nm already. (headlights…) A CW emitter of any sane power isn’t going to do anything to the receiver.

Now, if you could use the IR sensor that the Lighthouse uses to *sync*, you could sense the lidar pulse frequency and retransmit at a different frequency to spoof whatever speed you want (hint: don’t retransmit at the same frequency…).

Assuming, of course, the Lighthouse emitter wavelength can get through the lidar’s very narrowband receiver filter.

“Now, if you could use the IR sensor that the Lighthouse uses to *sync*, you could sense the lidar pulse frequency and retransmit at a different frequency to spoof whatever speed you want (hint: don’t retransmit at the same frequency…).”

Exactly what I meant when I said “with custom firmware” :-) With this Lighthouse + sensor you have a way of receiving those pulses at 904nm and a way to make your own transmission using the Lighthouse. Each LIDAR device has its own pulse format, so a very fast microcontroller that analyzes the pulses before they end and generates an appropriate jamming signal should do the trick. That is roughly how it is done nowadays anyway.