They say that a picture is worth a thousand words. But what is a picture exactly? One definition would be a faithful reflection of what we see, like one taken with a basic camera. Our view of the natural world is constrained to a band of roughly 400 to 700 nanometers (nm) within the electromagnetic spectrum, so our everyday cameras are built to produce images within this same band.

For example, if I take a picture of a yellow flower with my phone, the image will look just about how I saw it with my own eyes. But what if we could see the flower from a different part of the electromagnetic spectrum? What if we could see below 400 nm or above 700 nm? A bee, like many other insects, can see into the ultraviolet part of the spectrum, which lies below 400 nm. To a bee, this “yellow” flower looks drastically different than it does to us.

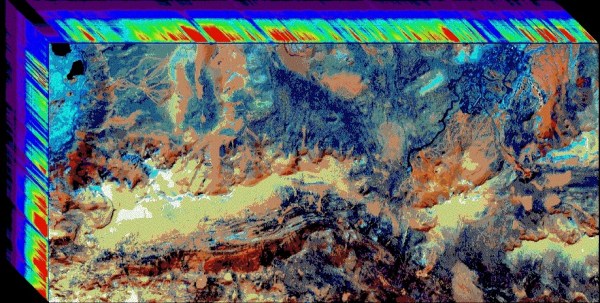

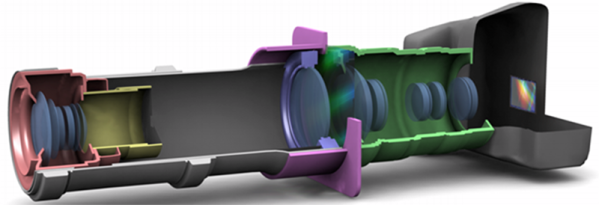

In this article, we’re going to explore how images can be produced to show spectral information outside of our limited visual capacity, and take a look at the multi-spectral cameras used to make them. We’ll find that while it may be true that an image is worth a thousand words, it is also true that an image taken with a hyperspectral camera can be worth hundreds of thousands, if not millions, of useful data points.
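To put that data-point claim in rough numbers: an ordinary color camera records three values (red, green, blue) per pixel, while a hyperspectral camera records an intensity for each of many narrow wavelength bands, so the total grows as width × height × number of bands. The short Python sketch tallies this; the 640×480 resolution and the 100-band count are hypothetical figures chosen only for illustration, not the specs of any particular camera.

```python
# Rough count of measured values in one image, assuming every pixel stores
# one intensity per spectral band. The resolution and band counts used here
# are hypothetical, chosen only to illustrate the scale of the data.

def values_per_image(width: int, height: int, bands: int) -> int:
    """Total number of intensity values recorded in a single image."""
    return width * height * bands

# An ordinary color camera: three broad bands (red, green, blue).
rgb = values_per_image(640, 480, 3)

# A hypothetical hyperspectral camera: same resolution, 100 narrow bands.
hyper = values_per_image(640, 480, 100)

print(f"RGB image:           {rgb:,} values")
print(f"Hyperspectral image: {hyper:,} values")
```

With these assumed numbers the color image holds 921,600 values, while the hyperspectral one holds 30,720,000, because each pixel carries a whole spectrum instead of just three color samples.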