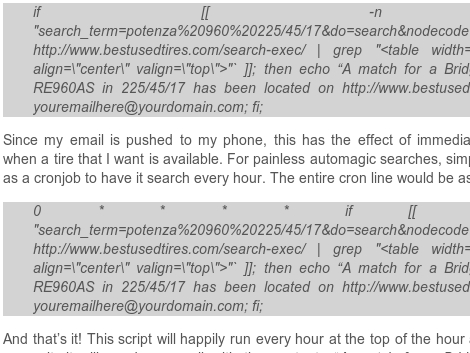

Automating something involving data from the Internet can be confusing when the page you need is generated by user input. For instance, let’s say you want to scrape data from a page that loads after you submit a search box. [Andrew Peng] posted a quick and dirty example to help you write your own scripts. The example he used checks stock on one of the websites he frequents. His process involves finding the URL that all searches are submitted to, establishing the HTTP method (GET or POST) used to send the search string, and grabbing the resulting data. He parses the result and sends off an email if it finds what he’s looking for. But this could be used for a lot of things, and it shouldn’t be a problem to make it alert you in any way you can imagine. Maybe we’ll use this to add some functionality to our rat.
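For readers who want to try the steps described above, here is a minimal Python sketch using only the standard library. It is not [Andrew Peng]’s actual script: the search URL, the form field name `q`, the sender address, and the phrase being checked are all hypothetical placeholders you would replace with what you find in the target site’s form.

```python
import urllib.parse
import urllib.request
from email.message import EmailMessage
from html.parser import HTMLParser

# Hypothetical values: substitute the form's real "action" URL and field name,
# found by reading the page source or watching the browser's network tab.
SEARCH_URL = "https://shop.example.com/search"
QUERY_FIELD = "q"


def build_search_request(term: str, method: str = "GET") -> urllib.request.Request:
    """Encode the search term the same way the site's form would."""
    params = urllib.parse.urlencode({QUERY_FIELD: term})
    if method.upper() == "GET":
        # GET forms put the parameters in the query string.
        return urllib.request.Request(f"{SEARCH_URL}?{params}", method="GET")
    # POST forms send them url-encoded in the request body.
    return urllib.request.Request(
        SEARCH_URL,
        data=params.encode("ascii"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def fetch(req: urllib.request.Request) -> str:
    """Send the request and return the result page as text (needs network)."""
    with urllib.request.urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class _TextCollector(HTMLParser):
    """Collects all text between tags (including any script/style contents)."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []

    def handle_data(self, data: str) -> None:
        self.chunks.append(data)


def page_mentions(html: str, phrase: str) -> bool:
    """Crude check: does the page text contain the phrase we are waiting for?"""
    collector = _TextCollector()
    collector.feed(html)
    collector.close()  # flush any buffered trailing text
    text = " ".join(collector.chunks)
    return phrase.lower() in text.lower()


def build_alert(term: str, to_addr: str) -> EmailMessage:
    """Compose the notification email (send it with smtplib)."""
    msg = EmailMessage()
    msg["Subject"] = f"In stock: {term}"
    msg["From"] = "scraper@example.com"  # hypothetical sender address
    msg["To"] = to_addr
    msg.set_content(f"The search for {term!r} now shows it in stock.")
    return msg
```

In a cron job you would call `fetch(build_search_request(...))`, test the page with `page_mentions`, and hand the message from `build_alert` to `smtplib.SMTP(...).send_message()`. Swapping the email step for any other notifier only touches the last function.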

Wow. And people bitch at me for writing Perl scripts?

Regexes for scraping are weak. Use something with an object-oriented interface to the DOM, and it probably won’t break as often.
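To illustrate the point, here is a minimal sketch using Python’s standard-library `html.parser`. It is event-based rather than a true DOM, but it shares the key advantage: it tokenizes the markup, so attribute order, quoting style, and extra whitespace don’t break it the way they break a regex. The class name `price` is a hypothetical example, and badly mismatched tags in broken HTML can still throw off the depth counting.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text inside every element whose class list includes `wanted`."""

    def __init__(self, wanted: str) -> None:
        super().__init__()
        self.wanted = wanted
        self.depth = 0               # > 0 while inside a matching element
        self.current: list[str] = []
        self.results: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1          # nested element inside a match
        elif self.wanted in (dict(attrs).get("class") or "").split():
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.results.append("".join(self.current).strip())

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


def texts_with_class(html: str, css_class: str) -> list[str]:
    parser = ClassTextExtractor(css_class)
    parser.feed(html)
    parser.close()
    return parser.results
```

The DOM libraries suggested elsewhere in this thread (lxml, Beautiful Soup) reduce this to a one-line query, but the principle is the same: match on parsed structure, not on raw text.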

@Derek, have a suggestion? I could use an XML parser, but most websites would fail to parse, as they usually aren’t valid XML. I’ve written a number of “convert website data into iCalendar and push to Google Calendar” sorts of “scripts” in Python using regexes. They seem to work.

Of course, I would be more interested in how to load a bit of Flash from a website without the whole website, so that I can have the user of my app press the button to verify they are human. Oh, and I’d like it to work on *nix, Windows XP and up, and OS X.

Yeah, a DOM parser works great. XPath and XSL also help to expedite things.
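A small sketch of the XPath idea using Python’s `xml.etree.ElementTree`, which implements only a limited XPath subset and only accepts well-formed markup (hence the advice to clean pages up first). The table layout, `id`, and class names are hypothetical, and the sample deliberately omits the XHTML namespace; a real XHTML page that declares `xmlns` would need namespace-qualified paths.

```python
import xml.etree.ElementTree as ET

# Hypothetical, already well-formed (e.g. tidied) markup.
XHTML = """
<html>
  <body>
    <table id="results">
      <tr class="item"><td class="name">Widget</td><td class="stock">3</td></tr>
      <tr class="item"><td class="name">Gadget</td><td class="stock">0</td></tr>
    </table>
  </body>
</html>
"""


def stock_levels(markup: str) -> dict[str, int]:
    """Map each item name to its stock count using XPath-style paths."""
    root = ET.fromstring(markup.strip())
    levels = {}
    # ElementTree supports "//" descendant steps and [@attr='value'] predicates.
    for row in root.findall(".//table[@id='results']/tr[@class='item']"):
        name = row.find("td[@class='name']").text
        stock = int(row.find("td[@class='stock']").text)
        levels[name] = stock
    return levels
```

For full XPath 1.0 and XSLT support you would need a third-party library such as lxml; the standard library stops at this subset.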

You can clean up or convert things to proper XML/HTML before using a DOM parser by first running the data through something like htmltidy or prettyhtml (I think that’s what they’re called).

Use an HTML parser…

“Beautiful Soup is a Python HTML/XML parser designed for quick turnaround projects like screen-scraping.”

http://www.crummy.com/software/BeautifulSoup/

Potenzas — good choice :)

@Cynyr, there is a nice Python library called “Beautiful Soup” for parsing HTML. It is quite tolerant of incorrect HTML/XML code, and it is well documented (including lots of small examples). Maybe it is of use to you.

@IceBrain

BeautifulSoup is no longer maintained, and it doesn’t handle broken HTML as well as lxml. Scrapy is a better choice for web scraping, or possibly Mechanize + PyQuery if something simpler is needed. Using Python, that is (and why wouldn’t you?).

I will second CH’s call to use Mechanize (WWW::Mechanize in Perl). It is like LWP but better. You can even log into HTTPS sites with it. w00t.

Thanks all for the suggestions; I like the lxml one. Of course, none of them will help if you need to run JS or Flash bits to get the data you want. There are a number of sites that do nothing but serve up a Flash cookie and some JS, and then use the JS and the Flash cookie to load the rest of the data, to prevent scraping. Stupid if you ask me, but oh well.

I so hate that sort of thing, those Flash and JS crippling attempts.

Or sometimes they just use silly web-building software or a template and don’t know any better, and it’s not even meant as a block.

I dislike it even though I’m not into scripting these things and scraping.

You might be interested in YQL (http://developer.yahoo.com/yql/). It lets you query the Internet as if it were a database.

Other things you might be interested in are Google search alerts, where you set up a query and are notified by email when there are results, and Yahoo Pipes, a “custom RSS engine” that lets you edit, modify, and filter RSS channels and websites, run them through external services, etc.

Yay, Perl!

First of all, always set a fake user agent such as Mozilla or IE; otherwise the admin might think you are a bot and ban you from the site.
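A minimal Python sketch of setting that header with `urllib`. By default `urllib` identifies itself as `Python-urllib/3.x`; the browser-style string below is only an example value, not one the commenter specified.

```python
import urllib.request

# Example browser-style User-Agent (an assumption; use whatever string you need).
USER_AGENT = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:115.0) "
              "Gecko/20100101 Firefox/115.0")


def make_request(url: str) -> urllib.request.Request:
    """Build a request that identifies itself with USER_AGENT."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def fetch(url: str) -> bytes:
    """Download url with the custom header (requires network access)."""
    with urllib.request.urlopen(make_request(url), timeout=10) as resp:
        return resp.read()
```

To apply the same header to every request instead, you can build an opener with `urllib.request.build_opener()` and set its `addheaders` attribute.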

Secondly, it’s simpler and more elegant to do it with PHP’s cURL extension.

@Timmah:

I actually meant the Python module, but I’ve never used the Perl one.

Maybe there’s some kind of AJAX-capable module for Python too that can scrape the required data. I’d try a programming subreddit or Stack Overflow.

@CH, BeautifulSoup is still maintained. In fact, the most recent release (3.2.0) was just released on November 21, 2010. It is an amazing package, and in my experience it handles broken HTML just fine.

@logan

See the changelog: http://www.crummy.com/2010/11/21/0

There are very few changes; the main one was the version number.

The author also mentions that he is not working on Beautiful Soup. Given this, and the problems people have had with broken HTML, especially compared with lxml (I can’t comment on your own experience), I reckon it is unmaintained.

On second thoughts, I wouldn’t use Scrapy.

It’s not nice to use for projects that don’t involve large-scale shell-controlled crawling (mining?).