The Oculus Rift and all the other 3D video goggle solutions out there are great if you want to explore virtual worlds with stereoscopic vision, but until now we haven’t seen anyone exploring real life with digital stereoscopic viewers. [pabr] combined the Kinect-like sensor in an ASUS Xtion with a smartphone in a Google Cardboard-like setup for 3D views the human eye can’t naturally experience, such as a third-person view, a radar-like display, and a look at what the world would be like with your eyes 20 inches apart.

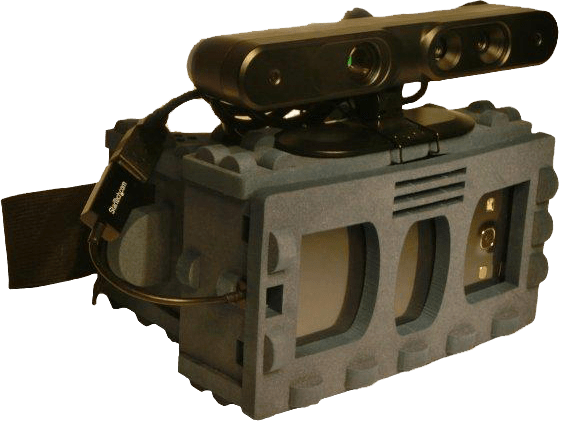

[pabr] is using an ASUS Xtion depth sensor connected to a Galaxy SIII via the USB OTG port. With a little bit of code, the output from the depth sensor can be pushed to the phone’s display. The headset itself is a VR-Spective, a rather expensive bit of plastic, though with the right mechanical considerations a piece of cardboard or some foam board and hot glue would do quite nicely.
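For a rough idea of what the "eyes 20 inches apart" view involves, here is a minimal sketch of the usual pinhole-camera relation between depth and stereo disparity. This is not [pabr]'s code: the function names are hypothetical, and the focal length of 570 pixels is only an assumed ballpark for a VGA depth sensor.

```python
# Hypothetical sketch, not [pabr]'s implementation.
# Pinhole model: disparity (pixels) = focal length (pixels) * baseline / depth.

INCH_M = 0.0254  # metres per inch


def disparity_px(depth_m, baseline_m, focal_px):
    """Horizontal pixel shift between the left and right views of one point."""
    if depth_m <= 0:
        return None  # depth sensors commonly report 0 when they have no reading
    return focal_px * baseline_m / depth_m


def stereo_columns(x_px, depth_m, baseline_m, focal_px):
    """Columns where a pixel seen at x_px lands in the left and right views.

    The virtual eyes sit half a baseline to either side of the sensor, so the
    point shifts right in the left view and left in the right view.
    """
    d = disparity_px(depth_m, baseline_m, focal_px)
    if d is None:
        return None
    return (x_px + d / 2, x_px - d / 2)


if __name__ == "__main__":
    focal_px = 570.0  # assumed value, not taken from the article
    for label, inches in (("roughly human eye spacing", 2.5), ("20 inch spacing", 20.0)):
        shift = disparity_px(3.0, inches * INCH_M, focal_px)
        print(f"{label}: {shift:.1f} px of disparity at 3 m")
```

Because disparity grows linearly with the baseline, widening the virtual eye spacing makes distant objects show the kind of depth normally seen only at arm's length.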

[pabr] put together a video demo of his build, along with a few examples of what this project can do. It’s rather odd, and surprisingly, it’s not a superfluous way to see in 3D. You can check out that video below.

The third person view was done back in 2007. http://www.youtube.com/watch?v=TuC1st-cA9M

VR IRL

Kinect + VR headset = gesture-based interface. Add Siri or Cortana or Ramona and you’ve got the incantation-based interface. Soon, casting a spell in Warcraft will look like casting a spell.

The youtube videos shall be hilarious.

I have an S3 and a kinect and a google cardboard. Could I run this without the Xtion?

Posts like this just confirm that me staying at the uni was a good idea. We do most of this “connect x to y and see what happens” soon after the gadgets come out. “x” and “y” include, in varying combinations: Kinects, Oculus Rifts, Leap sensors, Nao robots. My favorite still is seeing my own hands as seen by a Leap sensor through the Oculus.

(No discredit to [pabr]’s work meant. It’s still great to see what others come up with.)

The point is, any kid with a Kinect, an old smartphone and a cross-compiler can start working on next-gen mobile augmented reality applications right now. It’s not just for the select few university researchers who have early access to the latest development kits and prototypes :-)

I’m sure the guys who sold Project Tango to Google management already had the idea of strapping a Kinect to the back of a Nexus phone a long time ago…

it depends, we do all of this at work too. i built an HD oculus rift mod last year, and i’m working on a 4K dual version. i’m glad i dropped out of uni since most of the stuff they taught was out of date. YMMV

I can’t wait till depth sensing cameras are significantly less expensive :D

Buy 2 HD webcams for $15 each, string em together 4 inches apart, boom you’ve got a less expensive depth sensing camera.

Just looking at this makes my neck hurt.

BTW I have built a rig to make my eyes 20″ apart, it’s called a horizontal periscope. I used a board and two mirrors from the dollar store. It gives you some insane depth effect to look at the moon when it is low on the horizon. Bonus if it is framed at the end of a road or something.