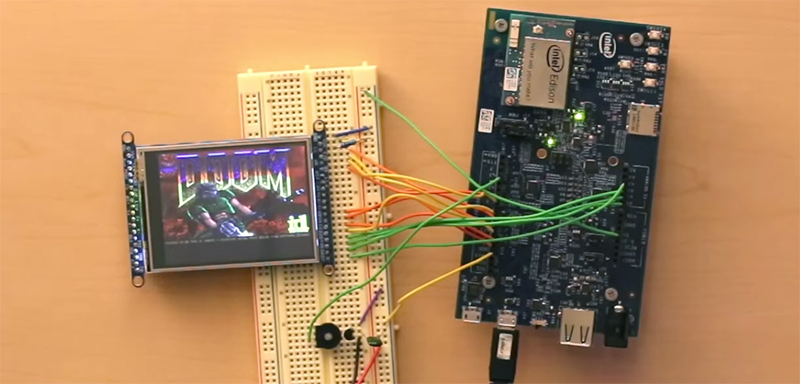

A few months ago, the Intel Edison launched with the promise of putting a complete x86 system on a board the size of an SD card. This inevitably led to comparisons with other, ARM-based single-board computers, and to the observation that the Edison doesn’t have a video output, Ethernet, or GPIO pins on a 0.100″ grid. Ethernet and easy breakout are another matter entirely, but [Lutz] did manage to give the Edison a proper display, allowing him to run Doom at about the same speed as a 486 did back in the day.

The hardware used for the build is an Edison, an Arduino breakout board, an Adafruit display, a speaker, and a PS4 controller. By far the hardest part of this build was writing a display driver for the Edison. The starting point for this was Adafruit’s guide for the display, but the pin mapping of the Edison proved troublesome. Ideally, data would be sent to the display 16 bits at a time, but only eight bits are exposed on the breakout board. Not that it mattered; the Edison doesn’t have 16 pins in a single 32-bit memory register anyway. The solution of writing eight bits at a time to the display means Doom runs at about 15 frames per second. Not great, but more than enough to be playable.
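To illustrate what writing eight bits at a time means for a 16-bit display, here is a minimal Python sketch. It is not [Lutz]’s driver: the RGB565 pixel format, the high-byte-first ordering, and the function names are all assumptions made for illustration.

```python
def rgb565(r, g, b):
    """Pack 8-bit red, green, and blue into one 16-bit pixel (assumed RGB565)."""
    return ((r & 0xF8) << 8) | ((g & 0xFC) << 3) | (b >> 3)


def pixel_to_bus_writes(pixel):
    """Split one 16-bit pixel into two 8-bit bus writes (assumed high byte first)."""
    return [(pixel >> 8) & 0xFF, pixel & 0xFF]


def frame_to_bus_writes(pixels):
    """Flatten a sequence of 16-bit pixels into the byte stream the bus would carry."""
    out = bytearray()
    for p in pixels:
        out.extend(pixel_to_bus_writes(p))
    return bytes(out)
```

Every pixel costs two bus writes instead of one, so an 8-bit path roughly doubles the number of GPIO operations per frame compared with a 16-bit one.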

For sound, [Lutz] used PWM running at 100 kHz. It works, and with a tiny speaker it’s good enough. Control is through Bluetooth with a PS4 controller, and the setup worked as it should. The end result is more of a proof of concept, but it’s fairly easy to see how the Edison can be used as a complete system with video, sound, and wireless networking. It’s not great, but if you want high performance, you probably won’t be picking a board the size of an SD card.
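As a rough illustration of PWM audio, the sketch below maps an audio sample to the high time of a 100 kHz PWM period. Only the 100 kHz figure comes from the build; the 8-bit unsigned sample format and the names `PERIOD_NS` and `duty_ns` are assumptions, and the real code may work differently.

```python
PWM_HZ = 100_000                      # carrier frequency from the write-up
PERIOD_NS = 1_000_000_000 // PWM_HZ   # one PWM period in nanoseconds


def duty_ns(sample):
    """Map an unsigned 8-bit sample (assumed format) to PWM high time in ns."""
    if not 0 <= sample <= 255:
        raise ValueError("sample must be in 0..255")
    return sample * PERIOD_NS // 255
```

At 100 kHz the carrier sits well above the audible range, so the speaker mostly responds to the average duty cycle, which is what carries the audio.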

Video demo below.

How much power did it draw while doing this? That’s about the only good comparison between this and an ARM based system.

According to Intel, the CPU is faster/more power efficient than ARM.

According to Intel, a modern 64-bit quad-core CPU should bootstrap in ancient 16-bit real mode…

At least Intel understands the value of backward compatibility.

ARM is quite good at this as well.

ARM is a bit looser with that. For instance, ARMv6 is incompatible with ARMv5, there are big and little endian versions, and many other little variations that make it hard to run a single binary on different platforms. Overall, the Intel roadmap has been a lot smoother.

No, according to the industry it should.

How much efficiency, silicon area, etc. is lost to that archaic backwards compatibility? They could make the CPU only natively capable of 64/32 bit, then emulate 16 bit in firmware. Maybe the gains are too little to make it worthwhile…

Transistors are so cheap now, that it’s probably not worthwhile to put any effort in taking out some of the archaic stuff, especially since it doesn’t have to be extremely fast. Even if support for old stuff takes up the space of an entire ‘386, that’s still only 0.01% of the chip.

This is actually LESS impressive than the Doom port I did for the VoCore, a smaller, cheaper and lower power chip.

I expected to see Quake II or something on the Edison!

I assume the Edison would not have a problem running faster, if it could use a better interface to the display than a GPIO port.

I would be more interested if someone wrote a driver for those $15 USB-to-VGA dongles (USB A/V Class) from China instead of yet another LCD. USB graphics would change the way people use their high-end microcontrollers with USB host or OTG ports.

I have a display based on DisplayLink technology: AOC E1659FWU. From what I’m aware, it uses the GPU to render the image and then sends the data to the display via the USB interface.

I would imagine processing the display data on a low-power MCU or MPU without any sort of GPU would not be practical.

It’s not like the CPU core in the uC magically gains a GPU to render the graphics on an LCD panel.

https://www.kernel.org/doc/Documentation/fb/udlfb.txt

>DisplayLink chips provide simple hline/blit operations with some compression, pairing that with a hardware framebuffer (16MB) on the other end of the USB wire. That hardware framebuffer is able to drive the VGA, DVI, or HDMI monitor with no CPU involvement until a pixel has to change.

>The CPU or other local resource does all the rendering; optionally compares the result with a local shadow of the remote hardware framebuffer to identify the minimal set of pixels that have changed; and compresses and sends those pixels line-by-line via USB bulk transfers.

>Because of the efficiency of bulk transfers and a protocol on top that does not require any acks – the effect is very low latency that can support surprisingly high resolutions with good performance for non-gaming and non-video applications.
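The shadow-framebuffer comparison described in the quoted documentation can be sketched in a few lines of Python. This is a simplified illustration, not the udlfb implementation: it works on whole scanlines rather than minimal pixel runs, it skips compression, and `send_line` is a hypothetical callback standing in for the USB bulk transfer.

```python
def changed_lines(shadow, frame):
    """Return the indices of scanlines that differ between shadow and new frame."""
    return [y for y, (old, new) in enumerate(zip(shadow, frame)) if old != new]


def update(shadow, frame, send_line):
    """Send only the changed scanlines and bring the shadow copy up to date.

    shadow and frame are lists of bytes objects, one per scanline.
    send_line(y, data) is a hypothetical stand-in for the USB transfer.
    Returns the number of lines sent.
    """
    sent = 0
    for y in changed_lines(shadow, frame):
        send_line(y, frame[y])
        shadow[y] = frame[y]
        sent += 1
    return sent
```

A static screen sends nothing at all, which is why this approach suits desktop-style output better than a game that redraws nearly every pixel on every frame.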

FYI: The old Doom ran smoothly on the old 486 DX2 machine on a VGA card that can barely be called a frame buffer. Intel Edison: 500 MHz dual-core CPU, 1 GB of RAM. That’s plenty of CPU for doing even more eye-candy software rendering.

As others have said, the bottleneck here is not the Edison itself, it’s the lack of a proper video output. The way this one was cobbled together, it could be an 8 core hyperthreaded 4.0GHz CPU with Iris Pro graphics and it would still render 15 frames per second over the hacked up LCD interface. I bet it would be slow rendering text as well.

With a proper VGA or DisplayPort output baked in, this device would perform reasonably well, probably as well as Atom CPU/GPUs of a couple of years ago. But the Edison isn’t aimed at being an even smaller NUC or BRIX, it’s meant to step in where you need something more powerful than a microcontroller but smaller than a RasPi.

You might want to read my post about DisplayLink, just before the one you are replying to, which already addressed a more efficient screen buffer mechanism…

The post you are replying to is actually a direct response to [Simbo] about DisplayLink needing a GPU, which in this case it doesn’t, as everyone including me has said.

Well, despite the grumps above, I find this pretty awesome. Nice work [Lutz]!

If you guys are interested you might also want to check out the Doom port for the uGFX library which can run on an ARM as well.

So now he has to get it to run Windows 98, or maybe Bob.

Reading Hackaday articles lately feels like someone is trying to “Arduino brainwashing” us, the readers.

The white board from the picture is called a ‘breadboard’, not an ‘Arduino breakout board’.

The Intel Edison board (the slightly lighter blue board sitting on the big blue one) sits on an Arduino breakout board, exposing the Edison’s GPIOs on Arduino-style headers. This board then sits next to a breadboard with the TFT.

The Arduino breakout board is not the breadboard. It’s the big board underneath the Edison.

https://cdn.sparkfun.com//assets/parts/1/0/1/3/9/13097-06.jpg.

An Arduino is a part, like a nut or a washer. I think most people understand that. Most people here also understand that the intarwebs isn’t only comprised of their personal favorite websites and that there is vastly more information out there. So the whole brainwashing alu-hat notion is a bit silly.

I do like how this project utilized arduino-cables and an arduinoscreen with an arduino power connection to make it work… Even with an intel edisuino

OK, then let’s not forget to mention the PS4rduino controller, and the electronduinos flowing through the gates, and the fotonduinos that travel from the LCDuino to our brainduino.

And all of these wonders Goduino gives us mortals, in order to write and pray on the interwebduino blogduino.

Aminduino!

:o)

Errrm the Intel Edison is sat on an arduino breakout board (the big blue board with the little edison on the top left hand corner). Note the headers in the arduino-shield compatible layout…

This is then wired to the white breadboard using jumpers.

There aren’t that many Edison breakouts around so I can see why the author used that one.

But can it run Crysis?????

Excellent stuff!

A lot of the low-level API access and usage patterns are portable to the other IoT things the Edison was designed for!

https://www.youtube.com/watch?v=UbHo9B7Jbp4