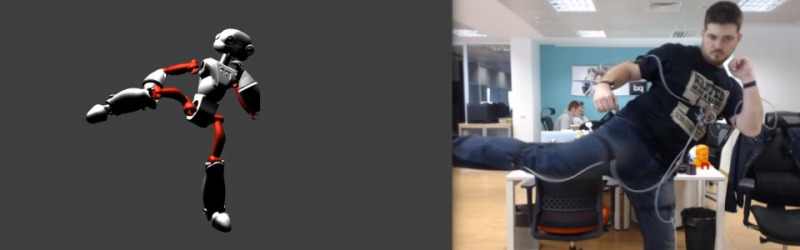

[Alvaro Ferrán Cifuentes] has built the coolest motion capture suit that we’ve seen outside of Hollywood. It’s based on tying a bunch of inertial measurement units (IMUs) to his body, sending the data to a computer, and doing some reasonably serious math. It’s nothing short of amazing, and entirely doable on a DIY budget. Check out the video below the break, and be amazed.

Cellphones all use IMUs to provide such useful functions as tap detection and screen rotation information. This means that they’ve become cheap. The ability to measure nine degrees of freedom on a tiny chip, for chicken scratch, pretty much made this development inevitable, as we suggested back in 2013 after seeing a one-armed proof-of-concept.

But [Alvaro] has gone above and beyond. Everything is open source and documented on his GitHun. An Arduino reads the sensor boards strapped to his limbs (over multiplexed I2C lines) and sends the data over Bluetooth to his computer. There, a Python script takes over and passes the data off to Blender, which renders a 3D model to match, in real time.
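To make the computer side of that pipeline concrete, here is a minimal Python sketch of how one orientation reading might be decoded before it is handed to Blender. The wire format is an assumption made purely for illustration (one ASCII line per reading, `sensor_id,w,x,y,z`); it is not taken from the project's code, and `parse_line` is a hypothetical helper name.

```python
import math


def parse_line(line):
    """Decode one reading in the ASSUMED format 'sensor_id,w,x,y,z'.

    Returns (sensor_id, unit_quaternion). The format is hypothetical and
    only stands in for whatever the real firmware sends.
    """
    fields = line.strip().split(",")
    if len(fields) != 5:
        raise ValueError("expected 5 comma-separated fields, got %d" % len(fields))

    sensor_id = int(fields[0])
    w, x, y, z = (float(value) for value in fields[1:])

    # Re-normalise so rounding on the wire cannot produce a non-unit quaternion.
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("zero-length quaternion")
    return sensor_id, (w / norm, x / norm, y / norm, z / norm)
```

Inside Blender, a script could then copy each quaternion onto the matching pose bone (for example through the bone's `rotation_quaternion` property once its rotation mode is set to quaternion); how sensors map to bones is left out here because it depends on the rig.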

All of this means that you could replicate this incredible project at home right now, on the cheap. We have no idea where this is heading, but it’s going to be cool.

GitHun? :)

Attila approves.

LOL

Where all the cool Mongolian coders hang out.

Southern US software sharing site. ;-)

Do you have a price? Can you give an estimate of the cost?

Well, as with everything, it depends on quantity, but I’d estimate around 10€ per node, plus another euro for the multiplexer chip and your standard Arduino(-compatible?) microcontroller. So maybe about a hundred euros if you build it yourself (since this was developed at the same time as other projects in the department, it’s kind of difficult to keep track of costs).

Where did you buy the IMU sensor? I can only find that chip for 15€ or more.

I bought them at mouser (http://www.mouser.es/Search/m_ProductDetail.aspx?Bosch%2fBNO055%2f&qs=%2fha2pyFadujdVisibm2UP6xcjLWsehoYLsizDCRFQdYPbRNX3GwxwQ%3d%3d&utm_medium=aggregator&utm_source=octopart&utm_campaign=octopart-temporary-tag),

but it appears you can get them cheaper :

(http://www.futureelectronics.es/es/Technologies/Product.aspx?ProductID=BNO055BOSCH6056771&IM=0&utm_source=octopart.com&utm_medium=CPCBuyNow&utm_campaign=Octopart_Ext_Referral)

Good find that one in spain :) Thank you!

I got them at mouser : http://www.mouser.es/Search/m_ProductDetail.aspx?Bosch%2fBNO055%2f&qs=%2fha2pyFadujdVisibm2UP6xcjLWsehoYLsizDCRFQdYPbRNX3GwxwQ%3d%3d&utm_medium=aggregator&utm_source=octopart&utm_campaign=octopart-temporary-tag

But it seems you can get them for less:

http://www.futureelectronics.es/es/Technologies/Product.aspx?ProductID=BNO055BOSCH6056771&IM=0&utm_source=octopart.com&utm_medium=CPCBuyNow&utm_campaign=Octopart_Ext_Referral

Very nice! You should do this with Unity 3D.

I also did this but with my hand with 11 IMUs, for learning sign language.

Check out an updated alpha video here: http://www.youtube.com/watch?v=aqGlPKti5Y8

Thanks, and cool project!

I’ve used Blender instead of Unity because it’s free and open-source, and because I have absolutely no experience with animation. Since I had to learn one anyway, why not go with the community’s choice :)

Thanks :)

Unity has a free edition. I have used it for 2 years, and you will never miss the few, very few “pro” features.

But being opensource, blender is also a good solution :)

The military has been working on using this sort of thing to control androids for a while. It’s nice that things trickle through eventually.

There is no “trickle through”. This dude made this completely on his own and of his own volition.

He meant that the capabilities trickle through, not the concept.

Great work Álvaro.

Thanks for the tip Elliot and Nice work ALVARO!

There are a lot of IMU based motion capturing systems out on the market, Xsens being one of the most famous ones. Perception Neuron uses “cheap” mobile sensors as well (and ridiculously expensive connectors that probably cost more than 4 times the price of the sensors). On Kickstarter there are some projects too (some failed, some more or less alive).

So while this isn’t news, it’s nice that it is getting documented. Although, from my own experience, the HARDWARE is the easy side. What makes an el-cheapo IMU based mocap system “work” is the software. You have to deal with A LOT OF NOISE ™, drift, constant semi-automated recalibration, EM … not to speak of some slightly complex IK helper systems to get the limb hierarchies to work somewhat consistently. Ground-contact handling is another non-trivial task.

Also, if you want to get somewhat accurate measurements, considerable oversampling is required, which ups the needs for data throughput on sensors, routers, wiring …

Again: Good that it’s done. New? Not at all.

While true, it’s an excellent first shot.

It would be easy to expand this to make the hardware capture more consistent. He’d need dedicated sampling for each IMU to get full bandwidth. That would also allow for simultaneous sampling, which should make everything sync better, especially for fast movements. As for ground contact, yes, it is a pain, but I’d be curious about making a resistive matrix “shoe” to figure out ground contact points.

A touch sensor on the base of the shoe indicates it’s down, so transform the root of the skeleton by the values propagated up from the contact point. Then the character will stick to the ground.
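A rough Python sketch of that foot-pinning idea, under stated assumptions: positions are plain `(x, y, z)` tuples in world space, the foot position comes from whatever forward kinematics the rig already does, and `FootPinner` is a hypothetical name rather than anything from the project.

```python
class FootPinner:
    """Keep a foot fixed in world space while its touch sensor reports contact.

    Hypothetical helper: it shifts the skeleton root so that the foot stays
    where it first touched down.
    """

    def __init__(self):
        self.anchor = None  # world position where the foot touched down

    def update(self, root_pos, foot_pos, in_contact):
        """Return the corrected root position for this frame."""
        if not in_contact:
            self.anchor = None  # foot is in the air: nothing to pin
            return root_pos

        if self.anchor is None:
            self.anchor = foot_pos  # first contact frame: remember the spot

        # Move the root by however far the foot has slid off its anchor.
        shift = tuple(a - f for a, f in zip(self.anchor, foot_pos))
        return tuple(r + s for r, s in zip(root_pos, shift))
```

This is a hard snap with no blending across touchdown and lift-off, so sensor noise at those moments would show up directly as jumps in the root.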

Not really though, as just before contact and just after contact mean a second pass is required, unbundling the transforms to fade in the effect and minimise the effects of the noise this system is filled with.

Doing a T-pose every now and then (and detecting it) could reset the drift of the IMUs…

Great start – stick with it…

Well duh, good hacks aren’t always about news value or novelty.

Correct. But if the “news reporter” hasn’t gotten outdoors for a couple of decades (and therefore “hasn’t seen anything like this outside Bollywood”), one might need to explain to him the difference between real life(tm) and the web.

In real life a lot of things are “old stuff” that the web-nerds consider “freakin’ cool” :-)

Cool project.

This could be the basis for a virtual boxing match.

A Danish company named Rokoko has already perfected that, and uses it to entertain children. They are even doing some tests with using it for therapy, where the therapist wears a suit and the child sees a cartoon character instead; it seems to make it easier for the children to relate to the therapist.

http://rokoko.co/

I attended a Rokoko performance with my two kids. Especially the oldest one (7 years) enjoyed it a lot, and so did I. It was fun to be part of an interactive cartoon.

Great hack!

Idea: replace the expensive BNO055 9-DOF sensor with an MPU-9250 (much cheaper on Aliexpress, $3.52/10), add an ESP8266 ($1.68/20), a 10ppm 26 MHz crystal ($0.26/50), a W25Q16 SPI Flash ($0.18/20), and a TP4056 LiPo charger ($0.07/50), and strap it to a 601417 3.7V 120 mAh LiPo battery (T 6mm x W 14mm x L 17mm, $2.60/5)…

What you get is a <$10 14mm x 17mm x 9mm Wi-Fi 9-DOF sensor with a 1 hour autonomy, small and cheap enough to put on each joint.
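For anyone checking the arithmetic, here is a quick Python tally of that parts list. It assumes each quoted figure is the per-unit price at the listed quantity, and it ignores the PCB, passives, and shipping; the variable names are made up for this sketch.

```python
# Per-unit prices in USD, read off the list above (ASSUMED to be per unit).
PARTS_USD = {
    "MPU-9250 9-DOF sensor": 3.52,
    "ESP8266": 1.68,
    "26 MHz crystal": 0.26,
    "W25Q16 SPI flash": 0.18,
    "TP4056 LiPo charger": 0.07,
    "601417 LiPo battery": 2.60,
}

node_cost_usd = round(sum(PARTS_USD.values()), 2)

# A 120 mAh cell lasting about 1 hour implies this average current draw.
BATTERY_MAH = 120.0
RUNTIME_HOURS = 1.0
average_current_ma = BATTERY_MAH / RUNTIME_HOURS

print("Parts per node: $%.2f" % node_cost_usd)
print("Implied average draw: %.0f mA" % average_current_ma)
```

The listed parts come to $8.31 per node, which is consistent with the under-$10 claim as long as the board and passives stay below about $1.70.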

Now, THIS is the definitive 1/3 sq. inch project winner!

The problem is that nobody knows how to use the MPU’s sensor fusion, while Bosch Sensortec has open source drivers for all their chips.

Those hardware sensor fusion engines only manage to produce ~4 degree heading accuracy at an output rate of 100 Hz max. Moreover, the BNO055 hardware sensor fusion solution displays a few degrees of heading drift even when turned through 90° laying flat on the desk. The skew of the underlying BMX055 sensor coupled with the automatic calibration is somehow causing this heading drift in the BNO055 hardware sensor fusion mode:

https://github.com/kriswiner/MPU-6050/wiki/Hardware-Sensor-Fusion-Solutions

Use raw sensor data instead and run a simple Madgwick filter in software: you can achieve a 250 Hz rate or more, and you don’t pay for the extra embedded Cortex M0 inside the BNO055.
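For reference, a minimal Python sketch of one update step of Madgwick's gradient-descent filter, in its gyroscope-plus-accelerometer form. The magnetometer term is left out for brevity, so this version corrects tilt but not heading; a full 9-DOF filter would add it. The function name, the `beta` gain, and the 250 Hz rate used in the usage note are illustrative assumptions, not values from any specific driver.

```python
import math


def madgwick_update_imu(q, gyro, accel, beta, dt):
    """One Madgwick step (IMU variant: gyro in rad/s, accel in any unit).

    q is the current orientation as a unit quaternion (w, x, y, z).
    Returns the updated unit quaternion.
    """
    q0, q1, q2, q3 = q
    gx, gy, gz = gyro
    ax, ay, az = accel

    # Quaternion rate of change from the gyroscope: 0.5 * q * (0, gyro).
    qd0 = 0.5 * (-q1 * gx - q2 * gy - q3 * gz)
    qd1 = 0.5 * (q0 * gx + q2 * gz - q3 * gy)
    qd2 = 0.5 * (q0 * gy - q1 * gz + q3 * gx)
    qd3 = 0.5 * (q0 * gz + q1 * gy - q2 * gx)

    a_norm = math.sqrt(ax * ax + ay * ay + az * az)
    if a_norm > 0.0:
        ax, ay, az = ax / a_norm, ay / a_norm, az / a_norm

        # Error between predicted and measured gravity direction.
        f1 = 2.0 * (q1 * q3 - q0 * q2) - ax
        f2 = 2.0 * (q0 * q1 + q2 * q3) - ay
        f3 = 2.0 * (0.5 - q1 * q1 - q2 * q2) - az

        # Gradient of the error (Jacobian transposed times f).
        s0 = -2.0 * q2 * f1 + 2.0 * q1 * f2
        s1 = 2.0 * q3 * f1 + 2.0 * q0 * f2 - 4.0 * q1 * f3
        s2 = -2.0 * q0 * f1 + 2.0 * q3 * f2 - 4.0 * q2 * f3
        s3 = 2.0 * q1 * f1 + 2.0 * q2 * f2

        s_norm = math.sqrt(s0 * s0 + s1 * s1 + s2 * s2 + s3 * s3)
        if s_norm > 0.0:
            # Pull the gyro estimate toward the accelerometer reference.
            qd0 -= beta * s0 / s_norm
            qd1 -= beta * s1 / s_norm
            qd2 -= beta * s2 / s_norm
            qd3 -= beta * s3 / s_norm

    # Integrate and re-normalise.
    q0 += qd0 * dt
    q1 += qd1 * dt
    q2 += qd2 * dt
    q3 += qd3 * dt
    n = math.sqrt(q0 * q0 + q1 * q1 + q2 * q2 + q3 * q3)
    return (q0 / n, q1 / n, q2 / n, q3 / n)
```

Called once per sample, for example with `dt=1.0/250.0` at a 250 Hz output rate, the step nudges the gyro-integrated orientation toward the measured gravity direction; a larger `beta` converges faster but lets more accelerometer noise through.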

Wow! I didn’t know about the MPU-9250, will definitely give it a look.

As for the ESP, I thought about going wireless and decided against it because I thought charging all those batteries would be too much of a hassle, but after having to untangle all the sensors before each use I’ve changed my mind :)

Don’t go for the 9 DoF MPU-9250. This IC only provides a 6 DoF solution with its embedded DMP. 9 DoF requires host processing.

does it work for more than 30 seconds?

keywords: drift, noise

afaik every single system of this type needs absolute position feedback (visual/sound/magnetic), otherwise it all goes to shit rather quickly

You can actually get quite acceptable data for longer periods of time (we have done tests with commercially available suits that ran for about 5-6 minutes without recalibration and only minor drift that could easily get compensated for, because the noise- and drift-prints of every single sensor was known). Oversampling is one important keyword. A sampling rate of 120Hz and higher allows you to filter out most errors, so if the COMPASS is good, you can compensate for drift on the gyro, which is one of the biggest issues with those Chinese mocap systems using mobile phone sensors.
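As a simple illustration of what oversampling buys you, the Python sketch below averages blocks of raw samples down to a lower output rate. Averaging N samples reduces uncorrelated noise by roughly a factor of the square root of N, but it does nothing about a constant bias, which is why drift still needs a reference such as the compass. `decimate_mean` is a hypothetical helper, not part of any suit's software.

```python
def decimate_mean(samples, factor):
    """Average consecutive blocks of `factor` samples into one output value.

    Any leftover samples that do not fill a whole block are dropped.
    """
    if factor < 1:
        raise ValueError("factor must be at least 1")

    usable = len(samples) - len(samples) % factor
    return [
        sum(samples[start:start + factor]) / factor
        for start in range(0, usable, factor)
    ]
```

For example, sampling a gyro axis at 480 Hz and calling `decimate_mean(readings, 4)` would yield a 120 Hz stream with about half the random noise per sample.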

Most projects like this want to do it “on the cheap side”, being a cool “hack”, but turning into frustration rather quickly, because you just don’t get the data quality to have much fun with it. As a proof of concept this project is “gold”. Now, when the actual work starts (getting the software to compensate for the garbage that the sensors provide you with), it really shows if the “hacker” is just a “hack-kid” or has the power to finish a project :-D

(nit picking here, of course, it still is a nice hobby!)

This looks great. It’s been an interest of mine too but I’m not bright enough to work out the maths to animate bipedal 3d models with IMUs.

One query I have, the frame rate *appears* to be pretty low, maybe 5 to 10fps, I’m hoping this is just a limitation of your graphics hardware rather than the sample rate of the IMU hardware, because it’s quite an important factor for character animation.

Thanks!

I’ve only used it with a laptop, so I can’t tell you which one it is. When I added physics in Blender (kicking a ball into a wall of cubes) it definitely moved a lot slower, so it seems it would be the computer, but I can’t tell for sure, sorry.

How can this be an “absolute position sensor”? Eg. how does it measure altitude differences?

This would be an “absolute orientation sensor”. In order to be absolute position, it would have to include a GPS and an altimeter :)

Hi Alvaro, I’ll be doing a similar project for my school. Can you explain how the data is received by the computer (I mean, how it is packaged so that the Python program in Blender can understand it), and how the Python program works? Sorry, but I’m only now learning how to use Blender and I don’t know how it uses the data received from the sensors to move the bones of the armature.

Thanks, and many compliments on your project.