[Jack Qiao] wanted an autonomous robot that could be handy around an ever-changing shop. He didn’t want a robot he’d have to babysit. If he said, ‘bring me the 100 ohm resistors’, it would go find them and bring them to him.

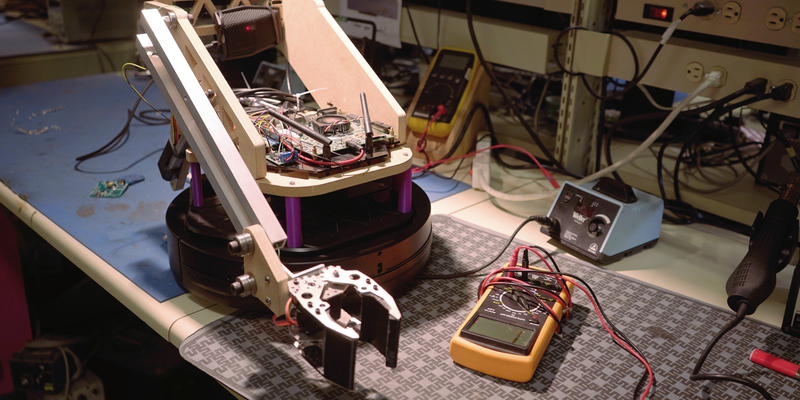

He iterated a bit, and ended up building quite a nice robot platform for under a thousand dollars. It’s got a RealSense camera and a rangefinder from a Neato robotic vacuum. In addition to a microphone, it has a whole suite of additional sensors in its base, which is a stripped-down robotic vacuum from a Korean manufacturer. A few more components come together to give it an arm and a gripper.

The thinking is done on an Nvidia Jetson TK1 board. The cores of its integrated graphics processor are used to speed up the computer vision calculations. The software is all ROS-based.

As can be seen in the video after the break, the robot uses SLAM techniques to successfully navigate and complete tasks such as fetching resistors, getting water, and more. [Jack Qiao] is happy with his robot, and we would be too.

I like him…

When he grows up I could see him taking care of the kids and pets.

Good job…

This reminds me of the helper robot in Iron Man, can’t remember its name though.

Nice robot, great job!

In the movies it’s Jarvis (the robot arm is controlled by Tony’s AI)… Now if there’s some helper robot in the comics I can’t remember.

Robot…handjob

Just don’t say “Jerk it off!”

I think this robot needs a base that could move sideways… Would make positioning way easier and faster.

OP here. A holonomic platform would definitely work better for sideways movements; I got the Kobuki base mostly because it works with ROS off-the-shelf.

That said, omni-directional platforms have various drawbacks too – the mecanum wheel based ones have bad odometry during sliding and worse traction than regular wheels. The independent drive type requires at least 5 motors.

I think the mecanum wheel approach would be good if I had a real LIDAR and didn’t have to rely as much on wheel odometry.

Well done! I have a horrible mental image of the poor little bot melting down trying to respond to radio song lyrics though…

Epic! I want one!

“Colin! (I would name it Colin after a certain robot in a Douglas Adams book, and no, I do not mean Marvin) Fetch my slippers!” Yeah, I could get used to that.

I love it, great job! But couldn’t the robot manage the task faster? Or did you choose this for safety? (for example when he takes a small pot)

For ease of coding I set it up so that the grasping actions are on a fixed timer, and because the actual time to perform a grasp varies, it sometimes waits one or two seconds doing nothing.

The proper ROS way to do this would be to use actionlib, which issues a callback when the action is completed. I just haven’t gotten around to implementing it.
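
To illustrate the difference described above, here is a minimal plain-Python sketch comparing a fixed timer with a completion callback. It is not the robot's code and does not use the actionlib API; the function names, the 0.05 s grasp time, and the 0.3 s timer are all made up for the illustration, and `time.sleep` stands in for the real grasp motion.

```python
import threading
import time


def grasp_fixed_timer(timer_seconds):
    """Fixed-timer approach: always block for the full timer,
    no matter how quickly the grasp actually finished."""
    start = time.monotonic()
    time.sleep(timer_seconds)
    return time.monotonic() - start


def start_grasp(grasp_seconds, done_cb):
    """Callback approach: run the (simulated) grasp in the background
    and call done_cb as soon as it completes."""
    def worker():
        time.sleep(grasp_seconds)  # stand-in for the real grasp motion
        done_cb()

    thread = threading.Thread(target=worker)
    thread.start()
    return thread


def grasp_with_callback(grasp_seconds):
    """Wait only until the completion callback fires."""
    done = threading.Event()
    start = time.monotonic()
    thread = start_grasp(grasp_seconds, done.set)
    done.wait()
    elapsed = time.monotonic() - start
    thread.join()
    return elapsed


if __name__ == "__main__":
    # Hypothetical numbers: a grasp that takes 0.05 s, a timer set to 0.3 s.
    print("fixed timer:", round(grasp_fixed_timer(0.3), 2), "s")
    print("callback:   ", round(grasp_with_callback(0.05), 2), "s")
```

With a callback the caller moves on as soon as the grasp reports completion, so the idle time comes from how long the grasp really takes rather than from a worst-case timer.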

10 years later: Just another software update and it may be able to bring me my slippers!

I like it though – looks great.

Very nice.

Very cool! Doing VHS proud! Good luck with the prize.