If you are like us, you probably just write your own code from scratch for a lot of simple projects. But that’s wasteful if you are trying to do anything serious. Take a robot, for example. Are you using ROS (Robot Operating System)? If not, or even if you are, check out [Janne Karttunen] and the University of Eastern Finland’s open-source course Robotics and ROS 2 Essentials.

The material is on GitHub. Rather than paraphrase, here’s the description from the course itself:

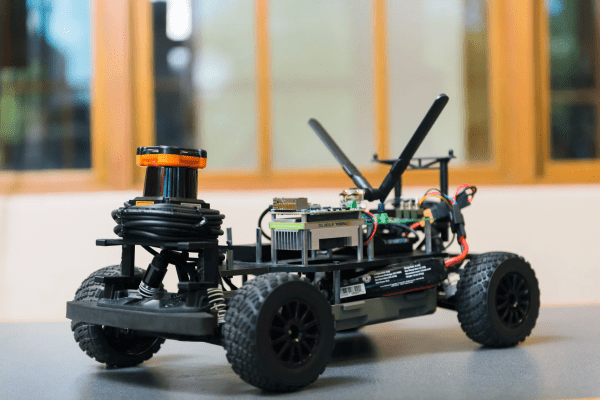

> This course is designed to give you hands-on experience with the basics of robotics using ROS 2 and Gazebo simulation. The exercises focus on the Andino robot from Ekumen and are structured to gradually introduce you to ROS 2 and Docker.
>
> No prior experience with ROS 2 or Docker is needed, and since everything runs through Docker, you won’t need to install ROS 2 on your system beforehand. Along the way, you’ll learn essential concepts like autonomous navigation and mapping for mobile robots. All the practical coding exercises are done in Python.

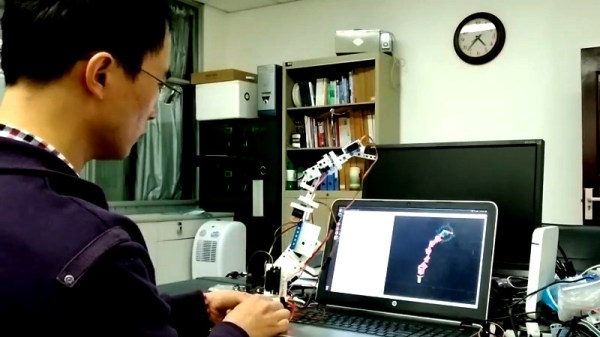

Topics include SLAM, autonomous navigation, odometry, and path planning. It looks like a valuable resource for anyone interested in robotics, or in anything else you might do with ROS.
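To give a flavor of one of those topics, here is a minimal sketch of wheel odometry for a two-wheeled differential-drive robot like the Andino, written in Python since that’s what the course exercises use. It is not taken from the course material. The function names and the wheel radius, wheel separation, and encoder resolution below are hypothetical values chosen for illustration, not the Andino’s real specs. The idea is plain dead reckoning: average the two wheels’ travel to get forward motion, and use their difference divided by the wheel separation to get the change in heading.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians, counter-clockwise from the x-axis


def ticks_to_distance(ticks: int, ticks_per_rev: int, wheel_radius: float) -> float:
    """Convert encoder ticks into the arc length the wheel rolled (metres)."""
    return 2.0 * math.pi * wheel_radius * ticks / ticks_per_rev


def update_odometry(pose: Pose2D, d_left: float, d_right: float,
                    wheel_separation: float) -> Pose2D:
    """Dead-reckon a new pose from how far each wheel travelled this step."""
    d_center = (d_left + d_right) / 2.0               # travel of the axle midpoint
    d_theta = (d_right - d_left) / wheel_separation   # change in heading
    # Midpoint integration: move along the average heading during the step.
    mid_theta = pose.theta + d_theta / 2.0
    x = pose.x + d_center * math.cos(mid_theta)
    y = pose.y + d_center * math.sin(mid_theta)
    # Wrap the new heading into [-pi, pi].
    new_theta = pose.theta + d_theta
    theta = math.atan2(math.sin(new_theta), math.cos(new_theta))
    return Pose2D(x, y, theta)


if __name__ == "__main__":
    # Hypothetical robot parameters (assumptions, not real Andino values).
    WHEEL_RADIUS = 0.033       # metres
    WHEEL_SEPARATION = 0.14    # metres
    TICKS_PER_REV = 585

    pose = Pose2D()
    # Both wheels turn one full revolution: the robot drives straight along x.
    d = ticks_to_distance(TICKS_PER_REV, TICKS_PER_REV, WHEEL_RADIUS)
    pose = update_odometry(pose, d, d, WHEEL_SEPARATION)
    print(f"x={pose.x:.4f} y={pose.y:.4f} theta={pose.theta:.4f}")
```

Because this only integrates wheel motion, small errors from wheel slip and encoder resolution accumulate over time, which is one reason topics like SLAM sit alongside odometry in a course like this.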

If you want a quick introduction to ROS, we can help. We’ve seen a number of cool ROS projects over the years.