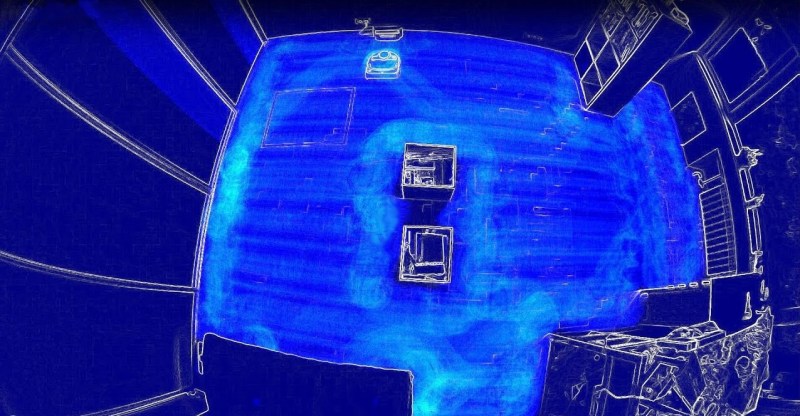

What’s going through the minds of autonomous vacuum cleaning robots as they traverse a room? There are different ways to find out, such as covering the floor with dirt and seeing what remains afterwards (a less desirable approach) or mounting an LED on top and taking a long-exposure photo. [Saulius] decided to do it by filming his robot with a fisheye lens from near the ceiling and then making a heatmap of the result. Not satisfied with just a finished image, he also made a video showing the path as the robot traverses the room, giving us a glimpse of the algorithm itself.

The robot he used was a Vorwerk VR200, which he’d borrowed for testing. In preparation, he cleared the room and strategically placed a few obstacles, some of which he knew the robot wouldn’t fit between. He started the camera and let the robot do its thing. He then loaded the resulting video file into some quickly written Python code that uses the OpenCV library to do background subtraction, normalizing, grayscaling, and finally heatmapping. The individual frames were rendered into an animated GIF and into the video you can see below.
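Saulius’s actual script isn’t reproduced here, so what follows is only a minimal sketch of the same idea, not his implementation. To keep it self-contained it uses NumPy in place of OpenCV. It also assumes that a per-pixel median over all frames approximates the empty room. In OpenCV you might reach for `cv2.createBackgroundSubtractorMOG2()`, `cv2.normalize()` and `cv2.applyColorMap()` instead. The function names `coverage_heatmap`, `colorize` and `to_gray` and the `threshold` value are hypothetical, and the steps run in a slightly different order than listed above.

```python
import numpy as np


def to_gray(frame):
    """Convert an H x W x 3 RGB frame to float grayscale using BT.601 luma weights."""
    frame = frame.astype(np.float64)
    return 0.299 * frame[..., 0] + 0.587 * frame[..., 1] + 0.114 * frame[..., 2]


def coverage_heatmap(frames, threshold=25.0):
    """Count how often each pixel differs from the background, scaled to 0..255.

    Assumption (hypothetical, not from the original code): the robot only sits
    on any given pixel briefly, so the per-pixel median over all frames is a
    reasonable estimate of the empty room.
    """
    grays = np.stack([to_gray(f) for f in frames])        # shape (N, H, W)
    background = np.median(grays, axis=0)                 # shape (H, W)
    # Background subtraction: True wherever a frame differs noticeably from the room.
    foreground = np.abs(grays - background) > threshold
    counts = foreground.sum(axis=0).astype(np.float64)
    # Min-max normalization of the visit counts to an 8-bit image.
    lo, hi = counts.min(), counts.max()
    if hi == lo:
        return np.zeros(counts.shape, dtype=np.uint8)
    return ((counts - lo) / (hi - lo) * 255).astype(np.uint8)


def colorize(heat):
    """Map 0..255 intensities to a simple blue-to-red ramp (RGB channel order)."""
    h = heat.astype(np.float64) / 255.0
    rgb = np.stack([h, np.zeros_like(h), 1.0 - h], axis=-1)
    return (rgb * 255).astype(np.uint8)
```

For a real recording you would read the frames one by one, for example with `cv2.VideoCapture`. Note that OpenCV delivers frames in BGR order, so the channel weights in `to_gray` would need swapping. The median background is a deliberately crude choice. It only works because the robot keeps moving, which is also why adaptive subtractors such as MOG2 exist.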

Watching the video, it’s clear that the robot first finds an obstacle and then follows its perimeter until it gets back to where it first found it. If the obstacle defines the outer boundary of a reachable area, the robot then fills in that area by crossing back and forth. It makes us wonder whether the robot programmers could get some optimization hints from the fill routines used in drawing programs. If you want to experiment with other algorithms, you can do so without too much trouble using iRobot’s hackable Roomba, the iRobot Create, though you’d have to add the vacuum back. You’ll also notice that the robot couldn’t fit between the two boxes placed in the middle of the room. We’re guessing that it’s aware of this missed area. How would you solve this problem?
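To make the drawing-program comparison concrete, here is a minimal grid-world sketch. It is not Vorwerk’s algorithm, which isn’t published. It assumes the room is known up front as a grid of free (`.`) and blocked (`#`) cells, whereas the real robot has to build its map as it drives. The sketch works in three steps:

- A breadth-first flood fill finds every cell reachable from the start.
- Any leftover free cells form the kind of missed pocket seen between the two boxes.
- The reachable cells are ordered into back-and-forth passes.

The function names `reachable`, `missed` and `boustrophedon` and the example `room` layout are hypothetical.

```python
from collections import deque


def reachable(grid, start):
    """Breadth-first flood fill: free cells ('.') 4-connected to start.

    Assumption: the whole map is known in advance, unlike the real robot.
    """
    rows, cols = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == "." and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return seen


def missed(grid, cells):
    """Free cells that can never be reached, e.g. a pocket between boxes."""
    free = {(r, c) for r, row in enumerate(grid)
            for c, ch in enumerate(row) if ch == "."}
    return free - cells


def boustrophedon(cells):
    """Visit cells row by row, reversing direction on every other row."""
    path = []
    for i, r in enumerate(sorted({r for r, _ in cells})):
        cols = sorted(c for rr, c in cells if rr == r)
        path.extend((r, c) for c in (reversed(cols) if i % 2 else cols))
    return path


# Hypothetical room: two box-like obstacles enclose a single free cell.
room = [
    "......",
    ".##...",
    ".#.#..",
    ".##...",
    "......",
]
cells = reachable(room, (0, 0))
print(sorted(missed(room, cells)))  # [(2, 2)]
```

Ordering whole rows like this will happily jump across an obstacle within a row. A real planner would more likely split the free space into cells (boustrophedon cell decomposition) and plan the transits between them, which is presumably closer to the perimeter-then-fill behaviour in the video.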

Very neat.

But did he use a pix-e camera to capture the animated GIF?

That pattern makes so much more sense than anything my Roomba tried to do!

Does it make more or less sense than the lack of dog crap detection, and the robot subsequently smearing itself and all of your carpet with it as it sets about for hours, spreading it over every square inch of floor?

Well, you could polish the turd first to give the LIDAR a more reflective surface.

LIDAR is underpowered. Russian LIDAR will blow that turd clean off.

There’s a very simple solution to this. Don’t have dogs.

Or at least check your carpet before you turn the robot on. You should surely be able to smell it. A housetrained dog will almost never shit on the floor, though: only if you lock it in and it’s desperate, and then it’ll be terribly anxious and ashamed about it, or if it’s ill.

That said, I’d worry about dog hair getting into motor spindles and knackering the robot; hair is the enemy of motors.

Makes me wonder how it would handle a 3D path: say, a shallow ramp that lets it up onto a table, so that part of its accessible area is on top of another part of its area?

A comparison between different robots would be so cool!

I love my Neato; it scans rooms and does the job brilliantly.

Come back later. I will have a few more robots to compare.

This is simply AMAZING. It is not hard to come up with an algorithm to do something like that in a simulation, where you have neat X and Y variables showing your exact position. Achieving it in real life, however, is astounding. Wheel rotary encoders are only so accurate, so where you think you are will drift from where you actually are over time. Even accurately knowing which direction you are heading is impressive (even compasses can be wrong when furniture is made of metal).

That is actually the kind of path that I would expect if you scaled this up and put it outside so that you could use GPS. Good job, Vorwerk guys!

I own one of those VR200, the navigation is really amazing. Every single time.

Impressive. It reminded me of those home computers in the ’80s running a “floodfill” on the monitor.

Unfortunately, there’s no info whatsoever on the manufacturer’s site about the sensors, algorithms, or software side of this Kobold.

I wonder if this vacuum cleaner can stand the turd test.

Vorwerk collaborated with Neato on their robots: the VR100 was effectively a Neato D85 that Vorwerk pimped with an improved vacuum and some additional sensors, while the VR200 seems to be a Vorwerk development based on Neato navigation technology.

I wonder if somebody managed to extract the SLAM maps from the Neato robots by now. Anybody know?

The video is somewhat reminiscent of watching my 3D printer fill in a complex layer. The only real difference is that the 3D printer knows what’s coming, while the robot seems to adjust its plan every time it finds a new perimeter.

I have a Samsung Navibot, and it’s the worst thing ever compared to my cheap Roomba. It claims to be intelligent but it isn’t: it gets stuck every time on its own self-cleaning and charging ramp, rotates multiple times on the same spot, and doesn’t get the job done.

The cheap Roomba, on the other hand, is just stupid: it drives until it hits something, then goes in another direction. But it gets to every room and gets the job done across 127 m².

The Navibot on max settings only covers the 60 m² living room.

This video reminds me of cutting grass, going back and forth while traveling around obstacles. It’s amazing to me that a vacuum can cover all exposed carpet despite the obstacles.

Robotics is making our lives lazy and taking our jobs. A robotic vacuum cleaner is an amazing product for time management, but not for human health.