My phone can now understand me but it’s still an idiot when it comes to understanding what I want. We have both the hardware capacity and the software capacity to solve this right now. What we lack is the social capacity.

We are currently in a dumb state of personal automation. I have Google Now enabled on my phone. Every single month Google Now reminds me of bills coming due that I have already paid. It doesn’t see me pay them, it just sees the email I received and the due date. A creature of habit, I pay my bills on the last day of the month even though that may be weeks early. This is the easiest thing in the world for a computer to learn. But it’s an open loop system and so no learning can happen.

Earlier this month [Cameron Coward] wrote an outstanding pair of articles on AI research that helped shed some light on this problem. The correct term for this level of personal automation is “weak AI”. What I want is Artificial General Intelligence (AGI) on a personal level. But that’s not going to happen, and I am the problem. Here’s why.

Blindfolding AI

Like most people, I find my phone is now part of who I am. Although I spend hours a day using an actual desktop computer (that’s the kind where the monitor and keyboard aren’t one integrated part of the computer), much of my life passes through a 5.2″ touchscreen. Google is always watching but for now it’s relegated to a small portion of what is going on. It sees those monthly bill notices I previously mentioned because I use Gmail, but it doesn’t watch my browser activity closely enough to see me pay them.

Everything in life needs an impetus to happen. If there isn’t a closed loop on my bill payments it’s no surprise that I get outdated reminders about them. This means my current automation is annoying rather than assistive. It can’t see everything I do.
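To make the open loop versus closed loop point concrete, here is a minimal sketch in Python. It is not how Google Now works; the `Bill` and `ReminderLoop` names, the three-day lead time, and the three-sample threshold are all assumptions made up for illustration. The only idea it shows is that once the assistant can observe a payment, it can both suppress the stale reminder and learn when in the month the payments usually happen.

```python
import calendar
from dataclasses import dataclass, field
from datetime import date
from statistics import median
from typing import List, Optional


@dataclass
class Bill:
    name: str
    due: date
    paid_on: Optional[date] = None  # set only when a payment is actually observed


@dataclass
class ReminderLoop:
    # Feedback signal: how many days before month end each observed payment happened.
    offsets_from_month_end: List[int] = field(default_factory=list)

    def record_payment(self, bill: Bill, when: date) -> None:
        """Close the loop: mark the bill paid and remember when in the month it happened."""
        bill.paid_on = when
        last_day = calendar.monthrange(when.year, when.month)[1]
        self.offsets_from_month_end.append(last_day - when.day)

    def usual_offset(self) -> Optional[float]:
        """Median days-before-month-end of past payments, or None with fewer than 3 samples."""
        if len(self.offsets_from_month_end) < 3:
            return None
        return median(self.offsets_from_month_end)

    def should_remind(self, bill: Bill, today: date, lead_days: int = 3) -> bool:
        """Remind only for unpaid bills that fall due within lead_days."""
        if bill.paid_on is not None:
            return False
        return 0 <= (bill.due - today).days <= lead_days
```

An open loop system is the same code with `record_payment` never called: `paid_on` stays empty and every bill triggers a reminder. With the loop closed, a `usual_offset()` of 0 would mean "this person pays on the last day of the month".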

How to Look at Someone Without Creeping Them Out

In many cultures there is a social norm that you don’t stare at people. That is to say, there are times when it is and isn’t appropriate to look at people; there is a maximum amount of time you can continue gazing upon them; and the rules that make this work are a game of moving goal posts.

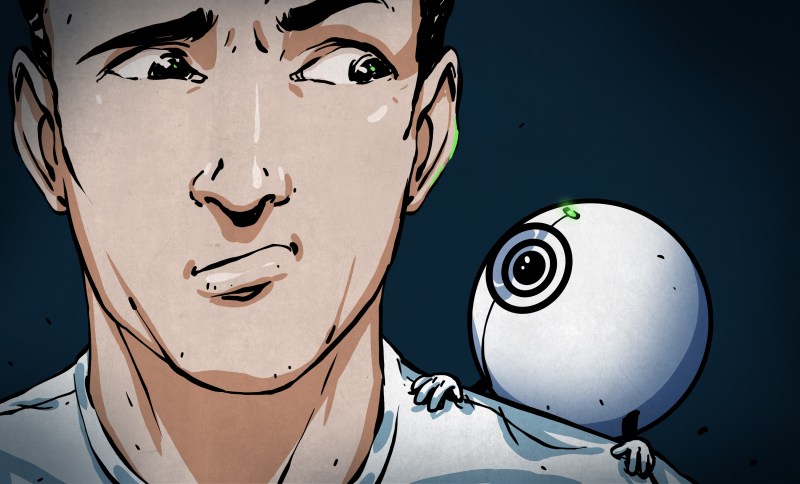

Yet almost all humans are capable of learning this game, and do. Even strangers who have never met you before can quickly recognize when you need help and if they should offer it or not. This is the keystone to unlocking useful personal AI. It’s also an incredibly difficult task.

A much easier method is to watch absolutely everything the user does. This makes a lot more data available but it’s super creepy and raises a ton of ethical concerns. Being observed the majority of the time is unprecedented — there’s no human-to-human paradigm for this type of watchfulness. And the early technology paradigms have not been going well. Just last week authorities in Germany recommended that owners of a doll called “Cayla” destroy the microphones housed within. The doll’s microphone is always listening, routing what is heard through a voice recognition service with servers outside of the country.

Creepiness aside, privacy is a major issue with allowing a system to watch everything you do. If that information is somehow breached it would be an identity theft goldmine. Would your AI need to know to shut itself down anytime you walk into a public restroom, hospital, or other sensitive environment? How could you trust that it had done so on every occasion?

My mind also jumps to a whimsical scenario where your personal AI gets a bit too smart and decides to blackmail you (a Douglas-Adams-like thought… I will try to keep this discussion on the track of what is plausible). More likely, once your personal assistant knows you well enough and proves it can get you to do your work more efficiently it’ll be promoted from your assistant to your manager. Are you still an effective team?

Machine Learning as a Social Norm

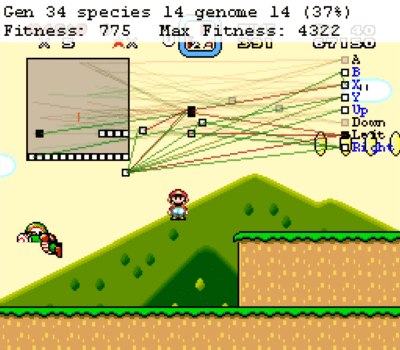

Machine learning is the key to doing amazing things. But gain a bit of understanding of how it works and you immediately see where the problem lies. A machine can learn to play video games at a very high level, but it must be allowed to see all aspects of the game play and requires concrete success metrics like a high score or rare/valuable collected items.

Yes, for a personal AI to be truly useful it must have nearly unrestricted access to collect data by watching you in daily life. But I think it goes even a step further. An AI can’t speed-run your Monday over and over the way it would a level of Super Mario World. For machine learning to work in this case it needs to share data across large populations to get a useful set. It would definitely work, but that’s a peeping-tom network of epic proportions. That’s not an uncanny valley, it’s a horror movie plot.

We have already seen the implications of this flavor of data collection. Social media is the machine learning without any of the AI benefits. Millions of people have published what might seem to them as innocuous information on innumerable platforms. But big data turns that innocuous information into predictions about the behavior of segments of the population.

If the dopamine drip of social media got people to share all of this data, what impact would effective personal AI have? It would be your friend, advisor, confidant, all in one. I tip my hat to Charles Stross who depicted a very scary AI in his book Accelerando. It takes the form of a realistic robotic house cat. It’s incredibly easy to underestimate abstract intelligence.

Given Access, AI Still Lacks Vision

The current state of the art could allow a unified data collection effort to watch everything on your various computers and portable devices. It could listen to the audio in your life. And even record video of limited use.

First things first, even given total digital access to your life it is a big task to make sense of everything you’re doing. This is not an insurmountable challenge right now, but it would certainly require that the processing happen remotely to get the necessary horsepower. The same goes for audio data. This is already the case for many systems like Amazon’s Echo, Apple’s Siri, Google’s Allo, and for children’s toys like the aforementioned Cayla and Mattel’s Barbie.

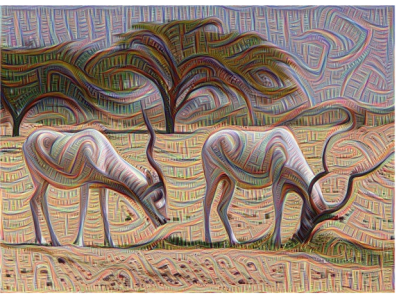

Video recognition doesn’t really exist right now. This is the real cutting edge of a lot of robotics research (think self-driving cars and military robots) so it is surely coming. As with voice recognition, there are services like Google Cloud Vision that depend on a system of constraints: orientation of the item to the camera, lighting levels, known sets to compare, and more. But in the foreseeable future I don’t think that dependable computer vision will be a suitable data source for personal AI purposes.

This is a real problem for making sense of our lives. How will your AI know who you are talking to? Without a view of what you see, gathering context becomes very hard. And the most obvious route for this input would have been wearable cameras like Google Glass. We all know how that turned out. Perhaps Snapchat’s entry into that field will change the landscape.

What We Could Get But Won’t

Okay, I’ve done a lot of bellyaching about the problems. If those were all solved, what do I actually want? In a nutshell I want my intelligence augmented.

If my wife and I have a passing conversation about a musical coming to town I want my personal AI to remember and tell me when tickets go on sale. For that matter, I want it to know my seating and cost preferences and to check my calendar and my wife’s calendar to choose the perfect day, simply asking me to pull the trigger on the purchase. I want it to know that we usually will pair a show with a dinner or with drinks afterward and then collate our restaurant visit history to guess which place we would most enjoy visiting this time around. I want the moon.

But I also want privacy. I want my humanity, and I want to live my own life. So I’ll pull myself back from visions of a brave new world and appreciate what we have: access to information which was lunacy to imagine 30 years ago. Technology will continue its march forward and we will benefit from it. But for now that tech doesn’t and can’t watch us closely enough to make an Artificial General Intelligence system part of our daily lives. But people will try and that will be very interesting to read about on Hackaday.

Very thought-provoking!

The issue is one of maintaining the illusion that the AI isn’t constantly paying attention to every detail. Those that serve those wealthy enough to have domestics know how to pay attention to the most intimate details of their employer’s lives without giving any outward sign that they are doing so. Yet they constantly adjust their own behaviour to know when their help is needed, AND (in general) keep their observations to themselves.

This is what these digital assistants need to be programmed to learn: when to act, and when not to. As well demonstrate that the information they are gathering goes no farther.

Sadly when it comes to ones and zeroes, you can’t really prove anything.

Best to assume the government [and other hackers] are listening in.

A physical switch for the microphone [and speaker], set up as push to talk should keep most tinfoil hat enthusiasts more or less happy.

A physical switch can be helpful sometimes, but would obsolete many of the proposed functions. Assuming that governments and hackers are listening in obsoletes it completely for me. The data must be processed locally and any outside access to this AI restricted as much as possible with best effort sanitization of any input data. Otherwise the device possibly does more harm than good and I would not want it.

I also do not use cloud based smart home solutions.

> As well demonstrate that the information they are gathering goes no farther.

This is the crux of it. There truly is no technical reason that the data has to go out somewhere. It can always all be local. Even queries can be tumbled without logging to prevent metadata leakage from input data. (i.e. finding out the price of milk doesn’t necessarily mean that Alphabet et al. need to know that I drink milk).
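One possible reading of "tumbling" a query, sketched in Python: the local assistant hides the real question among decoys before anything leaves the house, then keeps only the answer it cared about. The function name `tumble`, the decoy pool, and the number of decoys are all hypothetical, and this is only an illustration of the idea. It hides which query matters, not the fact that queries were sent.

```python
import random
from typing import List, Optional


def tumble(real_query: str, decoy_pool: List[str], n_decoys: int = 4,
           rng: Optional[random.Random] = None) -> List[str]:
    """Return the real query shuffled in among n_decoys distinct decoys."""
    rng = rng or random.Random()
    # Never pick the real query as its own decoy.
    candidates = [q for q in set(decoy_pool) if q != real_query]
    batch = rng.sample(candidates, n_decoys) + [real_query]
    rng.shuffle(batch)
    return batch


# Hypothetical decoy pool kept on the local machine.
DECOYS = ["price of bread", "price of eggs", "price of coffee",
          "price of rice", "price of apples"]
```

The local box would send every query in the batch, discard the decoy answers, and log nothing. Someone watching the traffic sees five grocery prices and cannot tell that milk was the one that mattered.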

The problems are *not* technical. The problems are thus:

1. Every company in silicon valley is evil. They only want to make money by mining and selling people’s private data.

2. Innovative small businesses are next to impossible to start, again, not for technical reasons, but, because the entrenched interests make it so you have to do business their (entrenched, evil) way if you want to survive, therefore killing off anyone who doesn’t want to be evil. This is a combination of the legal process being onerous, as well as banks not giving financing except to cookie-cutter (entrenched, evil) business plans.

tl;dr fix inequality and good tech will finally come. As long as the same couple hundred asshats are funding/controlling literally everything, everything will be done their (entrenched, evil) ways…

If by evil you mean profound immorality that may well be true of these entities, but that has little bearing on the issue at hand. The blunt fact is that the market is not likely to pay the costs of creating and maintaining personal AI and the past has shown that software will be duplicated without giving value back to the creators. These are the ultimate reasons why data-mining became the most viable business model in consumer IT – not because the companies involved are intrinsically evil.

Ultimately if we want personal AI assistants that guard our privacy, we will need to find a way to pay for them with something other than our data.

> the past has shown that software will be duplicated without giving value back to the creators

Uhh… all software should be free, and I say that as a software-creator. The value added to humanity far outweighs whatever piddly benefit I might get by being a monopolist. Also, people are happy to pay much larger sums for the privilege of communicating directly with me, the creator, to bounce ideas off of, than they would pay for just the software itself.

No, the only ones who need to make money off of things through monopolies, are the ones who didn’t create it, but rather ‘bought’ the rights off of whoever created it. In other words, it’s only for talentless capitalists who want to be in charge of everything, without having to do anything.

> Ultimately if we want personal AI assistants that guard our privacy, we will need to find a way to pay for them with something other than our data.

Well yeah, the platform, the hardware is the obvious thing. Support contracts another. Bespoke apps a third revenue stream. That’s just off the top of my head. I’m sure I can come up with others if I spend a few moments. It’s not hard to make money without being evil. It just isn’t. It really really isn’t.

Ya well that is all well and good, but the fact remains that we do not live in a world where your ideas have any traction regardless of how nice they might be. Right now the fact is for personal IT you pay with your money or you pay with your data and while those with some skills might be able to work around this, there is no third choice for the bulk of consumers.

As an aside, those that fling around the notion of corporate evil in matters like this are debasing the term – save it for those cases that truly deserve it.

*nesting limit*

> those that fling around the notion of corporate evil in matters like this are debasing the term – save it for those cases that truly deserve it.

> the fact remains that we do not live in a world where your ideas have any traction regardless of how nice they might be

That doesn’t fit the definition of evil to you? Our entire reality is fucked, regardless of how good our ideas are… That’s not evil? WTF?

Right. Well the real world is a messy place and it is competitive in nature, playing hardball over some new idea doesn’t meet my criteria for evil given I can find examples where corporate malfeasance is killing people outright in the name of profit.

I’ve also got news for you: nobody gives a shit about ideas – ideas are a dime a dozen. Selling them is where the hard work is and there are no free passes.

As a software creator (engineer, not developer) myself, I believe people should pay me for my hard work writing the code that no one else has written yet. Free software is wonderful, and I volunteer my spare time to a few open source projects to give back from all the benefits I’ve received from it, but at the dinner table it’s my day job that feeds my family, and that requires that I get paid to write closed-source software.

> Bespoke apps a third revenue stream

I thought you said software should be free? Apps are software too ya know… This isn’t “all software except what I write should be free”

> I thought you said software should be free? Apps are software too ya know…

foo, it’s sad what this world has come to. A couple of decades ago you would’ve known exactly what I was talking about. It was very common back then to have ‘Freeware’ which, if you threw money at the author, would add the feature that you wanted. In many cases the source code was also (eventually) released to the public. This really is no different than a wealthy patron commissioning an artist to put a statue in the local park, except much smaller scale and lower dollar amount, and thus not just wealthy people anymore.

I like the freeware model. However, it does have its limitations. To continue the patron analogy, when the Medici family commissions the statue in the park, we all get to enjoy it, but it would be a statue that suits the patron’s sensibilities. However, if you have a system where artists can design statuettes and sell copies without worrying that the guy with the biggest, most efficient factory will copy the design and undercut them, then at least the market will be slightly more democratic: so long as a critical mass of folks share your tastes and are willing to buy a work, creators will produce works geared towards you.

Fluffybunny, I respect that you can make a living as a software creator who basically gives music away free and earns money giving concerts. Not everyone has that luxury. I also respect that you value humanity so much that you would be willing to sacrifice your own ability to make a living in order to better humanity. Not everyone is that altruistic.

As for the rest of us, we are not talented enough or famous enough to write free programs and reasonably expect that a patron will hire us to consult and pay us enough to feed our families, nor are we willing to starve while working to better humanity for its own sake. The free market and IP protection systems don’t preclude your business model, they simply allow a rival one to coexist.

“fixing inequality” is not possible. It would mean to wipe out humanity and still there would be inequality in nature so let’s finally sterilize the planet. And hope there is no other life in the rest of the universe, as it would contain inequality in it.

The Khmer Rouge in Cambodia tried to “fix inequality” by wiping out the more intelligent of the population. Because it is not possible to increase the intelligence of the lesser part.

I don’t think this would be a good idea as they would have killed both of us, just because we are able to read and there still exist people on this planet who are not.

very well put

I feel like this could be eased once technology has advanced to the point where the AI can be hosted on machine that can be owned and maintained by an individual at home. This way only the necessary information to complete a given task would need to be shared with the outside world.

> this could be eased once technology has advanced to the point where the AI can be hosted on machine that can be owned and maintained by an individual at home

The technology has been around for quite some time for this. See above. It isn’t a technical limitation. Don’t believe anyone who tells you otherwise.

I’ll think about it when I’m positive my morally deranged government isn’t grabbing my ai data randomly for idiotic reasons.

Excellent hack. I would never have thought that one of these could be repurposed in that way.

+1

“I want the moon. But I also want privacy. I want humanity, and I want to live my own life.”

Isn’t this what everyone wants but to varying degrees and varying willingness to compromise on one or the other side of this equation?

The answer is rather simple and obvious. It isn’t a hard problem to solve.

Stop using the F@#$ing cloud!

Let me repeat that.. burn the cloud services in h3ll!

Keep a computer running in your own home, open a port to it through your router and tell your phone, tablet, office computer, etc… to connect to it instead of some big corporate data collector when you have a query, calendar appointment, message or etc…

We need a good collection of software packages that allow for this. The pieces are all out there, they just need to be put together into an easy to use package. I think it has to come from the OSS world. No, it’s not that I think there is a moral imperative to share everything for free. There is just too damn much money in this game for a commercial company to not keep your data and pass it around. Ever hear of fiduciary responsibility?

So should every non-techie home user be expected to become more proficient in computers/networks/etc to support this? Or do we need to make a software package that is as easy to use as possible so that normal people can figure it out?

Yes. We need both.

Seriously, it’s 2017, everything is getting connected to the internet, almost everyone carries an always networked pocket computer called a cellphone and even though it’s popular not to admit it we all love it. Moderately intelligent human beings should be able to figure out how to forward a g0d4mnd port already! Don’t want to know how your magic black boxes work? You probably learned much that was less relevant to your life in school already. Shut up and get over it.

But.. on the other side of it… nobody should be forced to learn to configure sendmail and ldap for example. There is no reason a software package can’t exist that is 99% plug and play with the exception of registering a dynamic dns name and port forwarding on the server end and entering that same dns name on each client.

Ok, end rant.

“Ok, end COPYPASTA”

ftfy. Also submit your copypasta to any copypasta database, so that all of twitch can chat spam your memes. Keep it up, as your copypasta can rank with the NavySeal pasta

shit comment is shit

Ok dumbass… I can quote a stupid meme too. “citation needed”. If I am copy pasting just show me from where!

Maybe ‘he’ is a corporate AI and doesn’t have the citation function.

Heh. My ISP doesn’t even have port forwarding capability. Their wireless router thingy has a password that they don’t give away. So to get around that, I had to build a virtual router on a Digital Ocean VM and *my* firewall, which is behind the crappy ISP router, keeps a VPN connection open to the DO VM 24/7. The upshot is I can port forward anything, even port 25, to my house. So I host my own email, Owncloud, security cameras (zoneminder) etc. But, there’s no way in hell that 99.99% of the people are going to have the ability to set something like this up even if they knew they should.

Well.. My experience is limited to Comcast where I used to live and AT&T and a smaller, local cable provider where I live now. AT&T’s router config was buggy but it could be done. The other two were no problem at all. Obviously none of us can speak about what is possible with the particular internet providers available in every area but I think Comcast and AT&T are both available in a pretty significant percentage of the continental US.

“Owncloud,” Yah, I’ve thought about trying that one myself quite a few times. It’s still a bit more work to set up than what I had in mind but it’s probably the closest thing to it currently available.

Does anyone else remember NetMax? This was back in 1999 or sometime around then. Linux was hard, you really did have to be a computer geek to install it. Linux had barely progressed beyond the days when you had to pick from 100 kernels to find the one that supported your display (at least in text mode) and your NIC just so you could download the source and build a kernel that supports everything else. Home routers, the magic black boxes purchasable at WallyMart that we know and love today were just not a thing.

Then these people came out with a series of CDs, one was a router, one was an email server, I forget what else they had. They were basically little single-purpose Linux distros but you never saw a commandline. Just put the cd in an old computer, let it boot and answer a couple of simple questions. (we did have a lot of Pentium 1s and 486s rotting in closets already) Bam! You have a router, server or whatever ready to go, no fuss, no muss. I don’t think it ever caught on but it literally seemed like days after I saw this that box store consumer routers started showing up and email went from being a paid service to free everywhere you look so why should it have caught on?

Anyway, I’m imagining something that installs like that. It would ask only a bare minimum of questions, mainly “what do you want your domain name to be… no sorry that’s taken try again… repeat till you have a good one”, username and password. It would reach out to DynDNS or whoever and create that domain for you. Most everything else would be good, sensible defaults. It would tell you to write down that domain, username and remember the password because that’s what you type into the mobile app on your phone, tablet, internet enabled sex toy, etc… The ‘geekiest’ thing a user would ever have to do is set up a port forward and that only until the system became powerful enough that routers started just shipping with it built in.

“But, there’s no way in hell that 99.99% of the people are going to have the ability to set something like this up even if they knew they should.”

No. There is no way in hell that 99.99% of the people are going to set it up. Having the ability is another matter. Understanding the concepts of what a port is and what port forwarding is isn’t rocket science. It’s like getting the mail in a big shared house where everyone likes to use a codename. You (the router) pull the letters out of the box (the internet), read the name on the envelope (the port number) and pass it to the correct roommate (the local pc). It’s just not that fucking hard.
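The shared-house analogy maps almost directly onto a lookup table. A minimal sketch in Python, with made-up LAN addresses and an invented `route` helper (this models the idea, it is not any particular router’s configuration format):

```python
from typing import Dict, Optional, Tuple

# Port-forward table: the name on the envelope (external port)
# mapped to the roommate it belongs to (internal host, internal port).
# The addresses below are hypothetical examples.
FORWARDS: Dict[int, Tuple[str, int]] = {
    25: ("192.168.1.20", 25),    # mail server
    443: ("192.168.1.10", 443),  # self-hosted web services
}


def route(dest_port: int, table: Dict[int, Tuple[str, int]]) -> Optional[Tuple[str, int]]:
    """Return where the router hands the packet, or None if nobody claims that port."""
    return table.get(dest_port)
```

Calling `route(25, FORWARDS)` hands the letter to the mail server at `192.168.1.20`; a port with no entry, such as 8080, comes back as `None`, which is the router leaving the letter in the box.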

In our society today people are proud to be ignorant. They turn off at any explanation of anything technical because they think actually knowing something is going to turn them in to Sheldon or something. They preach about how technology isolates us or is reducing their humanity or some other hippie bullshit like that while secretly they are trying not to shake because they are experiencing DTs from Facebook withdrawal.

The ‘geekiest’ thing a user would ever have to do is set up a port forward and that only until the system became POPULAR enough that routers started just shipping with it built in.

Agreed, this is probably the best solution, and to add what Dan said below, it will hopefully be possible with OpenAI and similar organisations open sourcing their data and neural network weights. A current example I liked would be this guy moving speech recognition from Dragon NaturallySpeaking to a self-hosted open source Kaldi implementation (possible to host on the same machine or your own remote server) https://www.youtube.com/watch?v=YRyYIIFKsdU

It’s funny how different people react to the possibility of being monitored.

We have a problem at the maker space I use where people don’t wash their dishes. So as a joke, I took an old camera lens, hot-glued an LED behind it, hooked it to a rangefinder, a hobby servo and an (Oh my gosh!) Arduino so it looks at you, then looks into the sink and looks back at you. I’m hoping people get the point that they should wash their dishes and not leave them in the sink for someone else to wash.

It’s cute in my opinion.

https://youtu.be/xb21zFURUvA

The first day it was installed, it lasted 2 hours before people started asking questions about the robotic camera, and it got unplugged.

So I explained it, put up stickers and turned it into an art installation.

https://goo.gl/photos/Mv9nujDzK6QEDe7J8

He’s been accepted now.

So, even in a maker space with tool-savvy people, monitoring can freak people out.

I expect audio monitoring is less obvious and therefore more accepted by most people.

Write their name on each dish with squeezable brown gravy.

Put up a sign boldly stating “A sink is like a toilet. Don’t leave your &%$ in it.”

Please leave nothing but clean water running down the drain.

General Issue to each, numbered sets of service.

You should have used a real camera, uploaded the offenders to YouTube, and then requested commentators to add all the insults possible. As a final touch, play the videos on a public monitor 24/7. Problem fixed.

” The correct term for this level of personal automation is “weak AI”. What I want is Artificial General Intelligence (AGI) on a personal level.”

I hate Siri/Google Now/Alexa being called “weak AI.” We need a new term, like “feeble AI,” or better yet, something that doesn’t include “intelligence” at all.

It’s designed to be a personal assistant to be able to do things with your phone, interacting with it via voice, and it’s an *incredibly* weak AI. It can’t improve itself. You can’t ask it a question and say “no, that’s not right, try again.” You can’t correct its voice recognition, allowing it to update models based on how you correct it. Hell, even Google’s “figure out my likes/dislikes” can’t figure out when you tell it a billion times you don’t like a certain topic that just because you search for that topic doesn’t mean you want it listed.

And its web search is basically “take whatever I told you and shove it into a search engine.” If you want a good example of how dumb this is, try asking it for “pictures of cats that are not brown.” Guess what you get back? BROWN CATS.

It’s not learning how to assist you. If anything, *you’re* the one doing the learning – learning how to interact with it. And I don’t think that should deserve a label that has “intelligence” anywhere in it.

“We need a new term, like “feeble AI,” or better yet, something that doesn’t include “intelligence” at all.”

I’ve always referred to these things as Artificial Stupidity, especially when I am being frustrated by one.

I agree, but there is a problem with the market forces at work here.

Personally, I blame Apple, but only insofar as they have taken user simplicity so far as to prevent you from doing anything outside what they perceive you to need.

I can manage my own music, and talk to other people to get suggestions on my own for new bands. Can I drag my own music to the memory card on an iPhone? No, because it goes against their plans to make money from you, and they think that they can do that better than you with iTunes. Not to mention that people are happy to make this trade to have the next popular, cool, shiny thing.

I want my own email, but my ISP doesn’t want me running servers, because they offer packages for business and hosting.

Capitalism is evolving to enable people to be lazy and replace not repair, and trade my “useless” information for convenience and free services. And the people who frequent a site like Hackaday are growing in number, but are FAR outnumbered. This will prevent us from becoming a market force and will keep other interests more attractive than ours.

> This will prevent us from becoming a market force

Market forces have nothing to do with it. The force is mediated by institutions and government. The force is entrenchment and barrier to entry. Don’t think in terms of individual markets, because market disruption is actually quite easy. Bring a better product to the table and you will certainly survive, if not completely take over the entrenched. No, the problem is getting the product to the table. You have to rely on all of their institutions and governmental rules that were designed by them to benefit them, before you can even open a business, before you can even make the product. And that’s if you can survive with food and shelter long enough to last through that period.

True capitalism is centered around market forces, and competition (really just the application of new ideas). Owning your own small business is much as capitalism as a big bank (maybe even more so). Monopolies as well as some of the other egregious acts committed by big business are done so because some people are just assholes. And given people at the top tend to rise to the top because they are narcissistic, we have what we have. Keep in mind socialism (or its distant cousins fascism or communism) is no different. The power hungry just have one career option (government service) as opposed to many; just look at Mao or Stalin if you need proof, or pretty much all human history prior to the late 1700s, when governments started down the modern democratic path with some respect for human rights. North Korea still hasn’t got the memo.

> Owning your own small business is much as capitalism as a big bank (maybe even more so).

Uhh, no. Capitalism is solely publicly traded/owned stocks (capital, aka stock markets), and the only market forces in that are baked right into the rules of the individual exchange. And those market forces tend to lie somewhere in between gambling and pyramid scheme.

You are also confusing economic systems and political systems.

You are also assuming that there’s some sort of “opposite” of capitalism, like on a scale or something, it doesn’t work that way. It is more like a 4 dimensional hyperweb of concepts. There are practically an infinite number of unexplored permutations.

Hard questions.

I know that AI has a long way to go, and it will be crawling for a long time till it’s good enough to start walking… but I do hate having half-baked AI foisted on us. (or allowed to screw us over, like robo-trading).

I’m older and just settling nicely into crabbiness, so that may well explain why I don’t use Twitter or FB, don’t use Siri or whoever else my ‘assistant’ is, can’t stand dealing with automated phone systems… At the same time I’m still a professional software developer, so I’m not quite Luddite material.

For me, I will accept optional, assistive AI, and I want as much control over how it works as possible. I HATE when systems are dumbed down, like the speech recognition systems that interact on the level of an autistic 7-year-old, because that’s the only way they currently work.

Anyway, I know that AI is inevitable and will ultimately be a net benefit, but frankly I want to see as little of it as possible until it’s out of the crib and has been in school for a few semesters.

> If my wife and I have a passing conversation about a musical coming to town I want my personal AI to remember and tell me when tickets go on sale. For that matter, I want it to know my seating and cost preferences for me and to check my calendar and my wife’s calendar to choose the perfect day, simply asking me to pull the trigger on the purchase. I want it to know that we usually will pair a show with a dinner or with drinks afterward and the collate our restaurant visit history to guess which place we would most enjoy visiting this time around. I want the moon.

For the record, this isn’t General Intelligence either. What would be AGI is you speaking the above paragraph to the AGI, and it replying, “OK Mike, I’ve written a program that does what you described, would you like to start using it?”

“I want service BUT I also want privacy.”

Sorry, dude, you CANNOT have both, just as you CANNOT get both faces when you flip a coin.

You have to choose. And because you are a lazy person, as 99% of the human race is, you will choose the service and you will renounce privacy. Only a few people have a character strong enough to resist. We, the 1% of the human race, will feel so alone, but we will never give in to AI.

Large-scale solutions tend to be generalised and so are most effective for the average user, but most of us are not Mr. Average. If you want AI that is tailored to your needs, you need to train it yourself. Fortunately, in most cases you can build on the “average” tools that have been made “open”. This is where OpenAI may be very useful. Rolling your own also ensures greater privacy, so long as you are sufficiently competent in how you implement its security features.

I think that this is a paradox. On one side, you as a person don’t want to be scrutinized, but on the other side the AI needs to know as much as possible in order to serve its purpose, and since many times we want it to do stuff instead of us, it needs to know as much as possible about us. By itself this won’t be a problem if the AI system is located near you and you can unplug, dismantle or destroy it (thus feeling superior and safe). But if it has a network connection and it’s hidden in an internet cloud, you don’t know where the information about you goes and you lose control over it, which starts the fear and paranoia. As an example pushed to the extreme (yet close to a possible future), see people living in fear of drones in Pakistan and nearby areas.

Another aspect is: where does being helped by AI become being controlled by AI? And I’m not talking about SF scenarios where AI gets mad and wants to kill us; I’m talking about the dependency it would create.

What will happen when AI is not able to solve a problem, or it ceases to function, or it goes nuts or starts making irreversible mistakes?

We still need to use and develop our brains, or we’ll end up letting them fall into a suicidal slumber.

We’ll need to use AI only as a tool and not let it take over our lives and decisions.

There’s an easier solution to this problem. How does a real person/assistant deal with a situation like this? They ask for feedback. Assistant: “Hey, you need to pay your bills.” Person: “I already paid my bills last week.” Assistant: “Oh, let me make note of that.” And so on. This feedback goes to the Google AI and it learns that every time it tells you that your bills are due, you respond saying you paid them at said time. The Google AI eventually sees the pattern and doesn’t remind you. But what if I forget one month? You yell at Google for not reminding you, and the Google AI works to understand what the outlier is that caused you to forget. Maybe it’s a birthday or maybe you scheduled a vacation, etc.
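
To make the feedback idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `BillReminder` class, the reply labels `"already_paid"` and `"missed_payment"`, and the threshold of three replies are all made up, and none of this reflects how Google Now actually works. It only shows the closed loop: replies feed back into whether the next reminder fires.

```python
from dataclasses import dataclass


@dataclass
class BillReminder:
    """Hypothetical reminder that adjusts itself based on the user's replies."""
    name: str
    # Assumption: three "already paid" replies in a row is enough to go quiet.
    quiet_after: int = 3
    already_paid_streak: int = 0
    muted: bool = False

    def should_remind(self) -> bool:
        """Return True if the reminder should still be shown."""
        return not self.muted

    def record_feedback(self, reply: str) -> None:
        """Close the loop: update state from what the user said."""
        if reply == "already_paid":
            self.already_paid_streak += 1
            if self.already_paid_streak >= self.quiet_after:
                self.muted = True
        elif reply == "missed_payment":
            # The outlier month: the user forgot, so start reminding again.
            self.already_paid_streak = 0
            self.muted = False


reminder = BillReminder("electricity")
for _ in range(3):
    if reminder.should_remind():
        reminder.record_feedback("already_paid")
print(reminder.should_remind())  # False: the pattern was learned

reminder.record_feedback("missed_payment")
print(reminder.should_remind())  # True: reminders resume after a miss
```

A real assistant would presumably also try to work out why the miss happened (the birthday or vacation case), which this sketch leaves out.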

After 30 years of PCs, I can still start typing a full sentence with Caps Lock on, with the first letter in lower case, and nothing stops me, warns me, or asks me to reverse the case of the characters. So it’s hard to believe the revolution is en route… How long will humans need to assist AI before AI can assist humans?

AI is really bad and dangerous.

Tuke

I believe this challenge could be mitigated as technology progresses to the point where AI can be hosted on a personal device, owned and maintained at home. This way, only the essential information required for a specific task would need to be shared externally.

I totally get the balance you’re aiming for—embracing tech’s benefits while holding on to personal privacy and humanity. It’s exciting to think about how far we’ve come, but also wise to keep some distance from the idea of AGI invading daily life for now. Definitely curious to see where this all goes!