Some people may think they’re having a bad day when they can’t find the TV remote. Yet there are some people who can’t even hold a remote, let alone root around in the couch cushions where the remote inevitably winds up. This entry in the Assistive Technologies phase of the 2017 Hackaday Prize seeks to help such folks, with a universal remote triggered by head gestures.

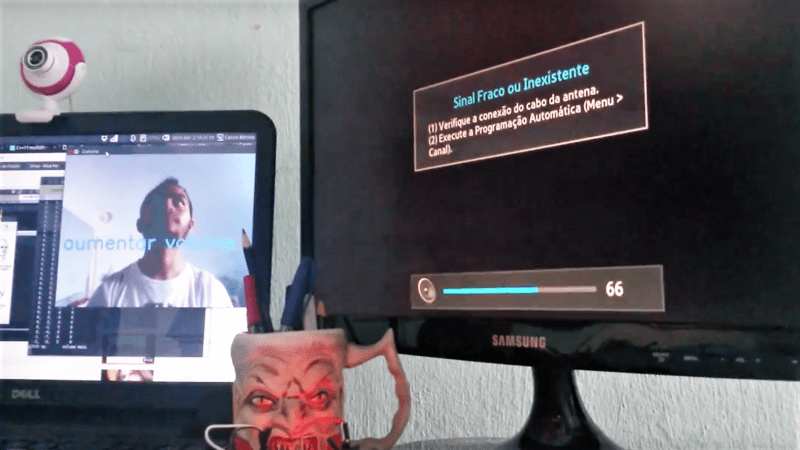

Mobility impairments can range from fine motor control issues to quadriplegia, and people who suffer from them are often cut off from technology by the inability to operate devices. [Cassio Batista] concentrated on controlling a TV for his project, but it’s easy to see how his method could interface with other IR remotes to achieve control over everything from alarm systems to windows and drapes. His open-source project uses a webcam to watch a user’s head gestures, and OpenCV running on a CHIP SBC looks for motion in the pitch, yaw, and roll axes to control volume, channel, and power. An Arduino takes care of sending the IR commands to the TV. The prototype works well in the video below; with the power of OpenCV we can imagine mouth gestures and even eye blinks adding to the controller’s repertoire.
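To make the idea of turning head motion into remote commands concrete, here is a minimal sketch in Python. It is not taken from the project's code: the function name `classify_gesture`, the 15-degree threshold, the command names, and the choice of which axis drives which command are all assumptions made for illustration. It also assumes the pitch, yaw, and roll angles have already been estimated (for example by an OpenCV pipeline), so it only covers the final step of picking one of six discrete outputs.

```python
from typing import Optional

# Hypothetical mapping: the article does not say which axis and direction
# triggers which command, so this table is an illustrative assumption.
COMMANDS = {
    ("pitch", +1): "VOLUME_UP",
    ("pitch", -1): "VOLUME_DOWN",
    ("yaw", +1): "CHANNEL_UP",
    ("yaw", -1): "CHANNEL_DOWN",
    ("roll", +1): "POWER_ON",
    ("roll", -1): "POWER_OFF",
}


def classify_gesture(
    pitch: float, yaw: float, roll: float, threshold_deg: float = 15.0
) -> Optional[str]:
    """Map head angles (in degrees) to one of six commands.

    Returns None when no axis exceeds the threshold, i.e. the head is
    close to its neutral pose. The threshold value is an assumption.
    """
    angles = {"pitch": pitch, "yaw": yaw, "roll": roll}
    # Pick the axis with the largest absolute deflection.
    axis = max(angles, key=lambda name: abs(angles[name]))
    if abs(angles[axis]) < threshold_deg:
        return None
    sign = 1 if angles[axis] > 0 else -1
    return COMMANDS[(axis, sign)]
```

Choosing only the dominant axis is one simple way to keep a slightly tilted nod from also being read as a roll; a real system would likely add smoothing over several frames before sending anything to the IR transmitter.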

The Assistive Tech phase wraps up tomorrow, so be sure to get your entries in. You’ll have some stiff competition, like this robotic exoskeleton. But don’t let that discourage you.

The “you could do this with a 555” equivalent of this: an EDtracker on a headband and an IR LED.

Hi, Steven. Thanks for the tip. I’d never heard about EDtracker.

I think using this EDtracker would somehow wire the user to the system, unless we add a Bluetooth connection or some other form of wireless communication to transmit the 3-axis information from the user’s head to the system.

Another option is to move the entire system to the user’s head, but in that case we’d need to have multiple IR LEDs, since performing yaw and pitch movements would block the line of sight between the LED and the controlled device.

If I’m wrong, please let me know :)

huahuehuahuebrbr

Well done on getting this working. I did something a bit like this by using the output from the FaceTrackNoIR software:

http://facetracknoir.sourceforge.net/home/default.htm

Here’s a link to my project page:

https://sites.google.com/site/hardwaremonkey/home/headgesture

Hi, Matt. I had a quick read through your webpage :)

From what I understood, you’re using face tracking to move from one position to another in a matrix-like interface on a desktop PC, aren’t you?

The technique we are using is called head pose estimation (HPE). For the remote control you see in my video, there are only 6 outputs (2 yaw, 2 pitch and 2 roll). Therefore, we call it discrete HPE. However, my guess is that you can also use HPE techniques to control a mouse cursor, for example. That would be called continuous HPE. I’m not a CV guy, just an enthusiast, but I think I’m not completely wrong :) If I am, please let me know.

Furthermore,

i) thanks for your comment. I’m using OpenCV and I had never heard of this FaceTrackNoIR software. We’ll look into that.

ii) nice work on your project. Doing AT stuff is awesome.

Hello Cassio,

I use the virtual joystick output from FaceTrackNoIR to measure the head movement. For testing this is then used to control a simple music player grid that we made using Sensory Software’s Grid 2 assistive communication software. This software is often used for constructing sentences using buttons and switches to enable communication for people who can’t speak. Thanks for looking at my site.

uhhhhhh…TrackIR

A remote is called a remote because whenever you need it, it magically appears in a remote place.