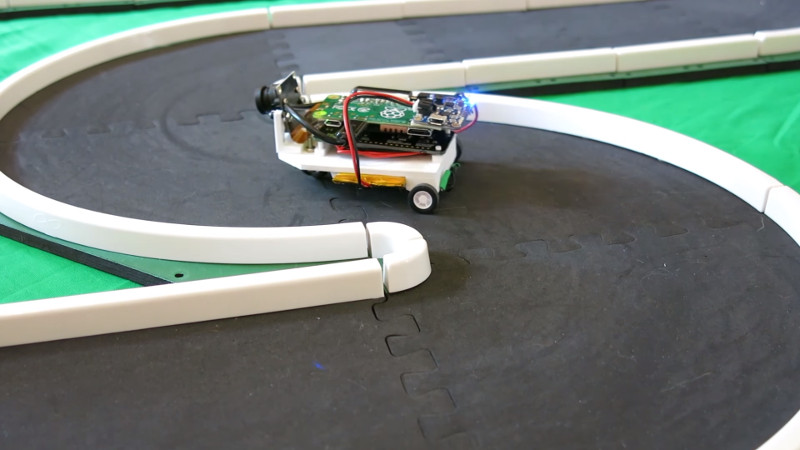

Self-driving technologies are a hot-button topic right now, as major companies scramble to be first to market with more capable autonomous vehicles. There’s a high barrier to entry at the top of the game, but that doesn’t mean you can’t tinker at home. [Richard Crowder] has been building a self-driving car at home with the Raspberry Pi Zero.

[Richard]’s project is based on the EOgmaNeo machine learning library, which uses a type of machine learning known as Sparse Predictive Hierarchies, or SPH. The algorithm is first trained with user input: [Richard] drove the car around a small track while the algorithm took into account his steering and throttle inputs and monitored the feed from the Raspberry Pi camera. After a few laps of training, the car is ready to drive itself.
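To make the train-then-drive idea concrete, here is a minimal sketch in Python. It is not SPH and does not use the EOgmaNeo API; it swaps in a plain linear model trained online with normalized least mean squares, purely to show the shape of the loop. The `OnlineDriver` class, the 4x8 frames, and the steering convention (positive means steer away from a wall on the left) are all assumptions made up for illustration.

```python
import numpy as np


class OnlineDriver:
    """Stand-in learner (NOT SPH): a linear map from pixels to
    (steering, throttle), trained online with normalized LMS."""

    def __init__(self, n_pixels, learning_rate=0.5):
        # One weight row per output; the extra column is a bias term.
        self.weights = np.zeros((2, n_pixels + 1))
        self.learning_rate = learning_rate

    def _features(self, frame):
        pixels = np.asarray(frame, dtype=np.float64).ravel()
        return np.append(pixels, 1.0)

    def predict(self, frame):
        """Return the predicted [steering, throttle] for one frame."""
        return self.weights @ self._features(frame)

    def learn(self, frame, steering, throttle):
        """Nudge the weights toward what the human driver just did."""
        x = self._features(frame)
        error = np.array([steering, throttle]) - self.weights @ x
        # Normalized LMS step; x @ x >= 1 because of the bias feature.
        self.weights += self.learning_rate * np.outer(error, x) / (x @ x)


# Hypothetical 4x8 grayscale frames: a bright wall on one side of the view.
wall_left = np.zeros((4, 8))
wall_left[:, :4] = 1.0
wall_right = np.zeros((4, 8))
wall_right[:, 4:] = 1.0

driver = OnlineDriver(n_pixels=wall_left.size)

# Training laps: the "human" steers away from the wall at constant throttle.
for _ in range(50):
    driver.learn(wall_left, steering=+1.0, throttle=0.5)
    driver.learn(wall_right, steering=-1.0, throttle=0.5)

# Autonomous mode: the model now supplies the controls itself.
steer_left_wall, throttle_left_wall = driver.predict(wall_left)
steer_right_wall, throttle_right_wall = driver.predict(wall_right)
print(steer_left_wall, steer_right_wall)
```

The real project learns from a live camera and a far richer model, but the pattern is the same: learn from the human's inputs frame by frame, then switch to using the model's own predictions.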

Fundamentally, this is working on a much simpler level than a full-sized self-driving car. As the video indicates, the steering angle is predicted based on the grayscale pixel data from the camera feed. The track is very simple and the contrast of the walls to the driving surface makes it easier for the machine learning algorithm to figure out where it should be going. Watching the video feed reminds us of simple line-following robots of years past; this project achieves a similar effect in a completely different way. As it stands, it’s a great learning project on how to work with machine learning systems.
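As a rough illustration of why high-contrast walls help, the sketch below reduces a camera frame to a small grayscale grid, which is the kind of input a steering predictor could work from. The write-up does not say how the grayscale conversion is done, so the Rec. 601 luma weights, the 64x48 frame size, the downsampling factor, and the function names here are all assumptions.

```python
import numpy as np


def to_grayscale(rgb):
    """Convert an HxWx3 RGB frame (0-255) to HxW grayscale in [0, 1].

    Uses Rec. 601 luma weights; this is an assumed choice, not
    necessarily what the project itself uses.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    return (rgb @ np.array([0.299, 0.587, 0.114])) / 255.0


def downsample(gray, factor):
    """Average non-overlapping factor x factor blocks.

    Rows and columns that do not divide evenly are cropped.
    """
    height, width = gray.shape
    height -= height % factor
    width -= width % factor
    blocks = gray[:height, :width].reshape(
        height // factor, factor, width // factor, factor
    )
    return blocks.mean(axis=(1, 3))


# Hypothetical 64x48 frame: dark floor with a white wall along the left edge.
frame = np.zeros((48, 64, 3), dtype=np.uint8)
frame[:, :16] = 255

small = downsample(to_grayscale(frame), factor=8)
print(small.shape)
print(small[0])
```

With a white wall against a dark floor, the wall survives even aggressive downsampling as a clean block of bright cells, so a small model has very little to untangle.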

[Richard]’s write-up includes instructions on how to replicate the build, which is great if you’re just starting out with machine learning projects. What’s impressive is that this build achieves what it does with only the horsepower of the tiny Raspberry Pi Zero, and fits it all into a package of just 102 grams. We’ve seen similar builds before that rely on much more horsepower, in both processing and propulsion.

What does it do if the track layout is changed?

probably nothing good

it would need to be retrained

just like a real race driver needs a few practice laps…

In the video, the training was done counterclockwise, while the self driving was clockwise, so it looks like the track layout doesn’t matter.

Ouch, what I just wrote is not true!

Both the training and the self-drive are counterclockwise.

I should have watched the whole video, sorry.

:o(

Hey, one of the authors of the original post here!

We did a video prior to this one where we have a larger car drive around outdoors on paths it has never seen. As for this mini car, it has been tested by driving backwards on the track.

Perfectly memorizing a track is actually way harder than just learning how turns work. The motors are not nearly precise enough to use any sort of dead reckoning. Generalization in this case is actually easier than memorization!

Not seeing how this is different from a 60s maze follower, that instead of learning by trial and error itself needs a monkey in the loop.

You mean the one holding the controller?

Yup, monkey in loop technology is anything that (still) has to be fudged with human intervention (unless you’ve got some smart actual monkeys around to fill in.)

it looks cute

Impressive for the video tracking and analysis part… but for pure learning and speed, check out the Japanese/Taiwanese/Asian dominated micromouse sport…

https://youtu.be/IngelKjmecg

Arrgh (no edit)… I should have added that each mouse gets a learning/searching run first which can seem slow, then several full speed attempts at completing the maze. Be sure to watch the whole thing, or jump to 7:00 if you must, here:

https://youtu.be/IngelKjmecg?t=420

So I should get myself a raspi cam and get this Roomba thing running.