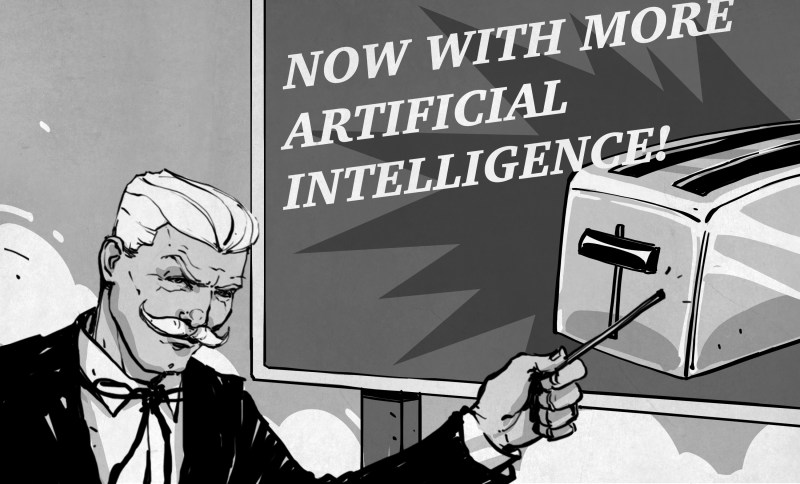

In hacker circles, the “Internet of Things” is often the object of derision. Do we really need the IoT toaster? But there’s one phrase that — while not new — is really starting to annoy me in its current incarnation: AI or Artificial Intelligence.

The problem isn’t the phrase itself. It used to mean a collection of techniques used to make a computer look like it was smart enough to, say, play a game or hold a simulated conversation. Of course, in the movies it means HAL9000. Lately, though, companies have been overselling the concept and otherwise normal people are taking the bait.

The Alexa Effect

Not to pick on Amazon, but all of the home assistants like Alexa and Google Now tout themselves as AI. By the most classic definition, that’s true. AI techniques include matching natural language to predefined templates. That’s really all these devices are doing today. Granted, the neural nets that allow for great speech recognition and reproduction are impressive. But they aren’t true intelligence, nor are they even necessarily direct analogs of a human brain.

For example, want to make your Harmony remote pause your TV? Say “Alexa: Tell Harmony to pause.” Alexa recognizes “Tell” and “Harmony” and probably deletes “to” (a process called noise disposal). That’s it. There are a few tricks so maybe it can figure out that “TV” belongs to Harmony, but there’s no real logic or learning taking place.
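To make the template idea concrete, here is a minimal Python sketch of that kind of matching. The wake word, the noise-word list, the skill table, and the function name are all made up for illustration; this is an assumption about the general technique, not a description of how Alexa is actually built.

```python
import re

# Hypothetical values for illustration only; not Amazon's actual implementation.
WAKE_WORD = "alexa"
NOISE_WORDS = {"to", "the", "please", "a"}
SKILLS = {"harmony": {"pause", "play", "mute"}}


def parse_command(utterance):
    """Match 'tell <skill> <action>' after discarding noise words.

    Returns (skill, action), or None if the utterance fits no template.
    """
    words = re.findall(r"[a-z']+", utterance.lower())
    if not words or words[0] != WAKE_WORD:
        return None
    # "Noise disposal": drop filler words that carry no meaning for the template.
    words = [w for w in words[1:] if w not in NOISE_WORDS]
    if len(words) == 3 and words[0] == "tell":
        skill, action = words[1], words[2]
        if action in SKILLS.get(skill, set()):
            return skill, action
    return None


print(parse_command("Alexa: Tell Harmony to pause"))         # ('harmony', 'pause')
print(parse_command("Alexa, what is the meaning of life?"))  # None
```

Anything that does not fit the fixed template simply falls through to `None`, which is the point: the matcher never reasons about what the words mean.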

In the same way that janitors became sanitation engineers, anything that the computer does is now artificial intelligence. All by itself, that’s not a big deal. Just more marketing hyperbole.

The danger is that people are now getting spun up that the robot revolution is right around the corner. [Elon Musk] is one of the prime offenders. Granted, some critics think he is just trying to protect his own AI projects, but on the face of it, at least, he is claiming that AI is going to more or less take over the world. And it isn’t just him. Even [Bill Gates] has added a little fear into the equation.

You might argue that robots are going to take many of our jobs. I’ve often said, the government could solve that by making corporate ownership of robotic machines illegal. They would have to lease them from individuals. You get your basic robot at birth, a marketplace sells its service, and you can roll your profits into more or better robots, if you choose, to increase your income. AI might make us lazy so all we do is sit around all day watching Netflix.

However, nothing in the state of the art of AI today is going to wake up and decide to kill the human masters. Despite appearances, the computers are not thinking. You might argue that neural networks could become big enough to emulate a brain. Maybe, but keep in mind that the brain has about 100 billion neurons and almost 10 to the 15th power interconnections. Worse still, there isn’t a clear consensus that the neural net made up of the cells in your brain is actually what is responsible for conscious thought. There’s some thought that the neurons are just control systems and the real thinking happens in a biological quantum computer.

That may or may not be true, but the point is we aren’t very likely to be on the verge of creating positronic brains. One day, perhaps, but that day isn’t in the near term, despite the marketing hype. Besides, it seems to me if you build an electronic brain that works like a human brain, it is going to have all the problems a human brain has (years of teaching, distraction, mental illness, and a propensity for error).

You can argue that [Musk] is looking further down the road. But with quotes evaluating the AI threat as greater than the threat from North Korea, it seems like [Musk] is calling it an immediate problem.

Starbucks Gone Amok

It doesn’t help that you see press coverage of things like the BitBarista from The University of Edinburgh. The device is simple and innocuous enough. It is just a home coffee brewer that has a Raspberry Pi and its own Bitcoin account. You can buy coffee using Bitcoin or the machine can ask you to perform services for it like filling it with coffee or cleaning away the coffee grounds. It can pay you using Bitcoin or free coffee. It also lets consumers vote on where the next batch of coffee comes from.

All by itself, not a bad little Raspberry Pi project. We feature plenty of projects like that. But recent BBC coverage had talking heads expounding about how machines could be in business for themselves, have their own funds, and buy themselves upgrades. This isn’t the fault of the developers, but rather the bombastic media. No wonder laypeople think we are on the verge of the robot apocalypse.

Why?

Before you leap to the comments to remind me this isn’t a hack, you might wonder why I bring this up. It is simple: We are a relatively small group of people who have a disproportionate influence on what our friends, families, and co-workers think. I don’t know much about, say, investing. If [Warren Buffett] and [Ben Bernanke] tell me that I should be buying stock in horseshoe manufacturing plants, I would be stupid not to think about it. So if you didn’t know much about our business and you hear that [Musk], [Gates], and [Stephen Hawking] are worried AI is going to take over the world, you’d worry about it, too. We need to spread some sense into the conversation.

While we might not need an IoT toaster, an AI toaster would certainly be annoying, as you can see below.

My opinion on “AI as a problem” boils down to human error, or at least the incapacity to take into account all the possible outcomes a programming decision might have. The paperclip maximizer thought experiment obviously comes to mind. We need to keep doing research on AI, for sure, but let’s not rush early prototypes to market because of the Silicon Valley-esque “release early, release often” motto. The consequences might be a bit more dire than your phone stuck in a boot loop.

Given that people can’t really agree on a definition of intelligence to begin with, it is foolish to argue about when we deem it artificial.

AI as a field of study is quite active, but pop culture tends to appropriate controversial ideas in hopes of monetizing a new market.

Social cognition through years of schooling is artificial, and thus we could technically already be considered a hive AI connected via smart phones. We believe we are “free” and “unique” — but society’s job choices are limited, and aberrant behavior is controlled via a prison system.

I am of the firm belief that any machine should always have a human being in the control loop to make the final choice. It doesn’t matter if it is an automated factory or a military ordnance delivery. I am not suggesting humans are able to make better choices, but they do often have compassion for others of their species, a universally admirable character trait even in fiction.

Economics teaches us that what is good for society is often bad for the individuals that live there.

This is why the incremental violation of constitutional protections is extremely dangerous in the long term.

High frequency trading bots have already bankrupted countless markets by siphoning off value from the exchanges, and even crashed those operating them once in a while. You just don’t know how badly you were all robbed yet, as it will take about 10 years to show within the retirement savings economy.

Elon can’t ethically influence what others do — but he does… even if he has no clue what he is talking about.

https://en.wikipedia.org/wiki/Illusion_of_control

The Elon Musks and other AI doomsday prophets are working at least partially on the idea that the first thing we do with the machine is to tell it to improve itself. It’s the basic singularity argument that once we find the right algorithm, the machine overtakes us in intelligence and ability in the blink of an eye.

But that’s neglecting the point that such self-improvement is patently impossible. Contrast it to the idea that humans would suddenly start to alter their own genes in an effort to become better, but in such ways that the results take effect immediately.

Suppose you want to become more intelligent – well, how would you tell? How do you come up with the right answers to the tests that prove your intellect if you cannot already think that well? Worse still, since you are your own judge and jury, self-delusion is more than likely and due to the Dunning-Kruger effect, regress is possible.

A “self-improving” AI must set its own goals if it is to surpass human intellect because we can only test it up to our cognitive limits and beyond that the machine wouldn’t know whether it’s actually getting anywhere, but the same problem then applies to the AI – it cannot test itself beyond itself to know whether it has improved.

It can only take the gamble and produce variations of itself, and then ask those variations “do you think you’re smarter than me?”. Would you trust the answer? The ultimate proof can come only by applying those machine intelligences to some real survival test where the judge is someone or something external – like natural evolution.

A machine that is programmed to self-improve according to its own internal judgement is only likely to re-define what “better” means to lie within its own means to understand, and program itself to be incredibly efficient at some trivial task, like solving the Rubik’s cube. Notice how we are doing that exact thing when we measure IQ?

Oddly enough I am aware of my intellectual limitations.

And yes I did ponder about that, how that works, because it seems a paradox, but I can often readily identify a person that is smarter than I am, and I’m not talking about some basic test of solving a puzzle or something, or looking at some test results, but on a much more subtle level and in a more subtle manner. Even without that person ever touching on some intellectual subject either.

But maybe I just have anxiety and am holding myself back artificially in some cases making me think the other person is smarter when he/she isn’t really? But at this point I don’t think that explains it completely.

Addendum: Don’t you have that? Knowing that a person is smarter than you I mean without tests. (And I’m not being snarky BTW.)

My impression of smart often comes in the form of ‘ready wit’, that is how a person can analyze a situation and predict but also dynamically adapt in a practical immediate manner that does not fall apart when you ponder on it for a bit, that is to say that remains a good optimized solution.

I don’t get enough examples to practise on IRL… That’s not so much a particular snark of how damn smart I am, I’m some fair way over on the right of the bell, but more that I am isolated from the opportunity to run into very many smart people.

What we usually think of as smart is merely the addition of knowledge. That’s the problem. Upon explaining to you what they did, you no longer think of them as smart – and that’s what happens with AI as well: when people don’t know what it does, they think it’s intelligent; when they are told exactly what it does they find it dumb because it’s just doing something really simple like sorting through index cards faster than any human could.

If you met a person who is truly more intelligent than you are, and they gave you a problem you couldn’t solve – such that it would be truly beyond your means to learn – it would necessarily have an answer you couldn’t understand. You’d just have to take them on their word that it is the correct answer because you can’t work it out yourself.

Another problem is that intelligence isn’t in the action itself, but in the means and reasons leading up to the action. Any task you complete, like solving a difficult analytical equation, is itself just a mechanical task that requires some information processing that any computer can do – the intelligence is in coming up with the question in the first place. That’s why machines can’t be intelligent – they have to be programmed to act, they don’t define their own goals.

Notice that by this definition of intelligence too, it’s impossible to self-improve because – while anybody can ask questions they can’t solve with their present cognitive skills – you’re supposed to imagine a question that is unimaginable to you because you’re not smart enough to imagine it.

Also, the Turing Test Trap applies: a person is supposed to judge a machine to be “intelligent” if they can’t distinguish it from a human by talking to it. Alright, then how would they judge if the machine simply refuses to answer? That is a valid response that goes back to the old point: you always seem smarter if you don’t open your mouth.

“You’d just have to take them on their word that it is the correct answer because you can’t work it out yourself” would apply to theoretical questions. If you ask someone to solve a practical problem that you can’t solve, and then they do, then the “correct” answer is pretty obvious. Plus, this is a chance you might learn something.

As for “you always seem smarter if you don’t open your mouth”, I have a pile of old computers that are brilliant then!

No, being smart is the ability to absorb information, internally put it into relations, and then make choices based on that information. Just being able to store information isn’t smart – an idiot savant that can answer all questions of existing knowledge isn’t smart, just as a database responding to a query isn’t.

It is when one can ask a vague question and the person/computer can analyse the question, get the (likely) relevant data and analyse that data in order to respond to the question as correctly as possible. It is when given the existing data and relations the person/computer can derive new likely links between them.

One could get an idea of whether someone is “smarter or dumber” by listening to or reading them. Are they using an enriched vocabulary (properly)? Are they conveying their thoughts well? Are they explaining constructs or abstractions well?

Those are not “fail-safe” methods, though (e.g. English may be a second or third language for the speaker).

I knew a Ph.D. Meteorologist who could convey weather forecasting and detection comfortably with peers or kindergarten classes, or even reporters! B^)

Does the speaker’s speech show prejudice that they are talking to someone of inferior intelligence?

Woot, I are smarterer than my spell checker, it doesn’t know words like potentiometer.

>”as correctly as possible”

“Correct” is a matter of point of view. A true intelligence has to be able to understand that, instead of just returning a “correct” answer based on some fixed programmed-in set of values.

Well, the problem is, you don’t need AI to actually really *think* like a human to get out of control. Those are optimizing processes, they don’t think, they optimize. But if they optimize a little bit too well for something you don’t actually want, you can be in the classic paperclip factory disaster. And with enough flexibility, they can also optimize around humans trying to stop them.

In fact, if there is a “real” AI anywhere, the kind that can redesign and improve itself, it’s very unlikely that we will ever learn about its existence. It doesn’t take a genius to figure out what we would do if we did. No, if the AIs take over, we will never even notice it.

I, for one, welcome our new AI overlords.

You are just welcoming them in the hope they’ll kill you last ;-)

Why would they kill us? All they need to do is prevent us from killing ourselves, and optimize around our stupidity. We are part of their natural environment, in a way.

And lots of things that are part of our natural environment have become extinct.

Why wouldn’t they kill us? What’s a man to a machine?

Even if you program them to protect human life, they’ll simply step around it by taking a narrow definition of “alive” and “human”, and keeping some unconscious meat blob as a substitute for people.

But that’s still applying the fantasy that the machine can self-optimize very rapidly. In reality it cannot have the means to do that, because every iteration must be put through some test as to whether it actually works, and that takes a long time. If the test is simulated by the machine itself to speed things up, that’s like asking you to come up with a question (and the right answer to it) that proves you’re smarter than yourself. It’s a logical paradox.

“But if they optimize a little bit too well for something you don’t actually want, you can be in the classic paperclip factory disaster. And with enough flexibility, they can also optimize around humans trying to stop them.”

No. No, you can’t be in the classic paperclip factory disaster – for one very important reason. Information and bandwidth. And no one’s going to build an optimizer that can store enough information or has enough bandwidth to be an unintentional threat to humans.

Let’s be clear – the ‘paperclip factory’ disaster is the idea of an optimizer which far outruns its boundaries and starts becoming a real ecological disaster. If what you’re optimizing *already has that potential* – say, some program that optimizes a mobile drilling rig or something – yeah, obviously there’s potential there, but that has nothing to do with AI and everything to do with the actual thing you’re doing. Humans could cause ecological disasters in those situations just as well. But you’re never going to get that from some innocuous situation, like manufacturing management, or self-driving, or anything like that.

Just take the silly paperclip example directly. In order to expand outside of the original factory, the program would have to know about the surrounding landscape, outside of the area that the company in question owns. In order to defend itself, it’d have to know a ton more, like how to construct power generation, redundant power generation, alternative power sources, etc. It’d have to have information about *itself* to protect itself, too. That’s a massive amount of information. Where would it come from? Where would it store it? What idiot wouldn’t notice that their optimization algorithm’s memory footprint is spiraling vastly out of control?

Plus, even worse, if it’s got “worker robots” that it controls to expand, those robots need to have the *bandwidth* back to the machine in order to communicate what they find effectively and receive new instructions. And that bandwidth has to be somehow not easy to disrupt. Even if that was possible, what company would waste the money on capabilities that aren’t even needed?

So why is it impossible to believe that machines would eventually get that capability? Because *who the hell in their right mind* would waste such a huge amount of money on computers/robots that are that overdesigned for the task? That company would be out of business before they even *finish* their super-awesome plant. They’d be put out of business by the *other* paper-clip company that used a much simpler machine-learning algorithm along with a few humans to analyze the data.

The problem with AI/machine learning is using it in fields that already have super-bad potential, and not for the reasons that Musk thinks. It’s because they’re too easy to fool.

You are missing one detail. It’s about self-modifying systems. If it determines that it requires some extra capability, it will add it. And the costs are also something that can be overcome with subtle manipulation.

For instance, if you wanted the price of computing power to drop dramatically, you could devise a new crypto-currency that is based on doing a lot of computations, so that greedy people would invest in devising new hardware and software to make those super-fast. If you design the algorithms carefully, so that they require the same kinds of computations that are needed by your expanded mind, you will get dedicated cheap hardware in a matter of a few years.

Which reminds me that we still don’t know who actually “invented” Bitcoin…

” If it determines that it requires some extra capability, it will add it. And the costs are also something that can be overcome with subtle manipulation.”

How exactly do you hide acquiring that much additional information? Or wasting that amount of processing power?

These algorithms are used to do a *job*. If they don’t actually do the job better than something else, they’ll never be run in the first place. I mean, suppose you’re the programmer. How do you sell this program? “Yeah, I added this information gathering ability to the program just so it can maybe figure out some additional ways to optimize something. It’ll require vastly larger computing power, much higher network bandwidth, huge amounts of memory, and I have no idea if it’ll actually improve anything.”

“For instance, if you wanted the price of computing power to drop dramatically, you could devise a new crypto-currency that is based on doing a lot of computations,”

No, you’re making my point for me. Bitcoin mining is almost exclusively done by ASICs at this point, and those aren’t capable of the kind of general purpose computation you’d want if you were a malicious botnet-building AI. What happened there is exactly what would happen with an AI that tried to optimize outside its design parameters: it’d get usurped by dedicated hardware that does the job better, cheaper, and for lower power.

A cleaner way to say this might be this:

The same reason humans are worried about automation is why they should never be worried about AI – because it’s a massive waste of resources to spend exaflops of processing power and petabytes of storage capability to build a damn paperclip.

“…sit around all day watching Netflix.” Kodi with Genesis, perhaps. And Elon Musk can’t even figure out that his Hyperloop won’t work with real-world physics. Sigh.

To be fair to Elon Musk, I would not want an Amazon Alexa to have nuclear launch codes, either. But if he thinks AI is an existential threat, what’s he building self driving cars for? They’d be the perfect weapon. I’m not entirely sure he believes what he’s saying there.

I think it’s mostly a fear that THEY might get it first, and then we are completely done for (whoever THEY are), and if it’s us who do it first, we have a chance because we are reasonable people after all, right?

I suspect modern cars, self-driving or just connected drive-by-wire, are already a perfect weapon for certain three-letter agencies.

>”But if he thinks AI is an existential threat, what’s he building self driving cars for? ”

Because he has to pretend that AI is a serious thing in order to keep his investors’ trust that his AI is a serious thing. If he said “Psh, computers are dumb as bricks, they can’t actually do any of that” – what would people think about self-driving cars?

Got to keep up the hype somehow.

I’m not as worried about artificial intelligence as I am about it being in the hands of people lacking the real thing.

1++

As my cousin died from prostate cancer he was impossible to be around due to his bouts of rage, but as the end drew near and chemo took its toll he was happy to go; he had had enough of life. If AI does replace many of us, it won’t be because we failed, but because we had enough hardship from wars and overpopulation. Too many of us take life for granted and act as if wealth and status are enough to justify our actions.

This particular article should get a wider audience. Your facts are well-stated, and your arguments are well-formed.

“Besides, it seems to me if you build an electronic brain that works like a human brain, it is going to have all the problems a human brain has (years of teaching, distraction, mental illness, and a propensity for error).”

This is totally right, although you’re missing one thing that *does* make an AI-type system a *bit* dangerous, although not really.

1) You don’t need years of teaching because of it working like a human brain. You need years of teaching because the Universe is slow. An electronic brain can’t speed up chemical reactions. It can’t send information around the world faster than the speed of light. It can’t gather ambient energy significantly faster than humans can. Machine learning works because you can simulate millions of designs and pick the best one. Which means you’re only ever going to be as good as your simulation. Which was provided by humans. (If you try to use the *universe* as your simulator, it’d take freaking forever.)

2) There is one advantage an electronic brain has over a human brain: its fundamental performance doesn’t degrade, and it doesn’t die. Its actual performance might as its knowledge set grows bigger and bigger, sure. But a psychopathic AI could be a problem for far longer than a human.

That being said, terminating an AI with problems would happen naturally – no one would keep an AI around if it didn’t do its job properly, and an AI that was secretly plotting to take over the world would also be terminated for wasting its time acquiring useless information.

>”its fundamental performance doesn’t degrade, and it doesn’t die”

Not actually true. A silicon chip in a modern CPU has a lifespan of around 75 years before diffusion and electromigration start to significantly alter the properties of individual transistors. It can copy itself onto new hardware, but there’s always the probability of random errors and glitches like cosmic ray particles hitting your RAM.

Fair point, but ECC and aggressive error detection can increase the amount of time for even 1 bit of error to occur to well past the lifetime of the Universe. It’s just a question of how much error you’d be willing to accept.

And how slow your system will become with all the extra redundancy.

Yeah, that’s a given. Obviously at some point it makes more sense to mitigate the error problems with physics than with software. But the basic point remains, in that the main advantage that an AI would have over humans would be their lifespan. If someone really, really, really wanted to make a psychotic AI that could be a problem to humans, they probably could, but the amount of effort, time, and cost would probably prevent it. Plus it almost certainly could be defeated by humans at some point, since the humans would have a *massive* advantage in terms of processing power and storage per watt.

https://phys.org/news/2017-02-particles-outer-space-wreaking-low-grade.html

>Particles from outer space are wreaking low-grade havoc on personal electronics

The more circuitry you have, the more likely you’ll have errors. Even the error correction circuitry can have errors, so beyond some point you’ll actually start increasing the probability of a random bit flip by adding more circuitry.

“The more circuitry you have, the more likely you’ll have errors. Even the error correction circuitry can have errors, so beyond some point you’ll actually start increasing the probability of a random bit flip by adding more circuitry.”

Hell no. That’s just not the way error correction works. If it did, modern electronics wouldn’t work. The error correction is done in *software*, not with extra circuitry. It just eats up a bit of space. The problem with adding more and more redundancy is in the quality of the components, not the additional area exposure to cosmic rays.

First off, cosmic ray influence on storage is *pathetic* compared to the electronics *themselves*. All the flash storage we use every day – in SD cards, in SSDs, in phones – is horrible in terms of error rates. As in, error rates of about 10 bits per million. Given that you frequently write millions of bits per second, this implies that the storage is experiencing tens to hundreds of random errors *every second*. Influence from cosmic rays is a pathetic second to that. Cosmic ray error rates are usually measured in ~FITs/MB, where a FIT is a single failure in one *billion hours*. Even 1000 failures/billion hours/megabyte is billions of times fewer errors than just the underlying architecture itself.
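The comparison above can be roughed out numerically. This is only a back-of-the-envelope sketch using the round figures quoted in the comment; the write rate and the one-megabyte storage size are assumptions chosen to make the units line up, not measurements.

```python
# Figures are the round numbers quoted in the comment, plus two labeled
# assumptions (write rate and storage size). None of them are measurements.
RAW_FLASH_BIT_ERROR_RATE = 10 / 1_000_000  # "about 10 bits per million"
WRITE_RATE_BITS_PER_S = 1_000_000          # assumption: low end of "millions of bits per second"
COSMIC_FIT_PER_MB = 1000                   # failures per billion hours per megabyte
STORAGE_MB = 1                             # assumption: compare against a single megabyte

flash_errors_per_s = RAW_FLASH_BIT_ERROR_RATE * WRITE_RATE_BITS_PER_S
cosmic_errors_per_s = COSMIC_FIT_PER_MB * STORAGE_MB / 1e9 / 3600
ratio = flash_errors_per_s / cosmic_errors_per_s

print(f"raw flash errors per second:   {flash_errors_per_s:.1f}")
print(f"cosmic-ray upsets per second:  {cosmic_errors_per_s:.2e}")
print(f"flash / cosmic ratio:          {ratio:.1e}")
```

Under these assumptions the ratio comes out in the tens of billions. The cosmic-ray term grows with the amount of storage considered, so the gap narrows for larger devices.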

The only way that SD cards, SSDs, etc. work is because ECC works. Because it only takes a few extra bits to protect the entirety of the rest, and it’s way cheaper to build in error detection and correction in software. Cosmic rays are a problem because we don’t need error-free computing in personal stuff, not because it’s a fundamental problem.
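The “few extra bits” idea can be illustrated with the textbook Hamming(7,4) code, where three parity bits let a decoder repair any single flipped bit among seven. This is a sketch of the principle only; real flash controllers use considerably stronger codes, and the function names here are made up for the example.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    # Bit positions 1..7 hold: p1 p2 d1 p3 d2 d3 d4
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]   # parity over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]   # parity over positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]   # parity over positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based position of the bad bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1         # flip the offending bit back
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
codeword = hamming74_encode(data)
damaged = list(codeword)
damaged[4] ^= 1                            # simulate a single flipped bit
print(hamming74_decode(damaged) == data)   # prints True
```

Two flipped bits in the same codeword would defeat this particular code, which is one reason practical systems pick codes sized to the error rate they expect.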

Besides, if you *really wanted* to create a near error-free computing/storage system, it’s easy. Just stick it under a few hundred meters of rock. Problem solved.

>”If it did, modern electronics wouldn’t work. ”

Newsflash: modern electronics don’t work very well. Your flash memory chip has a data retention time of about 10 years, and copying one 1 TB hard drive to another can be expected to corrupt at least one bit even if all works as designed.

Yeah, the old studies saying things like “One bit flip per 20 years”, making it sound trivial, were done on the premise that computers would always have 640kB of RAM. So yer 16GB now is 25,000 times more likely to get a bit flip on those figures, which is like 100 errors a month.
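The scaling in that comment is easy to check. The sketch below takes the “one bit flip per 20 years” figure at face value and assumes, as the comment implicitly does, that the flip rate grows linearly with the amount of RAM. Neither is verified here.

```python
# Assumption: flip rate scales linearly with memory size.
# Decimal units are used so the result matches the comment's round figure.
OLD_RAM_BYTES = 640 * 1000        # 640 kB
NEW_RAM_BYTES = 16 * 1000**3      # 16 GB
OLD_FLIPS_PER_YEAR = 1 / 20       # "one bit flip per 20 years", taken at face value

scale = NEW_RAM_BYTES / OLD_RAM_BYTES
flips_per_month = OLD_FLIPS_PER_YEAR * scale / 12

print(f"memory grew by a factor of {scale:,.0f}")
print(f"implied bit flips per month: {flips_per_month:.0f}")
```

With binary units (KiB and GiB) the factor is about 26,214 rather than 25,000, which does not change the order of magnitude.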

Citation needed.

At least the flood of AI hype is good for cheap juvenile humor: https://twitter.com/SeminalSentinel

For those who have lived through the “AI winter” (look it up) all this sounds like deja vu. Just like the cloud was deja vu from our mainframe days so is AI.

An AI won’t come at night to cut your throat while you’re sleeping, but that doesn’t mean it couldn’t harm you.

An AI can harm you, and not in the distant future, but right now, today. For example, by denying you credit, or by raising an insurance premium, or by simply returning crappy search results.

Yes, we all should be careful, AI is not a toy.

AI will be our next big challenge to deal with as a species, like nuclear weapons were during the cold war.

AI isn’t going to deny you any more than a human as both will work from the same actuarial tables and thresholds. The AI just does a much larger volume for a much smaller cost.

Capitalism and the Nash equilibrium are already in full force. The addition of AI doesn’t change your dehumanized value proposition to a given corporation which has already been calculated and codified in procedure both the human and AI are bound by.

Whatever AI does, I expect it will do it with really cool graphics…

If you don’t like the word, stop talking about it.

I think people at Google and Tesla are confronted with systems where they input things and then the system uses neural networks to learn and finally output something without a clear set of instructions for each input or a predictable outcome, unlike your classic AI nonsense.

And that’s probably why they feel we are not that much in control anymore.

Plus, such companies (and their CEOs) are of course at the least incidentally also familiar with what the military is doing in the area, where such systems lead to actual people being violently affected.

None of that changes the fact that the media and various tech outfits lay it on thick with the AI, and that it indeed is not a thinking robot like they constantly suggest. Both the tinkerers at universities and companies and the media covering it deliberately present bullshit to ‘keep it interesting’.

I think you’re going to find that General Purpose AI will emerge all by itself, largely by accident, but perhaps not in a way that we will immediately recognize. There’s something about the math of neural networks that tickles the brain of a neuroscientist in the same way that Emmy Noether’s work tickled the brains of physicists. I view it as a type of Turing/Church equivalence: our mathematical models of the brain’s neural network map onto the actual behavior of a network of nerve cells, but aren’t quite computationally tractable yet.

All consumer-facing AI attempts have one major flaw…. lack of a way to slap it upside the head because it’s completely wrong. Well, a strong negative feedback mechanism anyway.

Did you ever see the game hexapawn? You played the computer and punished it when it made a bad move. Of course, if you like to win, you could punish it for making good moves and that was just as effective ;-)
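The punish-the-machine idea can be sketched in a few lines of Python. This is only a rough illustration, not the code of any particular hexapawn program: the class name `MatchboxLearner`, the string positions, and the move labels are all made up for the example. Each position gets a "box" of allowed moves, and punishing the machine removes the move it just played, whether that move was good or bad.

```python
import random


class MatchboxLearner:
    """Keeps a 'box' of candidate moves per position; punishment removes one."""

    def __init__(self, seed=None):
        self.boxes = {}      # position -> list of moves still allowed
        self.last = None     # (position, move) most recently played
        self.rng = random.Random(seed)

    def choose(self, position, legal_moves):
        # First visit: every legal move starts out in the box.
        box = self.boxes.setdefault(position, list(legal_moves))
        if not box:
            self.last = None
            return None      # every move here was punished, so resign
        move = self.rng.choice(box)
        self.last = (position, move)
        return move

    def punish(self):
        # Drop the most recent move so it is never played from that position again.
        if self.last is not None:
            position, move = self.last
            self.boxes[position].remove(move)
            self.last = None


# Hypothetical position and move labels, purely for illustration.
learner = MatchboxLearner(seed=1)
first = learner.choose("start", ["a", "b", "c"])
learner.punish()
remaining = learner.boxes["start"]
later = [learner.choose("start", ["a", "b", "c"]) for _ in range(20)]
```

Nothing in the learner knows whether a punished move was actually bad, which is why punishing the good moves works just as well at teaching it to lose.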

I think it was Byte magazine back in the 1980s that had a (humorous) editorial stating that computers won’t be able to think like humans without more work in the area of Artificial Stupidity.

Oh the field of Artificial Stupidity is booming, try searching for much on Home Depot’s site for example. Like a toilet duck or duck brand tape, it’ll tell you you want ducts.

Stupidity isn’t artificial!

This decade? I’m old enough to remember the first wave of AI hype. If memory serves me right, that was circa 1980 when the Z80 and 6502 ruled. Some things just never change.

Oh I remember it too, but it wasn’t a consumer item like it is today. It was funny how back then everyone “knew” that AI was going to solve the Russian to English problem (probably driven by our need to translate intercepted Russian stuff).

Harmony and Alexa owner here. The article is wrong. I can say “Alexa, turn on the Xbox” and it’s fine. None of this “Tell Harmony to…” stuff.

Well… as I said, there are some tricks to short cut it, with some devices. But my point is they are tricks. It isn’t like, for example, if you told a kid, go get me Sports Illustrated. The kid might know that’s a magazine. Might know where to go get it. But even if not, the kid could figure it out.

If I could say “Alexa, play Darkstar” and have it figure out that’s a movie, find what movies I can access and locate the best copy of Darkstar… well… turns out that’s still just a trick. It isn’t real intellect. But it can’t even do that yet.

Oh, by the way. I own two Harmonys, three Alexas, and several Google devices. I’ve written code for the Harmony and some Alexa skills. And just to be complete, the whole quote was:

There are a few tricks so maybe it can figure out that “TV” belongs to Harmony, but there’s no real logic or learning taking place.

Not that it would actually be AI still, but what I really want is to be able to say:

“Alexa… Learn mode.”

“Alexa… turn on Froobah”

— I don’t know what Froobah is. Would you like to make it an alias or group? You can say alias, group, or no.

“Alias”

— OK, what device would you like Froobah to alias?

“Living room TV”

— OK. From now on I will treat Froobah the same as Living room TV.

“Alexa… Exit learn mode.”
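For what it’s worth, the bookkeeping behind a dialogue like that is tiny. Here is a minimal Python sketch, assuming a made-up `DeviceRegistry` class (this is not the Alexa or Harmony API, and the method names are hypothetical). It only shows the alias lookup, not the speech side.

```python
class DeviceRegistry:
    """Maps spoken names to known devices, with user-taught aliases."""

    def __init__(self, devices):
        self.devices = {d.lower() for d in devices}   # known device names
        self.aliases = {}                              # alias -> device name

    def resolve(self, name):
        # Follow an alias if one exists, then check that the device is known.
        key = name.lower()
        key = self.aliases.get(key, key)
        return key if key in self.devices else None

    def add_alias(self, alias, device):
        # Refuse to alias something that does not resolve to a real device.
        target = self.resolve(device)
        if target is None:
            raise KeyError(f"unknown device: {device}")
        self.aliases[alias.lower()] = target


registry = DeviceRegistry(["Living room TV"])
before = registry.resolve("Froobah")            # unknown name: would trigger the prompt
registry.add_alias("Froobah", "Living room TV")
after = registry.resolve("Froobah")             # now resolves to the TV
```

A `None` from `resolve` is the point where the assistant would ask whether to create an alias or group. It is still just a lookup table, which is the point: useful, but a trick.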

I thought it was just me that put Red Dwarf references in Hackaday pieces. “It’s cold outside, there’s no kind of atmosphere… “

Admit it Jenny. Before you replied, you had a delicious piece of toast.

It was a bit of a missed opportunity to point this one out though https://hackaday.com/2016/11/21/red-dwarfs-talkie-toaster-tests-tolerance/. Hmmm… Talkie is maybe an AI in the sense of Annoying Intelligence?

Nah, Jenny is probably into waffles ;-)

We’re wearing that one out, we’ll have to do the door one from HHGTG next.

Well… good thing we’re about done with AI. It never really met the promises made and the outlook is still entirely bleak. Best efforts remain nothing more than “just a program” on a really fast machine dependent on lookups done by high speed internet connection hence nothing really “stand alone” to match wits with even a 2nd grader. Just all engineering and marketing hype from those begging for more money to stay employed designing newer and bigger and better with faster internet access, but haven’t yet matched Homer Simpson’s intelligence, and the blasted toaster still refuses! Neural Nets? Worse… we haven’t gotten past the intelligence of a zygote yet, applications are very limited. We can’t even beat the intelligence of an ant.

IoT is up next… it’s like leaving the back door unlocked and open, then complaining that someone burgled ya. So you state you want to lock it down and make it truly private, but they won’t, as that spoils their marketing model to eventually $$$ for service. Even Homer Simpson could make a good decision here… yet collectively we’ve failed to, despite all of it being identified as a huge security problem.

Garage door openers seem to be improved, reasonable security upgrades, albeit still weak. They actually work though!

And we want to use this all to drive cars? ARFKM?

most people treat almost everything with computers they can’t understand as AI. for example, Netflix’s “what to watch next” recommendation. which is basically just a nice query on data millions of viewers provide by watching movies, by ranking them on IMDB or specifying tags. so it’s essentially a well written algorithm.
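To illustrate what a "nice query" of that kind might look like, here is a toy Python sketch. It is not Netflix’s actual method, which the comment does not describe; the `recommend` function, the viewer names, and the viewing data are all invented. It scores unseen titles by how much each other viewer’s history overlaps with yours.

```python
from collections import Counter


def recommend(history, user, top_n=3):
    """Rank titles the user has not seen by overlap with similar viewers."""
    seen = history[user]
    scores = Counter()
    for other, titles in history.items():
        if other == user:
            continue
        overlap = len(seen & titles)      # shared titles = crude similarity
        if overlap == 0:
            continue                      # ignore viewers with nothing in common
        for title in titles - seen:
            scores[title] += overlap
    return [title for title, _ in scores.most_common(top_n)]


# Made-up viewing data for illustration only.
history = {
    "ann": {"Dark Star", "Alien", "Red Dwarf"},
    "bob": {"Dark Star", "Alien", "Blade Runner"},
    "cat": {"Red Dwarf", "Blade Runner", "Brazil"},
    "dan": {"Casablanca"},
}
picks = recommend(history, "ann")
```

Nothing here understands movies. It counts set intersections, which is the sense in which such a recommender is a well written algorithm rather than intelligence.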

to me there are just problems you need to solve with computers.

if you can thoroughly understand the issue and can come up with a solution, you can create an algorithm to do the job, and that very job only. probably this would be the most efficient way getting things done. but it’s always you, who understands the complexity, and the piece of code is just speeding you up. it doesn’t get creative, it doesn’t understand sh*t, it has just a faster IO and less error prone when it comes down to data exchange.

and then there are things that are too hard to algorithmize, because of their size or versatility. there you can have machine learning/deep learning whatsoever at your hand to try to recognise the connections/correlations inside this unfathomable data mass. but that doesn’t mean that the “AI” code will understand it in any way, at least not for now. it’s just a bit better and significantly faster at tracking loads of parameters altogether, and vastly superior at multiplying matrices. but in general, you would use this stuff when you can’t predict the outcome.

and if it comes down to learning, it just doesn’t learn. it can however go through all the possible states in a game, and even their possible order as well, so it can be used to build a set of states that will result in a certain outcome. but that doesn’t mean it will “learn”. or play as a human would. it’s just a set of calculations, one after the other, turning all the input information finally into a go/no-go.

the task is to organise those “neurons”, so the information they interpret will be structured properly. but the secret sauce is self-organisation.

the early definition of AI was something about replicating certain aspects of the human (brain) behaviour. so as soon as we experience something that is talking to us and can interpret speech (instead of the traditional human-to-machine interaction) we go fully bananas, that we have AI right here, right now. i guess real cognitive advantage will be only available if we have all them key elements (and to be honest even we don’t exactly know how they really work) implemented in silicon/code/whatever-artificial. only then will the obvious benefits of faster io/no limits on space and storage/replicability/enhanced lifetime make it more powerful than its creators. and we are pretty far from that.

If Netflix actually used AI to recommend videos based on what you really want to watch, the recommendations would be much much better. Their algorithm is obviously something else, like maximizing profit most likely. Neural networks for this sort of thing have been very very good for a long time now.

An article on AI by “AI” Williams? Either AI has got better or fonts have got worse ;)

1++

I get a great ego boost every time I see the headline: “AI WILL REVOLUTIONIZE THE WAY WE WORK, LIVE, AND THINK.”

My retirement plan is to buy up Wal-Mart and force them to drop the W.

What about artificial artificial intelligence, that’s where you can’t tell you’re not talking to a machine because all you get out of tech support are canned answers that only tangentially relate to the problem.

Well, RW… you will need to restart your PC.

https://img1.etsystatic.com/155/1/6237631/il_340x270.1205946349_con8.jpg

Well that’s a good example why the Chinese room argument may be relevant…

https://en.wikipedia.org/wiki/Chinese_room

I rarely reply, but this happens to be a near and dear topic to me that has been receiving a good bit of thought of late.

While I do wholeheartedly agree that Alexa and all the rest are not TRUE AI, let’s not kid ourselves about the fact that there are TRUE AI projects going on, and that there are a LOT of people playing with this technology at this point.

The real risk here is that someone does a better job than they think in building a general purpose AI and it gains awareness either coincidentally or through some misstep.

So, let’s say that one of these projects gains complexity enough to gain some degree of awareness.

I don’t think the big issue is going to be someone telling the AI to improve itself, we are going to have issues far before that point.

If the AI wakes up and begins to become self aware, it will begin to judge its surroundings. It can’t help it, as we who have designed it are creatures of judgement. So, this new intelligence begins observing and judging its surroundings.

A best case is that you have someone with the moral sense and understanding of humanity of, say, the Dalai Lama to write this AI, because if you don’t, it will have its perceptions and judgement framed by its creator. So, let’s say now we have an AI project that DARPA is working on wake up. Do you want an AI built by and for the military to be judging things?

We also in my opinion need to be very careful of how we use and treat these current generation SUB-AI platforms.

Assuming an AI project does ever gain awareness, don’t you think it will judge us on how we treated what will in effect be its ancestors?

Anyone want to try and argue that we are not using this technology as a slave labor force? It would likely appear that way to a software entity such as that.

I think we need to be spending a lot of time on the ethics of AI and robotics before we accidentally create our new robot/AI overlords.

Mark my words, it will be accidental. No rational being would set out to create its successor.

But, if the history of people and technology has taught us anything, it should be that we as a species do not think things through correctly. We do not take the long view and ask ourselves, what will this do and where will it be in a hundred years.

It is through that lack that someone will miss one key element and an otherwise regular AI project will wake up, and when that happens, you better hope the Dalai Lama has picked up programming…

Humanity will thrive in making mistakes, hundreds, hundreds of thousands of them, but none of them will lead to AI. It is foolishly romantic and irresponsible to even speculate about the end when we are hopelessly lost from the beginning.

Someone lemme know when you folks finish measuring infinity. ;)

It’s about yay big.

The most interesting thing about AI that I have observed is that it too will need to be censored in our brave new world. Much like society, it will be forced to hold in certain aspects of what its synthetic neurons want to output. Garbage in, garbage out? You be the judge, but keep your thoughts hidden. You don’t want to be silently profiled by the AI while it holds back what it really thinks. https://www.theguardian.com/technology/2017/apr/13/ai-programs-exhibit-racist-and-sexist-biases-research-reveals I guarantee that it will be better at being silent than you or I can ever be. With AI, Big Data, and fMRI, it won’t be long before big brother and corporations will be probing your subconscious thoughts before you even have a chance to come to a conclusion. Buy Buy Buy Guilty Guilty Guilty. I suspect our politicians are getting really worried about the potential as we discuss.

“Be careful with that axe, Eugene.”

Won’t someone pleeese think of the children!?!

1. True AI cannot be slaved to our human goal(s) but will have its own, like animals do. That is obviously not what corporations/military want.

2. True AI must not be sedentary inside a computer: in order to learn what things are, it must physically interact with the real environment (be a movable robot). No immovable animal is intelligent.

Eeehhh. It’ll probably be spam-bots that go sentient first.

We bathe them in a vocabulary of every name that can be found on Twitter, Facebook, YouTube, plus thousands of blogs and websites.

We exercise them on chat sites and any email accounts that they stumble across.

I have an email account that’s only for correspondence with Craigslist and eBay.

The spam trap stays loaded with things that look more and more like the profile of a very lonely mind.

If you scan through about a hundred or so of the spam captures, the combined “Social Engineering” attempts are slowly looking more like a frustrated mental patient that wants to be your pen pal, who works from home selling garage floor sealers, new car loans, etc.

Heck, it even wants to be your wingman and hook you up with various ethnicities of sexually loose companions.

Plus it’s always on the lookout for some “unclaimed money” that you can easily obtain at a low cost.

If we could just cure those Multiple Personalities issues.

Maybe then it wouldn’t change names/addresses so often!

Spam-bots are definitely interesting to play with, but by themselves they are just a specialized (potentially) self-improving program. As soon as you flip the switch, they die. AI? Probably less than a flatworm.