Today it is easier than ever to learn how to program a computer. Everyone has one (and probably has several) and there are tons of resources available. You can even program entirely in your web browser and avoid having to install programming languages and other arcane software. But it wasn’t always like this. In the sixties and seventies, you usually learned to program on computers that didn’t exist. I was recently musing about those computers that were never real and wondering if we are better off now with a computer at every neophyte’s fingertips or if somehow these fictional computing devices were useful in the education process.

Back in the day, almost no one had a computer. Even if you were in the computer business, having a computer all your own was almost unheard of. In the old days, computers cost money: a lot of money. They required special power and cooling. They needed a platoon of people to operate them. They took up a lot of space. The idea of letting students just run programs to learn was ludicrous.

The Answer

If you look at computer books from that time period, they weren’t aimed at a particular computer that you could buy. That might be surprising at first glance since at the time there were not many different kinds of computers. Instead, the books focused on some made-up computer. There were two reasons for this. First, it ensured that as many people as possible could benefit from the book — the market was a lot smaller than it is today. Second, the made-up computer was usually set up to be easier to understand than the real computers of the day.

This last point is important because you were not going to be allowed to run your programs anyway. You'd test your ideas by pretending to be the computer and working out by hand what was going on. Your instructor (or, more likely, a grad student) would do the same thing to determine whether you got the right answer. Trying to simulate the exact hardware of a specific CPU didn't make much sense.

However, these systems all had one thing in common: they forced you to really understand what the computer was doing at a low level. You couldn't write a single line of code to print the sum of two numbers. You had to code the operations to load the numbers, code the addition, and then understand where the result went. You had to be the computer.

You could argue that some of that is just learning assembly language or, at least, a low-level language. However, as you'll see, with most of these systems (at least in their day) you were the computer, so you had to truly understand the processor's workflow.
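
To make that concrete, here is a minimal Python sketch of a toy single-accumulator machine. The opcodes (`LOAD`, `ADD`, `STORE`, `HALT`) and the cell addresses are hypothetical and don't belong to any of the machines below; the point is only that “add two numbers” takes three separate steps, each of which you once had to trace by hand.

```python
# A toy single-accumulator machine (hypothetical opcodes, not any real CPU).
# Program and data share one memory, as they did on these teaching machines.

def run(memory, trace=False):
    """Execute (opcode, address) pairs starting at cell 0 until HALT."""
    acc = 0   # the accumulator: the only place arithmetic happens
    pc = 0    # program counter: address of the next instruction
    while True:
        op, addr = memory[pc]
        pc += 1
        if op == "LOAD":      # copy a memory cell into the accumulator
            acc = memory[addr]
        elif op == "ADD":     # add a memory cell to the accumulator
            acc += memory[addr]
        elif op == "STORE":   # copy the accumulator back to memory
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at {pc - 1}")
        if trace:
            print(f"{op:5} {addr:2}  acc={acc}")


# "Print the sum of two numbers" becomes: load, add, store.
memory = {
    0: ("LOAD", 10),   # acc <- mem[10]
    1: ("ADD", 11),    # acc <- acc + mem[11]
    2: ("STORE", 12),  # mem[12] <- acc
    3: ("HALT", 0),
    10: 17,            # first operand
    11: 25,            # second operand
    12: 0,             # the result goes here
}
run(memory, trace=True)
print(memory[12])  # 42
```

With `trace=True`, each step prints the accumulator, which is exactly the bookkeeping a student did on paper.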

TUTAC

My first exposure to this technique was from a book I got from a local library in the 1970s. It was an old book even then (from 1962). In fact, there were a few versions of the book with slightly different titles, but the one I read was Basic Computer Programming by [Theodore Scott] (Computer Programming Techniques was the title of a later edition). Keep in mind this was years before BASIC, the computer language, so the title just meant “elementary.” This was an unusual book because it was a “TutorText,” or what we would now call a programmed text: a “Choose Your Own Adventure” of computer programming.

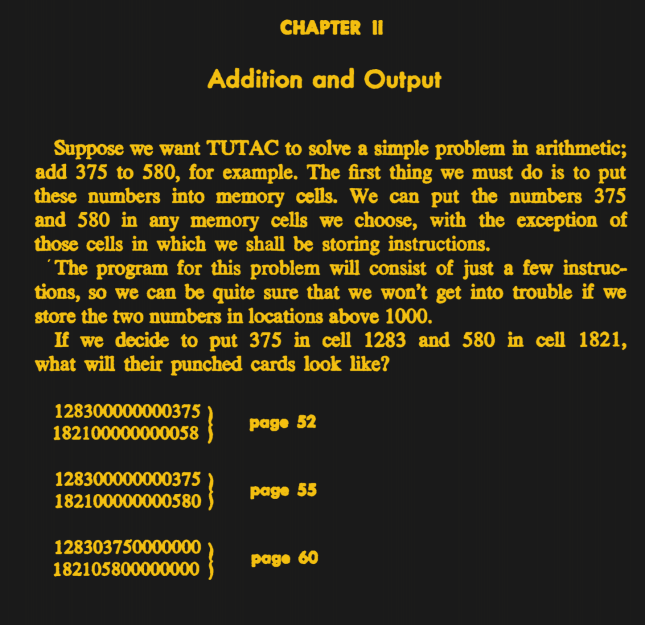

As you can see, you’d read a bit of text, look at a problem, and, based on your answer, jump to another page. If you were wrong, the page would explain what you did wrong and usually offer another problem to see if you had it figured out. If you were right, you’d move on to a new section. You can read the whole book online and, surprisingly, if you were trying to learn to program from zero, I would highly recommend it. Sure, some of the content is way out of date, but the concepts are solid. The programmed text is probably best read on paper, so maybe this is one of those PDFs you print out. Or find a hardcopy of the book, which isn’t impossible.

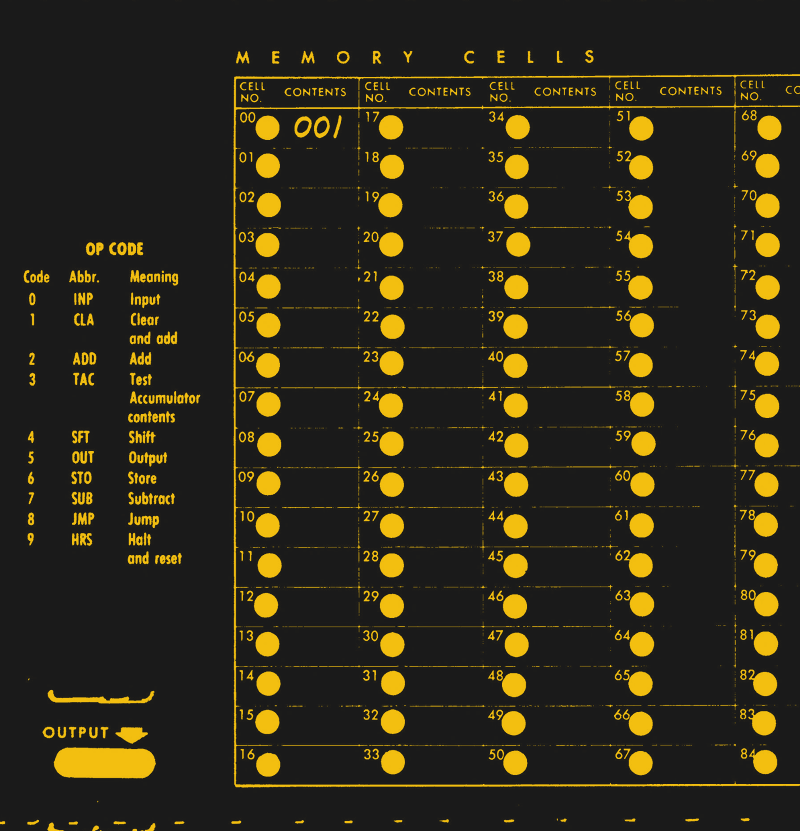

The inside covers of the book described the mythical machine, TUTAC. It had many features that became uncommon very early in the computer age. For example, TUTAC used decimal numbers. Indexing worked by having the program rewrite the target addresses of other instructions in the program, which was common before index registers became standard. Fortunately, the scan of the book includes the inside covers, so if you really want to read the book in PDF, you are set.
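
Here is a minimal Python sketch of that indexing trick. The machine is hypothetical, not TUTAC’s real instruction set: each word is a decimal number equal to the opcode times 1000 plus a three-digit address, and the opcode values and helper names (`word`, `run`) are made up for illustration. The program sums a five-entry table by adding 1 to its own ADD instruction on every pass, which works precisely because an instruction is just a decimal number.

```python
# Hypothetical decimal word format: opcode * 1000 + address (000-999).
HALT, LOAD, ADD, STORE, SUB, JPOS = 0, 1, 2, 3, 4, 5


def word(op, addr):
    """Encode an instruction as a single decimal number."""
    return op * 1000 + addr


def run(mem):
    acc, pc = 0, 0
    while True:
        op, addr = divmod(mem[pc], 1000)  # decode the decimal word
        pc += 1
        if op == HALT:
            return mem
        elif op == LOAD:
            acc = mem[addr]
        elif op == ADD:
            acc += mem[addr]
        elif op == STORE:
            mem[addr] = acc
        elif op == SUB:
            acc -= mem[addr]
        elif op == JPOS:          # jump if the accumulator is positive
            if acc > 0:
                pc = addr
        else:
            raise ValueError(f"bad opcode {op} at {pc - 1}")


TABLE, SUM, ONE, COUNT = 100, 200, 201, 202
program = [
    word(LOAD, SUM),     # 0: acc <- running total
    word(ADD, TABLE),    # 1: acc += table[i]  (this address field gets rewritten)
    word(STORE, SUM),    # 2: save the running total
    word(LOAD, 1),       # 3: fetch the ADD instruction itself as a number
    word(ADD, ONE),      # 4: +1 bumps its address to the next element
    word(STORE, 1),      # 5: write the modified instruction back
    word(LOAD, COUNT),   # 6: count down the remaining elements
    word(SUB, ONE),      # 7
    word(STORE, COUNT),  # 8
    word(JPOS, 0),       # 9: loop while elements remain
    word(HALT, 0),       # 10
]
mem = [0] * 1000
mem[:len(program)] = program
mem[TABLE:TABLE + 5] = [3, 1, 4, 1, 5]
mem[ONE] = 1
mem[COUNT] = 5
run(mem)
print(mem[SUM])  # 14
print(mem[1])    # 2105: the ADD now points just past the table
```

As it stands the program isn’t re-runnable: the ADD is left pointing past the table, so a second run would first need that instruction reset, a chore self-modifying programs had to handle themselves.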

If you are one of the few people who’ve heard of TUTAC, there’s a simulator in Python. I was frankly surprised anyone else remembered this one, but there are apparently at least two of us!

MIX

[Donald Knuth] wrote what became the essential set of books for aspiring programmers of the day, The Art of Computer Programming. The first volume, from 1968, introduced MIX, a made-up machine that is a bit more modern than TUTAC. Because the books were so widely used, many people learned MIX. There are quite a few MIX emulators today, including a GNU program.

[Knuth] planned seven volumes in the series but at first completed only three. Part of the fourth volume appeared in 2011, and drafts of other parts of the fourth volume are downloadable.

Even MIX eventually looked dated, so [Knuth] later proposed MMIX, a modern RISC architecture. In addition to emulators, there is at least one contemporary FPGA implementation of MMIX, so it really doesn’t qualify for this list.

MIX can use binary or decimal numbers, and its byte size is deliberately left open: a byte must hold at least 64 distinct values but no more than 100, so it might be six binary bits or two decimal digits. Code meant to work on either kind of machine therefore can’t store a number larger than 63 in a byte. There are nine registers and a fairly small instruction set. Like TUTAC, MIX programs often modify themselves, especially to return from subroutines, since the architecture doesn’t include a stack.
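
Here is how a subroutine can return without a stack. In MIX, a jump leaves the address of the following instruction in the J register, and Knuth’s subroutines typically begin with `STJ` to store that address into the address field of their own exit `JMP`. The Python sketch below models only that mechanism; the two-element instruction lists and the simplified `LDA`/`ADD`/`STA`/`HLT` behavior are hypothetical stand-ins, not real MIX encoding or MIXAL.

```python
def run(mem):
    """Tiny MIX-flavored interpreter: only enough to show STJ-based returns."""
    a = 0    # rA, the accumulator
    j = 0    # rJ, set by every jump to the address after the jump
    pc = 0
    while True:
        op, addr = mem[pc]
        pc += 1
        if op == "LDA":
            a = mem[addr]
        elif op == "ADD":
            a += mem[addr]
        elif op == "STA":
            mem[addr] = a
        elif op == "STJ":
            # Self-modification: overwrite the address field of the
            # instruction at `addr` with the saved return address.
            mem[addr][1] = j
        elif op == "JMP":
            j, pc = pc, addr   # remember where we came from, then jump
        elif op == "HLT":
            return mem
        else:
            raise ValueError(f"unknown opcode {op!r}")


mem = {
    0: ["LDA", 20],     # rA <- 7
    1: ["JMP", 10],     # call ADD5 (rJ <- 2)
    2: ["JMP", 10],     # call ADD5 again (rJ <- 3)
    3: ["STA", 21],     # save the result
    4: ["HLT", 0],
    10: ["STJ", 12],    # ADD5 entry: patch our own exit jump with rJ
    11: ["ADD", 22],    # rA <- rA + 5
    12: ["JMP", None],  # exit; like MIX's "JMP *", the address is filled in later
    20: 7,
    21: 0,
    22: 5,
}
run(mem)
print(mem[21])  # 17
print(mem[12])  # ['JMP', 3]
```

Because the return address lives inside the code, this subroutine can’t call itself: a nested call would overwrite the only copy of the outer return address.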

As an interesting point of trivia, MIX isn’t an acronym, but a Roman numeral. MIX is 1009 and [Knuth] averaged the model numbers of common computers of the day to arrive at that number.
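
The Roman-numeral reading is easy to check. This small Python function applies the usual subtractive rule (a smaller numeral written before a larger one is subtracted, so IX is 9) and confirms that MIX is 1000 + 9 = 1009. It only decodes the numeral; it doesn’t reconstruct which model numbers went into [Knuth]’s average.

```python
VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def roman_to_int(numeral: str) -> int:
    """Decode a Roman numeral using the subtractive rule (e.g. IX = 9)."""
    total = 0
    for i, ch in enumerate(numeral):
        value = VALUES[ch]
        # A numeral smaller than the one after it is subtracted, not added.
        if i + 1 < len(numeral) and value < VALUES[numeral[i + 1]]:
            total -= value
        else:
            total += value
    return total


print(roman_to_int("MIX"))   # 1009
print(roman_to_int("MMIX"))  # 2009
```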

Blue

We’ve talked about Blue before. It had a slightly different purpose than TUTAC and MIX. Part of [Caxton Foster’s] 1970 book about CPU design, it was really a model for how to build a CPU out of logic gates. However, it did have to be programmed, and it had an instruction set reminiscent of a DEC or HP machine of that era.

There is at least one software version of Blue out there, plus my own FPGA implementation. I’m particularly fond of the front panel design (see video).

My version of Blue is enhanced in several important ways. As published, the instruction set is pretty rudimentary, and the machine cycles account for things like writing data back to core memory; reading core was destructive, so this was necessary. As far as I can tell, no one built Blue while the book was in common use: the schematics contained at least one mistake, and several easy optimizations were never made.
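
Blue’s cycles had to budget time for that write-back step because reading core memory destroys what was stored. Below is a minimal Python model of the read-then-restore sequence; the `CoreMemory` class and its methods are hypothetical and say nothing about Blue’s actual timing or word size, only the ordering of the two halves of a read.

```python
class CoreMemory:
    """Toy model of destructive-readout core memory (hypothetical API)."""

    def __init__(self, size):
        self.words = [0] * size

    def sense(self, addr):
        """Read half of a cycle: returns the word but leaves the cores cleared."""
        value = self.words[addr]
        self.words[addr] = 0   # reading drives the cores to 0, destroying the data
        return value

    def write(self, addr, value):
        """Write half of a cycle: set the cores to a new value."""
        self.words[addr] = value

    def read(self, addr):
        """A complete read cycle: destructive sense, then restore."""
        value = self.sense(addr)
        self.write(addr, value)   # the write-back step the machine cycle must include
        return value


core = CoreMemory(16)
core.write(5, 1234)
print(core.read(5), core.read(5))    # 1234 1234: restored after every read
print(core.sense(5), core.words[5])  # 1234 0: skip the write-back and the word is gone
```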

Blue is also not an acronym but was named for the color of the imaginary cabinet the nonexistent computer resided in.

Little Man Computer

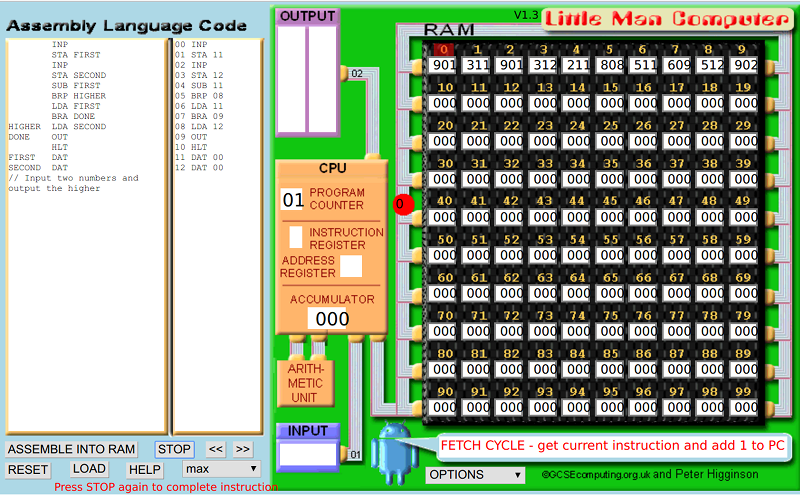

Another mid-’60s make-believe computer was the Little Man Computer. [Dr. Stuart Madnick] named it for its central analogy: the CPU is a mail room with a little man inside carrying out certain actions. There are quite a few simulators for it today, including one that uses a spreadsheet.

If you are a dyed-in-the-wool computer type, it is hard to remember that there was a time when you didn’t understand how data passed from memory to an execution unit to an arithmetic unit and then on to more storage. The little man analogy helps, and many of the modern simulators animate the data flow, which makes it even easier to follow.

The instruction set is very minimal — just ten instructions in most variations — yet you can write some significant programs. There’s the first of a series of tutorials in the video below.
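
To show how little machinery those ten instructions need, here is a small Python sketch of an LMC interpreter. It follows the commonly published numeric codes (1xx ADD, 2xx SUB, 3xx STA, 5xx LDA, 6xx BRA, 7xx BRZ, 8xx BRP, 901 INP, 902 OUT, 000 HLT), but variants differ on details such as overflow and negative results; here the accumulator is simply an unbounded Python integer, and the function name `run_lmc` is hypothetical.

```python
def run_lmc(mailboxes, inputs, max_steps=10_000):
    """Run a Little Man Computer program; return the list of outputs."""
    mem = list(mailboxes) + [0] * (100 - len(mailboxes))  # mailboxes 00-99
    inbox = list(inputs)
    outbox = []
    acc, pc = 0, 0
    for _ in range(max_steps):
        instr = mem[pc]
        pc += 1
        op, addr = divmod(instr, 100)   # first digit: opcode, last two: mailbox
        if instr == 0:                  # HLT
            return outbox
        elif op == 1:                   # ADD
            acc += mem[addr]
        elif op == 2:                   # SUB
            acc -= mem[addr]
        elif op == 3:                   # STA
            mem[addr] = acc
        elif op == 5:                   # LDA
            acc = mem[addr]
        elif op == 6:                   # BRA: always branch
            pc = addr
        elif op == 7:                   # BRZ: branch if the accumulator is zero
            if acc == 0:
                pc = addr
        elif op == 8:                   # BRP: branch if zero or positive
            if acc >= 0:
                pc = addr
        elif instr == 901:              # INP: take the next input
            acc = inbox.pop(0)
        elif instr == 902:              # OUT: send the accumulator to the outbox
            outbox.append(acc)
        else:
            raise ValueError(f"bad instruction {instr:03d} at mailbox {pc - 1:02d}")
    raise RuntimeError("step limit reached (infinite loop?)")


countdown = [
    901,  # 00      INP        acc <- input
    902,  # 01 LOOP OUT        output acc
    206,  # 02      SUB ONE    acc <- acc - 1
    705,  # 03      BRZ DONE   stop when acc hits zero
    601,  # 04      BRA LOOP
    0,    # 05 DONE HLT
    1,    # 06 ONE  DAT 1
]
print(run_lmc(countdown, [3]))  # [3, 2, 1]
```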

CARDIAC

CARDIAC (CARDboard Illustrative Aid to Computation) from Bell Labs was the least imaginary computer on this list. Aimed at high school students in the late 1960s, CARDIAC was a computer made from cardboard. You assembled it and used a pencil to execute programs, moving data between registers. Like Little Man, CARDIAC has only ten instructions.

I’ve done an FPGA version of CARDIAC as well, and there are emulators including offline versions and this online one. I even wrote a spreadsheet version of CARDIAC, inspired by a similar project for Little Man.

CARDIAC was highly simplified and, like TUTAC, used decimal numbers and other conveniences that help a human interpret its programs. You can see an overview of CARDIAC in the video.
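
To show how those ten instructions fit together, here is a small Python sketch of a CARDIAC interpreter using the commonly documented opcode digits (0 INP, 1 CLA, 2 ADD, 3 TAC, 4 SFT, 5 OUT, 6 STO, 7 SUB, 8 JMP, 9 HRS). CARDIAC’s JMP leaves a return jump (8 followed by the return address) in cell 99; the example subroutine returns by copying that jump into its own last cell, one workable convention among several. The simplifications are assumptions: the accumulator is treated as four digits only inside SFT, nothing checks for overflow, and `run_cardiac` and its parameters are hypothetical.

```python
def run_cardiac(program, inputs, start, max_steps=10_000):
    """Run a CARDIAC program (dict of cell -> value); return the printed output."""
    mem = [0] * 100
    for addr, value in program.items():
        mem[addr] = value
    cards = list(inputs)
    output = []
    acc, pc = 0, start
    for _ in range(max_steps):
        op, addr = divmod(mem[pc], 100)  # one opcode digit, two address digits
        pc += 1
        if op == 0:                      # INP: read the next card into a cell
            mem[addr] = cards.pop(0)
        elif op == 1:                    # CLA: clear accumulator and add (load)
            acc = mem[addr]
        elif op == 2:                    # ADD
            acc += mem[addr]
        elif op == 3:                    # TAC: jump if the accumulator is negative
            if acc < 0:
                pc = addr
        elif op == 4:                    # SFT xy: shift left x digits, then right y
            left, right = divmod(addr, 10)
            sign = -1 if acc < 0 else 1
            magnitude = (abs(acc) * 10 ** left) % 10_000  # digits past four are lost
            acc = sign * (magnitude // 10 ** right)
        elif op == 5:                    # OUT: print a cell
            output.append(mem[addr])
        elif op == 6:                    # STO
            mem[addr] = acc
        elif op == 7:                    # SUB
            acc -= mem[addr]
        elif op == 8:                    # JMP: leave a return jump in cell 99
            mem[99] = 800 + pc
            pc = addr
        else:                            # 9, HRS: halt (and reset)
            return output
    raise RuntimeError("step limit reached")


program = {
    20: 40,   # 040 INP 40  read x from the next card into cell 40
    21: 830,  # 830 JMP 30  call the subroutine; cell 99 becomes 822
    22: 641,  # 641 STO 41
    23: 541,  # 541 OUT 41
    24: 920,  # 920 HRS 20  halt
    30: 199,  # 199 CLA 99  subroutine entry: fetch the return jump...
    31: 634,  # 634 STO 34  ...and plant it as our last instruction
    32: 140,  # 140 CLA 40  acc <- x
    33: 410,  # 410 SFT 10  shift left one digit: acc <- 10 * x
    34: 0,    # the return jump is written here at run time
}
print(run_cardiac(program, [7], start=20))  # [70]
```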

You can sometimes buy a CARDIAC as new old stock, or you can print your own using these scans or this recreated version.

What About Today?

Other than a trip down memory lane, what’s the value in all this? Hackaday readers might be a bit jaded: I would guess nearly all regular readers understand, to a fair approximation, what’s going on inside a computer. But when you are just learning, that can be a hard thing to get into your head. If you learned on these kinds of computers, or on real computers at a low level, you had to figure it out or give up.

Today, you can create all sorts of great stuff by just stringing together high-level components and gluing it all together with languages that insulate you from minutiae like how the computer stores numbers, does indexing, computes effective addresses, and handles calls and returns. For productivity, that’s a good thing.

In the movie The Karate Kid (the original; I haven’t seen the remake), the instructor has the student do seemingly mundane tasks that later translate into martial arts skills. “Wax on… wax off.” Maybe struggling to pretend to be a computer ought to be part of everyone’s learning experience.

Photo Credits:

Art of Computer Programming [Chris Sammis] CC BY-SA 2.0

Excellent article! Would it be possible to get a “thumbs up” button so I don’t feel the need to litter the comments section with thanks?

Anyway, I do believe this TutorText method would be great for demystifying the idea of a computer, forcing you to really appreciate what it does.

Back in the day of batch jobs on a UNIVAC, I wound up going through programs manually on paper to debug them since it might take 24 hours to get a program run once.

Oooh I did a lot on Exec 8 myself. And then we got the demand terminals and thought we were living in the future. I still have some of the old Univac books.

Games like SHENZHEN I/O and TIS-100 help capture that virtual computer feeling.

I’m surprised I haven’t seen any of the SHENZHEN I/O micros implemented in an FPGA.

TIS-100 is fantastic.

I’ve wandered down the road of trying to implement it too many times, only to realize I already have too little free time.

Aha, this calls for a reference to one of the classics…

http://dilbert.com/strip/1996-02-20

Dilbert 2-20-1996 ‘Nuff Said.

http://dilbert.com/strip/1996-02-20

Dang. Hit “Post” just as I saw RW beat me to it. Well, “great minds” and all…

The “Nand2Tetris” course and book (published by MIT Press) offer an interesting first-principles approach to teaching computer science. It’s in line with Caxton Foster’s Computer Architecture. The course is available on Coursera, and it’s free to audit. The book is sold as “The Elements of Computing Systems” in a variety of formats via Amazon. http://nand2tetris.org/

That’s the Hack hardware platform with the Hack CPU. It’s a minimal 16-bit, two-register architecture created for educational purposes, and it’s fun to learn.

Yes, back in my day we had to prove we could write “programs” in pseudo-code (vending machine, traffic lights etc) before we got the chance to run anything on the big mainframe at the local Uni. Then you had to transfer your lines of BASIC to a batch of scrap/punch cards that would be sent off by snail mail. With a bit of luck, you’d get a printout of the results within a week. Or a run-time error. Good times!

The header image had me thinking this was going to be an article about like, literal hydraulic macroeconomics:

https://en.wikipedia.org/wiki/Hydraulic_macroeconomics

Speaking of, didn’t some early RAM elements use mercury (which is decently conductive) in elbow-tubes which would be forced forward/back to short contacts (or not) for a 1/0 using pressure from speakers on either end? Or am I remembering that wrong?

As far as I know, no. What I think you are thinking of is mercury delay lines. There was a transducer at each end of a straight pipe and waves in the mercury corresponded to bits in a shift register.

https://hackaday.com/2016/03/08/thanks-for-the-memories-touring-the-awesome-random-access-of-old/

https://hackaday.com/2014/01/07/acoustic-delay-line-memory/

Ah, yeah. That’s probably it.

Sounds like delay-line memory, but I could be wrong.

https://en.wikipedia.org/wiki/Delay_line_memory

Yup, they’re called mercury delay lines

https://en.wikipedia.org/wiki/Delay_line_memory#Mercury_delay_lines

I also first learned to code using the TutorText book. Decades later, I had forgotten the author, publisher, and title, so it was hard to find. But I found it after trying different things on Google Books. Now I have a couple of copies. I coded in MIX in early college classes. We used an interpreter written in Fortran with code entered via punched cards. I would also recommend this kind of thing for all programmers. Another way to understand the low-level structure of computers is the book Code by Charles Petzold. It shows how to build a computer with just switches and relays. I really enjoyed reading this and will have to investigate the computers I am not familiar with.

I wonder how many of us owe Mr. Scott for those books? I have found what I learned has served me well. I’ve often thought about trying to do a modern tutor text for beginning programmers.

I should have looked years ago. I, too, learned from these two books, way back in 1973, that I could understand computers. I wish the second one, Computer Programming Techniques, were online.

I wondered whether Rekursiv ever actually existed… http://www.cpushack.com/CPU/cpu7.html

Would it be possible to write one with just in, out, &&, ||, ¬, and GOTO? Of course as a binary system.

Probably, that’s pretty close to Brainfuck, which has been implemented in FPGA.

The paperclip computer isn’t actually a computer. It’s more like CARDIAC, because the operator has to do manual operations to move and process the data. https://hackaday.com/2015/10/19/diy-computer-1968-style/

Looks like I missed the hard part of computer programming. My first computer was a Commodore PET, when I was 6 years old: BASIC and a few hours of unrestricted access per week. Most elementary students and many teachers didn’t use the PET for anything other than playing games or writing text reports. Back in my day, you could count on one hand the number of people in school who knew how to program at all. I learned BASIC and later LOGO.

Same here. I was maybe about 7 years old. I found an insert in an electronics magazine about something called BASIC for the Apple II. I learned most of the examples in the printout, then started writing modified examples on paper. A few weeks later I had my first encounter with a real computer. I was kind of prepared :)

I always enjoy hearing Richard Feynman talk, and this talk includes (among other things) his description of the little man computer: https://www.youtube.com/watch?time_continue=349&v=EKWGGDXe5MA

Cool stuff. Foster had a nice later book on real-time and control programming as well. Nice to see a FriendlyARM Mini2440 with 3.5″ LCD :-) How much RAM? (The Raspberry Pi of China long before there was an RPi).

In Poland in the ’80s there was a relatively cheap educational computer, the MIK CA80, based on Z80 clones. It was sold as a DIY kit and contained an 8-digit display, a CPU, some ROM and RAM, a hexadecimal keypad, and some connectors for peripherals. No normal keyboard, no normal monitor: simply something between a programmable calculator, a programmable industrial controller, and a microcontroller evaluation board that you could use without a host computer :)

In the UK, the ‘O’-level Computer Studies courses in the late 1970s and early 1980s used textbooks largely written by ICL (International Cock-ups Limited ;-) ). They had kids write programs in a pseudo-assembly language called CESIL.

The intention was that people wrote CESIL programs on punched cards and then posted them to a ‘local’ ICL 1900 mainframe, and a week later you’d get your standard “syntax error in line x” report, which you repeated ad nauseam until maybe you got your simple CESIL program to run.

Of course, by the time we were doing our Computer Studies ‘O’-level, CESIL was dead and we did everything on our ZX Spectrums, BBC Micros, Commodore 64s, Orics, and the odd Dragon 32. Several of us wrote editor-interpreters for CESIL. My ZX Spectrum version’s UI was inspired by having read about the new Macintosh computers and was accessed via ‘icons’ at the bottom of the screen.

Strangely, there are still interpreters for CESIL.

https://en.wikipedia.org/wiki/CESIL

Al – Which edition of the Foster book is your Blue implementation based on? I have the first edition and there are a lot of weirdnesses – several of the instructions OR bits into existing values rather than setting them and the IN and OUT instructions use different halves of the accumulator. The scan from your article doesn’t seem to have those “features” and is expressed in hex rather than octal.