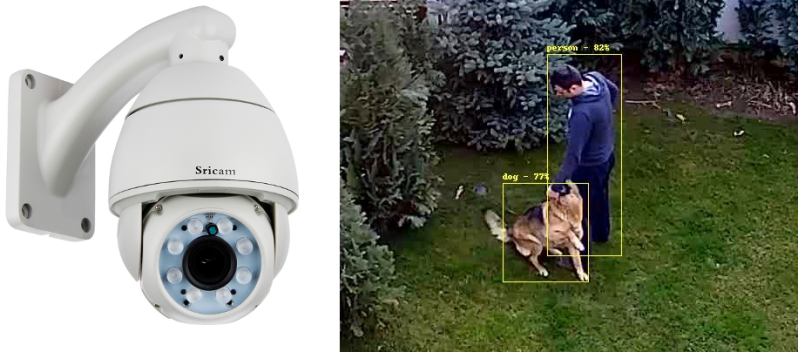

AI is quickly revolutionizing the security camera industry. Several manufacturers sell cameras that use deep learning to detect cars, people, and other events. These smart cameras are generally expensive, though, compared to their “dumb” counterparts. [Martin] was able to bring these detection features to a standard camera with a Raspberry Pi and a bit of ingenuity.

[Martin’s] goal was to capture events of interest, such as a person on screen or a car in the driveway. The data for each event would then be published to an MQTT topic, along with some metadata such as the confidence level. OpenCV is generally how these pipelines start, but [Martin’s] camera would only stream RTSP over UDP, not over TCP as OpenCV requires. To work around this, [Martin] captures the video stream with FFmpeg. The deep learning magic is handled by the darkflow library, which is itself based on Google’s TensorFlow.
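To make the FFmpeg workaround concrete, here is a minimal sketch, not [Martin’s] actual code, of pulling an RTSP stream over UDP with FFmpeg and reading raw frames from its stdout in Python. It assumes the `ffmpeg` binary is on the PATH; the function names, the 640×360 output size, and the 5-second sampling interval are illustrative choices.

```python
import subprocess
from typing import BinaryIO, Iterator, List


def build_ffmpeg_cmd(rtsp_url: str, width: int, height: int,
                     interval_s: int = 5) -> List[str]:
    """FFmpeg command that pulls the camera's RTSP stream over UDP and writes
    one raw BGR24 frame to stdout every `interval_s` seconds."""
    return [
        "ffmpeg",
        "-rtsp_transport", "udp",        # the camera only offers RTSP over UDP
        "-i", rtsp_url,
        "-vf", f"fps=1/{interval_s},scale={width}:{height}",  # sample + fix size
        "-f", "rawvideo",                # no container, just pixel bytes
        "-pix_fmt", "bgr24",             # channel order OpenCV works with
        "pipe:1",                        # write to stdout
    ]


def read_frames(stream: BinaryIO, width: int, height: int,
                channels: int = 3) -> Iterator[bytes]:
    """Yield fixed-size raw frames from a byte stream until it ends."""
    frame_size = width * height * channels
    while True:
        buf = stream.read(frame_size)
        if len(buf) < frame_size:        # EOF or a truncated final frame
            return
        yield buf


def capture(rtsp_url: str, width: int = 640, height: int = 360,
            interval_s: int = 5) -> Iterator[bytes]:
    """Run FFmpeg as a child process and stream its frames."""
    proc = subprocess.Popen(
        build_ffmpeg_cmd(rtsp_url, width, height, interval_s),
        stdout=subprocess.PIPE,
        stderr=subprocess.DEVNULL,
    )
    try:
        if proc.stdout is None:
            raise RuntimeError("FFmpeg stdout was not captured")
        yield from read_frames(proc.stdout, width, height)
    finally:
        proc.terminate()
        proc.wait()
```

Forcing a fixed output size with `scale` keeps every frame exactly `width * height * 3` bytes, so frame boundaries in the pipe are unambiguous. Each frame can then be turned into an image array with `np.frombuffer(frame, np.uint8).reshape(height, width, 3)` before it is handed to the detector.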

[Martin] tested his recognition system with some cheap Chinese PTZ cameras, with the processing running on a remote Raspberry Pi. So far the results have been good: the system is able to recognize people, animals, and cars pulling into the driveway. His code is available on GitHub if you want to give it a spin yourself!
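As an illustration of the detection-to-MQTT step, the sketch below (hypothetical, not taken from [Martin’s] repository) filters one frame’s predictions and packs the interesting ones into a JSON event with confidence metadata. It assumes predictions shaped like the dicts darkflow’s `return_predict()` produces (`label`, `confidence`, `topleft`, `bottomright`); the label set, the 0.5 threshold, and the `cameras/<id>/events` topic are made-up examples. Publishing uses paho-mqtt’s one-shot `publish.single` helper.

```python
import json
import time
from typing import Any, Dict, List, Optional

# Hypothetical set of labels worth reporting (names follow the COCO classes
# that stock YOLO weights are trained on).
INTERESTING = {"person", "car", "truck", "dog", "cat", "bird"}


def build_event(predictions: List[Dict[str, Any]], camera_id: str,
                min_confidence: float = 0.5,
                now: Optional[float] = None) -> Optional[str]:
    """Turn one frame's predictions into a JSON event, or None if nothing
    interesting was seen. Each prediction is assumed to look like
    {"label": ..., "confidence": ..., "topleft": {"x", "y"},
     "bottomright": {"x", "y"}}."""
    hits = [
        {
            "label": p["label"],
            "confidence": round(float(p["confidence"]), 3),
            "box": [p["topleft"]["x"], p["topleft"]["y"],
                    p["bottomright"]["x"], p["bottomright"]["y"]],
        }
        for p in predictions
        if p["label"] in INTERESTING and float(p["confidence"]) >= min_confidence
    ]
    if not hits:
        return None
    return json.dumps({
        "camera": camera_id,
        "timestamp": time.time() if now is None else now,
        "detections": hits,
    })


def publish_event(payload: str, camera_id: str, host: str = "localhost") -> None:
    """Send the event with paho-mqtt's one-shot publish helper."""
    import paho.mqtt.publish as publish  # imported lazily; needs paho-mqtt
    publish.single(f"cameras/{camera_id}/events", payload, qos=1, hostname=host)
```

Returning `None` for frames with nothing of interest keeps the MQTT topic quiet, so subscribers only see actual events rather than one message per sampled frame.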

I foresee someone using this to protect a bird feeder from squirrels with an airsoft turret.

Here. Not a bird feeder, though, but my tomatoes. And probably paintball. I don’t think airsoft is powerful enough to deter squirrels, plus I don’t want the trash.

“You are being watched. The government has a secret system, a machine that spies on you every hour of every day.”

Such a good show.

It’s up there with WarGames for “favorite fake haxxor shows.”

Best show ever.

I wish they’d do a spin-off.

+1 for the show “Person of Interest”. Starts out as a police procedural. Pivots to something much cooler.

I wish every day that they would continue this series.

I am so happy to know that I’m not the only Irrelevant around on here.

Remember when people were using Pogoplugs to add a little extra to their cameras.

Fun project, but 1 frame every 5 seconds is pretty useless security-wise.

He also says, “Ideally, this setup will run on a Raspberry Pi 3 B+, I have one in order.” So it’s not running on a Pi at the moment.

Or at least not a 3 B+, then.

Face detection (not recognition) can be done at 10-15 fps with OpenCV on a Raspberry Pi 2, so a Pi 3 should be plenty fast. But I don’t understand the benefit of a surveillance camera being able to classify its triggers. Usually you rely on other sensors together with motion detection to filter out false triggers: you need both a PIR trigger, laser-break, or shock-sensor event and detected motion before an event fires. Motion detection in cameras has also come of age, compared to the old Linux Motion, which simply detected the percentage difference between frames. Cameras now use vector detection algorithms, so object size and speed can be defined, and snow, coyotes, and birds are no longer a problem.
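The two ideas in this comment, old-style “percentage difference between frames” motion detection and requiring a second sensor to agree, can be sketched in a few lines. This is an illustrative sketch only: frames are assumed to be raw 8-bit grayscale bytes (for example FFmpeg output with `-pix_fmt gray`), and the thresholds and the 2-second coincidence window are hypothetical values, not figures from the comment.

```python
from typing import Iterable


def changed_fraction(prev: bytes, cur: bytes, pixel_threshold: int = 25) -> float:
    """Simple frame differencing: fraction of pixels whose grayscale value
    changed by more than pixel_threshold between two equal-size frames."""
    if len(prev) != len(cur) or not cur:
        raise ValueError("frames must be the same non-zero size")
    changed = sum(1 for a, b in zip(prev, cur) if abs(a - b) > pixel_threshold)
    return changed / len(cur)


def should_trigger(motion_fraction: float, sensor_times: Iterable[float],
                   now: float, motion_threshold: float = 0.02,
                   window_s: float = 2.0) -> bool:
    """Fire only when enough motion coincides with a PIR, beam-break, or shock
    sensor event (given as timestamps) seen within the last window_s seconds."""
    recent_sensor = any(0 <= now - t <= window_s for t in sensor_times)
    return motion_fraction >= motion_threshold and recent_sensor
```

Pure pixel differencing reacts to anything that changes brightness, such as swaying branches or snow, which is exactly why the comment pairs it with a second, independent sensor.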

I have a camera pointed outside at an area with a small tree. Its movement in the wind is enough to trigger the motion detection all day. It’d be swell if I could tell it to record only when it sees people or cars. Especially if I could tell it to record and also ping me when it sees a UPS or FedEx truck go down the street.
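With a classifier in the loop, “record only for people or cars” becomes a lookup from detected labels to actions, and a swaying tree produces no label at all. The rule table below is hypothetical. Note that a stock COCO-trained model such as the default YOLO weights only knows a generic `truck` class, so the `ups_truck` and `fedex_truck` entries are placeholders for classes you would have to train yourself.

```python
from typing import Dict, Iterable, Set

# Hypothetical rule table: which detected labels start a recording and which
# also send a notification. "ups_truck"/"fedex_truck" would need a custom-
# trained model; stock COCO weights only report a generic "truck".
RULES: Dict[str, Set[str]] = {
    "person": {"record"},
    "car": {"record"},
    "ups_truck": {"record", "notify"},
    "fedex_truck": {"record", "notify"},
}


def actions_for(labels: Iterable[str]) -> Set[str]:
    """Union of the actions requested by every label seen in a frame."""
    actions: Set[str] = set()
    for label in labels:
        actions |= RULES.get(label, set())  # unknown labels trigger nothing
    return actions
```

For example, `actions_for(["car", "ups_truck"])` asks for both recording and a notification, while a frame in which only the tree moved yields no labels and therefore no actions.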

With all of the cameras being *nix boxes, I don’t see why they can’t have heatsinks installed and run the network natively. It may be slow, but there must be some way to use neural networks to simplify the data and do some on-board pre-processing before it is sent out to the Pi.

It is difficult to get root access in most cases, but the most difficult part would be the processing overhead.

I don’t see why it has to run once every 5 seconds; there’s no reason given. It could be faster.

There was the article about the camera with the open source firmware:

https://hackaday.com/2018/03/05/customising-a-30-ip-camera-for-fun/

Add this and see how well it runs. At least the original firmware is able to track moving objects. Identifying takes more cycles, but it might be able to do that too. One just needs to try and see.

I looked a bit more into it. The T20 processor on that linked camera maxes out at 1 GHz, but does support some SIMD instructions. According to the Ingenic propaganda, it “supports flexible and changeable intelligent analysis algorithm in real time. Such as motion detection, body detection, license plate recognition and so on”.

And someone asked about the once-every-5-seconds thing; the answer was that on the given setup, the RPi can only manage one frame every 3 seconds at most.
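One common way to run “as fast as the hardware allows” instead of on a fixed 5-second timer is a single-slot buffer: the capture thread keeps overwriting the newest frame, and the detector always takes whatever is latest, so a slow detector (around 3 seconds per frame on the Pi, per the thread) never falls behind on a backlog of stale frames. This is a generic sketch of that pattern, not something from [Martin’s] code; the class name is hypothetical.

```python
import threading
from typing import Generic, Optional, TypeVar

T = TypeVar("T")


class LatestFrame(Generic[T]):
    """Single-slot frame buffer shared by a capture thread and a detector."""

    def __init__(self) -> None:
        self._frame: Optional[T] = None
        self._cond = threading.Condition()

    def put(self, frame: T) -> None:
        """Called by the capture thread; silently drops any unread frame."""
        with self._cond:
            self._frame = frame
            self._cond.notify()

    def take(self, timeout: Optional[float] = None) -> Optional[T]:
        """Called by the detector; waits for a fresh frame, or returns None
        if none arrives within `timeout` seconds."""
        with self._cond:
            self._cond.wait_for(lambda: self._frame is not None, timeout)
            frame, self._frame = self._frame, None
            return frame
```

With this arrangement the effective detection rate simply tracks inference time: about one frame per 3 seconds on the setup described, and faster on quicker hardware, with no interval to tune.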

Not really. Sounds like you could still save the raw video feed at whatever fps is native to the camera, but it would have metadata of what’s in the frame every five seconds. That would save a hell of a lot of time seeking through hours of footage.
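The “metadata every five seconds” idea can be sketched as turning the timestamps of sampled frames that had detections into time ranges of the full-rate recording worth reviewing. The function name and the 5-second padding (matching the sampling interval) are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def detection_segments(times: Sequence[float],
                       pad_s: float = 5.0) -> List[Tuple[float, float]]:
    """Merge timestamps (seconds into the recording) of sampled frames that
    contained a detection into (start, end) ranges of the full-rate video.
    Each hit is padded by pad_s on both sides; overlapping ranges merge."""
    segments: List[Tuple[float, float]] = []
    for t in sorted(times):
        start, end = t - pad_s, t + pad_s
        if segments and start <= segments[-1][1]:
            # Overlaps the previous range: extend it instead of starting anew.
            segments[-1] = (segments[-1][0], max(segments[-1][1], end))
        else:
            segments.append((max(0.0, start), end))
    return segments
```

Hits at 10, 15, and 20 seconds collapse into one 5 to 25 second range, and a lone hit at 100 seconds becomes a separate 95 to 105 second range, so hours of footage reduce to a short list of seek points.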

Hotdog or not hotdog?

El Chupacabra – 1%

El Chimichanga – 99%

Shopping-bag “intruder” sighted, sound the alarm.

Yes, a total war on airborne plastic trash headed to the sea. That should win the HaD prize. Proof-of-purchase links with personal ID on every piece of nano-tagged carry-out trash! Gum wrappers, cigarette butts, no limit. Prosecute them off the Earth; they’re signed up for the Mars colony.

I have always wondered what the difference is between a jagged solid rock and a crumpled paper bag lying on the road when I see it, versus when an Uber “it” sees it. Needless to say, the bag user needs to pay even if the Uber swerves into me.

Or, alternatively, you could ask manufacturers to go back to using recyclable materials such as paper (wax paper), because, let’s be fair, the switch to plastic for things like bags and packaging was driven by companies wanting to make more profit.

Since a plastic bag is cheaper than a paper bag and shareholders want their dividends, who is really to blame, eh?

Putting all the focus on consumers for saving the planet is pure bull.

It’s the manufacturers who are at fault, and government cronyism/lobbying lets them get away with it.

Find an evil in society and, at the root of it, you’ll usually find the drive for continual profit/growth and shareholder greed.

I had a HOG person tracker running on a Pi which worked great with my PTZ during testing, following drawn people shapes against a very cluttered background, but the moment I ‘deployed’ it on the drive of our house, it immediately became obsessed with the vertical lines of a corrugated shed roof…

It was fast enough to do real-time pan, tilt, and zoom tracking, but the OpenCV HOG detector always false-positive’d on the corrugated roof, so the moment the target got anywhere near the shed, the camera got stuck there…

I never got into retraining it, but I guess that’s what I need to do to get it working reliably. (Masking out the shed seemed like a poor solution.)

I don’t really understand why vertical lines confuse the HOG algorithm so easily.

If anyone has some tips on retraining HOG with OpenCV in Python, let me know!
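One plausible reason for the shed obsession: HOG describes a window by histograms of gradient orientations, and the default OpenCV people detector was trained on upright pedestrians, whose strongest cues are long vertical edges (legs, torso outline). A corrugated roof produces the same kind of strong, repeated vertical edges. Short of retraining, a sketch like the one below filters detections by SVM score and person-like aspect ratio, and can save the rejected or known-false crops as “hard negatives” for a later retraining round (commonly, a linear SVM retrained on HOG features with those crops added as negatives, then loaded via `setSVMDetector`). The score and aspect-ratio thresholds and the helper names are hypothetical tuning choices, not values from the comment.

```python
import os
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), as detectMultiScale returns


def filter_people(rects: Sequence[Box], weights: Sequence[float],
                  min_weight: float = 0.5,
                  aspect_range: Tuple[float, float] = (1.5, 3.5)) -> List[Box]:
    """Keep detections with a high enough SVM score and a person-like
    height/width ratio; both thresholds are illustrative."""
    keep: List[Box] = []
    for (x, y, w, h), score in zip(rects, weights):
        aspect = h / w if w else 0.0
        if score >= min_weight and aspect_range[0] <= aspect <= aspect_range[1]:
            keep.append((int(x), int(y), int(w), int(h)))
    return keep


def detect_people(frame_bgr) -> List[Box]:
    """Run OpenCV's default HOG people detector, then filter its output."""
    import cv2
    import numpy as np
    hog = cv2.HOGDescriptor()
    hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())
    rects, weights = hog.detectMultiScale(frame_bgr, winStride=(8, 8),
                                          padding=(8, 8), scale=1.05)
    scores = np.asarray(weights, dtype=float).ravel().tolist()
    return filter_people(rects, scores)


def save_hard_negatives(frame_bgr, boxes: Sequence[Box], out_dir: str,
                        prefix: str) -> None:
    """Write false-positive crops (e.g. the shed roof) to disk so they can be
    added as negative samples when retraining."""
    import cv2
    os.makedirs(out_dir, exist_ok=True)
    for i, (x, y, w, h) in enumerate(boxes):
        x0, y0 = max(0, x), max(0, y)  # boxes can extend past the frame edge
        crop = frame_bgr[y0:y + h, x0:x + w]
        cv2.imwrite(os.path.join(out_dir, f"{prefix}_{i}.png"), crop)
```

Filtering alone will not stop a confident, tall, narrow false positive on the roof, which is why collecting those crops as negatives is the more durable fix.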

Is it possible that it is interpreting the straight lines as movement? That may be why it is obsessed with them. Either that, or it keeps using them as references for alignment but won’t go back to actual detection.

Think this could be used to be able to answer the question: has a cat entered and not left yet?
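For a single tracked animal, “entered and not left yet” can be approximated by watching which side of a doorway line the detection’s centroid is on and counting crossings. This is a hypothetical sketch: it assumes one cat, a vertical doorway line at `door_x` in image coordinates, and centroid x positions taken from the detector’s bounding boxes. At one sample every few seconds, a quick in-and-out between samples would be missed.

```python
from typing import Iterable, Optional


def net_entries(xs: Iterable[Optional[float]], door_x: float,
                inside_is_left: bool = True) -> int:
    """Entries minus exits for one tracked object, from its centroid x
    positions over time (None for frames where it was not detected)."""
    net = 0
    prev_inside: Optional[bool] = None
    for x in xs:
        if x is None:
            continue  # not seen this frame; keep the last known side
        inside = (x < door_x) == inside_is_left
        if prev_inside is not None and inside != prev_inside:
            net += 1 if inside else -1  # crossed in (+1) or out (-1)
        prev_inside = inside
    return net
```

A result of 1 means the cat crossed in and has not been seen crossing back out; 0 means every entry was matched by an exit.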

“Bufferscotch, what are you doing to that sheep? Is that even legal in this state? Why oh why wasn’t I placed in a Hot Topic?”

Oh, goodie! I can stop paying the ridiculous price for a Nest Aware subscription!