Self-driving cars have been in the news a lot in the past two weeks. Uber’s self-driving taxi hit and killed a pedestrian on March 18, and just a few days later a Tesla running in “autopilot” mode slammed into a road barrier at full speed, killing the driver. In both cases, there was a human driver who was supposed to be watching over the shoulder of the machine, but in the Uber case the driver appears to have been distracted, and in the Tesla case the driver had their hands off the steering wheel for six seconds prior to the crash. How safe are self-driving cars?

Trick question! Neither of these cars was “self-driving” in at least one sense: both had a person behind the wheel who was ultimately responsible for piloting the vehicle. The Uber and Tesla driving systems aren’t even comparable. The Uber taxi does routing and planning, knows the speed limit, and should be able to see red traffic lights and stop at them (more on this below!). The Tesla “Autopilot” system is really just the combination of adaptive cruise control and lane-holding subsystems, which isn’t even enough to get it classified as autonomous in the state of California. Indeed, it’s the failure of the people behind the wheel, and the failure to properly train those people, that makes the pilot-and-self-driving-car combination more dangerous than a human driver alone would be.

You could still imagine wanting to dig into the numbers for self-driving cars’ safety records, even though the systems are heterogeneous and have people playing Mechanical Turk. If you did, you’d be sorely disappointed. None of the manufacturers publish any of their data when they don’t have to. Indeed, our glimpses into data on autonomous vehicles from these companies come from just two sources: internal documents that get leaked to the press, and carefully selected statistics from the firms’ PR departments. The state of California, which requires the most rigorous documentation of autonomous vehicles anywhere, would be a third source, but because Tesla’s car isn’t autonomous, and because Uber refused to admit to the California DMV that its car is autonomous, we have no extra insight into these two vehicle platforms.

Nonetheless, Tesla’s Autopilot has three fatalities now, and all have one thing in common: all three drivers trusted the lane-holding feature well enough not to take control of the wheel in the last few seconds of their lives. With Uber, there’s very little autonomous vehicle performance history, but there are leaked documents and a pattern of behavior that makes Uber look like a risk-taking scofflaw with sub-par technology and a vested interest in making it look better than it is. That these vehicles are being let loose on public roads, without extra oversight and with other traffic participants as safety guinea pigs, is giving both the self-driving car industry and the ideal itself a black eye.

While Tesla’s and Uber’s car technologies are very dissimilar, the companies have something in common. They are both “disruptive” companies with mavericks at the helm who see their fates as hinging on reaching widespread deployment of self-driving technology. But what differentiates Uber and Tesla most from Google and GM is, ironically, their use of essentially untrained test pilots in their vehicles: Tesla’s in the form of consumers, and Uber’s in the form of taxi drivers with very little specific autonomous-vehicle training. What caused the Tesla and Uber accidents may have a lot more to do with human factors than with self-driving technology per se.

You can see we’ve got a lot of ground to cover. Read on!

The Red Herrings

But first, here are some irrelevant statistics you’ll hear bandied about after the accidents. First is that “more than 90% of accidents are caused by human drivers”. This is unsurprising, given that all car drivers are human. When only self-driving cars are allowed on the road, they’ll be responsible for 100% of accidents. The only thing that matters is the relative safety of humans and machines, expressed per mile or per trip or per hour. So let’s talk about that.

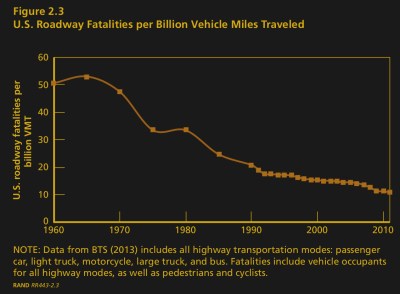

Humans are reportedly terrible drivers because 35,000 people died on US highways last year. This actually demonstrates that people are fantastically good at driving, despite their flaws, because US drivers also drove three trillion miles in the process. Three relevant statistics to keep in mind as a baseline are 90 million miles per fatality, a million miles per injury, and half a million miles per accident of any kind. When autonomous vehicles can beat these numbers, which include drunk drivers, snowy and rainy weather conditions, and cell phones, you can say that humans are “bad” drivers. (Source: US National Highway Traffic Safety Administration.)
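
The baseline is simple division. Here is a quick check using the round figures above (our own back-of-the-envelope arithmetic, not NHTSA’s method); the injury and accident counts it prints are just what the quoted per-mile rates imply, not official tallies.

```python
# Back-of-the-envelope check of the human-driver baseline quoted above.
# Assumption: inputs are the article's round one-year figures,
# not an exact NHTSA dataset.

ANNUAL_DEATHS = 35_000
ANNUAL_MILES = 3_000_000_000_000  # three trillion vehicle-miles

# Rates quoted in the article, in miles per event.
MILES_PER_INJURY = 1_000_000
MILES_PER_ACCIDENT = 500_000


def miles_per_event(total_miles: float, events: float) -> float:
    """Average number of miles driven per event."""
    return total_miles / events


def events_for(total_miles: float, miles_per: float) -> float:
    """Expected number of events over total_miles at a given rate."""
    return total_miles / miles_per


per_fatality = miles_per_event(ANNUAL_MILES, ANNUAL_DEATHS)
print(f"miles per fatality: {per_fatality / 1e6:.1f} million")
print(f"implied injuries per year: {events_for(ANNUAL_MILES, MILES_PER_INJURY):,.0f}")
print(f"implied accidents per year: {events_for(ANNUAL_MILES, MILES_PER_ACCIDENT):,.0f}")
```

The fatality figure comes out at about 85.7 million miles, in the same ballpark as the rounded 90 million quoted above.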

Finally, I’m certain that you’ve heard that autonomous vehicles “will” be safer than human drivers, and that some fatal accidents now are just an uncomfortable hump that we have to get over to save millions of lives in the future. This is an “ends justify the means” argument, which puts it on sketchy ethical footing to start with. Moreover, in medical trials, patients are required to give informed consent to be treated, and the treatment under consideration has to be shown not to be significantly worse than a treatment already in use. Tests of self-driving technology aren’t that dissimilar, yet we’ve not signed consent forms to share the road with non-human drivers. Worse, it looks very much like the machines drive less well than we do. The fig leaf is that a human driver is ultimately in control, so it’s probably no worse than letting them drive without the machine, right? Let’s take a look.

Tesla: Autopilot, but not Autonomous

This is not great. Autopilot is supposed to be engaged only on good sections of road, in good weather conditions, and kept under strict supervision. Humans driving in these same optimal conditions would also do significantly better than their overall average. Something like 30% of fatal accidents occur at intersections, which should make Autopilot’s traditional cruising-down-the-highway use case look a lot better. An educated guess, based on these factors, is that Autopilot isn’t all that much worse than an average human driver under the same circumstances, but it certainly isn’t demonstrably better either.

But Autopilot isn’t autonomous. According to the SAE definitions of automation, the current Autopilot software is level 2, partial automation, and that’s dangerous. Because the system works so well most of the time, users can forget that they’re intended to be in control at all times. This was part of the US National Transportation Safety Board’s (NTSB) conclusion about the 2016 accident, and it’s the common thread between all three Autopilot-related deaths. The drivers didn’t have their hands on the wheel, ready to take over, when the system failed to see an obstacle. They over-relied on the system to work.

It’s hard to blame them. After thousands of experiences when the car did the right thing, it would be hard to second-guess it on the 4,023rd time. Ironically, this would become even harder if the system had a large number of close calls. If the system frequently makes the right choice shortly after you would have, you learn to suppress your distrust. You practice not intervening with every near miss. And this is also the reason that Consumer Reports called on Tesla to remove the steering function and the Autopilot name — it just promises too much.

Uber: Scofflaws and Shambles

You might say that it’s mean-spirited to pile on Uber right now. After all, they have just experienced one tragedy and it was perhaps unavoidable. But whereas Tesla is taking baby steps toward automation and maybe promoting them too hard, Uber seems to be taking gigantic leaps, breaking laws, pushing their drivers, and hoping for the best.

In December 2016, Uber announced that it was going to test its vehicles in California. There was just one hitch: they weren’t allowed to. The California DMV classified their vehicles as autonomous, which subjected them to reporting requirements (more later!) that Uber were unwilling to subject themselves to, so Uber argued that their cars weren’t autonomous under California law, and they drove anyway.

After just a few days, the City of San Francisco shut them down. Within the one week that they were on the streets of San Francisco, their cars had reportedly run five or six red lights, with at least one getting filmed by the police (and posted on YouTube, naturally). Uber claims that the driver was at fault for not overriding the car, which apparently didn’t see the light at all.

In a publicity stunt made in heaven, Uber loaded their cars onto big “Otto”-branded trucks and drove south to Arizona. Take that, California! Except that the Otto trucks drove with human drivers behind the wheel, in stark contrast to that famous video of self-driving Otto trucks (YouTube), which was filmed in the state of Nevada. (During that shoot they also didn’t have the proper permits to operate autonomously, and were thus operating illegally while filming.)

Uber picked Arizona, as have many autonomous vehicle companies, for the combination of good weather, wide highways, and lax regulation. Arizona’s governor, Douglas Ducey, made sure that the state was “open for business” and imposed minimal constraints on self-drivers as long as they follow the rules of the road. Notably, there are no reporting requirements in Arizona and no oversight once a permit is approved.

How were their cars doing in Arizona before the accident? Not well. According to leaked internal documents, they averaged thirteen miles between “interventions” when the driver needed to take over. With Autopilot, we speculated about the dangers of being lulled into complacency by an almost-too-good experience, but this is the opposite. We don’t know how many of the interventions were serious issues, like failing to notice a pedestrian or red traffic light, or how many were minor, like swerving slightly out of a lane on an empty road or just failing to brake smoothly. But we do know that an Uber safety driver had to be on his or her toes. And in this high-demand environment, Uber reduced the number of safety drivers per car from two to one to cut costs.

Then the accident. At the time, Uber cars had a combined three million miles under their belt. Remember the US average of roughly 90 million miles per fatality? Maybe Uber got unlucky, but given their background, I’m not willing to give them the benefit of the doubt. Even crediting these three million fatality-free miles to the autonomous car is pushing it, though. Three million miles at thirteen miles per intervention means that a human took control around 230,000 times. We have no idea how many of these include situations where the driver prevented a serious accident, before the one time that a driver didn’t. This is not what better-than-human driving looks like.
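
Both numbers in that paragraph come from simple division. The sketch below reproduces them and adds one assumption of our own, not the article’s: if fatalities arrived as a Poisson process at the human rate of roughly one per 90 million miles, the chance of at least one in m miles would be 1 − exp(−m / 90,000,000). That is one way to put a number on “maybe Uber got unlucky”, with the big caveat that a single event is very little data.

```python
import math

# Figures from the article; treat them as rough, not audited data.
UBER_MILES = 3_000_000                 # combined Uber autonomous miles
MILES_PER_INTERVENTION = 13            # from the leaked internal documents
HUMAN_MILES_PER_FATALITY = 90_000_000  # the article's human baseline


def expected_events(miles: float, miles_per_event: float) -> float:
    """Expected number of events over `miles` at a fixed per-mile rate."""
    return miles / miles_per_event


def prob_at_least_one(miles: float, miles_per_event: float) -> float:
    """P(at least one event), assuming events follow a Poisson process."""
    return 1.0 - math.exp(-expected_events(miles, miles_per_event))


interventions = expected_events(UBER_MILES, MILES_PER_INTERVENTION)
p_fatal = prob_at_least_one(UBER_MILES, HUMAN_MILES_PER_FATALITY)
print(f"human takeovers: about {interventions:,.0f}")
print(f"P(at least one fatality at the human rate): {p_fatal:.1%}")
```

Under that assumption, a human-level driver would see a fatality in three million miles only about 3% of the time.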

Takeaway

Other self-driving car programs have significantly better performance records or no fatalities, or have at least subjected themselves to the light public scrutiny that comes with testing their vehicles in California, the only state with disclosure requirements for autonomous vehicles. As a California rule comes into effect (today!) that enables full deployment of autonomous vehicles in the state, rather than just testing, we expect to see more data in the future. I’ll write up the good side of self-drivers in another article.

But for now, we’re left with two important counterexamples. The industry cannot be counted on to regulate itself if there’s no (enforced) transparency into the performance of their vehicles — both Tesla and Uber think they are in a winner-takes-all race to autonomous driving, and are cutting corners.

Because no self-driving cars, even the best of the best, are able to drive more than a few thousand miles without human intervention, the humans are the weak link. But this is not for the commonly stated reason that people are bad drivers — we’re talking about human-guided self-driving cars failing to meet the safety standards of unassisted humans after all. Instead, we need to publicly recognize that piloting a “self-driving” car is its own unique and dangerous task. Airplane pilots receive extensive training on the use and limitations of (real) autopilot. Maybe it’s time to make this mandatory for self-driving pilots as well.

Either way, the public deserves more data. From all the evidence, the current state of technology in self-driving cars is nowhere near as safe as human drivers, by any standard, and yet potentially lethal experiments are being undertaken on the streets. Obtaining informed consent from all drivers to participate in this experiment is perhaps asking too much. But at least we should be informed.

Still unconvinced that a computer can do better pattern matching than a human brain which has had eons of evolution to train it.

+1

Now all the posters who were dismissing people’s warnings about countless edge-case failure modes are deluding themselves into thinking this is fixable. I find it more surprising that it took a year to happen. Good work, team: someone else did die due to industry-fostered arrogance and lack of accountability. Sure, a sociopath is amusing, but the hypocrites are just hilarious.

https://www.youtube.com/watch?v=4iWvedIhWjM

Eons and we still screw up. Besides, training is being worked on.

https://www.theatlantic.com/technology/archive/2017/08/inside-waymos-secret-testing-and-simulation-facilities/537648/

As the article stated, it’s not about whether or not screwups will happen. Of course they will happen, both for humans and machines. It’s about screwups per mile, and humans have a spectacular record with that.

Of course they’ll keep training, but humans have had an absurdly good head start. Can computers catch up? Probably, eventually. But it’ll be way harder than most people make it out to be. A lot of futurists have this assumption that the robots have a better safety record, but those statistics are flawed as shown above. It’s highly likely that automated cars are another one of those “only five or ten years away” technologies like flying cars (which will never be commonplace for reasons of energy efficiency and sheer destructive potential) and fusion power (which might happen eventually but it’s orders of magnitude more difficult than we first thought it would be).

Here’s the trick:

“for most simulations, they skip that object-recognition step. Instead of feeding the car raw data it has to identify as a pedestrian, they simply tell the car: A pedestrian is here.”

The main problem with the self-driving cars is exactly their limited perception of the surroundings: object recognition. The actual logic of driving is very simple: just don’t crash into anything. This is why the billions of miles of virtual driving actually count for nothing. They can hone the car’s driving logic all they want, build prediction models about how cars or pedestrians will behave and load pre-canned response patterns for every type of intersection ever imaginable, but it’s still an AI with the perceptual understanding of a gnat, and it’s going to get confused and run into things because it doesn’t know what’s going on.

In the current state of the technology, the driving AI and the perceiving AI are separate, with one reporting to the other “There’s a pedestrian”, and the other just having to trust that this is so. It has no way to second-guess itself.

In humans, perception is a two-way street. We create our own internal reality and loop this model back through the visual cortex where it either gets confirmed or rejected as incongruent, so the internal hallucinations of real objects that exist get amplified while the false spurious hallucinations get suppressed and a match between internal model and external world is maintained. That’s why we have object permanence while the computer does not. We can imagine objects that are currently not visible, and then see if they appear – a skill learned when we were babies.

The computer could hang on to some piece of data that says “a pedestrian is here”, but doing so risks running into false realities as it has no means of asking “is this pedestrian still here?”. It’s relying on the perception network to say “pedestrian, pedestrian, pedestrian….” and when that stops, the pedestrian is “gone”. That might be because the pedestrian is truly gone, or because the network is failing to recognize them at that instant.
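
The dilemma in the last two paragraphs can be made concrete with a toy example. Below, `naive_presence` is the behavior described above, where an object exists only on frames where the perception network reports it, and `held_presence` is a hypothetical version of “hanging on” to the object for a fixed number of missed frames. Both are illustrations only, not how any particular car’s software works, and the frame sequence is made up.

```python
from typing import List


def naive_presence(detections: List[bool]) -> List[bool]:
    """Object counts as present only on frames where it is detected."""
    return list(detections)


def held_presence(detections: List[bool], max_missed: int) -> List[bool]:
    """Hypothetical alternative: keep believing in the object for up to
    `max_missed` consecutive frames without a detection."""
    present = []
    missed = max_missed + 1  # start out with no object believed present
    for seen in detections:
        missed = 0 if seen else missed + 1
        present.append(missed <= max_missed)
    return present


# Made-up per-frame "pedestrian" detections with a one-frame dropout,
# after which the pedestrian (or the detection) disappears for good.
frames = [True, True, False, True, False, False, False]
print(naive_presence(frames))
print(held_presence(frames, max_missed=2))
```

With `max_missed=2`, the one-frame dropout no longer makes the pedestrian vanish, but the object is also still believed present for two frames after detections stop, which is exactly the “false reality” risk the comment describes.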

There is an internal model of reality, of course, which is continuously re-aligned to what the sensors say so the car knows where it is, but this model is fixed and cannot dynamically adjust to account for the differences between reality and the model. The computer in the car just doesn’t have the computing power to generate that sort of feedback, because it would have to generate a mockup of the sensor data that would match the updated internal model, and compare that to the actual sensor data it is receiving. The AI would need the power of imagination.

That was an amazing explanation of human perception; it kind of makes me want to read more into this. Yes, comparing human perception/processing to a 10 FPS camera, as someone did, is asinine. Our powers of perception aren’t even on the same playing field as any current tech. Take seeing a deer in the ditch, for example, and maybe it turning and disappearing behind some sort of barricade or other visual blockage between it and the road. You can tell it bolted toward the road without seeing so much as a partial stride before it disappeared, calculate its probable velocity as it explodes into your path with no warning because of the visual barrier, and react accordingly. The ‘autopilot’ won’t see it until it pops out right in front of you at full speed, resulting in either a crash or an evasive maneuver (into a car in another lane?) that could easily have been avoided with the average human’s perception. Even an average person who isn’t looking around or paying close attention would likely pick up on this, just from movement in peripheral vision.

The fundamental disconnect between the brain and the AI is that the brain is doing its thinking using the direct experience, whereas the computer first identifies the data, then gives the data a label or a flag, and then does computation at that level of abstraction, like generals in a war room playing with little tin soldiers on a map while the real soldiers are dying in the field. The information the generals have may or may not be relevant to what the soldiers are doing.

Our brains get a direct experience of “moose”, and while the brain is already deciding what to do with it, it also sends this thought-object back to the visual cortex, where it either comes back as “moose!” or it diminishes down as there’s no corresponding input signal to boost it. If the resulting “moose” signal gets amplified, the brain is already primed to act. If not, the idea of the moose dies down and the brain resumes driving as usual.

It’s kinda like a singing microphone just at the level of gain where it’s silent when nobody talks, but starts to squeal when there’s even the tiniest bit of noise at the resonant wavelength of the system, and then falls silent again when the noise stops. It’s very sensitive, yet effective at ignoring irrelevant information, because it isn’t trying to positively identify objects as such, but rather to find out whether the imagined and the real correlate. This is only possible because the brain is operating directly on the signals rather than turning them into abstract symbols for later processing. The brain is doing a sort of mental chemistry: it’s not doing “IF moose THEN brake”, but “moose + driving → braking”. The mental objects react with the state of the brain to produce new states of the brain.

In this way, everything you’ve learned is stored as a neural pattern in your brain, and when the corresponding signal comes in, that pattern starts to ring – your brain starts to squeal like the microphone, and those squeals mix together and form new patterns which ring with other patterns, which result in actions.

Also, because resonances can be excited by nearby frequencies, that’s how the brain deals with ambiguity. You don’t have to hit the exact right button “moose” or “pedestrian”, or “car” to make the brain avoid collision. The brain doesn’t care if it’s a moose or a caribou or a runaway shopping cart, it still ticks enough of the right boxes to trigger the avoidance response.

Meanwhile, the AI can’t do this box-ticking thing because it relies on the perceptual network to say exactly what is there. If the perceptual network fails to identify an object for what it is (like the Tesla car mistaking the side of a truck for an overhead sign), the AI can only do the wrong thing.

Sure, but it’s not competing with that; it’s competing with a brain that’s generally good at whatever it’s concentrating on, which isn’t always driving. The computer isn’t going to consume mind-altering substances, get tired, ignore speed limits (in theory), decide that corners are best taken sideways on two wheels, etc.

Not me. That’s why I feel safer driving myself than with some darn silicon diode. Now all you people…let the puter do the driving.

Haven’t crashed on the information superhighway yet.

Germanium diodes have a 100% safety record for self-driving cars.

I think this is a big factor: a lot of driving miles are spent commuting to and from work. Drivers are presumably well rested on the way to work, but certainly not when driving back home. Humans are generally quite good at processing visual information, but less good at remembering to regularly look around, and even worse at deciding which risky actions are still acceptable.

I would argue (for the sake of arguing? B^) that people are more tired on their way to work (under a caffeine buzz perhaps) than they are on their way home.

B^)

You don’t actually have to consciously look around. After you’ve learned to drive, it becomes more or less automatic.

People can even fall into road hypnosis, where they drive for hundreds of miles and “wake up” without any recollection of what just happened.

Nothing is scarier than noticing that you have no recollection of what happened during your daily commute… It has also happened to me while cycling, and that’s even more scary.

That’s not the scary part. The scary part is not knowing where you are when you do regain your conscious awareness.

That happened to me the first time I used a GPS navigator. I was listening to the directions on a route I already knew, and suddenly realized that I didn’t actually know where I was or which way was north.

Sure, unless, of course, there is a glitch, or malfunction…then the computer can and will do anything. Do you really want to ride on the highway next to a vehicle guided by nothing but programming and Chinese hardware? I do not.

The “glitch” or “malfunction” can happen in a human too. I think it’s called “human error”, and it’s not the rarest cause of accidents. Here we really have to use statistics and look at the probabilities, which are NOT YET acceptable.

Speed limits are often way too low. There are situations where it is safe not to stick to them like a slave. I would want the computer to recognize this too.

+1 to this, as I feel they have been forcing things and trying to proceed too quickly.

It can when the human brain is also trying to talk to someone on the phone or text a friend or use the mapping software to get somewhere. Distracted driving isn’t going away, and only going to get worse as future generations rely more and more on mobile devices… Devices that are by design trying to capture more and more of their time and attention.

It’s no accident that Google is pushing so hard for autonomous vehicles: they’ve hit a wall in the amount of time that they can safely capture our eyeballs and sell space for ads. Gaining control of our eyeballs during our daily commute would be a HUGE win for them.

Another problem is self-driving vehicles are very rude.

In order to merge on the freeway when the flow of traffic is going slightly above the posted speed limit, they will accelerate to merge, then slow down, and many of the “accidents” happen because human drivers keep up with traffic while the AVs brake for no apparent reason. Technically, though, after breaking the speed limit, they are slowing down to obey it.

True. There’s a necessary wiggle-room in lawful behavior for society to function smoothly, and manufacturers of course won’t program common scofflaw behavior into machines even if it’s necessary for the machine to function in a way that is predictable and fits in with human drivers. Of course that won’t be a problem if self-driving cars ever become a majority on the road.

I don’t think that’s going to happen, but for a much more uncomfortable reason. I think the manufacturers and researchers know about it but it doesn’t often make news, with outlets preferring to report on accidents and the so-called trolley problem instead. But if these cars are ever reliable, fully autonomous, and accessible by the general public, then they are essentially perfect land-based cruise missiles that could reach a majority of targets through existing road systems.

Right now the main deterrent is that a driver would have to commit suicide or leave a car parked in an awkward and suspicious location to make a getaway on foot. Of course you could say that manufacturers will implement DRM to require a person be in the vehicle, but practically all DRM and protected software has been cracked. Sensors can be spoofed. Jailbroken software will probably start out for non-violent purposes, such as the ability to repair your own hardware, tune and customize a car, or to retrofit a guidance system onto a pre-existing car. It will be cracked almost instantly, and anyone with a torrent client, proper drivers and cables, and a small amount of computer literacy will be able to use it.

I think that for this reason we aren’t going to see totally autonomous technology available to the consumer or non-corporate tinkerers any time soon. Maybe they’ll just take the risk and wait for an incident to implement regulation–admittedly the norm for emerging tech–but I think that incident would happen very quickly after the release of this technology.

Too much “DRM” shit and I would not buy that car.

And btw.: One of the advertised benefits of autonomous cars is automatically searching for a parking space and picking up the owner later after he did what he wanted to do; another is autonomous taxis. The autonomous taxi is probably what Uber wants. Both use cases need the car to be able to drive empty, so you cannot require a person in the car. And a potential terrorist could still circumvent the system: depending on the sensors, they could use a puppet, an animal like a pig, or a captive or drugged person to make the system go.

As an owner I would not accept a system which refuses to take me home if I am e.g. too drunk to drive.

tz: Brake. Brake. Brake. Brake. Brake. That should be enough to copy and paste the next time the word is required.

Nice piece, Elliot. I believe there has been a lot of confusion about the various levels of self-driving capability and the terms used to describe them. I am glad you pointed that out. My concern is this: I can’t recall which manufacturer announced it recently (maybe Ford?), but they are so confident in their systems that they have removed the controls for human input entirely. I think this is a huge mistake. I believe that this technology is not ready, and that other human drivers are so unpredictable that no computer program in the world will be able to safeguard against them.

Right now Cruise (GM) and Waymo (Alphabet/Google) are both playing the level-5-or-bust card. I have to say that the difficulty of training someone to work alongside some of the “fancy” driving tech makes their argument more convincing. It really looks like people need to be able to understand the self-driving algorithms at a deep level to anticipate when they will fail, and that’s a big ask.

I recently got a refresher that neural networks / current AIs do not usually say yes/no 1/0, but calculate probabilities.

Do the autopilot supervising drivers know/see the current confidence of the system?

What does the relation between confidence and accidents, human interventions and so on look like?

From your nice article I take it the relevant data is private/secret/confidential. Too bad…
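
For readers wondering what “calculate probabilities” means in practice: classifiers commonly turn raw scores into probabilities with the softmax function, and a system could flag low confidence when the top probability falls below some threshold. The class names, scores and the 0.7 threshold below are invented for illustration, and `is_confident` is a hypothetical rule; whether any vehicle shows such a number to its supervising driver is exactly the open question above.

```python
import math
from typing import Dict


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Convert raw class scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def is_confident(probs: Dict[str, float], threshold: float) -> bool:
    """Hypothetical rule: trust the top label only at or above a threshold."""
    return max(probs.values()) >= threshold


# Made-up scores for a single detected object.
probs = softmax({"pedestrian": 2.0, "cyclist": 1.0, "background": 0.5})
best = max(probs, key=probs.get)
print(best, round(probs[best], 2), is_confident(probs, threshold=0.7))
```

Here the top label is “pedestrian” at about 63%, which this made-up rule would treat as not confident enough.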

Naturally, and complicated.

http://store.steampowered.com/app/619150/while_True_learn/

Game about being a machine learning specialist.

I think Tesla is also trying to skip levels 3 and 4. At least Elon Musk is well aware of the problem of almost-level-5 cars.

We are still quite far (several years) away from level 5, which means autonomous driving under any conditions in which a human driver is able to drive, including in the city with complicated junctions and street-level public transportation.

But I also see the higher risk of having to supervise a level 2 system all the time instead of driving the car all the time. Your attention degrades if you are not really in control and focused on the driving.

True, but the next generations might be a lot more savvy than we think possible. It was a pretty big ask that the common driver understand how to double-clutch back in the day. This, admittedly, might not be a fair analogy. But people can surprise you.

I’d personally bet they’ll succeed in making quite reliable totally-autonomous cars, but they won’t be available to the public for the security reasons I mentioned in another post. A car that can function with zero passengers (which is the obvious end-game for a lot of the taxi services who want to entirely replace human “contractors”) is essentially a consumer-grade stealth smart bomb. Sounds crazy, but if they let anyone buy one then it will happen. It’s a huge risk even if you can merely call a taxi, fiddle with its passenger-sensing hardware, then program in a destination. Someone will figure out how to fool it and abuse the system. Maybe just as a practical joke or petty vandalism, but some black hat will definitely come along and cause some serious trouble.

Even worse, you call a taxi and a “taxi” turns up, locks the doors and drives you to the “final destination”, taking you with it. Add one to the casualty list.

Rogue or criminal taxi drivers are already possible without any autonomous technology. But we can add a mechanical emergency brake.

Skipping levels 3 and 4 and going straight to 5 is foolish.

Will another human be able to safeguard against unpredictable humans though?

No, which is why we kill about 45,000+ people in cars every year in the U.S. But, I am afraid that number will be much higher with totally autonomous vehicles mixed on the same roadway with other human drivers.

I noticed Google’s absence in this article. Do they skew the statistics? Also will autonomous vehicles affect road and urban design? Sort of a meeting halfway.

Regarding Google’s self-driving car effort…I am a Union officer at NASA Ames, and ~4 years ago Google tried to test out their fully-autonomous vehicles (golf cart-style vehicles capable of going up to 25 mph with only an “emergency stop” button for the passenger) everywhere on our facility. Our Union fought furiously, arguing that they needed to comply with human research protocols…as they were, in effect, using all employees at the facility as human test subjects. (And while 25 mph seems slow, it’s not so slow to a pedestrian or cyclist with little protection. Regarding human research protocols, see Tuskegee: “subjects” need to be fully aware of the research and risks and be able to opt out.)

Needless to say, they moved their operations to a BRAC’d military base and are using Google employees as test subjects (whether they comply with any sort of human research protocol is unknown to me.)

Nissan is now using Ames, and is following a much less “disruptive” approach; in fact they are collaborating, sharing their data with NASA. Something that Google refused to do.

The moral of the story is,

Don’t try to replace Union workers with robots!

Waymo (which is what became of Google) looks pretty good on paper, relatively speaking, but they’re not self-driving yet.

Since they haven’t had a fatality yet, you can only really look at their “disengage” rates, where a person takes over, and their miles/accident rate — bearing in mind the human override again.

Waymo gets something like 5,600 miles per human intervention that represents vehicular failure or would have caused an accident. (This is maybe a factor of 100x worse than the average human driver.) Their actual accident rate, even with the human driver, is around 2x higher than the unassisted average, although this is in San Francisco, and most of those accidents are due to other traffic participants. People seem to hit self-drivers a lot. (More on this later.)

Driving a self-driving car is hard.

It might affect urban design superficially, like having special parking spaces and very possibly an autonomous version of the HOV lane. But we obviously won’t have a fully autonomous system unless human drivers are banned, which in the US at least is about as likely as the libruls coming to take your guns. For better or worse our country bleeds car culture, and it’s not going anywhere.

A dumb guy with a chainsaw can do more harm than a dumb guy with a wood saw… same story.

To err is human, but to really foul things up you need a computer.

But what about a dumb guy with an axe versus a dumb guy with a chainsaw?

Nahh, the more interesting question is the “smart guy with a butterknife”.

An engineer with a metal file, and time.

Until small computers can process spatial information the same way and at the same rate as a human brain, these idiotic things have no business sharing the road. They simply cannot be trusted to drive like a human, and that is the bare minimum to being able to share the road with human piloted vehicles. If people want to ride in automated vehicles, stick to planes and trains.

The future is coming, you won’t be able to change or escape it. Sorry, but your mentality is on the wrong side of technological advancement. Please don’t write policy or be involved in the politics of this whatsoever.

Well the future also has killbots too, so don’t stand in the way of that either.

That might just be the most foolish sounding advice I’ve ever heard. The future has not yet been decided, chum.

I believe that we can all make a difference and help to shape a future where people aren’t mercilessly run down by automated cars with roughly the intelligence of an earthworm.

People aren’t being run down by autopilot cars on a regular basis, not yet at least. Don’t make it more convoluted than it really is. Besides, you have no real control over what the mass population decides to follow. We have enough dumbasses giving away their personal life story on Facebook, and when they get the option of a self-driving car they will take it. Technology has enabled lazy people to reach well beyond their means. Automotive manufacturers are working silently behind the scenes to make these cars as immune as possible to these same dumbasses. A fool and his money something something. There’s this movie that came out that predicts our future, and it has a lot to do with plants craving electrolytes and TV shows about kicking people’s nuts in.

Some interesting footage on autopilot and not-so-smart people: https://www.youtube.com/watch?v=jkzzvRfzigw

+1 I’m generally for new tech, but not this. They’re using the public at large as their guinea pigs. The ‘for the greater good’ argument and acceptance of some deaths is ridiculous. Everybody thinks this self-driving car thing is a nifty idea, until it’s your wife or kid that gets killed by one that didn’t make a judgement call your average teenager with a learners permit could have.

– A stretch comparison maybe, but let’s take another situation where deaths are a somewhat regular (and very tragic) occurrence: school shootings. Let’s say a ‘pretty good’ AI sentinel guard were designed that could incapacitate a shooter/threat. How long would this AI guard program last once it (sooner or later) flagged an innocent student as a threat by mistake and seriously injured (or worse) that student? I think the plug would be pulled on the system pretty quickly, likely across the whole country using it. Yet for some reason, seemingly because people think the idea of self-driving cars is great, we are treating (serious) software shortcomings that occasionally kill innocent bystanders, who did not agree to be part of the test, as acceptable.

Yes, you are right, we accept human error more easily than computer error. We accept an innocent victim of a policeman more easily than that of a computer. Perhaps because we cannot punish the computer if a judge decides it is guilty.

Not that I think AI sentinels are a good idea. A little more gun control would be much better. At the least, anyone who does not store his guns properly should lose the right to have them. Kids take their parents’ gun to school: the parents should lose their guns. Kids shoot their brothers/sisters: the parent/gun owner should lose the right to have one for the rest of his life. It is not acceptable that gun owners let them get into the wrong hands.

@thethethe: Everyone is entitled to their opinion and you do not know what the future holds. Who do you think you are to suggest that someone should give up their right to shaping the future.

Shape away, just don’t complain when it doesn’t go your way.

Why not? Do I have to give up my right to give my opinion if technology goes in a direction I don’t approve? What an asinine statement.

I’m sure glad I don’t know you in real life. I doubt I could stand being near you for more than 10 seconds.

@DainBramage: I have encountered you on HaD more than I’d like, as you tend to turn up at nearly every article to troll away any confidence in the issues of the article. You never seem to have much to offer aside from pedantic musings on how to attack people’s beliefs. I don’t imagine there are many people who take pride in knowing *you* in real life anyhow.

The wastebasket of history (and therefore of the future, since the future is just more history which hasn’t happened yet) is also filled with dead-end technologies which never caught on. You’re falling for survivorship bias if you think all buzzy technologies being dreamt up today are going to pan out as expected. Remember the lessons of fusion power / flying cars / robot butlers: some of these things will probably happen one day, in one form or another, but it might not be as revolutionary as you think and it might literally take centuries longer than it seems it should at the outset.

That feels a little naive. Humans are good at spatial processing but not perfect and rely a lot on subconscious processes or biological responses. Those aren’t uniform across all humans. They weren’t designed for travelling at speed either. Now if I said to you I want to trust my self-driving car to a program with a wide-angle camera recording at ~10fps that does a basic check for differences between frames, and this is the system to be used in emergencies, you’d call me crazy. That’s the equivalent of the system built into your eyeballs and everybody relies on it heavily while driving for kid-running-into-the-road situations.

The thing is that because all these systems are happening below your consciousness it’s impossible to record a human incident rate. 13 miles between having to grab the wheel seems incredibly good to me, I can barely make it 200 metres in the city on a bike without having to change direction, speed up or slow down due to a pedestrian stepping into the road or a car doing something weird.

Well, as the article states humans are on average FANTASTIC at driving. The number of miles per incident is nuts, considering how many drunks and morons we think are out there. Maybe people deserve a bit more credit than we pessimists dole out.

Can computers ever get good enough? Yeah, I’d certainly say so. But it might be a lot harder than it seems. Sometimes you get a very encouraging initial result with a new technology, but it’s that last mile that gets dicey. It’s going to be a PR and regulatory nightmare if accurate statistics come out showing that these AI are a hundredth as safe as human drivers, which is probably a realistic figure. We have very impressive systems out there today, and I definitely don’t wish to disrespect the amazing work done by developers, but this is a seriously complex and difficult problem to solve. And it will take some time. You think a normal development cycle has debugging and testing that never seems to end? This is QA hell right here.

Many automotive safety developments take several decades to properly implement. A lot of improvements we think of as modern have really been around for a hell of a long time, but it takes a while to work out enough kinks for a public beta. But everything thus far is orders of magnitude less complex than a self-driving car with ability and reliability comparable to even a sub-par human driver. Possible, yes, but likely much trickier than we realize.

Actually, human vision can see roughly 30Hz (full cycle) or 60Hz (half cycles, or frames); see Nyquist.

I am not claiming human vision during car driving will never be surpassed by machine vision during car driving, but let me point out an aspect where human vision surpasses machine vision as I believe it to be used in self-driving cars:

Our eyes are not only sensors but also actuators, we have muscles so our biological cameras can track an object in relative motion. This means we can eliminate motion blur for a target of interest.

Here is a test you can do:

* use xrandr or similar tool to find your screen’s DPI

* write a minimal vsync-ed application that renders a single word of text in a fine but legible font at a known DPI, can be paused, and can be programmed to move the text from the left side of the screen to the right and back by a programmable pixel shift per frame, i.e. setting it at 4 shifts the text four pixels to the right per frame, and when it reaches the edge it returns at the same speed.

* print out the same word of text at the same DPI and cut it out of the paper, trimming close to the text except for a strip of paper along the bottom so you can hold it without obscuring the text.

* start the application and pause it, hold the paper text just below the text on the screen, verify they have the same size and appearance

* continue the program and hold the piece of paper at arms length with the paper almost touching the screen, somewhat below the displayed text, following it along back and forth

* observe how you can read the piece of paper in your hand, but how the monitor gives motion blur
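The rendering side of this test depends on your graphics toolkit, but the motion rule in the second bullet is easy to pin down. Below is a minimal sketch of just that rule, assuming integer pixel positions; `text_positions` and its parameters are hypothetical names, and vsync and drawing are left out entirely.

```python
def text_positions(screen_width, text_width, shift_per_frame, n_frames):
    """Left x-coordinate of the text for each of n_frames frames.

    The text moves shift_per_frame pixels per frame and bounces between
    x = 0 and x = screen_width - text_width at the same speed.
    Rendering and vsync are deliberately not shown.
    """
    max_x = screen_width - text_width
    x, direction = 0, 1
    positions = []
    for _ in range(n_frames):
        positions.append(x)
        x += direction * shift_per_frame
        if x >= max_x:        # hit the right edge: clamp and turn around
            x, direction = max_x, -1
        elif x <= 0:          # hit the left edge: clamp and turn around
            x, direction = 0, 1
    return positions
```

On a sample-and-hold display each position is held for a whole frame while a tracking eye keeps moving, so the smear seen in the last step is roughly `shift_per_frame` pixels wide.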

During a single frame your eye and the piece of paper are at relative rest because you are tracking the piece of paper, but during that same frame your eye is not at rest relative to the displayed text, which is smeared out horizontally, degrading the high-resolution content of the text.

The same happens with cameras if we don’t control the lighting. Sure, we could set a shorter exposure time, but then, in order to see as crisply as oculomotor-equipped human vision, the self-driving car becomes night-blind much sooner.

Instead of rotating a camera one could rotate a mirror, but it would remove a sense of objective input, as now the AI needs to be trained to track important objects…

Exactly this! It has been scientifically proven humans can’t see above 30 frames per second, just ask any game console player!!!1

Eliminating motion blur is called “image stabilization” (IS) in camera technology. OIS, or optical IS, has been around for more than 10 years. In this system the lens is moved mechanically to compensate for motion blur, like our eyeballs do. I think it was already in video cameras (camcorders) using magnetic tape; they used some kind of motion sensor (accelerometer). That technology was a little more expensive than today’s smartphone cameras, though.

Today it is more often electronic (digital) IS. The camera takes a bunch of pictures with a short exposure time, and they are combined (added) after being individually shifted. This can be controlled by a motion sensor or by tracking objects in the individual frames. That way it is even possible to track several objects moving differently and get a sharp image. The software also calculates 3D information from subsequent video frames while the camera moves.
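The shift-and-add idea can be sketched in one dimension. This is a simplified illustration, not how any particular camera implements digital IS: it assumes the per-frame displacement of the target is already known (from a motion sensor or object tracking) and is a whole number of pixels. `shift_and_add` is a hypothetical name.

```python
def shift_and_add(frames, shifts):
    """Align short-exposure 1-D frames by integer shifts and average them.

    frames: equal-length lists of pixel intensities.
    shifts: displacement (pixels) of the target in each frame relative to
            the first frame, assumed known from a sensor or tracker.
    Pixels that would come from outside a frame are simply skipped.
    """
    width = len(frames[0])
    total = [0.0] * width
    count = [0] * width
    for frame, s in zip(frames, shifts):
        for i in range(width):
            j = i + s  # where reference pixel i landed in this frame
            if 0 <= j < width:
                total[i] += frame[j]
                count[i] += 1
    return [t / c if c else 0.0 for t, c in zip(total, count)]


frames = [[0, 9, 0, 0, 0],   # bright point at x = 1
          [0, 0, 9, 0, 0],   # ... moved to x = 2
          [0, 0, 0, 9, 0]]   # ... moved to x = 3
print(shift_and_add(frames, [0, 1, 2]))  # [0.0, 9.0, 0.0, 0.0, 0.0]
print(shift_and_add(frames, [0, 0, 0]))  # [0.0, 3.0, 3.0, 3.0, 0.0]
```

With the correct shifts the point stays sharp; adding the frames without shifting smears it over three pixels, which is the blur a single long exposure would show.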

So basically your example demonstrated, that the human eye can be inferior to modern image processing.

I respectfully disagree that image stabilization is equivalent in functionality to oculomotor vision.

Regarding mechanical IS:

Think about a scene with one sports player moving left and another moving right. Which sports player should be sharp? In order to compensate motion blur for a target of interest you must track the target so that it is relatively at rest with the pixel raster. Image stabilization does have its use: PROVIDED something else (human or AI) has identified the target and makes the camera ALMOST fully track it, IS can then analyze the previous frames (i.e. ignore low spatial frequencies, analyze the high-spatial-frequency content, and determine in which direction to mechanically shift the lens or a lighter mirror to keep their phase constant, since then the high spatial frequencies are at rest). It’s more like “snap to grid” for motion tracking. I did not say it was impossible; I only said I suspect none of this is used in car image sensors. The reason it can be used in human-directed cameras is that the user is expected to almost fully track the target of interest. Note how an accelerometer (while helpful to compensate for user motion) cannot determine on its own the speed of the target: suppose the camera is at rest filming a single sports player moving to the left; the relative speed between player and camera cannot be determined from the accelerometer.

Regarding digital IS (without wide angle camera gaze deflection):

Again, I am not saying such technology is useless, nor that it will never catch up with human vision. Consider the interval between output frames, say at 60Hz, and an object moving 30 pixels in that time. Suppose the IS is actually capturing 3 camera frames per output frame; then even the IS still has to deal with 30/3 = 10 pixels of blur. If the camera is actually running at 180Hz, can I please have my 120 other frames (with 3x lower blur)? None of this changes the darkness-sensitivity issue, though: as I mentioned, we can brute-force towards higher and higher frame rates, but the individual frames become darker and noisier, and at some point the IS will not be able to do its job.

Consider a 1920x1080 camera. Now move your finger across its field of view from left to right in one second, back to the left in one second, and back to the right in one second. That’s pretty slow, and if you tolerate at most 10 pixels of motion blur, 60Hz is not enough (1920/60 = 32 pixels of motion blur). If you want to register motion that is only twice as fast and still tolerate 10 pixels of motion blur, you need a frame rate of 6.4 × 60Hz = 384Hz. If for that last motion you in fact only tolerate 3 pixels of motion blur, you need a frame rate of 1280Hz! They would have to put a whole lot more or faster ADCs in the camera… and what camera interface would that be?
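The arithmetic in the last two comments reduces to one ratio: blur in pixels equals image-plane speed divided by frame rate. This small sketch reproduces the commenter’s numbers, assuming (as the comments do) that each exposure lasts the whole frame period, which is the worst case. The function names are hypothetical.

```python
def blur_pixels(speed_px_per_s, frame_rate_hz):
    """Motion blur in pixels if the exposure spans a whole frame period."""
    return speed_px_per_s / frame_rate_hz


def required_frame_rate(speed_px_per_s, max_blur_px):
    """Frame rate needed to keep motion blur at or below max_blur_px."""
    return speed_px_per_s / max_blur_px


slow = 1920        # finger crosses a 1920-pixel-wide frame in one second
fast = 2 * slow    # "only twice as fast"

print(blur_pixels(slow, 60))           # 32.0 pixels at 60Hz
print(required_frame_rate(fast, 10))   # 384.0 Hz for 10 px of blur
print(required_frame_rate(fast, 3))    # 1280.0 Hz for 3 px of blur
# Digital IS case: 30 px per 60Hz output frame, 3 captures per output frame
print(blur_pixels(30 * 60, 3 * 60))    # 10.0 pixels per capture
```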

There is no substitute for wide-angle camera/mirror deflection (and an intelligent, vigilant system directing this oculomotor system) if one insists on relatively crisp images without resorting to absurd frame rates (we can drive cars comparatively safely with our ~60Hz vision because we can prevent motion blur). It’s just sad that with all the clocked hours, and now accidents, we didn’t even record the human supervisors’ eye-tracking behaviour. Imagine an eye tracker and an extra camera which deflects to look in the same direction as the human, with tight DC coupling up to high frequency (notice how quickly we can ‘jump’ with our eyes). At least we’d have gathered data on what our brain deems interesting and the view we roughly had while tracking something.

So while I agree IS can be useful, the core problem is intelligent motion tracking, and adding wide angle gaze deflection, unless one is prepared to go the very high framerate route…

Something else I don’t understand regarding the Uber accident: there were supposedly 3 independent systems that should have prevented it (Volvo radar, Uber camera, Uber lidar). I understand fatal accidents are rare (in the sense that one needs enough sad examples to properly train the neural network or fix one of the 3 systems), but if a triple failure has occurred, then there should have been many, many double failures and even more single failures. Surely that is plenty of opportunity to notice a problem and fix it?
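The “many double failures before a triple failure” argument can be made concrete with a binomial model. This sketch assumes, hypothetically, that radar, camera and lidar each miss a given hazard independently with the same probability `p`; the value `p = 0.01` and the one million hazard encounters are purely illustrative, not measured figures.

```python
from math import comb


def failure_counts(p, n_events, n_systems=3):
    """Expected number of hazard events in which exactly k systems fail.

    Assumes (hypothetically) that every system misses a hazard
    independently with the same probability p.
    """
    return {k: n_events * comb(n_systems, k) * p**k * (1 - p)**(n_systems - k)
            for k in range(n_systems + 1)}


counts = failure_counts(p=0.01, n_events=1_000_000)
for k in (1, 2, 3):
    print(k, round(counts[k]))
```

With these made-up numbers there are roughly 29,400 single failures and about 300 double failures for every expected triple failure. The argument leans entirely on independence: a common-mode fault, such as one piece of software acting on all three sensors’ outputs, breaks the model.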

There is absolutely no point whatsoever in having self-driving cars if you still need to ‘keep an eye on it’ and respond when needed. The only way this will work is if you are watching all the time, so your brain is still in gear, which totally defeats the purpose. Nobody will go for these cars if they are incapable of driving without human input. At the moment they apparently can’t, so I think it’s game over for them, at least for a while. The legal system just won’t have a way of working out who was at fault. Another case of throwing money at something not guaranteeing success.

I work in a very different industry, but when you introduce “smarts” into the system, people suddenly get stupid. They rely on the technology to do the work for them, and the thinking too. Suddenly, they are unable to troubleshoot something that they would have to troubleshoot had the “smarts” not been there in the first place. When people’s lives are on the line, or the road, I don’t trust any technology but the human brain. Even at that, I’m skeptical.

Wider community – same problem. As we offer more options, people become sheeple

Yes, I was in the first generation to have electronic calculators in the classroom.

Our teacher warned us that by the end of the quarter, he’d ask us “What’s 30 divided by 2?” and we’d reach for our calculators. “He’s right you know!”

Slide rules spoiled us. :-D Miss my book of tables.

Books with tables? In my day we laboriously done the calculations the long way around using a pointed stick to make scratches in the dirt.

It’s a very old argument. Socrates thought that widespread literacy and written texts would ruin people’s ability to remember things. In some ways it did, considering the kind of oral history average people could memorize at that time. But in the long run the augmentation of technology was worth the sacrifice.

We’re a tool-using species. It’s obvious by looking at our bodies that we have sacrificed most of the talents nature initially gave us. We can’t live through winter without technology–truly pathetic for a mammal. But (until climate change kills us all) the advantages we have gained have been worth the trade.

“Climate change” will surely never kill all of us. Even if it gets warmer, some regions will need less energy for heating, and perhaps others more energy for air conditioning. Perhaps some areas will become uninhabitable while others become habitable.

The story of Air France Flight 447 is relevant here. Autopilots are easier to build for aircraft because they don’t have to avoid pedestrians, other traffic, roadworks, &c., and the aircraft in this case essentially flew itself most of the time. When it dropped out of autopilot after three and a half hours of peace and quiet the flight crew didn’t really understand what was going on, reacted in the wrong way, and ultimately crashed it.

https://en.wikipedia.org/wiki/Air_France_Flight_447

That’s the big challenge – how do you render the system “safe” in the event of a failure.

In the case of AF447 – how do you render the system “safe” after a sensor failure?

In the case of an autonomous vehicle on the road, how do you render the system “safe” in the case of a guidance or sensor system failure? You can’t just slam on the brakes as hard as you can, that will likely cause an even more dangerous situation. How do you safely pull to the side of the road when your autonomy system is faulted?

One can survive a single sensor failure by leaning on others. For example, the artificial horizon still gives a good approximation of AoA for a given thrust setting, the altimeter still gives a good approximation of power required (if the altitude is decreasing while at an apparent angle to horizontal one needs more power; the AF447 cut power to idle and pulled the nose high, so both instruments gave clear indication the plane was going to crash.) If all cars were autonomous there would be little risk to stopping anywhere on the road; cars would simply flow around it just like any other obstacle.
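The cross-check described above, inferring trouble from the attitude and altitude instruments when airspeed is unreliable, can be written as a simple plausibility rule. This is a hypothetical sketch: `stall_suspected` is an invented name, and the thresholds are illustrative, not taken from any real avionics system.

```python
def stall_suspected(pitch_deg, vertical_speed_fpm,
                    pitch_threshold_deg=10.0, descent_threshold_fpm=-1000.0):
    """Flag a likely stall without using airspeed.

    Hypothetical rule of thumb: a nose-high attitude (artificial horizon)
    combined with a sustained descent (altimeter / vertical speed) means
    the wing is not producing the lift the attitude suggests.
    Thresholds are illustrative only.
    """
    nose_high = pitch_deg > pitch_threshold_deg
    descending = vertical_speed_fpm < descent_threshold_fpm
    return nose_high and descending


print(stall_suspected(15.0, -3000.0))  # nose up but sinking fast: True
print(stall_suspected(15.0, 500.0))    # nose up and climbing: False
```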

I share your first sentence.

But why should it be “game over”? You write yourself: “At the moment they apparently can’t”. I am sure development will continue, and the money “thrown” at them is not lost. Btw, “guaranteeing success” is very rare. Often things are promising, but not guaranteed.

I used to do quite a bit of “autopilot” driving about 25 years ago. A pair of horses can be depended upon to hold the correct direction with a minimum of input on very lightly traveled roadways. One still needs to remain aware of what is happening and, fearing the consequences of dropping the reins, to have them tied with string to the carriage.

Every self-driving motor car, even the slightly autonomous ones mentioned in the article, needs a bumper sticker reading MURPHY IS MY CO-PILOT.

The Quiet Man, the film with John Wayne – the horses knew where to stop.

B^)

Then again horses have been known to panic and get out of control. Only goes to show nothing is perfect…ever.

My then-octogenarian grandfather once pointed out to his then-teenage grandsons one of the advantages to horse-drawn transport: “Where the horse knows the way, you’ve got both hands free for your date!”

Also, a horse and carriage will still work after a Carrington event. So will a car, since the wires in it are shielded and generally too short to have high currents/voltages induced in them. I can’t say the same about an autonomous one, though, since some implementations depend on the cloud and GPS satellites, which won’t fare so well.

Horses don’t come with delicious insulation to eat.

No, the insulation is not that delicious. But much of the stuff under it :-)

I’m normally all for the ‘damn the torpedoes’ approach to accepting new technology. A new technology may have problems starting out, but it isn’t going to get any better if it isn’t being put through its paces in actual use, right?

Where would we be if our ancestors were slower to accept new technology? Starving whenever it doesn’t rain? Dying of small pox and other preventable diseases? Where could we have been had they been quicker to accept new ideas and new technology? Singularity 5,000 years ago maybe?

But… I must be honest. I don’t think I could properly operate a “self driving” car. I’d love such a thing if I truly did not have to babysit it. I could use that commute time napping, studying technology, reading HaD articles! Or.. I can just drive myself. I’ve been doing it just fine for a long time. But… if a self-driving car seems to be doing a good job.. I’d be so tempted to just let it go. Not to mention, even if there were no distractions it would be boring! To just watch the car drive, just in case it needs me… for mile after mile… after zzzzzzzzzzzzzzzzzzzzzzz………CRASH!

I don’t think I can do that!

The future’s OK as long as it’s someone else’s sacrifice. ;-)

Self driving cars are, in a way, a solution to a problem that should not exist.

Just imagine railroads instead of streets: autopilots would be much easier because of the greatly reduced range of possible situations and far more options for automation and sensors on the track.

It could be a gradual process:

1. increase spending on railroads.

2. create ‘parking spaces’ at train stations.

3. offer automatic mini trains for rent and purchase.

4. use inner-city public transportation to get to your mini train.

5. get connected to the net at your skyscraper.

6. and so on….

A city/urban design that allows vehicles and people to interact. You’ll notice in some SF movies there’s a clean separation between the two. At worst vehicles take out other vehicles which are better protected.

Public transportation ! the car lobby won’t tolerate such a silly idea … And all the taxes would evaporate !

It is not only a question of lobbying or taxes but also of personal freedom.

You cannot build rails to every building. And your system requires changing the means of transportation very often, including moving any stuff you bring with you. I prefer my car, where I put my stuff in at the supermarket and take it out at home. And I leave my CDs in, or an extra jacket I might need in the evening. And the car can go where there are no rails, even out of the city center with its skyscrapers.

So your scenario is a true dystopia.

Absolutely true.

What are the stats like for aircraft autopilots? How often do the pilots have to intervene during a typical long flight?

If, by ‘intervene’, you mean correct for an autopilot fault, rarely in the newer aircraft. But definitely not zero. Uncommanded disconnects happen. But we’re not 3 feet from another vehicle so it’s usually not a big deal, AF447 being a major exception, and that being caused by a completely inappropriate response to the disconnect.

An airliner’s autopilot, though, has a limited range of responsibilities. Stay on altitude, fly the programmed route, land in visibility too low for pilots to see. It’s not responsible for weather or traffic avoidance. It doesn’t hold a tight formation with another vehicle (like a car must), and doesn’t have to anticipate pedestrians, deer, etc. It’s an idiot savant: it does a basic job very well but doesn’t have the intelligence that a car autopilot would need.

This. Airplane autopilots are also understandable by pilots, and they’re trained in their edge cases. Because they can comprehend them, they can _use_ them, essentially as tools, to help with the whole looking-at-too-many-dials-in-the-fog situation, for instance.

There is a very real division between human-augmenting tech and human-replacing tech in the self-driving world. Both in the companies’ ideals, and in the way they design. And they are very different.

Tesla’s autopilot is human-augmenting on paper, but people seem to treat it as human-replacing. Some of this may be because they don’t understand the system. Some of it may be Tesla’s marketing. Anyway, the result is clear enough.

– This – If the technology REQUIRES human supervision, the supervising human should be trained in the technology and understand its limits and edge cases, as a pilot would in the above case. Until (if ever, in our lifetimes) cars get to full-autonomous capabilities, I don’t think it should be out of the question to require training and testing of drivers to be certified to operate any sort of ‘autopilot’ mode. These cars are so connected that authenticating a driver for autopilot mode shouldn’t be a big deal, with some sort of facial recognition and/or other credentials. For that matter, maybe the tech should be honed to monitor whether the driver is actually being attentive while in autopilot mode, and if they are not, throw out a warning that autopilot mode will disengage momentarily if they do not return their attention to the road. If the tech can monitor the road, monitoring the driver’s attention to the road should be easy enough. For that matter, ‘black box’ recording from a driver-facing camera should be mandatory with this tech, for accident-liability reasons. Someone adopting this tech should fully understand it, and take liability for what may happen if they abuse it (running someone over while not paying attention and ready to take over, for example).
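The warn-then-disengage behaviour proposed above is essentially a timer on driver inattention. A minimal sketch, assuming some camera-based detector (not shown) reports at each sample whether the driver’s eyes are on the road; the class name and the 3 s and 8 s thresholds are hypothetical.

```python
class AttentionMonitor:
    """Escalating response to driver inattention (hypothetical thresholds)."""

    def __init__(self, warn_after_s=3.0, disengage_after_s=8.0):
        self.warn_after_s = warn_after_s
        self.disengage_after_s = disengage_after_s
        self.inattentive_s = 0.0

    def update(self, eyes_on_road, dt_s):
        """Feed one detector sample; return 'ok', 'warn' or 'disengage'."""
        if eyes_on_road:
            self.inattentive_s = 0.0   # attention restored: reset the timer
        else:
            self.inattentive_s += dt_s
        if self.inattentive_s >= self.disengage_after_s:
            return "disengage"
        if self.inattentive_s >= self.warn_after_s:
            return "warn"
        return "ok"


monitor = AttentionMonitor()
for second in range(1, 10):
    print(second, monitor.update(eyes_on_road=False, dt_s=1.0))
```

What “disengage” should physically do is left open here; handing control back to a driver who is not looking is exactly the problem this thread keeps running into.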

I know of a system where the driver is monitored by an extra (hidden) camera to make sure he doesn’t close his eyes or the like. Otherwise the assistance system (it’s not called “autopilot”) will disengage after some warnings. I don’t know if, or for how long, the camera picture is recorded.

Aircraft have a much larger operational zone, and have much less congestion to deal with.

+1

The Question that I’ve not seen addressed is:

What do you do about suicides?

The video of the Uber crash shows someone who looked to have deliberately timed their travel so as to get hit.

But NOT blurring the uploaded copies of the video would make it possible to form a better-informed opinion.

Assuming they are carrying a phone (or other gadget), I’d like to see scrutiny of cell phone usage of any pedestrian that gets hit by a vehicle.

This should be given as much attention as any distractions that the driver is held accountable for.

There has been more than one case of pedestrian vs. non-self-driving vehicle in this city (no pedestrians were in a crosswalk zone), and the contribution/responsibility of the pedestrian seems to get swept under the rug.

I’m not a fan of self-drivers, but if we’re going to judge them for roadworthiness, the whole set of facts needs to be available in order to be fair about it.

Not just a one sided/partial fact set “witch hunt” against the cars.

P.S. The news videos show irrigation sprinklers, in use, at the crash scene.

I wish we had info as to whether or not they were spraying at the actual time of the crash.

I’ve read that water mist can confound the car’s sensors. Hence my reason for wanting to know about the sprinkler timing.

Random mist in the air will be a difficult thing to program for.

Gets back to other comments about the processing abilities of the vehicle.

What an Achilles’ heel that would be. Random wheel spray, an overheated radiator, or just the vagaries of turbulence generated by car movement in sprinkler mist, etc…

The sprinklers you mentioned are indeed an interesting point to that crash. I would be interested as well.

I am however pretty impressed with the progress thus far. Still some ironing out to do, but they are getting closer to the goal.

Oh I just thought of a hilarious new scenario though of ‘bricking’ cars thru bad updates lol.

“This guy will take the heat. ”

That’s a guy????

No, that’s a really bad holographic projection over an alien life form that looks worse than an octopus does to some small children.

Besides, the Google-designed self-driving cars are safer because they drive slower. Uber is making itself worse and abusing its stolen technology. As for Tesla? Cruise control still needs some intervention.

The driver has been reported to be a woman, Rafaela Vasquez.

Hackaday editor, please note.

She’s trans. That may be why her felonies did not come up in the background check. They were under first name Raphel, and she applied as Raphela.

http://abc7.com/driver-in-uber-self-driving-car-fatal-crash-was-convicted-felon/3247898/

20 year old felonies have nothing to do with the crash and wouldn’t have mattered in a background check. The discussion about old felony convictions is just a distraction.

Fixed. Source I read said “Raphael” and I just assumed. My bad.

I stick by my claim that it’s very likely that the system failed this person, who will probably be reliving that night over and over. Sorry.

The idea of self-driving vehicles is very interesting to me. Of course I would love to be chauffeured around and not worry about doing the driving, but to me the important issue self-driving is trying to solve is lapses in human attention while driving. These could be distraction (cellphones, etc.), falling asleep at the wheel, or driving while intoxicated. It seems an easy logical leap to think a properly designed and implemented technological solution could improve upon these issues.

In reading Elliot’s excellent article here, one thing I hadn’t considered about the statistics pops out. I wonder how many near-misses turned out okay because of the reaction of human drivers? This is an interesting metric because it is nearly impossible to measure with human drivers, but definitely possible with autonomous systems (recording times that automatic braking avoided an incident, etc.).
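As a rough illustration of how an autonomous system could log such near-misses, one common proxy (an assumption here, not something the article or any manufacturer describes) is time-to-collision (TTC): the gap to the object ahead divided by the closing speed. The 1.5 s threshold and the function names below are hypothetical, and each (gap, closing speed) sample is assumed to come from the car's own sensors.

```python
import math
from typing import Iterable, Tuple


def time_to_collision(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact if nothing changes; infinite when not closing."""
    if closing_speed_mps <= 0:
        return math.inf
    return gap_m / closing_speed_mps


def count_near_misses(samples: Iterable[Tuple[float, float]],
                      ttc_threshold_s: float = 1.5) -> int:
    """Count distinct episodes in which TTC drops below the threshold.

    Each sample is (gap in meters, closing speed in m/s). Consecutive
    below-threshold samples are treated as one near-miss (hypothetical rule).
    """
    count = 0
    in_episode = False
    for gap_m, closing_mps in samples:
        below = time_to_collision(gap_m, closing_mps) < ttc_threshold_s
        if below and not in_episode:
            count += 1  # a new near-miss episode starts here
        in_episode = below
    return count


log = [(30.0, 5.0), (10.0, 10.0), (8.0, 10.0), (30.0, 0.0), (5.0, 5.0)]
print(count_near_misses(log))  # → 2
```

Counting episodes rather than individual samples keeps one long close call from being tallied many times over.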

If humans have a habit of being distracted while driving, giving them a feature called “auto-pilot” and expecting them to be equally or more alert than before is just silly. Of course the distraction problem will get worse. This is especially so because with each passing minute of driving with the machine in control, we get lulled into comfort because our experience is that we haven’t had a bad outcome.

I suppose the real interesting data would come in having a driver alertness system. Instead of watching the road, the machine watches the driver to make sure they’re paying attention. That does seem very Big-Brother-esque, doesn’t it?

The Super Cruise system that GM is rolling out tracks the driver and will stop the car if needed.

Mike nails it here:

“If humans have a habit of being distracted while driving, giving them a feature called “auto-pilot” and expecting them to be equally or more alert than before is just silly.”

When ABS came out, insurance companies offered lower rates to drivers that had it. Later they dropped the discounts because drivers were expecting the car to compensate for their bad judgement and accidents resulted.

It is similar with four-wheel drive: people expect to be able to drive in lousy weather because their car has “super powers”.

With 4×4 and AWD, every winter I see people sliding out of control. The car may get going better than a two-wheel-drive car, but it often can’t stop any better, and SUVs in particular often need more time to stop.

As for ABS, I wonder how many cars on the road have a nonfunctional system.

Though this is partly the manufacturers’ fault for not making the fault codes visible to a standard code reader.

Human-augmenting vs. human-replacing tech.

Many cars have lane-hold features where they vibrate the wheel or beep when you drift. That’s half of your battle already, with nothing big-brothery at all.

I would buy a car that offered a good range of human-augmenting techs over one that offered to drive for me.

Because when I don’t want to drive, I just take the darn train. It’s got a safety record that none of the self-drivers will attain in the next 50 years. (I’ll bet anyone a beer. In the café car.)

Bingo. 1000% this.

Great article Elliot, refreshing to see the research and thought put into my confirmation bias.

Good piece. Just one small note: double-check the identity of the Uber driver and perhaps change the caption of the picture. I believe I read in the news that “This guy” is actually “This lady”.

Not every woman is a lady.

Got it. Thanks.

Unfortunately, autonomous driving is kind of “all or nothing”: either the driver must be constantly involved (as in actively performing part of the driving), or they must be completely divorced from the driving process. It is unreasonable to expect humans to be constantly attentive while not driving 99% of the time. Humans are simply incapable of maintaining high alertness, and of swapping to active control on demand, after that much inactivity.

And you need the context …

Who exactly wants these death traps anyway? Forced consumerism is getting very annoying.

> hands off the steering wheel for six seconds prior to the crash

That DAY, not directly before the crash. Bravo, Elliot, for swallowing Tesla PR.

https://www.youtube.com/watch?v=6QCF8tVqM3I

Autopilot? More like glorified lane-following cruise control.

Are you sure? Here is the full quote of the sole source of info (Tesla blog) I could find on this:

“The driver had received several visual and one audible hands-on warning earlier in the drive and the driver’s hands were not detected on the wheel for six seconds prior to the collision.”

It certainly does suggest that the driver had a warning previously, which I did not “fall for”.

But it also really reads like the driver’s hands were not detected on the wheel for six seconds prior to the collision. AFAIK, you get warned after seven seconds. That’s a second late, no?

But the whole point is that the driver became over-reliant on the system, and the system failed. It doesn’t make it any less horrible that the system killed the passenger, but in terms of strict legal liability, I think Tesla would be very careful about the timing.

If there’s more to the story, and you know it, source me!

Tesla has no sensors to detect “hands off the steering wheel for six seconds”. It has a motor in the wheel and measures torque while jiggling it a bit, so all it can measure is someone acting on the wheel with opposing force, _not_ hands on the wheel. If you ask Tesla owners, they will tell you it’s pretty easy to get warnings while gripping the wheel.

Tesla told you “our sensor couldn’t detect something”, and you immediately jumped to “driver had hands off”, just because of “warning earlier in the drive”.
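To make that distinction concrete, here is a minimal sketch of torque-based hands-on detection under assumed numbers: the 0.3 N·m threshold is hypothetical, the 7-second warning delay is the figure quoted earlier in this thread (not confirmed by Tesla), and `HandsOnMonitor` is an illustrative name, not Tesla’s code. The point it shows is that a relaxed grip applying almost no torque looks exactly like no hands at all.

```python
from dataclasses import dataclass, field


@dataclass
class HandsOnMonitor:
    """Hypothetical torque-based hands-on detector (not Tesla's implementation)."""
    torque_threshold_nm: float = 0.3  # assumed torque needed to count as "hands on"
    warn_after_s: float = 7.0         # delay quoted in the thread, unverified
    seconds_without_torque: float = field(default=0.0, init=False)

    def update(self, steering_torque_nm: float, dt_s: float) -> bool:
        """Feed one torque sample; return True if a hands-on warning is due."""
        if abs(steering_torque_nm) >= self.torque_threshold_nm:
            # Enough counter-torque: the sensor "sees" hands, so reset the timer.
            self.seconds_without_torque = 0.0
        else:
            # A light or resting grip is indistinguishable from no hands.
            self.seconds_without_torque += dt_s
        return self.seconds_without_torque >= self.warn_after_s


monitor = HandsOnMonitor()
light_grip = [monitor.update(0.1, 0.5) for _ in range(14)]
print(light_grip.index(True))  # → 13 (the 14th sample, 7.0 s in)
```

Under these assumptions, a light 0.1 N·m grip sampled every 0.5 s triggers a warning after 14 samples, even though the hands were on the wheel the whole time.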

That is just crazy. Why would you even want this kind of half-assed autopilot? So you can sit and twiddle with your phone even more? You still have to do all the hard parts manually. If you’re already sitting there, hands on the wheel, giving the computer a side-eye in case it tries to run into the rails–why not just drive?

Might be nifty for long-haul truckers as a backup system, though. They already have systems that screech at you if you start to veer out of your lane. Would be nice to have one that also tries to keep you on course until you come back to your senses. That might already exist.

I feel true autonomy would not be possible without doing something like burying magnets in the road.

Why this solution? It’s simple and robust, and tampering with it would require someone to go through the effort and risk of digging them up.

Other solutions, such as RF transmitters, would be too easy to tamper with.

Lots of disagreement on self-driving cars. The one area that we can agree on is using technology to assist us. If a car can prevent us from drifting off the road, that would be a good thing. One thing that I would like to see in all cars is a system like the one Cadillac has. It monitors the driver to make sure you are paying attention, and if you are not, it will pull over. It needs to be extended to look for inattention due to cell phone use and for signs that the driver is drunk. If this feature were on all the time, it would save countless lives.

” If this feature was on all the time it would save countless lives.”

And Hackaday would be loaded with projects on how to defeat Big Brother’s monitoring of our lives.

True. It would be disabled by many people, with DRM or hardware lockouts cracked almost immediately after release. Some cases would even be valid–right to repair, unique applications, modifications, etc. plus a hefty draft of simple paranoia and stubbornness.

But still, a percentage of the systems would remain intact and work as intended, leading to a corresponding percentage of safety gains for the public.

DRM makes everything it touches worse and less reliable.

On something as complex as an autonomous car adding another layer of software to go wrong could be disastrous.

These are just the first of what will be a long salvo of errors that self-driving cars make. The problem is they can only deal with what they’ve seen in their deep learning and not anything that comes up unexpectedly. Human drivers, on the other hand, are incredibly adept (most of the time) at dealing with new situations.

Do something totally unexpected to any self-driving car, like letting the air out of one tire or putting a boot on it. Or strap a giant inflatable Snoopy to the hood. The car will have no idea what to do. That’s just fun and games, but when the car sees a ball bouncing across the street, decides that the ball isn’t an issue, and drives right over the child, that’s when reality will sink in.

You can argue statistics and maybe overall they will be safer, but when the bar is set at “does the car do what a reasonably competent driver would do” for every situation then we’ll see a lot of failings in these systems (and a lot of lawsuits).

I think that in the end we will actually need a general intelligence to create a truly self-driving car.

… then the car mistakes the ball for a tumbleweed and still hits the kid, or, vice versa, causes a crash on the open road in the countryside…

I think there’s also reason to think of opaque machine-learning algorithms as the ultimate in security by obscurity–so obscure even the engineers don’t know exactly how it works. But somewhere somebody will figure out a vulnerability, an exploit behind the abstraction layer. Maybe through dumb luck, maybe sophisticated statistical analysis. Maybe there’s a quirk left in the neural network, e.g. a certain visual input which drives the car nuts.

There’s lots of noise and unintentional behavior left over in trained neural networks. It’s similar to how most of our iteratively-evolved genetic code is junk that does nothing. The remaining portion works and makes us do what we need to do, but sometimes the junk rears its ugly head and cancer happens. This is code made by random mutation selected by trial and error, so of course it’s not the most efficient and succinct code, pruned to an eloquent and poetic simplicity. It’s the opposite of that. A pile of emergent noise so daunting that the way it achieves specific goals is not obvious and requires ages of exploratory research.

I don’t think that it’s going to spontaneously develop sapience or anything, but somewhere in those inscrutable terabytes of machine learning are some glitchy problems which we’ll have to face when the proper stimulus brings them forth. Security by obscurity only works for a little while. It always gets cracked given enough users and time.

I hadn’t really thought about deliberate attacks. It wouldn’t be hard to put a series of white LEDs on a road that cause the car to think the lane turns sharply right when it doesn’t.

I’d love to have a self-driving system that actually extends my “sensors” with information my eyes and ears cannot gather or process: IR sight, distance information, 3D environment sensing, proximity sensors, all the cool stuff. But I don’t really like the idea of having just a “good enough” version of image recognition from the front-facing wide-angle camera.

The poor soul who got hit by the Uber car should have been picked up by all the other sensors, even on a pitch-dark night without any lights on the car or on the street, long before she entered the camera’s field of view. She even had her bike with her, most probably with a bunch of metal parts. For God’s sake, the perfect obstacle to detect!

Badly written code that locked up the OS in the critical seconds could be a plausible explanation for the accident. Looking at the footage, no one could have reacted manually to avoid the accident.

I can’t get one thing out of my head: it’s business.

And business is ultimately about profits. So besides practicality, reliability, and safety, you have an additional requirement: low(er) costs. I can totally understand that a very expensive product is a deal breaker. But looking at the Uber footage, I can’t stop thinking that someone is trying to optimize things here: getting rid of LIDAR and replacing its inputs with information extracted from camera images. While computer vision is making great progress, it works totally differently from how our brain does. We have to rely mostly on our eyes alone, but at this stage I wouldn’t let autonomous cars do the same.

And indeed there is research and development at several startups around the globe on driving a car using camera images alone.

Your first point reminds me of a gadget I’ve been fiddling with every now and then, though it’s mostly been put on the backburner.

I wanted to put a HUD in a motorcycle helmet (the kind that reflects off a transparent material, of course. It must fail in a way that will leave the rider’s senses unimpaired) and the HUD would display data from a lidar mounted on top of the user’s head. Perhaps the data would be displayed as a circle around the periphery of your vision, where closer objects would cause the circle’s perimeter to grow brighter or bend inward, whatever UI was most intuitive and easy to read. Perhaps a combo, where the distance to the perimeter is absolute distance and brightness/color is the rate of change, so incoming objects would be apparent before they get too close.
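The display mapping described above could be sketched in a few lines, assuming the lidar returns one range reading per bearing on each scan. The 20 m maximum range, the 10 m/s closing speed for full brightness, and the function name `hud_ring` are all hypothetical choices for illustration, not part of any existing product.

```python
from typing import List, Sequence, Tuple


def hud_ring(prev_ranges_m: Sequence[float],
             curr_ranges_m: Sequence[float],
             dt_s: float,
             max_range_m: float = 20.0,
             full_bright_closing_mps: float = 10.0) -> List[Tuple[float, float]]:
    """Map one lidar scan to (radius_fraction, brightness) per bearing.

    radius_fraction: 1.0 puts the ring at the edge of vision (nothing near);
    smaller values bend the ring inward toward a close object.
    brightness: 0.0 for static or receding objects, rising to 1.0 as the
    closing speed reaches full_bright_closing_mps.
    """
    ring = []
    for prev, curr in zip(prev_ranges_m, curr_ranges_m):
        radius = min(curr / max_range_m, 1.0)
        closing_mps = (prev - curr) / dt_s  # positive when the object approaches
        brightness = max(0.0, min(closing_mps / full_bright_closing_mps, 1.0))
        ring.append((radius, brightness))
    return ring


# Three bearings: nothing nearby, a car closing at 5 m/s, a car pulling away.
print(hud_ring([25.0, 10.0, 5.0], [25.0, 5.0, 10.0], dt_s=1.0))
# → [(1.0, 0.0), (0.25, 0.5), (0.5, 0.0)]
```

Keeping distance and closing speed on separate visual channels means a fast-approaching tailgater lights up while still far away, before the ring has bent inward much.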

In the end I’d like to have a system which would give you distance and speed information from all angles, such as a tailgater or somebody in the lane next to you for an extended amount of time. Or someone maniacally speeding up from behind. A person coming from the side about to run a red light, not slowing down like the rest. I always check mirrors and over the shoulder when I change lanes, but I’ve learned over fifteen years of riding that letting somebody stay close to you for any period of time gets progressively more risky. Also, in the event of a sudden emergency ahead of you, it’s handy to have plenty of info about escape routes in case there’s not enough time to check a blind spot.

Of course all this would be handy for cars as well. Project it on the windshield and put the lidar on top of the cab. There are some surprisingly affordable lidar units out there, but you have to hack and dig for them. I was looking at one from a now-defunct robot vacuum. Most robot vacuums cheap out and don’t have it, but there’s a brand that shows up on eBay broken for parts, and usually the lidar still works. Look up Neato vacuum lidar.