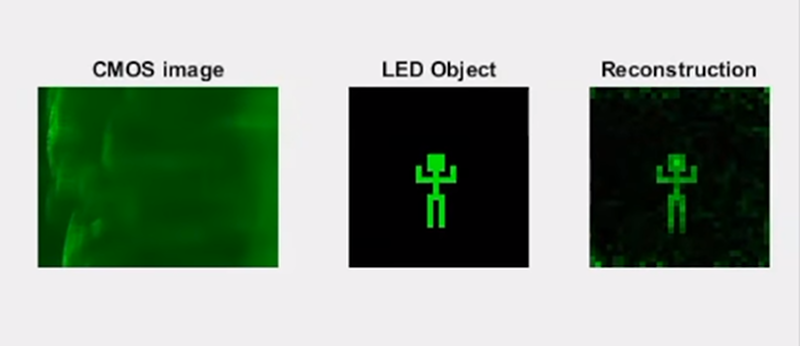

A normal camera uses a lens to bend light so that it hits a sensor. A pinhole camera doesn’t have a lens, but the tiny hole serves the same function. Now two researchers from the University of Utah have used software to recreate images from scattered, unfocused light. The quality isn’t great, but there’s no lens, not even a pinhole, involved. You can see a video below.

The camera has a sensor on the edge of a transparent window. It could resolve 0.1 line-pairs/mm at a distance of 150 mm and had a depth of field of about 10 mm. This may seem like a solution in search of a problem, but think about applications where a camera could see through a windshield or a pair of glasses without having a conventional camera in the way.

The only thing special about the window is that all the edges except the one with the sensor have reflective tape applied and the remaining edge is roughed up. The window used was a piece of Plexiglass with three edges heat annealed and the fourth edge sandpapered. The sensor on that fourth edge picks up a seemingly random set of points.

This isn’t the only lensless camera in the works. Caltech, Rice University, Hitachi, Microsoft, and others all have something in mind, although they are generally more obtrusive. The paper discusses some of these and while they do work without lenses, they generally need something more than just a sheet of clear material between you and the scene.

The images aren’t wonderful but, then again, neither were the ones from the first digital camera. They still should be sufficient for many computer vision applications. If you’d rather play with pinholes, they work better than you might think.

So literal magic?

“Any sufficiently advanced technology is indistinguishable from magic” Arthur C Clarke

nope, it’s mathemagical. ;)

Very promising stuff! Thanks for posting.

It’s not really “no lens” … it’s “a really funny shaped lens”.

I presume this works best with the object at 90 degrees to the pane. At an angle, the image will be distorted. What about the other way around, with a dot-matrix LED display on the edge? That would also be useful for displays in glasses and HUD-type displays.

Looks to me like they’re basically using the pane of glass/acrylic as a lightpipe, and are taking advantage of total internal reflection to guide light to the sensor. This should cause aliasing with some spatial period on one axis, and that should also mean that performance will be unusable in any situation that isn’t light-on-mostly-dark.

Obviously you can add shrouding on the end, where light is supposed to enter, but now you’re recapitulating a pit eye.

Bingo. “really funny shaped lens”

The whole window, polished edges, reflective tape, etc. are an unnecessary distraction. The video even shows the window with no reflective tape on the edges.

The whole idea is that the scattering surface in proximity to the image sensor *is* the (very complicated) lens. The paper describes a complicated “lens” calibration procedure where the scatter pattern associated with each and every possible source point is recorded.

When an image pattern is shown to the camera, consisting of several of those previously-calibrated points, the image collected is the sum of all those previously-calibrated scatter patterns. The reconstruction process simply decides which of the previously-calibrated points are required to best fit the recorded scatter pattern. Once you get a few dozen light points simultaneously contributing to the scatter pattern, you require exceptionally good calibration and signal-to-noise ratio to extract the source points correctly.

It would make a nice undergraduate lab exercise.

As a practical camera, I have reservations, but I can imagine some niche applications where this technique could be handy.
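The calibrate-then-fit idea described in the comment above can be sketched in a few lines of NumPy. This is only an illustration under loud assumptions: a random matrix stands in for the measured calibration data, the fit is plain ridge-regularised least squares (the article doesn't say which solver the paper uses), and every name here (`calibrate`, `capture`, `reconstruct`) is hypothetical.

```python
import numpy as np

def calibrate(n_sources, n_pixels, rng):
    # Stand-in for the real calibration step (assumption): in the lab you
    # would light one source point at a time and record the sensor's
    # scatter pattern. Each column is the pattern for one source point.
    return rng.random((n_pixels, n_sources))

def capture(patterns, scene, noise_std, rng):
    # The sensor reading is the sum of the calibrated scatter patterns,
    # weighted by how bright each source point is, plus sensor noise.
    noise = noise_std * rng.standard_normal(patterns.shape[0])
    return patterns @ scene + noise

def reconstruct(patterns, reading, ridge=1e-3):
    # Ridge-regularised least squares: find the source brightnesses x that
    # minimise ||patterns @ x - reading||^2 + ridge * ||x||^2.
    n_sources = patterns.shape[1]
    gram = patterns.T @ patterns + ridge * np.eye(n_sources)
    x = np.linalg.solve(gram, patterns.T @ reading)
    return np.clip(x, 0.0, None)  # brightness cannot be negative

rng = np.random.default_rng(0)
n_sources, n_pixels = 64, 400        # e.g. an 8x8 grid of source points
patterns = calibrate(n_sources, n_pixels, rng)

scene = np.zeros(n_sources)
scene[[3, 20, 41]] = 1.0             # three lit points in the scene

reading = capture(patterns, scene, noise_std=0.01, rng=rng)
estimate = reconstruct(patterns, reading)
lit = np.flatnonzero(estimate > 0.5)  # indices of points judged to be lit
print(lit)
```

Raising `noise_std`, or making the calibrated patterns more alike, degrades the estimate quickly, which is the comment's point about needing very good calibration and signal-to-noise ratio.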

I’m not an optics guy, so maybe I’m wrong. However, to me it depends on how you define a lens. I think of a lens as something that refracts light so that it converges to a point (convex lens) or diverges (concave lens). This is neither. If you define a lens as “something that collects light” then yes, but I don’t think that’s the formal definition.

Of course, the big deal here is that if I can mount a sensor on the edge of a window/visor/viewscreen etc. and see what the human sees through the window, that could be huge. Even if it isn’t “picture perfect”, if it is good enough to do feature extraction on, that would make a lot of things much easier.

‘Lens’ is pretty well defined with no ambiguity.

In this case the pane of glass is a transparent material with curved surfaces on the ‘rough scattering surface’ concentrating and dispersing light rays.

As far as sensationalist science journalism goes, this isn’t too bad though.

I wonder what’s more difficult: developing an efficient algorithm that can process data from an ordinary sensor, or building a sensor that also records phase information.

Eliminating the lens, if they actually do that, would decrease production cost because lining the lens up with the optical sensor is a non-trivial issue. Misalignment also creates optical aberrations that lower the quality of the resulting image.

The same inversion approach used here can be used to correct for a misaligned lens. The problem is that the calibration procedure is more expensive than getting the alignment right.

is it just me, or does the CMOS image have a ‘face’ in it, slightly towards the right?

The Orwellian nightmare moves another step forward.

Now we enter the modern era of AlgoLenses.

Enhance!

https://youtu.be/xbCWYm7B_B4?t=71

zoom AND enhance

https://www.youtube.com/watch?v=2aINa6tg3fo

Hmm, so extrapolating any so called benign and currently accepted non sensing/computing surface anywhere could then be instead a camera indistinguishable from current non sensing surfaces – that would get into the idea of seriously advanced alien like tech with QM like entanglement paradigms for communications/telemetry !

ie. If say an advanced civilization budded off from eg Babylonians some 3000+ years ago (when they started experimenting with electroplating and progressed to advanced tech well isolated from primitive religiously “inspired” cultures) then for those thousand plus years they could be and have been watching (and listening to) us without us ever knowing. IOW. Once you have enough focussed intelligent people starting off with electricity which inevitably leads to electromagnetics then all manner of advances can be made and combine that issue with rate of change tech development – if they exist that is, would be very far ahead of us and also likely to be able to hide under our noses and perhaps fire t us as well and very well indeed – except for the occasional accidental sightings of course ;-)

Going back to our comparative (or enforced) loneliness, so sometime in the near future we might even be able to make something like a multipart paint with self-organising properties towards pragmatic utility as it dries/sets to become a sensing/computing surface which also collects light energy and maybe via THz comms offers telemetry (THz is nice place to hide signal as very high noise level) – Geesh what a really advanced world we could be living in not far off either. Perhaps then our distant Babylonian cousins might deign to communicate with us rather more directly – it would have to be sometime after current societal/commercial/nation-state fragmentation were well moderated though – let’s hope they could trump our current chaotic political systems offering the illusions of true democracy :D

* “perhaps influence us as well” not ‘perhaps fire t us as well’ weird not android auto correct :/

https://www.youtube.com/watch?v=JTNBPtZHLjk