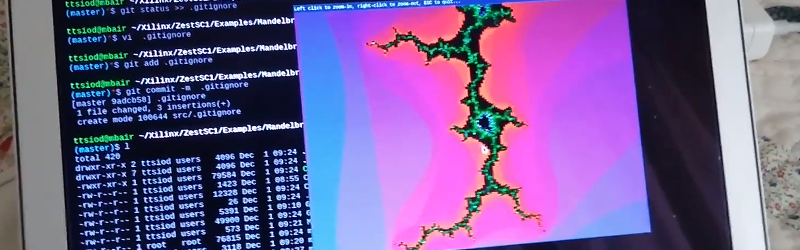

Over on GitHub, [ttsiodras] wanted to learn VHDL. So he started with an algorithm to render Mandelbrot sets and moved it to an FPGA. Because of the speed, he was able to accomplish real-time zooming. You can see a video of the results below.

The FPGA board is a ZestSC1 that has a relatively old Xilinx Spartan 3 chip onboard. Still, it is plenty powerful for a task like this.

The project doesn’t directly drive a display. It does the math, stores the results in the board’s onboard RAM and then sends a frame to the PC using the ZestSC1’s USB port. Currently, the code isn’t pipelined; a future task is to add pipelining so that, after some initial latency, it computes a new pixel on each clock cycle.

The repo contains the VHDL code and some C++ code that interfaces with the board and displays the results. If you have that particular board, it would be a good basis for a different project.

Our FPGA boot camps use Verilog, but they are still a good place to start if you want to learn FPGAs. The concepts still apply and the recently added module on state machines will give you a good head start no matter what language you use. If you crave more VHDL math, there’s always CORDIC.

One pixel/cycle will be hard (and inefficient), because each pixel requires a different number of iterations. Instead, I would create an array of N workers, each capable of doing an iteration, and working on N pixels at the same time. Every time you have a final result, fill the color value in the buffer, and assign a new pixel to that worker.
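To make the worker idea concrete, here is a rough cycle-by-cycle software model in Python. It is only a sketch under my own assumptions, not anything from the repo: the name `simulate_workers`, the slot layout, and the escape radius of 2 are all made up for illustration, and the iteration count stands in for the color value.

```python
def simulate_workers(points, n_workers=4, max_iter=64):
    """Model N workers that each perform one Mandelbrot iteration per cycle.

    Hypothetical sketch: returns (iteration count per pixel, cycles used).
    """
    pending = list(enumerate(points))   # (pixel index, c) pairs waiting for a worker
    pending.reverse()                   # so pop() hands pixels out in order
    workers = [None] * n_workers        # each slot: [index, c, z, iterations] or None
    result = [None] * len(points)       # iteration count per pixel (the "color")
    cycles = 0
    while pending or any(w is not None for w in workers):
        cycles += 1
        for slot in range(n_workers):
            if workers[slot] is None:
                if not pending:
                    continue            # nothing left to assign, worker idles
                idx, c = pending.pop()
                workers[slot] = [idx, c, 0j, 0]
            w = workers[slot]
            w[2] = w[2] * w[2] + w[1]   # z = z^2 + c
            w[3] += 1
            if abs(w[2]) > 2.0 or w[3] >= max_iter:
                result[w[0]] = w[3]     # final result: write it to the buffer
                workers[slot] = None    # free the worker for the next pixel
    return result, cycles
```

With the three points `2+2j`, `0j` and `1+0j` and `max_iter=10`, one worker needs 14 cycles while three workers need 10, because the slow pixel at `0j` dominates.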

It’s not a problem that you need a different number of iterations per pixel: you just add a sideband signal that travels with the pipe and indicates whether or not the current calculation is still relevant.
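As a rough illustration of the sideband idea, here is a small Python model of a fixed-depth pipeline where each item carries an `active` flag. Everything here is an assumption for the sake of the sketch (the names `pipeline_stage` and `run_pipeline`, a pipeline depth equal to the maximum iteration count, an escape radius of 2); it is not taken from the linked project.

```python
def pipeline_stage(item):
    """One pipeline stage: iterate only while the sideband 'active' flag is set."""
    c, z, count, active = item
    if active:
        z = z * z + c
        count += 1
        if abs(z) > 2.0:
            active = False          # result is final; later stages pass it through
    return (c, z, count, active)


def run_pipeline(points, depth=16):
    """Feed one pixel per cycle through `depth` stages.

    Hypothetical sketch: returns (iteration counts in input order, cycles used).
    """
    regs = [None] * depth           # output register of each stage (None = bubble)
    feed = iter(points)
    results = []
    cycles = 0
    while len(results) < len(points):
        cycles += 1
        c = next(feed, None)
        entering = (c, 0j, 0, True) if c is not None else None
        inputs = [entering] + regs[:-1]          # shift everything one stage along
        regs = [pipeline_stage(it) if it is not None else None for it in inputs]
        if regs[-1] is not None:
            results.append(regs[-1][2])          # one pixel leaves per cycle
    return results, cycles
```

In this model the throughput is one pixel per cycle after `depth` cycles of latency, but the hardware cost is `depth` copies of the iteration logic, most of which sit idle for pixels that escape early.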

See also here: http://lulabs.net/electronics/mandelfpga/

To expand on it: if you want to do one pixel per clock cycle, you have to always assume the worst case, often for a long time.

In the case of Mandelbrot, you can have such a case always, when you point right in the middle of a black region.

So the efficiency argument goes out of the window.
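A quick way to see this in software is to count the total iterations for a view that sits entirely inside the black region and compare it with a wider view. This is just a sketch with made-up names (`escape_count`, `total_iterations`) and an arbitrary 8x8 grid; it relies on the fact that every point with |c| <= 1/4 stays bounded and therefore always runs to the iteration limit.

```python
def escape_count(c, max_iter):
    """Number of iterations of z = z^2 + c before |z| > 2, capped at max_iter."""
    z = 0j
    for n in range(1, max_iter + 1):
        z = z * z + c
        if abs(z) > 2.0:
            return n
    return max_iter


def total_iterations(center, half_width, size=8, max_iter=100):
    """Sum of iteration counts over a size x size grid around `center`."""
    total = 0
    for row in range(size):
        for col in range(size):
            re = center.real + half_width * (2 * col / (size - 1) - 1)
            im = center.imag + half_width * (2 * row / (size - 1) - 1)
            total += escape_count(complex(re, im), max_iter)
    return total


# A view deep inside the set: every pixel hits the worst case.
inside = total_iterations(0j, 0.1)
# A wide view of the whole set: many pixels escape early.
wide = total_iterations(-0.5 + 0j, 1.5)
```

Here `inside` is exactly 8 * 8 * 100 = 6400 iterations, the worst case for every pixel, while `wide` comes out lower because the corner pixels escape almost immediately.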

The worker method with fewer resources than the worst case needs only flies when you can balance worst-case calculations against non-worst-case ones and have a FIFO or something to even things out.

Or you pipeline the iterations and let the pixels fall out of the pipeline when they finish? Or only put in one iteration and just use the reduced gate delay to crank the clockspeed through the roof. Have multiple high-speed “iteration cores”?

Software-only XAOS is faster. Much faster.

I don’t think it should come as a 12 year old mid-range Spartan-3 that runs code that was written by a beginner and that uses a brute force algorithm, runs slower than a highly optimized piece of software that runs on a CPU with a 40x higher clock speed and reuses results from previous frames to cut down on calculations.

The goal of this kind of project is almost never to make something faster or better than what already exists. It’s simply to build something cool and to learn a lot in the process.

Think of the satisfaction and sense of accomplishment of finally getting everything working and compare that to downloading XAOS and running it. Which one wins? ;-)

Thumbs up, best comment in a long while.

Yes, other than the lack of “surprise that a “, it sums up many of the projects featured here very well …

I recall reading about fractal zooming images/photos in the past and this gets me thinking.

Are there any updates regarding the prediction of non-fractal image/photo edges for infinite zooming, using a fractal algorithm or an optimal fractal-algorithm prediction process, maybe like the magic wand/lasso/polygon tools in Photoshop/GIMP?

Are the latter algorithms even fractal related?