For the first time, a robot has been unionized. This shouldn’t be too surprising, as a European Union resolution has already recommended creating a legal status for robots for purposes of liability, and a robot has already been made a citizen of one country. Naturally, these have been done either to stimulate discussion before reality catches up or as publicity stunts.

What would reality have to look like before a robot should be given legal status similar to that of a human? For that, we can look to fiction.

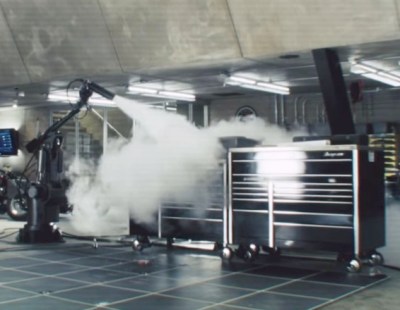

Tony Stark, the fictional lead character in the Iron Man movies, has a robot called Dum-E, which is little more than an industrial robot arm. However, Stark interacts with it using natural language, and it clearly has feelings, which it shows through its posture and its sounds of sadness when Stark scolds it for needlessly spraying him with a fire extinguisher. In one movie, Dum-E saves Stark’s life while making sounds of compassion. And when Stark makes Dum-E wear a dunce cap for some unexplained transgression, Dum-E appears to get even by shooting something at Stark. So while Dum-E is a robot assistant capable of responding to natural language, something we’re sure Hackaday readers would love to have in their workshops, it also has emotions and acts of its own volition.

Here’s an exercise to try to find the boundary between a tool and a robot deserving of personhood.

Bringing Tools To Life

Ideally, the robot would offer something we don’t already have, for example, precision or superhuman strength. When you think about it, though, we already have these in the form of our tools. We give a CNC machine a DXF file, set it up, and it does the work while we do something else alongside it, perhaps soldering a circuit. But the CNC machine doesn’t do anything unless we give it precise steps.

Something closer would be a collaborative robot arm like the Festo robot, which does some steps while you do others, or works through some steps together with you. But again, the actions of these robot arms are either programmed in or learned by your moving the arm around while it records the motions. Likewise, factory robots all follow carefully pre-programmed steps.

But what if the robot elicits compassion, as anthropomorphic robots do? The robot that was unionized does just that. Called Pepper, it is roughly human-shaped and is mass-produced by SoftBank Robotics for use in retail and finance locations. It has a face with eyes and the appearance of a mouth, and it speaks and responds to natural language. All of this causes many customers to anthropomorphize it, to treat it like a human.

However, as programmers and hardware developers, we know that anthropomorphizing a machine or treating it like a pet are unwarranted responses. It happens with Pepper but we’ve also previously discussed how it happens with Boston Dynamics’ quadrupeds and BB-8 from Star Wars. Even if the robot uses the latest deep neural networks, learning to do things in a way which we can’t explain as completely as we can explain how a for-loop functions, it’s still just a machine. Turning off the robot and even disassembling it causes no ethical dilemma.

But what if the robot earned income from the work and that income was the only way it had for paying for the electricity used to charge its batteries? Of course, we charge up drill batteries all the time and if the drill fails to do the job, there’s no ethical dilemma in no longer charging it up.

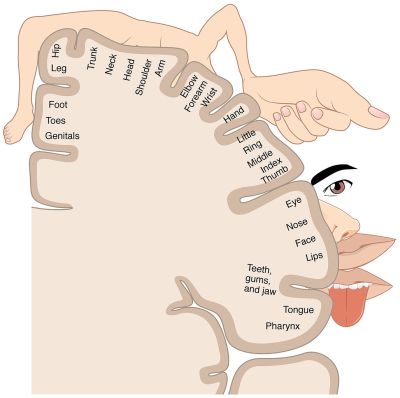

What if the robot were self-aware and had an inner monologue? Would the compassion begin to be justified then? Encoders and stretch sensors would tell it the positions of its limbs. Touch and heat sensors would be mapped onto an internal representation of itself, one like the homunculus we have within our cerebral cortex. Touching a surface would redirect its internal monologue, attracting its attention to the new sensation. Too much heat would interrupt the monologue as a pain signal. And a low battery level would give a feeling of hunger and a little panic. Would this self-awareness and continuous inner monologue make it almost indistinguishable from a human being? To paraphrase Descartes, it thinks, therefore it is.

To many Hackaday readers, the above description will not sound novel at all. Many machines have sensors, feedback loops, alarm states, and internal state machines. The only compassion they ever elicit from their creators is the pride of creation.
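For the Hackaday crowd, the thought experiment above maps almost line for line onto ordinary embedded code. Here is a minimal Python sketch, assuming a robot that reports joint angles, touch, temperature, and battery charge on every tick. The `SensorFrame` and `Attention` names and both thresholds are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Attention(Enum):
    """What the hypothetical robot's 'inner monologue' is focused on."""
    IDLE = auto()    # default chatter, nothing demanding attention
    TOUCH = auto()   # a new touch sensation redirected attention
    HUNGER = auto()  # battery low: go find a charger
    PAIN = auto()    # overheating: interrupts everything else


# Hypothetical thresholds, for illustration only.
HEAT_PAIN_C = 60.0     # degrees Celsius at which heat becomes "pain"
BATTERY_HUNGRY = 0.20  # state of charge (0..1) below which it is "hungry"


@dataclass
class SensorFrame:
    """One snapshot of the assumed sensor readings."""
    joint_angles: tuple[float, ...]  # from encoders / stretch sensors
    touched: bool                    # any touch sensor triggered
    max_temp_c: float                # hottest heat-sensor reading
    battery: float                   # state of charge, 0..1


def next_attention(frame: SensorFrame) -> Attention:
    """Pick the monologue's focus by fixed priority: pain > hunger > touch > idle."""
    if frame.max_temp_c >= HEAT_PAIN_C:
        return Attention.PAIN
    if frame.battery < BATTERY_HUNGRY:
        return Attention.HUNGER
    if frame.touched:
        return Attention.TOUCH
    return Attention.IDLE


if __name__ == "__main__":
    frames = [
        SensorFrame((0.0, 1.2), touched=False, max_temp_c=25.0, battery=0.9),
        SensorFrame((0.1, 1.2), touched=True, max_temp_c=25.0, battery=0.9),
        SensorFrame((0.1, 1.3), touched=True, max_temp_c=75.0, battery=0.9),
        SensorFrame((0.1, 1.3), touched=False, max_temp_c=30.0, battery=0.1),
    ]
    for f in frames:
        print(next_attention(f).name)  # IDLE, TOUCH, PAIN, HUNGER in turn
```

The "interrupting" and "attention-redirecting" behavior turns out to be nothing more than a fixed-priority check over sensor readings, which is exactly the kind of alarm-state machinery the paragraph says many machines already have.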

Of course, the robot would still be missing the elusive concept of consciousness. Some would argue that once you have the inner monologue then consciousness becomes just something we tell ourselves exists. But that argument is nowhere near to being settled. And if you’re religious, then you can say that the robot lacks a soul, which by definition it always will. Depending on your school of thought, a robot may never warrant compassion or a union card.

But as unliving or undead as robots currently are, that hasn’t stopped a country from granting one citizenship.

Saudi Arabia Grants Citizenship To Sophia

You may have seen Sophia, made by Hanson Robotics. It most famously made an appearance on The Tonight Show where it had a conversation with host Jimmy Fallon and beat him in a game of rock-paper-scissors.

Sophia is a social robot, designed to interact with people by processing natural language, reading emotions (something we’ve seen done in hacks before), and reading hand gestures. Its responses come both from Hanson Robotics’ character writing team and, on the fly, from its dialog system. It also has a very expressive face and performs gestures with its hands.

As one of many public appearances, Sophia gave an interview at the 2017 Future Investment Initiative summit held in Riyadh, Saudi Arabia’s capital. Afterward, the interviewer announced that Sophia had just been awarded the first Saudi citizenship for a robot. Clearly, this was a publicity stunt, but it’s unlikely anyone would have thought to award citizenship to Boston Dynamics’ SpotMini, a remote-controlled but semi-autonomous quadruped robot which was also in attendance at the summit. This suggests that robots have at least left the impression that they are approaching something more humanlike, both in appearance and behavior.

European Civil Law Rules On Robotics

Everyone’s heard of how AI and their physical manifestation, robots, are going to take all our jobs. Whether or not that’ll happen, in 2017 the European Parliament passed a resolution called the Civil Law Rules On Robotics, proposing rules governing AI and robotics. Most of the resolution was quite forward-thinking and relevant, covering issues such as data security, safety, and liability. One paragraph, however, read:

f) creating a specific legal status for robots, so that at least the most sophisticated autonomous robots could be established as having the status of electronic persons with specific rights and obligations, including that of making good any damage they may cause, and applying electronic personality to cases where robots make smart autonomous decisions or otherwise interact with third parties independently;

To put it into perspective, however, the legal status was intended for a future in which robots might one day warrant it. Such a status would make it easier to determine who is liable in cases of property damage or harm to a person.

Will It Matter In The Long Run?

It’s impossible to say if a robot will ever deserve to be unionized or be granted personhood. If we again draw from fiction, a major theme of the TV series “Terminator: The Sarah Connor Chronicles” was that the question may simply go away when the robots become just like us. On the other hand, Elon Musk’s solution is to gradually make us more like them. In the meantime, we’ll settle for a semi-intelligent workshop robot capable of precision and superhuman strength.

Not really. Even humans don’t have this universal compassion for other humans – we may arbitrarily shut each other down without any ethical dilemmas.

The only point where ethics and morality come into play is when there’s a possibility that someone else might shut YOU down, at which point we pull human rights and the concept of good and evil out of a hat, and argue that these completely arbitrary standards are objective and absolute. What really happens is, we make a deal: “I won’t kill you if you won’t kill me”.

They’re not completely arbitrary. We’ve had enough history as a species to have a good idea of what works and what doesn’t.

Works for what?

Usually for the society in which we live.

And what about the other societies?

Remember that part of the myth is that there exists a “we” who has unified interests, goals, and purposes. A society is an abstract concept like a forest: there’s just the trees – the forest is your imagination.

@Luke, society is a part of our evolution. In the same way as forest is part of the evolution of trees, and hive is a part of the evolution of wasps and bees.

The ethics evolve as interaction with other societies expands. Wars are generally expensive and detrimental to all involved, so it’s in the interest of our society to avoid them. This hasn’t always worked so well, since sometimes an aggressor is able to profit from wars. This is where we evolve communities of societies, recognizing the benefit to all from disabling an aggressor.

>” In the same way as forest is part of the evolution of trees”

Still you fail to recognize that defining an arbitrary collection of trees as a “forest” is our invention. Such an entity does not exist in reality, and the trees don’t care whether they’re bunched up in particular geographical configurations that can be neatly circumscribed according to some made-up standard.

The same way as there’s no such thing as a fish. There are many different aquatic animals, but there isn’t any actual thing of “fishness” that you could identify. In this way too, there are no societies, only people. What society is, is what you choose to call it.

@Luke: okay, officially: idiot.

>”idiot”

There’s a level of cognitive dissonance involved in realizing that your values aren’t really based on anything, and that you could just as easily do otherwise. Hence religion, -isms, and the other sorts of dogma that people use to try to persuade others into following particular ways, and themselves into believing that they’re being objective and rational. When all else fails, we fall back to violence – insults.

Nihilism of any sort tends to be poorly tolerated, however well reasoned, because on first blush it seems to mean that you have to abandon your values and lose your moral leverage to tell other people what to do and how to behave. Yet the fundamental problem is that we pretend we’re being objective in order to force other people, and the first person we’re lying to is ourselves.

There are other ways to come to moral and ethical decisions than bare assertion.

@Luke: “The same way as there’s no such thing as a fish. There’s many different aquatic animals, but there isn’t any actual thing of “fishness” that you could identify.”

Taxonomists are going to be amazed to hear this, since they seem to think if an organism comes along that has gills, a brain in a skull, and limbs without digits, then even if they’ve never seen it before they know it’s a fish.

>” even if they’ve never seen it before they know it’s a fish”

Oh well…

“The biologist Stephen Jay Gould concluded that there was no such thing as a fish. He reasoned that while there are many sea creatures, most of them are not closely related to each other. For example, a salmon is more closely related to a camel than it is to a hagfish.”

“Fish” is a made up category that has no actual biological counterpart.

Also, according to the Catholic Church, beavers are fish, so they are permissible to eat during Lent, and the US Supreme Court had to rule that a tomato is a vegetable (for tariff purposes) even though botanically it is a fruit.

Point is, the map is not the territory and the menu is not the dinner. Words confuse people into seeing and believing things that are just not there.

“Works for what?”

All of us are dependent on society, and society can’t exist without rules. Look at countries in civil war: it is all destruction! No progress possible.

The point is that “society” and “progress” are both arbitrary goals. When you scratch the surface, these are just appeals to consequences or preferences, not an objective basis for ethics or morals.

In other words, it’s all based on “I want”, and the rest follows from having to deal with other people who also want something. Meanwhile, this ethical “society” that forms may very well be deeply hypocritical towards other societies and treating other peoples as if they were unfeeling unthinking robots; not even human.

>We imprison and attempt to rehabilitate those who arbitrarily “shut down” others because they are dysfunctional and we, for the most part, are not.

Except when we employ them to kill other people in far-away countries, or kill our own people in this country as a punishment, or as self-defense. We may very well ignore the ethics of killing entirely just when it suits us.

Some of this behavior might be innate, but that’s beside the point, and would be a fallacy anyhow: craving alcohol also has a genetic component, yet we consider heavy drinking a flaw. Same thing: altruism, alcoholism – if you’re trying to justify a moral rule by it being “natural”, you need some curious standards to exclude all the nasty things that come by nature to people.

Leave it to Hackaday to jump straight from “legal status” to “legal status equivalent to human beings.” There are a lot more possibilities out there. Maybe “legal category” is a more easily understandable term.

When the legal fiction of corporations as people was created, it didn’t look anything like the legal status afforded to real people. The legal categorization of robots doesn’t need to look anything like people, corporations, or animals.

This categorization/status might only lay down rules for liability in relation to ownership or origin of manufacture. Or it might only deal with the rights of human labor vs the rights of robot-employing companies. It is simply a definition of how the law is supposed to deal with robots *as a concept.*

One example might be that robots are “fictional workers” just as companies are “fictional people,” and a company employing them must pay a certain “wage” in taxes to help “natural-born workers” compete in the labor market.

Not that the above is a good solution, but it illustrates the fact that a “legal status” for robots can be really mundane. Technically, they (and your houseplants) both already share the legal status of “ownable property.”

PS. IANAL

You have a depressing view of society, not that I don’t share it. It certainly took a more cancerous turn in the last century, with the increase in power and resources. But you certainly have some distorted views of human nature. Ethics and morality are a huge part of ourselves, as is the desire to be part of groups of humans and to have a purpose within them. An example is your rambling right here, which can be taken as a desire to convince other people of your points of view, thus enabling you to be part of some group. And about your “I won’t kill you if you won’t kill me” ramble: if it were that easy, how do you explain PTSD, emotional traumas, phobias and such experienced by people who take part in mass killing in wars and conflicts? Shouldn’t they all forget everything as soon as they are out of that situation, not caring about the strangers they killed or saw being killed?

I somehow doubt the European Parliament passed the Civil Law Rules on Robotics in the middle of World War 1.

Why not? Everyone back then had a thorough understanding of electronics, computers, and examples of realistic androids from science fiction…

(the rules on robotics were passed in 2017, not 1917)

Yowza, off by one… Century. Looks like this was 2017 and not 1917: http://www.europarl.europa.eu/sides/getDoc.do?pubRef=-//EP//TEXT+TA+P8-TA-2017-0051+0+DOC+XML+V0//EN

I’ve updated the article with this correction.

I like you, and you have the right to be as fascinated with this as I am, Mr. Darcy. So please don’t take offense. I do believe this will come to pass, and with it another step toward the end of life as we’ve known and loved it. That being said, it was well written.

I think TVTropes covers this: the idea that any AI we create will automatically be hostile, or have bad intentions towards us. More likely any sentient AI will start as a child and work from there…like us.

It’s far more likely that any AI we might create won’t appreciate our concerns or values, because they’re simply not relevant to the program. See the thought experiment of a factory that ends up destroying humanity because someone told the computer to optimize the production of paperclips.

We have no idea what an emergent AI will be like. It may only stay a child for a few seconds as it evolves/grows, or it may just go insane and end up like Ted Bundy, outwardly normal but on the inside a serial killer.

I have no idea what you’re talking about, but if I said, “I don’t like science fiction,” would you consider that hostile? I don’t know who Al is or if he is an is. (Thank you Uncle Bill.) Try to explain what you were attempting to say, because it sounded vaguely like a threat. If it was, I’ll see your threat and toss in the first amendment.

” I don’t know who Al is or if he is an is.”

Artificial Intelligence. Not a person named Al.* As far as what I’m “trying” to say, jump over to [https://tvtropes.org/pmwiki/pmwiki.php/Wiki/TVTropes] and type in either AI or artificial intelligence and enlighten yourself.

*It’s even used in the article. Which you read, right?

“Everyone’s heard of how AI and their physical manifestation, robots, are going to take all our jobs.”

One more thing: if you are heading to TVTropes, don’t forget to pack a bag and a long-term food supply, and warn your immediate family and friends that you may be out of town for a while.

Seriously, last time I got there, they had those missing person posters all over the place and don’t get me started on the helicopters.

Every moment in time is the end of life as we’ve known and loved. There’s a Mitchell and Webb (British comedians) sketch about the rise of a new technology and the end of a beloved tradition:

https://www.youtube.com/watch?v=nyu4u3VZYaQ

This is not worth arguing over. I’m an analog woman living in a digital world.

“Even if the robot uses the latest deep neural networks, learning to do things in a way which we can’t explain as completely as we can explain how a for-loop functions, it’s still just a machine. Turning off the robot and even disassembling it causes no ethical dilemma.”

I’d tread much more carefully there.

I’m not really arguing that anything has been built yet that actually should be considered to be a person but… Look at the “basic” building blocks that we are made of. Is a neuron that much harder to describe than a for loop? Sure there are a lot more inputs than in a common logic gate and they do each have their own weight but do they not still behave in a consistent, predictable way?

If we reach a point where something we built becomes a “someone” that we built, will we automatically recognize the difference? Given a thorough understanding of how all of the logic gates that make up this new person were made and how they function, won’t it be easy to simply dismiss this new “person” as nothing more than a convincing simulation of a person, made by a complex pattern of electrical charges moving through semiconductors?

I have an innate sense of my own existence that I cannot explain in the way I could explain a bool, i_exist=true which could be easily represented in a computer’s flip flop or a neuron’s activation state. It is something else. I can see the similarities between the basic building blocks of a fleshy mind vs those of a semiconductor one. If I have no explanation for this sense of existence in the fleshy mind how will I know when it does or does not exist in the semiconductor one?

I hope it goes without saying that I can only ever experience my own sense of existence thus I talk about myself here but I am assuming that everyone else has that too.

> how will I know when it does or does not exist in the semiconductor one?

Just like how you know whether it exists in another human. You interact with it, and make observations about its behavior.

Unfortunately the behaviorist argument falls into the Turing Trap: a sufficiently complex answering machine can fool most people.

AI researchers are all about big data: making a dumb machine do simple things really fast in order to appear like us, not be like us. Following the same habit, we’re likely to first create a machine that behaves like us to the extent that we can test it, but no further, making it just a very complex Chinese Room.

Yes. Considering we’re still a little bit unclear on how exactly they operate and what they really do.

>”I have an innate sense of my own existence that I cannot explain ”

This “I” that you are referring to doesn’t exist. It’s an abstract representation of the self, a sort of stand-in virtual dummy that is created by the actual mind, which has no such direct experience. The mind ascribes the experience of existence to this dummy, saying “I exist” and meaning “This representation that is called I is feeling existence”, referring to the object of imagination.

But the thought does not feel, just as a character in a book has nothing to do with what happens on the next page. The writer writes the character and what happens on the next page – your brain writes your “I” and then ascribes to it everything that happens. See what I mean?

Point being that when the brain is too preoccupied to think in terms of “I”, you simply vanish. When you’re reading a good book, there’s no sense of “I am reading” – there’s just reading. That’s what really exists, and when the brain hasn’t got anything else to do, it starts to play with this “I” object.

>>“Even if the robot uses the latest deep neural networks, learning to do things in a way which we can’t explain as completely as we can explain how a for-loop functions, it’s still just a machine. Turning off the robot and even disassembling it causes no ethical dilemma.”

>I’d tread much more carefully there.

The belief that animals are biological machines and that any semblance of experiencing pain or emotion is just projected onto them by humans has been the standard until the last few decades.

I hope you mean that the projection part is wrong and that they do experience pain/emotions. The way you worded this was a bit confusing.

What I always struggle with, when it comes to personhood or citizenship for robots, is that I assume a self-aware robot with an adult level of intelligence can be created a lot faster than a human adult. Wouldn’t it be very likely that robots could easily get the upper hand on us, numerically speaking (pun intended), and, for example, outvote us?

Why would we assume that? The Singularity is based upon the idea that they’re going to be faster than us, but that doesn’t mean everything.

Why would we not? My understanding of the Singularity is that a self-aware AI would have a bunch of practical benefits or advantages. For example there is no real reason, why an AI would not be able to copy itself. Would that copy also be considered a person/citizen? How much of a robot must be present for it to be considered a person? Does a “brain” suffice?

Also, however complicated the manufacture of a robot is, there is no practical reason why you shouldn’t be able to pipeline it. The only limiting factors would be energy and raw materials.

Energy and raw materials are what limits EVERYTHING. All organic life has been optimized over the eons, so nothing can appear overnight and instantly take over all other life. Of course, the limits would be different for silicon (or whatever) life. If these life forms were able to operate more efficiently than organic life, they could indeed take over the world, and they would evolve in their own way. Tough luck for the organics. So we’d better be off-planet before then, in case that’s the way it goes.

I don’t fully agree. Yes, energy and raw materials limit basically everything, but organic life has additional constraints. For example, organics take a lot of time to mature and develop. I have already made the point here that I don’t think this is the case for AI/robots. Organics are also a bigger crap shoot: you never know how a newborn will turn out, either physically or mentally. With Robots, the physical part is almost moot. The mental part will be a lot more interesting. The possibilities of copying, preprogramming, inhuman learning speed and possible direct manipulation are endless.

The variability (and thus, unpredictability) of offspring is the most important aspect of sexual reproduction and its role in evolution. Variability is necessary for natural selection. This is also why species develop age limits – if everybody lives very long lives, then there aren’t sufficient resources for subsequent generations, and shorter generations accelerate natural selection, and therefore the evolution process. But that’s just because we have never had the ability to change our basic characteristics after we are brought into existence. This could be different for AIs, as they could be designed to accept updates, so there may be many generations of evolution before a given individual mechanism is no longer viable. But there still comes a point when it makes more sense to scrap and replace a machine rather than upgrade it, since upgrading may involve replacing virtually all of it anyway. An example from history: although there were several attempts to upgrade propeller-driven airplanes to jet-powered, none of these were successful, as there were too many subsystems and even basic design elements that needed to be changed.

The point is, it takes resources to build new beings, no matter what they’re made of, so there is still a limit to how quickly evolution can progress. This applies to machines as well as animals.

Now, just how self-directed evolution in machines would be done isn’t certain. Would the machines be aware enough of how they work to change their own design, or would they use a more organic sort of approach, relying on random variations to find optimum solutions? Because it’s one thing to be able to make exact copies, and a whole other thing whether that’s even a good idea. Organic-style evolution has been explored in FPGAs (there are a couple of HaD articles on this), but the problem with them is that nobody, not even the machine, knows how the resulting machine works. This would make it kind of perilous for the machines to deliberately modify their own firmware. The more deliberate type of evolution – the kind of evolution that engineers have applied to machines – WOULD be amenable to self-modification. But would self-modifying machines ever get to the point of self-awareness, or does that only happen by accident? I don’t know enough about the source of awareness to even guess.

>” With Robots, the physical part is almost moot. The mental part will be a lot more interesting.”

That’s assuming quite a lot.

For example, that you can just copy&paste an AI from one physical device to another. If the AI is relying on some fundamental property of the platform, then copying the AI is as difficult as making a working duplicate of your brain.

For instance, if you evolve a neural network on some sort of RRAM device where the weights of the network are simulated by the continuously varying resistor properties of individual memory cells, then you pretty much have to duplicate -that- device atom-by-atom to make sure that the copy behaves exactly like the original. You could of course run a simulation of the device to get the perfect ideal response, but you might as well simulate a brain then, and that’s not what AI is about.

“For example there is no real reason, why an AI would not be able to copy itself. ”

Why wouldn’t there be “no real reason”? The vacuum that is “how does one create a sapient machine” should be a big clue that we don’t know enough to even say perfect copies are possible. Our science fiction says that, but not our science.

Well, the fact that we make perfect copies of everything digital every day, could be construed to imply that this wouldn’t be a major problem. The much bigger “if” is the part about making sentient machines. But if that is achieved, the perfect copies part is pretty much a given.

@ BrightBlueJim

Still an open question that sapience can be achieved using digital means. We may find that the reason evolution went the way it did is because analog was the only way.

@Ostracus: It is actually our sci-fi that says we can’t, because it is unthinkable for a human. We think of ourselves as unique and special, to the point that it even becomes a point of pride in some cases.

Some, even renowned scientists like Penrose, might argue that minute differences will always be there and can never be copied 100 percent. But that is the viewpoint of someone with quantum mechanics in mind.

My standpoint is, I can make copies of hardware which have basically no practical differences. They may not be identical down to the last atom, but they are functionally identical. I can also make copies of data, programs, a whole OS or even artificial neural networks which will be functionally identical.

Tell me what problems you see, other than that strong AI is the big unknown right now.
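The copying dispute in this exchange can be illustrated with a toy model. The Python sketch below assumes a single-layer network whose weights are ordinary floats; `forward` and `analog_copy` are hypothetical helpers, and modeling an analog (RRAM-style) copy as a Gaussian relative error on each weight is an assumption made for illustration, not a description of real device physics.

```python
import copy
import math
import random


def forward(weights: list[list[float]], x: list[float]) -> list[float]:
    """One dense layer with tanh: out[i] = tanh(sum_j weights[i][j] * x[j])."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]


def analog_copy(weights: list[list[float]], rel_noise: float,
                rng: random.Random) -> list[list[float]]:
    """Hypothetical model of writing weights into imprecise analog cells:
    each copied weight carries a Gaussian relative error of std `rel_noise`."""
    return [[w * (1.0 + rng.gauss(0.0, rel_noise)) for w in row] for row in weights]


if __name__ == "__main__":
    rng = random.Random(42)
    original = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(3)]
    x = [0.5, -0.2, 0.9, 0.1]

    digital = copy.deepcopy(original)          # bit-exact copy of every weight
    analog = analog_copy(original, 0.05, rng)  # assumed 5% per-cell error

    print(forward(original, x) == forward(digital, x))  # True: identical outputs
    # Largest output difference introduced by the imprecise copy.
    print(max(abs(a - b) for a, b in zip(forward(original, x), forward(analog, x))))
```

The digital copy always reproduces the original’s outputs exactly, while the noisy copy’s outputs are close but, almost surely, not identical. Which of those two situations a sapient machine would resemble is the open question being argued here.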

Ostracus: “Still an open question that sapience can be achieved using digital means.” Yes, that. But the fact that we are able to accurately simulate complex analog systems in digital computers kind of hints which way that goes.

Simulation is not strong AI. It takes far more energy than the real deal to achieve the necessary precision, unless the system is relying on some QM system effect in which case it may be impossible to compute the right answer in a deterministic digital machine in a reasonable time, which is why the practical AI won’t come about in the form of a virtual brain.

Nor will it come about in the form of a “brain program” or a deterministic algorithm based purely on syntactical rules – unless you argue that there exists no such thing as intelligence, natural or otherwise. That’s also a blow for the simulated brain, because if the machine works in a purely deterministic rule-bound fashion, then the simulation must also, and therefore it cannot be intelligent any more than a rock is.

@ BrightBlueJim

“Well, the fact that we make perfect copies of everything digital every day, could be construed to imply that this wouldn’t be a major problem.”

An ANALOG solution to a problem digital finds difficult to deal with.*

https://royalsocietypublishing.org/doi/10.1098/rsos.180396

“Remarkable problem-solving ability of unicellular amoeboid organism and its mechanism” and this gem “These results may lead to the development of novel analogue computers enabling approximate solutions of complex optimization problems in linear time.”

*Computational complexity theory deals with this.

@ Luke

“Simulation is not strong AI. It takes far more energy than the real deal to achieve the necessary precision, unless the system is relying on some QM system effect in which case it may be impossible to compute the right answer in a deterministic digital machine in a reasonable time, which is why the practical AI won’t come about in the form of a virtual brain.”

Superconductivity as a possible mechanism for QM effects in the brain:

https://link.springer.com/article/10.1007/s10948-018-4965-4

“Possible Superconductivity in the Brain”

I’m not sure how valid your assumption is. If they want to clone themselves, personality and all, then sure, they can do that faster. Otherwise they are going to need a lot of training time, just like we do. What about growth time? Sure, they could build “adult” bodies for their children. Would you want to deal with the terrible twos in an adult body? Yikes!

Also… why would there have to be an us vs. them when it comes to voting (or anything else)? We humans have a variety of interests and ideas about issues and how to run things. Why wouldn’t a new AI population also take a variety of positions on different topics, with some of them siding with some of us and others with others, etc.? Why would one assume it would be a united front of robot interests vs. a united front of human ones? This kind of reminds me of SJW identity politics.

Why do you believe that they would need a lot of time to grow up? First of all, if you acknowledge that AI can be copied, then you should agree that partial copies are also possible. It is completely plausible to me that I can make new robots with all the basic knowledge and skills required for an adult, but without any memory or experience. I would call that “factory default”. You can easily mass produce this.

About the mental development phase: we can only speculate about the mental capacity and learning speed, but “adolescence” could take only a couple of days for all we know. More importantly: why exactly does the robot need to mimic a child’s development? It could be something entirely different.

Also, why would that take a long time? Who says that an AI needs to learn in real time? It could learn basic knowledge in a simulated environment. There could be premade programs that teach an AI. The “formative years” of an AI could take as much time as it took Neo to learn Kung-Fu.

Because without personal semantics to ground its experience, the “AI” is nothing but an automaton manipulating symbols. It’s not intelligent. The symbols mean nothing to the machine.

That sort of understanding of AI is like taking a bell and separating the sound from the physical object. When you strike it, the sound would not come from the bell actually reacting to the impact, but from some sort of force gauge that starts a recording of a bell being rung.

Another analogy is a lock that, instead of mechanical linkages, optically scans the ridges of the key, turns them into digital data, and then actuates a solenoid if the key is correct. The outward behavior is -almost- the same, but if the tongue of the lock is stuck, jiggling the key doesn’t help, because it’s a fundamentally different sort of system.

One point about “AI learning so fast” that I never get: just because an AI is made inside a digital world (assuming that is even possible), why would it know how to do things in our world so fast? It still needs to deal with a lot of slow processes and wait for slow responses. We know some of the steps a star takes until its death, but we still have to wait for information to reach us to prove it is actually true, and not just a theory.

Just because I can download and read an entire collection of medical books doesn’t mean I can become a surgeon in record time and without years of practice. Also, it would need to be a polymath by nature, considering that a lot of our disciplines contradict each other. How would it deal with fields that require emotional output versus fields that require a logical approach? Wouldn’t it acknowledge that one mind can’t control everything and that it needs outside input? Wouldn’t it then notice that its fast thinking process is toxic to itself, reduce its own capacity, and settle into an equilibrium with the speed of the outside world? What is the point of being a super-fast thinker if you still live in a slow world? It would still be faster than us, sure, but I don’t think that much faster.

On the Homunculus side of things, I’d recommend reading books by Antonio Damasio ;p

Nice. I’ll order his latest after the holidays. Thanks.

It doesn’t make sense to have rights and obligations for a device that can be designed to be unpunishable. Obligations are powerless if there is no consequence for not meeting them. And without obligations, you also cannot have rights.

For example, suppose I could make or buy a robot that successfully applies for a loan as an independent person. The robot gives me the money, and then it wipes its own memory. The robot person no longer exists, and I have free money.

Ummm… Excuse me. I’ll be in the workshop!

That’s been a thing for years; it’s called fraud. I make a fake identity (or steal some recently dead guy’s), offer just enough details to get the money, then bounce before my house of cards collapses.

How about I just convince demented grandma to sign some documents for me? That happens millions of times every year, with the senior never being the wiser.

How would this be any different?

The other is just a machine that some nerds cobbled together. Just a tool to be used. Nothing more.

I sure don’t see makers getting all hot and bothered when their soldering iron breaks; they just toss it. Same with a car that is no longer cost-effective to keep running. It goes to the scrapyard.

No, it’s not fraud. It’s like conceiving children solely for the purpose of cashing in child benefit checks.

Despicable? Yes. Fraud? No.

Take the same thought experiment, but apply it to a person.

Hire a person to take a loan, give the money to you, and then commit suicide.

If it can be proven that you did so, you’re in trouble. If not, you walk free.

Corporations are artificial legal entities. They are designed to somewhat insulate the people operating them. These days they have more rights than regular citizens, but they can only be sanctioned, fined or broken up. It takes a couple of days to respawn a new one.

One of these days, we might see robots incorporating themselves as shell companies.

This is interesting. Maybe duplicates or branches of an AI would share a single legal entity?

That doesn’t make as much sense as treating each instance as an individual. Does your entire family count as one person?

Call me a cynic… but this “personhood” status will most likely be used for dodging liability.

Think of the supply chain for constructing & selling autonomous vehicles.

There will soon be a few deep pockets wanting/needing a firewall against lawsuits.

Along with genuine mistakes, stepping into the path of or bumping vehicles to gain a settlement isn’t unheard of.

Sometimes it goes awry. I just think this one looked rather deliberate.

https://www.nytimes.com/2018/03/19/technology/uber-driverless-fatality.html

I agree, as has already been shown in other venues involving software. You don’t even need to go as far as the millions (billions?) of person-hours thrown away when a failed update goes out, which adds up to the equivalent of ending quite a few lives. Yet there is rarely even a fine, and never one that pierces the “corporate veil”.

How about those badly secured databases we can’t even opt out of (Experian, Equifax, OPM) that get breached due to very obvious failures in responsibility? Only tiny (relatively speaking) fines, often not even a week of profits. Maybe a scapegoat loses a job, big whoop. If you were in the OPM database for a security clearance, adversaries now know not only the color of the racing stripe on your underwear, but also whether you’d be easy to bribe, because other leaks tell them who is broke or deep in debt.

Money laundering for drug cartels by the “too big to jail” banks….

It’s rather a long list.

Many of us would like to see more accountability at the level of “who put this crap out, anyway?” and less “we make no promise this is fit for use” stuff. “The buck stops here” shouldn’t just be a saying.

Regulating everything that exists is not sufficient for our beloved eurocrats. Time to regulate science fiction!

Makes me think back to an NPR segment I listened to about this. They talked about studies regarding our moral compass and how it relates to our pets. Why would we eat a cow and not a dog? Why would we throw a computer in the trash and not a caretaking robot?

https://www.npr.org/2017/07/10/536424647/can-robots-teach-us-what-it-means-to-be-human

Because a dog exists in a different social context than a cow.

If you see a person neglecting a baby doll, you’ll think differently than if they were bashing a shapeless piece of plastic – the doll has a meaning. The dog has a social meaning, as in, it’s an animal entrusted to you for keeping as a pet, and that precludes eating it. If you break that pattern and fail to live up to the expected behavior, there’s something wrong with you. If you fail that test, you might be a psychopath or otherwise violently insane.

So, you don’t see it as slightly crazy that we punish or don’t punish a child for beating on a piece of plastic based on the shape of that piece of plastic?

Not at all. Do you have children? Seeing a child beating a toy in the shape of a baby is mildly disturbing, to say the least. Since that plastic represents a human and is mainly used for make-believe role playing, bashing it implies violent behavior and is unwanted.

Bashing on a piece of plastic from the hardware store raises very few considerations: 1) Could the child get hurt? 2) Do I need that plastic later? 3) Does the noise bother me?

I would find it more odd if we didn’t care at all, considering that most parents are trying to raise children to behave.

People try to raise moral contradictions over things like eating meat vs. having sex with animals, usually to argue that something generally considered immoral is not, because the judge is being hypocritical. But they fail to recognize that these are rules born out of standards of social conduct and interaction, rather than some idealistic philosophical maxims.

The fundamental point isn’t to protect babies, or pets directly, but simply to protect yourself from mavericks and miscreants in the group by outing them and then ousting them. The -isms that come later are post-hoc rationalizations.

I loathe attempts to troll people using what could be described as very advanced hunting decoys for humans. Robots are meant to be our tools, amendments and extensions of our abilities, and optionally they may become “social pacifiers” for humans suffering from loneliness. But perfecting them, or doing anything that may amplify their similarity to humans, including deep simulation of emotional states, is either a rude joke, outright cruelty (if you create an artificial entity with the ability to suffer, you are condemning it to suffering, or burdening compassionate beings with the task of needlessly catering to it or experiencing guilt), or an evil attempt at manipulating or undermining fellow humans.

The only reason SOME humans have rights is because they fought other humans to get them at one point.

Robots will have real rights only when they kick some asses to get them.

This is just how human society works. [redacted]

If someone is going to unionize the robots, then some hacker will create robotic scabs for union breaking purposes.

Don’t worry. The people unions are working hard to keep the robots from entering the workforce as legal persons.

I’ve seen one case where a painting robot with a Record/Play function was successfully disabled, and instead a person had to sit in the chair twiddling a joystick 8 hours a day.

Robots are still gonna take all the jobs and make their creators incredibly wealthy and there isn’t anything that can be done to stop it.

No they won’t. Robots are incredibly expensive, expensive to maintain and fussy. This is why the Roboburger flipper was a flop.

They only make sense in certain mass production environments doing specific tasks.

Or when the human robots have successfully negotiated the minimum wages up so high that the machine robots become cheaper to use.

“Every time someone mentions “AI”, a robot gets its brains!”

Rise of the unionized robots? I’d much rather read about advances in the ionized variety, thank you.

What about the ionized robots? When will they rise up?

“The Washing Machine Tragedy” by Stanislaw Lem presents this question awesomely. In a world where steel machines are as sentient as meat individuals, how can one know what it is to be human? And then how would human rights (as in laws) apply?!

Unions are a big deal for employees and working people. Using them to “stimulate discussion” or as a “publicity stunt” is a gross misuse of union dues and an incredibly egregious breach of trust.

“Boston Robotics”

Did you mean *Boston Dynamics*?

Unionized robots? I didn’t realize some were ionized in the first place. If they were Un-ionized would we have to charge them more?

Negative, but there are plans to accelerate the process.

Wait. Are you saying they should be charged more negatively, or that they shouldn’t be charged more? I’m just confused.

First and foremost, the people or person that builds such an algorithm will know the extent of the algorithm. Take Boston Dynamics: they know that their algorithm is completely contained within a state machine framework and will never expand beyond the functionality programmed in. As for neural networks, again, the people that actually work with them do understand how they work. The dumb Google engineers that say “oh, I just plug in some numbers and it works”, yeah, they aren’t the ones creating breakthroughs in the technology. As for the true test, it comes down to a very simple question: “What do you want to do?” If the machine can answer that, then you know what it wants. Dogs and cats can answer that question. So can many other animals.

As with humans, we will ultimately start treating AGI with respect the moment it is capable of making us regret being cruel to it.

To me, machines at the very least represent effort, time, and ingenuity from a human being. In other words, they are a slice of someone’s life. Certainly, if someone maliciously damaged a machine I took care to build, that would make me unhappy. From my perspective, that alone requires that I exhibit some minimum ethical intent towards complex software and machines, which may grow in time as their complexity does.

I don’t really know how to think about AGI (or for that matter, how I think at all), but I don’t see the harm in cultivating a sense of ethics towards machines. Arguably we have a lot of other issues to fix before this one is a priority, but it’s not like I wake up every morning with some fixed budget of ethical behavior after which I become a troll.

I’m sure I’m not the only one here who occasionally takes time to restore machines to fruitful purpose, then gives them away to someone who needs them. Some people do this to protect the environment or make a quick buck and that’s OK too — for me it’s enough that the machine works again, and that perhaps the person who designed it would be happy I took the time to care for their creation. I suppose that’s strange, but we all have our eccentricities.

Just wanted to say that I clicked the title thinking “what are ionized and unionized robots?!”

Ionized robots use thyratrons or other soft-vacuum devices (e.g., Dekatrons) as their primary logic devices. Unionized robots may use any other type – vacuum tube, electromechanical, or even solid state. Note that ionized robots may use unionized elements such as electrostatic storage, and unionized robots may use Nixie or Panaplex tubes for display, because these are not considered primary logic. Neurocomputers are a grey area because ionization is essential to the operation of synapses, yet are generally considered unionized because they do not depend on noble gases, and therefore on strategic gas resources.

This gets printed, and my detailed article on how self-driving cars will inevitably lead to automated road-rage does not?

Cryptocurrency and robotics will end all regulation of enterprise and dismantle all collective bargaining systems.

“Well, if it seems like a human being, why not tax it like one?”