We salute hackers who make technology useful for people in emerging markets. Leigh Johnson joined that select group when she accepted the challenge to build portable machine vision units that work offline and can be deployed for under $100 each. For hardware, a Raspberry Pi with camera plus screen can fit under that cost ceiling, and the software to give it sight is the focus of her 2018 Hackaday Superconference presentation. (Video also embedded below.)

The talk is a very concise 13 minutes, so Leigh flies through definitions of basic terms, before quickly naming TensorFlow and Keras as the tools she used. The time she saved here was spent on explaining what convolutional neural networks are and how they work, just enough to prepare the audience. But all of that is really just background, the meat of the talk is self-contained examples that Leigh has put together and made available online. I love to see that since it means you go beyond just watching and try it out for yourself.

Generating Better Examples, and More of Them

When Leigh started exploring, she didn’t find enough satisfactory end-to-end examples for TensorFlow machine vision on Raspberry Pi, so she created her own to share and help future people who find themselves in the same place. She said it is a work in progress, but given the rapid pace of advancement in this field, that disclaimer can honestly be applied to everything else out there too.

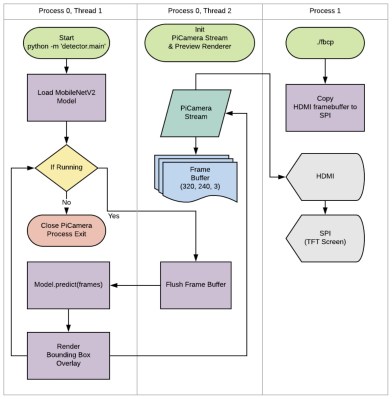

Most machine vision algorithms require hefty hardware. The example project used MobileNetV2 which was optimized for image recognition on modest mobile phone processors, making it a good fit for a Raspberry Pi. However, that modest requirement is only when performing recognition with a network that has already been trained. The training process still needs an enormous amount of computation, hence Leigh highly recommends using something more powerful than a Raspberry Pi. A GPU-equipped PC would work well, and she also had good experience with Google Cloud’s Machine Learning Engine.

Most machine vision algorithms require hefty hardware. The example project used MobileNetV2 which was optimized for image recognition on modest mobile phone processors, making it a good fit for a Raspberry Pi. However, that modest requirement is only when performing recognition with a network that has already been trained. The training process still needs an enormous amount of computation, hence Leigh highly recommends using something more powerful than a Raspberry Pi. A GPU-equipped PC would work well, and she also had good experience with Google Cloud’s Machine Learning Engine.

Training Neural Networks

But Leigh’s not interested in just training a single neural net and calling it a day. No, she wants to help people learn how to train neural nets. This is still something of an art, where everyone can expect to go through many rounds of trial and error. And to improve this iterative process, she wants to improve the feedback we receive through the visualization tool TensorBoard and better understand what’s going on inside a TensorFlow network. Leigh wants to make it more effective at helping users track changes they’ve made and correlate them to the effect of those changes.

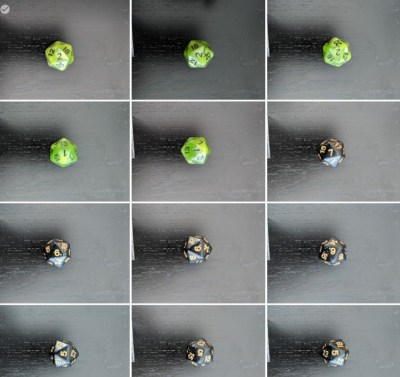

Another important part of making deep learning machine vision experiments reproducible is having the same datasets to train on. She offers one of her own: a set of images of dice to help teach a computer to read rolls, and pointed everyone to additional resources online for training data in a wide range of problem domains. All of the above resources will help people learn to build neural networks that will work effectively when deployed, concepts that are important no matter if the target is a high-end vision computer or a $100 machine vision box for emerging markets.

Another important part of making deep learning machine vision experiments reproducible is having the same datasets to train on. She offers one of her own: a set of images of dice to help teach a computer to read rolls, and pointed everyone to additional resources online for training data in a wide range of problem domains. All of the above resources will help people learn to build neural networks that will work effectively when deployed, concepts that are important no matter if the target is a high-end vision computer or a $100 machine vision box for emerging markets.

If you like to see additional TensorFlow-powered vision applications running on a Raspberry Pi, we’ve seen builds to recognize playing cards and to recognize a cat waiting by the door. If you’re interested in machine learning but not yet up to speed on all the jargon, try this plain-language overview of machine learning fundamentals.

“…she wants to help people learn how to train neural nets. This is still something of an art, where everyone can expect to go through many rounds of trial and error.”

I saw what you did there.

Not Hotdog!

I think price is the only thing keeping ai image recognition out of Arduino land. Maybe someone can make a cheap fpga to interface with Arduino.

One of the projects in my ideas pile is a machine that rolls a die many, many times and sees if it is rolling fair. Obviously I need a camera and image recognition. This dataset might be a good starting point for training my net!

Raspies have a fair bit of power, but unfortunately most of it is not easily accessible. I am talking about the GPU. Until we get GPU linear algebra libraries, things aren’t going to get easier.

Machine vision, based on cameras – without additional thermal imagers and wave spectrum analyzers, is fraught with recognition errors. We studied the systems of echolocation and complex reverberation for the behavior of drones in fog, and came to the conclusion that a multi-frequency sound system allows us to understand the kinetics of objects, their size and movement more efficiently than the thermal imager. Especially in mist with low temperature and water.

The sound wave allows you to get almost the exact shape of a moving object behind the barrier and to predict the vector of its movement at a distance of 140-200 meters in a quiet environment and up to 60 in a noisy over 130 dB.

How do you acquire sound, to “translate” it in a picture? Mic arrays? Are those precise?

Pretty weak talk tbh. I just hear buzzwords and don’t know much more than before. So still have to figure it out myself. The few comments here also reflect this prbly

It’s much more of an overview / here’s the framework I’m using, come play along.

The real meat is in the GitHub repo. Code, training set, etc. Off you go!

(There are of course other tutorials out there too.)