One of the things that makes us human is our ability to communicate. However, a stroke or other medical impairment can take that ability away without warning. Although Stephen Hawking managed to do great things with a computer-aided voice, it took a lot of patience and technology to get there. Composing an e-mail or an utterance for a speech synthesizer using a tongue stick or by blinking can be quite frustrating since most people can only manage about ten words a minute. Conventional speech averages about 150 words per minute. However, scientists recently reported in the journal Nature that they have successfully decoded brain signals into speech directly, which could open up an entirely new world for people who need assistance communicating.

The tech is still only lab-ready, but the researchers claim to be able to produce mostly intelligible sentences using the technique. Previous efforts have only managed to produce single syllables, not entire sentences.

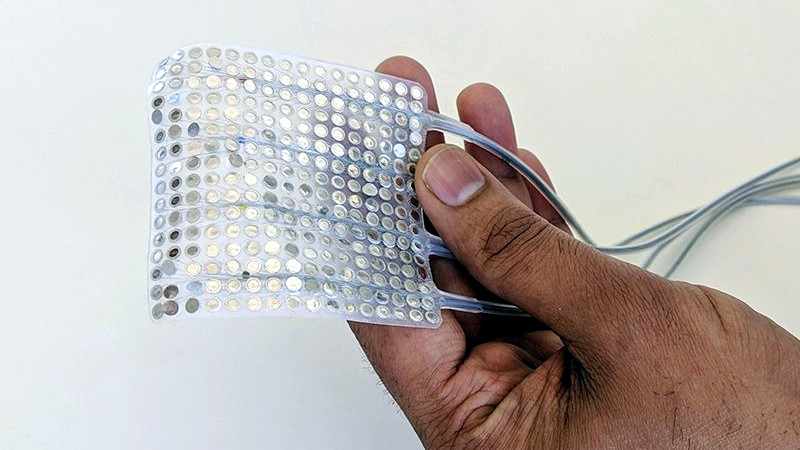

The researchers worked with five people who had electrodes implanted on the surface of their brains as part of epilepsy treatment. Using the existing electrodes, the scientists recorded brain activity while the subjects read out loud. They also included data on the physiological production of sound during speech. Deep learning correlated the brain signals with vocal tract motion and, from that motion, could then map brain patterns to sounds.
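To make the two-step idea concrete (brain signals to vocal tract motion, then motion to sound), here is a minimal sketch. It is not the researchers' method: it swaps the deep learning models for plain ridge regression and runs on synthetic data. Every name, shape, and parameter in it (`fit_ridge`, `make_synthetic_session`, 64 electrodes, 12 articulator channels, 32 acoustic features) is a hypothetical choice for illustration only.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1e-3):
    """Closed-form ridge regression: W minimizing ||XW - Y||^2 + alpha * ||W||^2."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def make_synthetic_session(n_samples=2000, n_electrodes=64,
                           n_artic=12, n_acoustic=32, seed=0):
    """Fake recording: neural features drive articulator motion, which drives sound.

    All shapes are made up for this sketch; nothing here is real data.
    """
    rng = np.random.default_rng(seed)
    neural = rng.standard_normal((n_samples, n_electrodes))
    brain_to_tract = rng.standard_normal((n_electrodes, n_artic))
    artic = neural @ brain_to_tract + 0.1 * rng.standard_normal((n_samples, n_artic))
    tract_to_sound = rng.standard_normal((n_artic, n_acoustic))
    acoustic = artic @ tract_to_sound + 0.1 * rng.standard_normal((n_samples, n_acoustic))
    return neural, artic, acoustic


def train_two_stage(neural, artic, acoustic):
    """Stage 1: neural -> articulator motion. Stage 2: articulator motion -> acoustics."""
    w_brain_to_tract = fit_ridge(neural, artic)
    w_tract_to_sound = fit_ridge(artic, acoustic)
    return w_brain_to_tract, w_tract_to_sound


def decode(neural, w_brain_to_tract, w_tract_to_sound):
    """Chain both stages: only neural data is needed at decode time."""
    return (neural @ w_brain_to_tract) @ w_tract_to_sound


neural, artic, acoustic = make_synthetic_session()
split = 1500  # first 1500 samples for training, the rest held out
w1, w2 = train_two_stage(neural[:split], artic[:split], acoustic[:split])
predicted = decode(neural[split:], w1, w2)
correlation = np.corrcoef(predicted.ravel(), acoustic[split:].ravel())[0, 1]
print(f"held-out correlation: {correlation:.3f}")
```

The point of the intermediate stage is that the articulator recordings are only needed during training; once both mappings are fitted, decoding runs from the neural signals alone. A real system would need nonlinear, time-aware models and real recordings, which this toy deliberately leaves out.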

The results are not perfect, but listeners understood 70% of the produced sentences, although they had to pick words from a list rather than transcribe whatever they heard. Oddly, the process worked even when people mouthed the words silently, moving their lips without making actual sounds, though the results were not quite as good.

It appears that the training is specific to the speaker. The paper even says “…in patients with severe paralysis… training data may be very difficult to obtain.” That could limit its usefulness for people who already can’t speak, which, coupled with the poor quality of the speech, means this work remains unlikely to change anyone’s life in the immediate future. However, it is a great first step both toward helping people with speech problems and, of course, toward the dystopian future with that eventual brain interface that will supplant the cell phone.

This is more scientific than the last time we saw a brainwave talking apparatus. If you want to influence your brainwaves instead of reading them, we have been experimenting with that.

So, holistically, if we think of this in terms of lab equipment life-cycle requirements, with the human as the lab equipment… we sure don’t have a standardized specification.

Basically, one method I thought might be needed for better accuracy, and which I learned is used by Eigenvector Research, is a set of algorithm steps that use the instrument’s operation/performance specification values to adjust the prediction model. That model uses a training data set for calibration, tolerance limits, and validation against target and non-target data sets.

So, thinking that way… each human needs some sort of specification check before each prediction routine, a kind of human calibration adjustment, so the model can predict more generally, I’m thinking. Anyhow… I think the ELINT/RINT/RNM or whatever they call it is making me feel weird now. At least they’re not torturing my anal rectal cavity and it is more of a head buzz. Now I go do other stuff.

What you talking Willis?

“However, scientists recently reported in the journal Nature that they have successfully decoded brain signals into speech directly…”

What does a drunk brain sound like?

Probably gibberish due to incoherent thinking.

Might work in conjunction with the truth serum.

Hey Dude, pull my resistor! I wuv you maaan.

Off topic but not (1): I’ve enjoyed the assistive tech articles that pop up from time to time on Hackaday. Bettering people’s lives through technology in a direct way (and research into it) is really important for hackers to get into and contribute towards.

Further off topic but not (2): A suggestion for the editors would be to improve the categorization of taxonomies and tagging of articles, as there are a load of posts on HaD that are orphaned due to wild freetagging of content.

It would be great if you could make dictation software work with this that would not only be noise-resistant but could also be much faster than normal speech after some training.

A few years ago Chang gave a keynote speech at Interspeech in SF; he’s a brilliant neurosurgeon, engineer and scientist. Would not be surprised if he’s a hacker too. Congratulations on the paper in Nature, well done!

It would be interesting to see how brain plasticity and deep learning combine with this.

You would expect the human to get better at working the device; the human brain is quite a good neural network 🙂

You might get synergies between the brain and the algorithm, or they might end up fighting each other.

I can actually imagine that a static algorithm would work better. Let the user learn how to use the machine, just being able to make a full range of sounds with brain signals + significant time might be all that is needed.

Why didn’t Capt. Pike have something of that sort? (Instead of that dumb blinking light. What was it? 1 blink for yes, 2 for no.)

I have said before that Pike needs to learn Morse code. And yes, Morse code survived. Both Kirk and Uhura were able to copy Morse code in at least one episode.

The tech was abandoned because in real life, placing probes inside the human body is harmful. What I remember is recurring infections and wires migrating through the body cutting up the person’s insides. I am too lazy to dig up the history on trying to make people walk through the use of electrodes but that would be the source material to prove the point if you want to learn more and if it can be found.

I create speech from brain signals too. It is a proud day for Rube Goldberg fans everywhere ;)