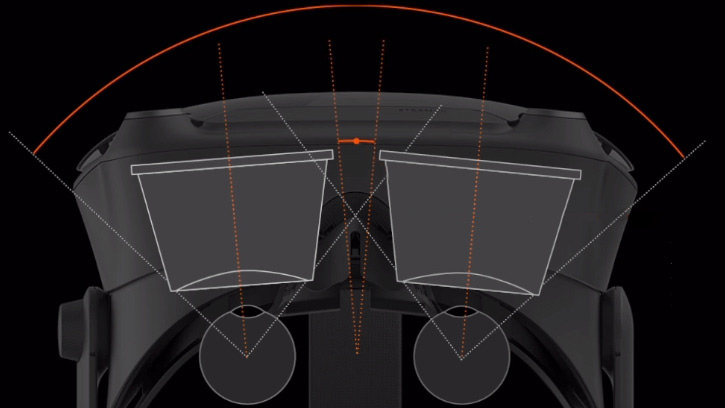

VR headsets have been enjoying new life for a few years now, and when it comes to head-mounted displays (HMDs), field of view (FOV) is one of the specs everyone is keen to learn. Valve Software have published a highly technical yet accessibly presented document that explains why FOV is such a complex thing for HMDs. FOV is relatively simple for devices such as cameras, but it becomes much harder to define, let alone measure, once lenses are used to put images right up next to eyeballs.
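For a camera, the relationship really is a one-liner. As a minimal sketch, assuming an ideal rectilinear lens focused at infinity with no distortion, the horizontal FOV follows from sensor width and focal length alone (the function name is hypothetical). None of those assumptions hold for an HMD, where lens distortion, eye relief, and each wearer's face shape all change what the eye actually sees.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal FOV of an ideal rectilinear camera focused at infinity.

    FOV = 2 * atan(w / (2 * f)); assumes no lens distortion.
    """
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


# Full-frame sensor (36 mm wide) behind a 50 mm lens
print(f"{horizontal_fov_deg(36.0, 50.0):.1f} degrees")  # roughly 39.6 degrees
```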

If there’s one main point Valve is trying to make with this document, it’s summed up as “it’s really hard to use a single number to effectively describe the field of view of an HMD.” They plan to publish additional information on modding as well as optics, so keep an eye on their Valve Index Deep Dive publication list.

Valve’s VR efforts remain interesting from a hacking perspective, and as an organization they seem mindful of the keen interest in modifying and extending their products. The Vive Tracker was self-contained and had an accessible hardware pinout expressly to make hacking easier. We also took a look at Valve’s AR and VR prototypes, which give some insight into how and why they chose the directions they did.

Surely the fovea and eye tracking will play a role in there somewhere.

We can almost imagine a kind of video compression: render with full accuracy and color only where the eye is looking (the fovea), and refresh the rest of the visual field (the periphery) only when the eye moves, using eye tracking of course.
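Deciding "when the eye is moving" is itself a classification problem. One standard approach is velocity-threshold identification (I-VT): any gaze sample whose angular velocity exceeds a threshold is labeled part of a saccade. The sketch below is hypothetical throughout: the function name, the 30 deg/s threshold (a commonly cited ballpark that in practice depends on tracker noise and sample rate), and the sample trace are all assumptions, not part of the scheme described above.

```python
import math
from typing import List, Tuple

Gaze = Tuple[float, float]  # (horizontal, vertical) gaze angle in degrees

# Assumed threshold; real I-VT setups tune this to the tracker's noise and rate.
SACCADE_THRESHOLD_DEG_PER_S = 30.0


def classify_samples(gaze: List[Gaze], sample_rate_hz: float,
                     threshold: float = SACCADE_THRESHOLD_DEG_PER_S) -> List[bool]:
    """Label each interval between consecutive samples: True if it looks like a saccade."""
    dt = 1.0 / sample_rate_hz
    labels = []
    for (x0, y0), (x1, y1) in zip(gaze, gaze[1:]):
        # Small-angle approximation: treat angular displacement as Euclidean distance.
        velocity = math.hypot(x1 - x0, y1 - y0) / dt
        labels.append(velocity > threshold)
    return labels


# Hypothetical 120 Hz trace: fixation, a 10-degree jump, then fixation again.
trace = [(0.0, 0.0), (0.05, 0.0), (0.1, 0.0), (5.0, 0.0), (10.0, 0.0), (10.05, 0.0)]
refresh_periphery = classify_samples(trace, sample_rate_hz=120.0)
print(refresh_periphery)  # [False, False, True, True, False]
```

In the compression scheme above, a `True` label would be the cue to redraw the periphery.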

It would reduce the 3D processing load, but it would need a heck of a response time to avoid feeling dizzy, since saccades are darn fast.

LG/Google already showed 120 Hz 5K panels, which are fast enough assuming no other sources of latency, so that part is covered. The limiting factor is the 3D engine/driver/OS architecture: there are just too many large sources of latency there to squeeze a quick foveal update in between normal frames. Those sources exist purely because of legacy software design decisions; they are not inherently necessary.

The limiting factor is the accuracy, precision, reliability, and speed of eye tracking. Latency caused by the 3D engine/driver/OS architecture has already been dealt with for VR over the past six years; it’s under 20 ms in modern VR headsets.
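A back-of-envelope budget shows why tracker speed matters so much. In this sketch every stage value is a placeholder, not a measurement of any real headset, and the stages are assumed to run strictly one after another (a worst case). The names are hypothetical.

```python
from typing import Dict


def frame_period_ms(refresh_hz: float) -> float:
    """Time between frames (or samples) at a given rate."""
    return 1000.0 / refresh_hz


def total_latency_ms(stages: Dict[str, float]) -> float:
    """Worst-case end-to-end latency, assuming stages run strictly in sequence."""
    return sum(stages.values())


# Placeholder values for illustration only.
stages = {
    "eye_tracker_wait": frame_period_ms(120.0),  # hypothetical 120 Hz tracker: up to one sample period
    "render": 5.0,                               # assumed GPU render time
    "display_frame": frame_period_ms(120.0),     # up to one 120 Hz frame before photons reach the eye
}

BUDGET_MS = 20.0
total = total_latency_ms(stages)
print(f"total {total:.1f} ms, headroom {BUDGET_MS - total:.1f} ms")
# prints "total 21.7 ms, headroom -1.7 ms"
```

With these placeholder numbers, the tracker's sampling interval alone consumes roughly 8 ms of a 20 ms budget, which is one way to see the commenter's point about eye-tracking speed.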

When you saccade, you are effectively blind. Anyone who has ever had a case of rapid nystagmus can attest to this.

Low-persistence displays used in VR actually counter this. See: http://blogs.valvesoftware.com/abrash/down-the-vr-rabbit-hole-fixing-judder/

That’s if you are tracking an object or rotating your head while keeping your eye fixed on a single point, not saccading.
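The judder argument in the linked post concerns exactly this case. As a rough sketch (our simplification, not code from that post), when the eye moves smoothly while a displayed pixel stays lit and fixed, the image smears across the retina by about the eye's angular velocity times the persistence time. The head speed and pulse length below are assumptions.

```python
def smear_deg(angular_velocity_deg_per_s: float, persistence_ms: float) -> float:
    """Angle an image is dragged across the retina while one frame stays lit.

    Assumes smooth eye motion (pursuit, or head rotation with gaze held on a point)
    while the displayed pixels stay fixed for the whole persistence time.
    """
    return angular_velocity_deg_per_s * persistence_ms / 1000.0


HEAD_SPEED_DEG_PER_S = 120.0  # hypothetical moderate head turn

full_persistence = smear_deg(HEAD_SPEED_DEG_PER_S, 1000.0 / 90.0)  # pixel lit for a whole 90 Hz frame
low_persistence = smear_deg(HEAD_SPEED_DEG_PER_S, 2.0)              # assumed 2 ms illumination pulse
print(f"{full_persistence:.2f} deg vs {low_persistence:.2f} deg")
# prints "1.33 deg vs 0.24 deg"
```

During a saccade, by contrast, vision is suppressed anyway, so this smear model does not apply.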

Actually, rendering based on each pixel’s distance from where the eye is looking drastically reduces the load on the rendering system. For example, at the point of focus the frame might be rendered at RTX/4K quality, while at the edge it might be only shaderless 240p, without the user even noticing. I first heard about it while watching a presentation on the FOVE 0.
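A minimal sketch of the distance-based idea: convert a pixel's on-screen distance from the gaze point into degrees of eccentricity, then pick a resolution scale that falls off linearly outside a foveal ring. Everything here is hypothetical (function name, ring sizes, minimum scale, and a constant pixels-per-degree that ignores lens distortion); it is not how FOVE or any particular engine does it, and real implementations typically use hardware features such as variable-rate shading or layered multi-resolution rendering.

```python
import math


def resolution_scale(px: float, py: float, gaze_x: float, gaze_y: float,
                     pixels_per_degree: float = 20.0,
                     inner_deg: float = 5.0, outer_deg: float = 30.0,
                     min_scale: float = 0.25) -> float:
    """Fraction of full resolution to render at pixel (px, py) given the gaze point.

    All defaults are hypothetical. Eccentricity is estimated by dividing the
    on-screen distance by a constant pixels-per-degree, ignoring lens distortion.
    """
    eccentricity_deg = math.hypot(px - gaze_x, py - gaze_y) / pixels_per_degree
    if eccentricity_deg <= inner_deg:
        return 1.0          # foveal region: full resolution
    if eccentricity_deg >= outer_deg:
        return min_scale    # far periphery: coarsest level
    # Linear falloff between the inner and outer rings.
    t = (eccentricity_deg - inner_deg) / (outer_deg - inner_deg)
    return 1.0 + t * (min_scale - 1.0)


gaze = (960.0, 540.0)
for px in (960.0, 1310.0, 1960.0):
    print(px, resolution_scale(px, 540.0, *gaze))
# 960.0 1.0
# 1310.0 0.625
# 1960.0 0.25
```

A linear ramp is the simplest choice; a falloff matched to how visual acuity drops with eccentricity would be a natural refinement.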