USB stands for Universal Serial Bus, and ever since its formation, the USB Implementers Forum has been working hard on the “Universal” part of the equation. USB Type-C, commonly called USB-C, is a connector standard that marks a significant new chapter in their epic quest to unify all wired connectivity in a single specification.

Many of us were introduced to this wonder plug in 2015 when Apple launched the 12-inch Retina MacBook. Apple’s decision to put everything on a single precious Type-C port had its critics, but it was an effective showcase for a connector that could handle it all: charging, data transfer, and video output. Since then, it has gradually spread to more devices. But as the recent story on the Raspberry Pi 4’s flawed implementation of USB-C showed, the quest for a universal connector is a journey with frequent setbacks.

The Incredible Promise of One Plug To Rule Them All

It doesn’t take an electrical engineer to feel a draw to USB-C. Many consumers fell under its spell as soon as they saw it was reversible. No more annoyances with a USB plug that mysteriously manages to be upside-down both ways! But it had even more to offer.

The new connector was slim enough for the latest lightweight gadgets like tablets and smartphones, doing away with thick barrel connectors while carrying enough power to charge laptops. With a greatly increased number of signal wires compared to prior versions, USB-C has enough throughput to feed multiple 4K screens, yet it retains backward compatibility through cheap adapters. And the specification offers the flexibility to go beyond USB with Alternate Mode support for Thunderbolt, MHL, and protocols that don’t even exist yet.

A single universal port is appealing to product designers, who are always working to tame a hodgepodge of ports, each with different size, shape, and mechanical strain requirements. Replacing them all with a single neat row of USB-C ports sounds like paradise. All of this made the rollout of USB-C an eagerly anticipated event across the industry, including here at Hackaday. Things seemed off to a good start as the standard launched on premium laptops like the Retina MacBook and Chromebook Pixel, and on high-end cell phones.

Why Doesn’t My USB Cable Work?

Those early products had the benefit of connecting mostly to peripherals that came in the same box (chargers, headphones, etc.). We didn’t detect a sour taste with USB-C until third-party accessories started coming onto the market. That’s when ambitious plans from USB-IF meetings finally met that perilous land we call the real world.

One promise of USB-C is that we would no longer need to dig through a big tangle of cables: we’d just have USB-C cables, and everything would just work. In essence, this removes the need for the user to know anything at all about USB; even which end of the cable goes into which device becomes moot.

Except it didn’t. The lowest point came when a USB-C cable manufacturer managed to swap the power and ground wires and destroyed Benson Leung’s Chromebook Pixel. Hopefully we have left those horror stories in the past, but even when cables are made correctly to specification, there’s still no guarantee things will “just work”. USB-C is a cable and connector specification, but those cables may be expected to serve numerous standards: USB 2.0/3.1/3.2, Power Delivery (USB-PD), and Alternate Modes, which encompass numerous other standards. Trying to be all things to all devices through these multiple valid data speeds and power levels makes for six different ways to build a spec-compliant cable. This complicates the testing matrix when developing a USB-C device, not only because lower-spec cables may not work when a higher spec is required, but also because non-compliant implementations can mean a higher-spec cable fails where a lower-spec cable works (as with the initial faulty batch of Raspberry Pi 4 boards).
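One plausible reading of those “six different ways”, and the one assumed here, is three data tiers (USB 2.0, USB 3.1 Gen 1, USB 3.1 Gen 2) crossed with two current ratings (3 A and 5 A). The short Python sketch below simply enumerates that matrix; the tier labels and ratings are our assumption for illustration, not a quote from the specification.

```python
from itertools import product

# Assumption: the six compliant C-to-C cable variants are three data tiers
# crossed with two current ratings. These labels are illustrative.
DATA_TIERS = ["USB 2.0", "USB 3.1 Gen 1", "USB 3.1 Gen 2"]
CURRENT_RATINGS_A = [3, 5]


def compliant_cable_variants() -> list[tuple[str, int]]:
    """Return every (data tier, current rating in amps) combination."""
    return list(product(DATA_TIERS, CURRENT_RATINGS_A))


if __name__ == "__main__":
    for tier, amps in compliant_cable_variants():
        print(f"{tier:<14} rated for {amps} A")
```

Every one of those rows is a valid cable, which is exactly why a device has to be tested against all of them.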

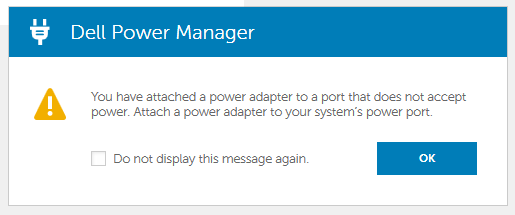

These confusing cables and ports present a poor user experience. Just because USB-C could do it all doesn’t mean a particular USB-C port will. Does it supply power? Does it accept power? Does it carry video? There’s no way to tell just by looking. It gets worse as we move away from mainstream devices. For example, Google’s Coral development board has two USB Type-C ports. One is used to supply power, the other carries USB data, and the only way to tell which is which is to look at the PCB silkscreen. Compare this to a barrel jack or other legacy power cord. They certainly weren’t universal, but users didn’t confuse them with data connectors like USB, FireWire, or Ethernet.

Getting There Will Take Practice

On the bright side, the situation is still better than the bad old days. When USB 1.0 launched, one of the ports it aimed to replace was the PS/2 port. Mice and keyboards had mechanically identical PS/2 plugs but would not work if plugged into the wrong port. Even worse, the port had no provision for hot-swapping, and some systems protected themselves from voltage spikes by blowing a non-user-replaceable fuse. In contrast, making a mistake when connecting or disconnecting USB-C devices will not be destructive (ignoring grossly incompetent cables). Sometimes they may not “just work”, but at least they fail gracefully.

We’re seeing more and more products designed around the advantages of USB-C, and the hardware hacking community is diving in. “Convert it to USB-C power” is quickly becoming a common project theme. You can add it to an old laptop, or use it to charge your wireless mouse. It’s even been added to an Easy-Bake Oven. This category might eventually reach “Put a Raspberry Pi in it” levels of popularity.

High-profile episodes like the Raspberry Pi 4 power circuit design serve as a community-wide lesson, so that hopefully the same mistakes will not be made again. Key to this will be a helping hand in understanding the specification and how to test new designs. We also expect proven reference designs, like a proper USB-C power sink, to quickly become standardized patterns that people can drag and drop whole into their EDA software of choice. As we all learn and get better at it together, hopefully USB-C will actually become that one plug to connect them all.
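For the curious, here is a simplified Python model of why sharing one resistor between the two CC pins, as the Pi 4 did, can trip up an electronically marked cable. It assumes the source simply classifies the resistance it sees on CC, using the Type-C values Rd = 5.1 kΩ ±10% and Ra = 800 Ω to 1.2 kΩ, and it assumes the cable’s Ra ends up in parallel with the sink’s single Rd. Real sources detect these terminations by the voltage on CC, so treat this as an illustration rather than an implementation.

```python
# Simplified model of how a USB-C source might classify the termination on a CC pin.
# Assumed Type-C values: Rd = 5.1 kOhm +/-10%, Ra = 800 Ohm to 1.2 kOhm.
# Real sources sense a voltage on CC; they do not measure resistance directly.
RD_RANGE_OHM = (4590.0, 5610.0)
RA_RANGE_OHM = (800.0, 1200.0)

RD = 5100.0  # sink pull-down on CC
RA = 1000.0  # hypothetical e-marked cable termination


def parallel(*resistors_ohm: float) -> float:
    """Equivalent resistance of resistors connected in parallel."""
    return 1.0 / sum(1.0 / r for r in resistors_ohm)


def classify(ohms: float) -> str:
    """Label a CC termination by which assumed resistance window it falls in."""
    if RD_RANGE_OHM[0] <= ohms <= RD_RANGE_OHM[1]:
        return "Rd: sink attached"
    if RA_RANGE_OHM[0] <= ohms <= RA_RANGE_OHM[1]:
        return "Ra: cable or accessory"
    return "unrecognized"


if __name__ == "__main__":
    # Proper sink: each CC pin has its own Rd, so the active CC line shows plain Rd.
    print("separate Rd per CC pin:", classify(RD))
    # Simplified Pi 4 model: CC1 and CC2 share one Rd, and the cable's Ra
    # appears in parallel with it (about 836 Ohm).
    print("shared Rd + e-marked cable:", classify(parallel(RD, RA)))
```

With a separate Rd per pin the source sees an ordinary sink; in the shared case the combined value of roughly 836 Ω falls in the Ra window, so a source can mistake the board for an accessory (such as an audio adapter) and withhold power.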

Barrel connectors certainly weren’t / aren’t a panacea. It’s far easier to destroy something by using the wrong barrel connector brick than by using the wrong USB-C cable or power supply.

I am still wondering why so many more devices are being destroyed because people don’t hold them steady while pushing in the USB-whatever cable, resulting in the precious device crashing down onto the (cement/concrete/pickyourflavor) floor. Saw it happen Saturday. It doesn’t help that USB/-+°^C is “reversible” when the damage done by the lack of a sturdy supporting hand is “irreversible”.

Became a lot better when they stopped throwing the occasional “evil center negative” into the mix here and there. :-D

Oh, I hate those. And the symbol that indicates this is somewhat vague to normal people.

One way is to plug a Dymo label printer’s 24 volts DC power supply into a device that runs on 12 volts. The Dymo people should have known that barrel plug size was the most common one used for 12V devices, like 3.5″ external hard drives. But noooo, they just had to select *that* size even though there’s a wide selection of less commonly used plugs and jacks that either will not fit into or will not make contact with both conductors in the almost universal 12V connector.

Fortunately I only had to remove the 12V over voltage protection shunt (which fortunately permanently shunted instead of blowing open) to get the Western Digital MyBook drive working again. The USB 3.0 to SATA adapter inside the case has a very robust 5V regulator to protect itself, but it passes 12V straight through to the drive. Doh!

“Mice and keyboard had mechanically identical PS/2 plugs but would not work if plugged into the wrong one” – This is just laziness on the part of the software – on some non-PC machines it was even possible to plug two keyboards or two mice in and it’d still ‘just work’.

I don’t know if “lazy” is the right word, it had more to do with assumptions than anything.

PS/2 is a specific serial protocol, but in the PC world initially was made to be backwards compatible with older and different hardware used in that circle.

Oversimplified, the PS/2 keyboard port, while electrically compatible with the AT protocol, could also be backwards compatible with the XT keyboard protocol which was quite different. This isn’t something you’d ever see from a mouse though.

On the other hand, the PS/2 mouse port could be backwards compatible with RS232, which wasn’t a normal thing for PC keyboards.

Later on the PS/2 ports were the old protocols and could switch over to USB.

You’re correct that this is a software issue, although specifically firmware in the BIOS which means many people never got updates to that software. Part of the PC compatible world at the time was cheaper mostly compatible clones, where the manufacturer didn’t much care about forward compatibility worries, for better or worse, since that just upped the price.

In the non-PC world you mostly didn’t see these assumptions, or if so they were different ones. I know the one SGI I have with a ps/2 port never had to worry about XT keyboards or serial mice :P

Your memory is correct. However, on PCs with PS2 ports they were typically handled by an Intel 8042 or 8051 family microcontroller running firmware that was usually mask-programmed into the part and could not be changed. The micro interfaced the serial KB & mouse to the PC’s main Intel processor (starting with the 80286) via parallel IO ports at 0x60 and 0x64 along with an interrupt. In laptops this chip was also given the responsibility of rudimentary power management and keyboard scanning for the built-in laptop keyboard. They also used it for kludges, like the horrible A20 toggle. For any “modern” PC with these ports, the micro has been absorbed into the Super I/O chip. https://wiki.osdev.org/%228042%22_PS/2_Controller

That should be a subject for a HaD article. Why did IBM route a part of the memory control of the PC-AT through the keyboard or keyboard controller? That led to cloners coming up with a superior way while also keeping the original IBM way in case of some rare software dependency. The BIOS setting was typically called Fast A20.

Eventually the fast way became the only way, after a while of being the default setting instead of the slow setting being default.

The reason was simple. There were some idiots making programs that abused the fact that there was only 1 MB of address space (20 bits of address width). Because addresses wrapped around at the 1 MB boundary, you could have segments that were partially at the upper end and partially at the lower end of the memory space. Simply adding more address bits would break these programs, hence the need to manually enable the A20 address line (the 21st address bit, counting from A0).
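To make the wraparound concrete, here is a minimal Python sketch of real-mode address calculation (segment × 16 + offset). The function name and the `a20_enabled` flag are ours, chosen for illustration: with A20 masked, only address bits A0 to A19 survive, so FFFF:0010 lands back at address 0.

```python
def physical_address(segment: int, offset: int, a20_enabled: bool) -> int:
    """Compute a real-mode physical address as segment * 16 + offset.

    The sum can reach 0x10FFEF. With the A20 line masked off, only address
    bits A0..A19 reach memory, so anything past 1 MB wraps to the bottom.
    """
    linear = (segment << 4) + offset
    if not a20_enabled:
        linear &= 0xFFFFF  # keep the low 20 bits (A0..A19)
    return linear


if __name__ == "__main__":
    # FFFF:0010 points exactly at the 1 MB boundary.
    for gate in (False, True):
        addr = physical_address(0xFFFF, 0x0010, a20_enabled=gate)
        print(f"A20 {'on ' if gate else 'off'}: FFFF:0010 -> {addr:#07x}")
```

Programs that relied on the wrap expected the first result; enabling A20 gives them the second, which is why the gate had to stay off by default.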

Can’t connect a PC or PC/XT cable to a PS/2 port without an adapter with more smarts than a dumb point to point wired cable. Or you need one of the keyboards that were switchable between PC/XT and AT modes. I once had one that had a 3rd position on its switch but I never was able to find out what type of computer position 3 was for.

This. Many years ago, I had a coworker w/ a Dell docking station (with a lone PS2 port) for his laptop. I remember him upgrading his laptop from NT4 to 2000, and 2000 would identify whether the docking station had a keyboard or mouse plugged into the PS2 port, and allowed either to work correctly.

When USB first came out, if you had to go into BIOS or were booting from a CD, etc., you wished you had a PS2 keyboard available b/c USB didn’t have full support. USB is definitely better now, but I don’t get the hate for PS2, which seemed like a much bigger step over its predecessors of AT keyboards and serial mice.

For mobile devices where there are limited surfaces for connectors, it makes sense. I can’t see USB C replacing all the cables in a desktop as any of the alt functions of the connector requires a lot of resources to support. PD needs extra power circuitry. PCIe is very limited from desktop CPU unless you have HEDT. It is also silly to have external expansion boxes when you could plug in an expansion card.

Unless you plug cables in and out all day from the back of a PC, the connector orientation aspect doesn’t offer much. There are front panel connectors, you know, and hipsters like their “rigs” to take up desk space. The orientation can easily be figured out from the PCB, as USB connectors, unlike RJ45, do not have an upside-down variant (yet).

Another huge Joe Kim art win! Great image!

I really don’t get why PS/2 received so much hate.

They were not interchangeable?

Well sort of. I seem to remember my keyboard working despite which port I plugged it into. The mouse, no, not so much. For a while I think USB mice and PS/2 keyboards were popular. Or was that just me not replacing keyboards as often as mice? Anyway, during that time for me the mouse was already USB so either port worked for the keyboard.

They were not hot-swapable?

That’s news to me!

I know I hot-swapped keyboards many many times and it just worked. Mice… well.. if there wasn’t a mouse plugged in at the time the computer booted I remember it not seeing a mouse even if I plugged one in until I rebooted. I think I remember being able to hot swap them after that though. Also, I always thought that was a software issue, the OS stopped looking for a mouse if it didn’t see one by a certain point. Was that a real hardware limitation?

In my anecdotal experience the only problem I ever had with USB or PS/2 for keyboards and mice was always that if I needed one then what I had on hand was the other! Besides that either format just worked.

USB anything else though… for years after motherboards stopped coming with PS/2 connectors by default and available expansion ports were greatly reduced in favor of USB, I found USB to be crap. It was slow and UNRELIABLE! I worked tech support for a major ISP, and they taught the installers to just plug in a USB network device even if there was a built-in network card already. They even taught them to tell the customers that the USB device was “better”. All so they could hire a cheaper, less educated tech rather than teach them what a PCI slot is for.

Then of course I would get these customers on the phone, irate because their internet was slow and unreliable! Grrrr!

USB has mostly gotten better, but it sure did take a long time, and it was pushed as a panacea for everything long before it was even beyond alpha-testing quality. Even today I miss more dedicated ports. How many times have I sat and waited for an Arduino sketch upload to time out, only to realize that the last time I unplugged the board, the OS for whatever reason gave it a new descriptor (COM port number for you Windows users)? Do that enough times and you will have to reboot just to get it to work at all! I still contemplate installing a dedicated RS-232 port and getting an RS-232 to TTL adapter.

Maybe I got that backwards and it was the mouse that worked in either port. I don’t remember.

Either way, so what? Unless you are colorblind it isn’t exactly hard to tell them apart!

I’m willing to bet nearly 100% of the human population is colorblind (heck, even completely blind, maybe except for mid-IR) in their fingertips. Seeing the port you plug into is a rare luxury.

Why would that be rare? If it’s a desktop you only really install those the day you set it up. Didn’t you turn the computer so you can see the back then turn it around when it’s all hooked up? I sure did!

If it’s a laptop then well.. even easier.

Umm, so the real problem with PS/2 was that it was mounted on the back rather than the front of the box? Well, to each their own. I prefer to just plug my keyboard and mouse in once, at computer setup time, with the case pulled out, turned around, or whatever, so that I can easily see and reach what I am doing. If I thought I was going to switch things around, well, I had a KVM switch for that.

But anyway… they were rare but I have seen PCs that extended the PS/2 ports to the front as well.

No, placement of the PS/2 port was never the issue (probably even an advantage – front mounted ports would probably get hit with chairs etc. more often). And yes, in most cases mouse and keyboard are a set it and forget it kind of thing.

My issue with your argument is that the color is the least useful feature to differentiate two semi-incompatible ports with. It only works if you can look at it, and not all setups even afford you the luxury of looking. My father has his old PC inside a cabinet under his desk and cables are never quite long enough to allow you to pull the tower far enough to actually see what you are doing, so everything is done by touch.

All other ports (except for audio, which shares the color coding sin) can be operated with one hand, in the dark, by feel alone. From all PC ports I have encountered in my life, PS/2 shares the podium with DIN-5 (for AT keyboard) for the hardest connector to plug in the correct way by touch alone.

@MikrySoft – Yes, in those days everybody’s parents and grandparents had their PCs entombed in those dumb built-in-to-the-desk cabinets because OMG, we can’t actually look at technology. Technology is ugly!

Encouraging people to take their PCs out of those cabinets is a feature not a flaw because they only served to limit airflow thus reducing lifespan and increasing the frequency at which you have to come out and replace their shit for them.

Replace? That box is still running 386SX. It’s old enough to drink.

Then again the majority of the USB ports on my tower computers aren’t easily seen. My bet is on the lazy accusation another made. Apologies to those where that shoe fits.

There are several easy ways to get around this:

You can crawl under the table behind the computer.

You can use a mirror and crawl not that deep.

You can move the computer until you see the connectors.

You can remember, which port is at which place.

No truer words have been spoken. Yet, today I discovered that I’d mistakenly put a USB type-A into the unused Ethernet port on my Pi.

PS2 became hotswappable by convention if not default once enough computers were written off because the cable got pulled out by accident etc. But it is certainly true you shouldn’t assume hotswapping won’t brick the machine!

USB now is good enough to be a default. But I do find it annoying that PCI devices are getting rarer and more costly because of USB’s popularity, it seems (I wish more devices still came with serial and parallel ports; when you want to interface with your computer directly they are IMO a better interface than USB). I also much prefer a nice slot-and-forget PCI device to running out of USB ports again or having to pull out hubs and devices by the score to untangle the cables when adding something new.

Hell, I still have a PS2 keyboard connected to my gaming computer; it just works and I’ve got a free USB port left. Also, PS2 is way easier to implement for tinkerers.

So which USB C cables should I be buying to guarantee that everything “just works”?

In short, you can’t. As mentioned, in theory you should be able to just get USB-C Thunderbolt 3 cables, but those won’t work with a Pi 4, and probably not with a Switch either.

All of them.

The one on Amazon

That really depends on what you want to “just work”.

There are 3 basic cable types (looking at just USB C to USB C cables)

– USB 2.0 cables

Like the cable supplied with the new iPad. These can be up to 4m long and carry 5V@3A, or up to 5V@5A if the cable is electronically marked (one or both plugs contain a chip that reports the cable description, such as make, model, length, and max current, to the host/charger).

Transfer speed is limited to USB2.0 (480Mbps). It won’t work for any of the alternate modes (Display Port, audio, etc.)

– USB 3.0 cables

Going by the spec, all USB 3.0 (and up) cables have to be electronically marked. There are two power levels available: 60W (20V@3A) and 100W (20V@5A). 60W can technically work with a non-marked cable, but not all chargers will support that. On the other hand, marked cables can have issues with poorly designed devices (like RPi 4).
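A minimal Python sketch of that cable-current limit, under the simplifying assumption that a charger treats any unmarked C-to-C cable as a 3 A cable and only trusts 5 A when an e-marker says so. The function names are hypothetical and not part of any PD library.

```python
UNMARKED_CABLE_LIMIT_A = 3  # assumption: unmarked C-to-C cables are treated as 3 A


def cable_current_limit_a(e_marked: bool, marked_current_a: int = 3) -> int:
    """Current the source may assume the cable can carry (hypothetical helper)."""
    return marked_current_a if e_marked else UNMARKED_CABLE_LIMIT_A


def max_power_w(volts: float, source_current_a: float, e_marked: bool,
                marked_current_a: int = 3) -> float:
    """Power at a given PD voltage, capped by both the charger and the cable."""
    amps = min(source_current_a, cable_current_limit_a(e_marked, marked_current_a))
    return volts * amps


if __name__ == "__main__":
    print(max_power_w(20, 5, e_marked=False))                     # unmarked cable
    print(max_power_w(20, 5, e_marked=True, marked_current_a=5))  # 5 A e-marked cable
```

So a 20V charger that can source 5A still tops out at 60W over an unmarked cable, and only reaches 100W with a 5A e-marked one.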

Length is determined by maximum speed the cable supports.

– 5Gbps – USB 3.0/USB 3.1 Gen 1/ USB 3.2 Gen 1×1/ USB SuperSpeed (same protocol, new names – one lane at 5Gbps) works up to 2 meters.

– 10Gbps – USB 3.1 Gen 2/USB 3.2 Gen 2×1/USB SuperSpeed+ (one lane at 10Gbps) works up to 1 meter.

– 20Gbps – USB 3.2 Gen 2×2/USB SuperSpeed++ (two lanes at 10Gbps) works up to 1 meter.
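Since the same lane configurations keep getting renamed, a small lookup table helps keep them straight. This Python sketch maps the names listed above onto one record per underlying mode; the lengths are the ones given in this comment rather than an official table, and the names are copied as written here (with “x” in place of “×”).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuperSpeedMode:
    lanes: int
    gbps_per_lane: int
    max_passive_length_m: float  # lengths as given in the comment above

    @property
    def total_gbps(self) -> int:
        return self.lanes * self.gbps_per_lane


GEN1X1 = SuperSpeedMode(lanes=1, gbps_per_lane=5, max_passive_length_m=2.0)
GEN2X1 = SuperSpeedMode(lanes=1, gbps_per_lane=10, max_passive_length_m=1.0)
GEN2X2 = SuperSpeedMode(lanes=2, gbps_per_lane=10, max_passive_length_m=1.0)

# Several names, one underlying mode each (names copied from the list above).
ALIASES = {
    "USB 3.0": GEN1X1,
    "USB 3.1 Gen 1": GEN1X1,
    "USB 3.2 Gen 1x1": GEN1X1,
    "USB SuperSpeed": GEN1X1,
    "USB 3.1 Gen 2": GEN2X1,
    "USB 3.2 Gen 2x1": GEN2X1,
    "USB SuperSpeed+": GEN2X1,
    "USB 3.2 Gen 2x2": GEN2X2,
    "USB SuperSpeed++": GEN2X2,
}


def lookup(name: str) -> SuperSpeedMode:
    """Resolve a marketing name to its lane configuration (KeyError if unknown)."""
    return ALIASES[name]


if __name__ == "__main__":
    mode = lookup("USB 3.1 Gen 1")
    print(mode.total_gbps, "Gbps, up to", mode.max_passive_length_m, "m passive")
```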

Some (non-compliant) USB 3 contain only the bare minimum of wires needed for USB, but will fail when tasked with using any of the Alternate Modes (DisplayPort etc.)

– Thunderbolt 3 cables

Up to 2 meters, the cables are just fully featured USB 3 cables. The 60W/100W distinction from the previous tiers still exists. The speed you get is again determined by length: 40Gbps up to 0.5m (by spec; 0.8m cables exist) and 20Gbps beyond that.

2m at 40Gbps is possible using active cables, but they don’t support USB3 transfers.

Up to 60m at 40Gbps is theoretically possible if/when someone releases optical cables (like Corning did for TB1 and 2)

Good rule of thumb is checking for USB-IF or Thunderbolt 3 certification and appropriate logos (which are trademarked and technically can’t be used without certification). For most use cases, a good passive cable matching the best port you have (either Thunderbolt 3 or USB 3.1) from a reputable brand (Belkin, Plugable, Anker, Cable Matters and CalDigit show up in rankings quite often) will be fine. After that, it becomes the issue of balance between speed, length and price. Special(-ish) cases like Thunderbolt docks or external video cards may benefit from the extra freedom of 2m active Thunderbolt cables.

There is one more caveat to “just works” – behind USB C socket there can be a whole range of devices – USB2, USB 3 (with or without alternate modes like DisplayPort) or Thunderbolt 3 – and even the best cable won’t let you connect a monitor to a USB2 device.

Why would you put the ‘smarts’ in the cable in the first place? After all, the connector is ‘standardized’… right? I would think you’d want a dumb USB-C cable (like Cat-5, for example) that just connects one device to another. Let the smarts be in the endpoints, negotiating what you are connected to via a protocol. In my small mind that seems the logical thing to do…

Not all USB-C cables have the same set of conductors (for the signal), nor are they all capable of the bandwidth that some of the protocols use. Even more important is that to achieve the highest power for USB-PD, the endpoints need to negotiate what they can handle *AND* what the cable can handle. USB-PD can do up to 100W. This is done by putting ~20V at 5A on the cable. If you tried to send 100W at 5V, you would need 20A, and since Ohm’s law still applies, the conductors and connectors would have to be pretty massive. All 100W-capable USB-PD cables are electronically marked.
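The arithmetic behind that is worth spelling out. Current is I = P / V, and the power wasted in the cable grows as I²R, so quadrupling the voltage cuts the cable loss by a factor of sixteen. The Python sketch below assumes a hypothetical round-trip cable resistance of 0.1 Ω; real cables and contacts vary.

```python
def current_a(power_w: float, volts: float) -> float:
    """Current needed to deliver a given power: I = P / V."""
    return power_w / volts


def cable_loss_w(power_w: float, volts: float, loop_resistance_ohm: float) -> float:
    """Power dissipated in the cable: P_loss = I^2 * R over the VBUS + ground loop."""
    i = current_a(power_w, volts)
    return i * i * loop_resistance_ohm


ASSUMED_LOOP_RESISTANCE_OHM = 0.1  # hypothetical; real cables and contacts vary

if __name__ == "__main__":
    for v in (5.0, 20.0):
        i = current_a(100.0, v)
        loss = cable_loss_w(100.0, v, ASSUMED_LOOP_RESISTANCE_OHM)
        print(f"100 W at {v:4.1f} V: {i:4.1f} A, {loss:4.1f} W lost in the cable")
```

With that assumed resistance, 100W at 5V means 20A and 40W burned in the cable, versus 5A and 2.5W at 20V.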

The more they overthink the plumbing…

This is a pretty good example of such.

Anyone ever heard of Cypress EZ-PD BCR?

I prefer different connectors for different things, unless it really makes sense to use the same because they really “always” work. Which is not the case for USB C stuff. When I have to pay attention to which port accepts and which one delivers power, which one can output video and so on, it’s a lot more convenient to have different connectors on there IMO. At least then I can tell at first glance which port is for what. To me, USB C feels like a huge “disimprovement”.

Yup, particularly as the one thing you’re most likely to have plugged in is power, and MagSafe connectors have saved me several times.

Today I was backing up my files and wondering why, with USB ports capable of supplying tons of power for charging batteries, my external hard drive still needed an external wall wart to function. The future is a lie.

This reminds me of the Plug-N-Play fiasco. It took a long time for people to stop calling it Plug-N-Pray. I still don’t like the stupidity of wondering what descriptor will be given – why can’t I have my device programmer always set to one specific COM port, etc.?

You can. Well, it’s not as easy as it used to be as now you have to write rules but once you write them it’s easy.

https://newscrewdriver.com/2018/08/21/telling-usb-serial-ports-apart-with-udev-rules/

Of course you have to be using a decent OS too. Windows and Mac users need not apply here.

Thanks for the link – it looks like this will help a bit.

In a previous job, I had the Python program read the /dev/ttyxxxx port when it connected to an Arduino, and then used that port to communicate with the system’s Arduino. That worked, but only because we only had one Arduino per system.

This helps, but eliminates the ability to just swap the Arduino in the event of a failure. What I would still like is a serial port that enumerates to the same /dev/ttyxxxx port every time, no matter what is connected – kind of like the old serial ports with hardwired addresses.

Still, this is a nice tool to put in my tool belt. Thanks again.
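For the “same device, same name” half of the problem, here is a minimal Python sketch: pick a port by stable attributes instead of by whatever name the OS handed out this time. `PortInfo` is a hypothetical stand-in; pyserial’s `serial.tools.list_ports.comports()` returns records with similarly named attributes (`device`, `vid`, `pid`, `serial_number`, `location`), but check them against your pyserial version. Matching on `location` (the physical USB path) approximates the fixed-address serial port wished for above, since any board plugged into that socket matches.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class PortInfo:
    """Hypothetical stand-in for one serial port record."""
    device: str                   # e.g. "/dev/ttyACM0" or "COM7"
    vid: Optional[int]            # USB vendor ID
    pid: Optional[int]            # USB product ID
    serial_number: Optional[str]  # per-board serial, if the firmware reports one
    location: Optional[str]       # physical USB path, e.g. "1-1.2"


def find_port(ports: Iterable[PortInfo], **criteria) -> Optional[str]:
    """Return the device name of the first port whose attributes match all criteria."""
    for port in ports:
        if all(getattr(port, key) == value for key, value in criteria.items()):
            return port.device
    return None


if __name__ == "__main__":
    # Example data with made-up IDs; in practice this list would come from the OS.
    ports = [
        PortInfo("/dev/ttyACM0", 0x1234, 0x5678, "A1", "1-1.1"),
        PortInfo("/dev/ttyACM1", 0x1234, 0x5678, "B2", "1-1.2"),
    ]
    print(find_port(ports, location="1-1.2"))                # whatever sits in that socket
    print(find_port(ports, vid=0x1234, serial_number="A1"))  # one specific board
```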

Removing the serial ID from the USB device gives a bit more predictability on Windows. You need to patch the ATMega16U2 sources like this:

https://github.com/Ultimaker/UltiboardV2-usbserial/commit/e7604af7d2fb16c6ea84efa12c38f80d6d83bd8f

I just can’t wait for USB 4. I hope it will be triangular, so I can plug it in 3 ways, not in just 2. And it should have 15 pins on each side and a full featured cable should be even thicker and more rigid.

Ah! And in the middle they should now be able to put a barrel jack, but it should be multi-function: headphones, plus power delivery and source. But wait! The end of the jack can be open, and a small tube can run through it to provide a source of water so the computer can use evaporative cooling.

Hold on! the tube is still not so small, we can even add an optic fiber through it!

And don’t get me started on the plans for USB 5.

USB 6 – http://shorturl.at/MPV15

USB 7 – https://www.youtube.com/watch?v=ksPL0HFA3lM

You are onto something here

The main reason I like USB-C over micro USB is that the latter are shitty connectors that would always find room to wiggle on my phone and lose connection because of the loose fit.

Those crappy leaf springs always used the worst pig metal and fatigue in no time at all. So worthless. I want to smack those engineers upside the head who thought that was a good idea.

My #1 frustration with USB C: You can NOT use USB OTG /and/ charge your phone / tablet at the same time! Why? Can’t even trickle charge. Can’t even with Y cables that specifically say they can (ask me how much money I’ve wasted on this). So to use USB OTG, making your phone / tablet into an effective portable computer, you MUST find one that has a separate DC power jack. And good luck with that.

I simply don’t understand why none of the designers / mfgrs thought about the fact that people might want to do this? It’s such an obvious application.

And think about it for “headless” operation of a small Linux system. E.g. RasPi or whatever. You plug in your cell phone, pull up an OTG terminal app, configure the IP address, etc… and off you go. Wouldn’t it be nice to just leave an old cell connected to it to monitor console messages? Or better yet, write an app that responds to simple console messages by showing a status. e.g. big green “OK” if the app is seeing the expected console message and big red “ERROR!” if it’s not. And it could show the IP address at the top so you know where to connect.

Anyway, USB OTG and Charge should work at the same time.

Seems, it’s not out of sight.

a shame we live in a world that aims to design at minimum costs to production with closed-source IP for maximum profits from patenting and iterative improvements for consumer’s low-time preferences.

Thanks for an informative article written with a sense of humor and a great title and illustration. This is what tech writers aspire to.

Lightning connectors look a lot simpler and more robust.

Are they ?

What is a very much needed USB-C device is a PCIe x1 card with an internal Type E connector. They simply do not exist despite there being PCIe x1 cards with one (or more) *external* Type C ports. All a manufacturer would need to do is take one of those designs and change the circuit lines to mount a Type E connector on the inside.

But for PCIe Type E cards the shortest made is x2. No idea why. It’s quite annoying because my motherboard has one x16 slot, one x16 slot that’s only connected as x4 – and shared with the NVME Ultra PCIe x4 SSD slot, so that one cannot be used for anything. All the other slots are just x1. The motherboard has ONE USB C port on its back panel, no Type E connector for a front panel USB C port.

There are PCIe x1 cards with slots for NVME, and there is one (way overpriced) NVME USB C board, but of course its connector is Type C, not Type E, and there is no such thing as a Type E to Type C adapter.