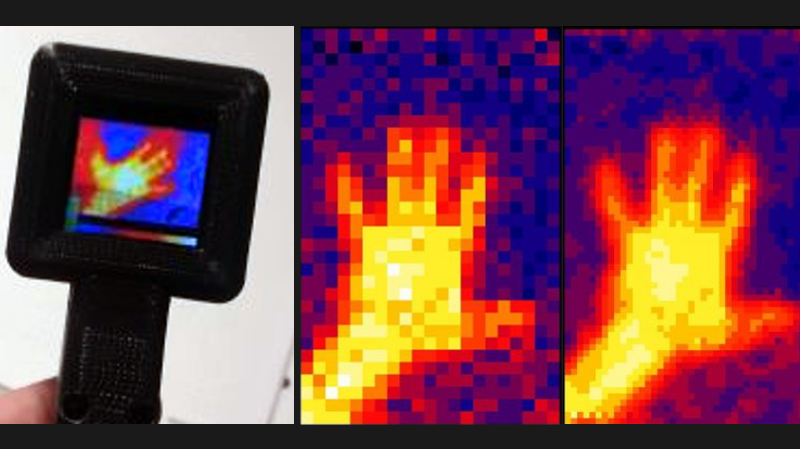

Under the right circumstances, Gaussian blurring can make an image seem more clearly defined. [DZL] demonstrates exactly this with a lightweight, compact Gaussian interpolation routine that makes low-resolution thermal sensor data look much better on a small OLED.

[DZL] used an MLX90640 sensor to create a DIY thermal imager with a small OLED display, but since the sensor is relatively low-resolution at 32×24, displaying the data directly looks awfully blocky. Gaussian interpolation makes the display look really good, but it turns out that full Gaussian interpolation isn’t a trivial calculation to write on your own. Since [DZL] wanted to implement it on a microcontroller, the lightweight implementation was born. The project page walks through the details of Gaussian interpolation and how some effective shortcuts were made, so be sure to give it a look.
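To give a rough feel for the idea, here is a minimal Python sketch of Gaussian-weighted interpolation. It is not [DZL]'s routine and does not include the shortcuts from the project page. The function name `gaussian_upscale` and the `factor`, `sigma`, and `radius` parameters are hypothetical, chosen only for illustration. Each output pixel is a weighted average of the nearby sensor pixels, with weights falling off as a Gaussian of distance.

```python
import math

def gaussian_upscale(grid, factor, sigma=0.6, radius=1):
    """Upscale a 2D list by `factor` using Gaussian-weighted neighbours.

    Illustrative sketch only: sigma and radius are assumed values,
    not taken from the project described in the article.
    """
    h, w = len(grid), len(grid[0])
    out = []
    for oy in range(h * factor):
        # Centre of this output pixel, expressed in source-pixel coordinates.
        sy = (oy + 0.5) / factor - 0.5
        row = []
        for ox in range(w * factor):
            sx = (ox + 0.5) / factor - 0.5
            cx, cy = round(sx), round(sy)  # nearest source pixel
            total = 0.0
            weight_sum = 0.0
            for ny in range(cy - radius, cy + radius + 1):
                for nx in range(cx - radius, cx + radius + 1):
                    if 0 <= nx < w and 0 <= ny < h:
                        d2 = (nx - sx) ** 2 + (ny - sy) ** 2
                        wt = math.exp(-d2 / (2.0 * sigma * sigma))
                        total += wt * grid[ny][nx]
                        weight_sum += wt
            # Normalise so a flat input stays flat, including at the edges.
            row.append(total / weight_sum)
        out.append(row)
    return out
```

With a 24-row by 32-column frame and `factor=4`, this would produce a 96 by 128 output. The `math.exp` call per neighbour is exactly the kind of cost a microcontroller version would want to avoid, for example by precomputing the weights.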

The MLX90640 sensor also makes an appearance in the Open Thermal Camera, one of the entries for the 2019 Hackaday Prize. If you’re interested in thermal imaging, don’t miss this teardown of a thermal imaging camera.

A 3×3 kernel is pretty fast, but if one needs a wider blur, CIC filters work well for this. They need only addition and subtraction, no multiplication. A CIC filter of 3rd order or higher has a response close to Gaussian. Gaussian blur is also separable, so one can filter first in the X direction and then in the Y direction, needing N+N instead of N*N calculations per pixel for an N-wide kernel.
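As a rough illustration of the CIC idea, here is a minimal 1D sketch in Python. The function name `cic_smooth` and its parameters are hypothetical. Each stage is an integrator (running sum) followed by a comb (subtracting the running sum from `width` samples earlier), which together equal a moving sum of `width` samples. Cascading three such stages gives a bell-shaped impulse response.

```python
def cic_smooth(samples, width, stages=3):
    """Cascade `stages` integrator/comb pairs over a list of integers.

    Uses only additions and subtractions. The output is not normalised:
    its gain is width ** stages, and it is delayed relative to the input.
    """
    data = list(samples)
    for _ in range(stages):
        # Integrator: running sum of everything seen so far.
        acc = 0
        integrated = []
        for value in data:
            acc += value
            integrated.append(acc)
        # Comb: difference against the running sum `width` samples back,
        # treating samples before the start as zero.
        data = [
            integrated[i] - (integrated[i - width] if i >= width else 0)
            for i in range(len(integrated))
        ]
    return data


# Impulse response for width 3, three stages.
print(cic_smooth([1, 0, 0, 0, 0, 0, 0, 0, 0], 3))
# prints [1, 3, 6, 7, 6, 3, 1, 0, 0]
```

If `width` is a power of two, the gain `width ** stages` is also a power of two, so normalising can be done with a bit shift. For a 2D blur, the same routine would be run along the rows and then along the columns.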

for a fun afternoon, prove that the 2D Gaussian is the only circularly symmetric separable kernel.

for a lost weekend, research enough to understand that sentence sufficiently to carry said proof out. :grin:

I recently implemented a 2D Gaussian blur as a composition of two 1D Gaussian blurs, making it ~O(n) rather than ~O(n^2). I also used a look-up table for the 0…255 possible RGB values to avoid the need for multiplies when applying the kernel. A microcontroller might be a bit too resource-constrained to allow this method with sizeable kernels, although symmetry can be taken advantage of.
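A minimal sketch of the look-up-table idea, in Python for readability. This is not the commenter's code. The 5-tap binomial kernel, the edge clamping, and the names `KERNEL`, `LUT`, and `blur_row` are all assumptions made for illustration. Because the kernel is symmetric, only one table per distinct weight is needed.

```python
# Assumed integer kernel approximating a Gaussian; weights sum to 16.
KERNEL = [1, 4, 6, 4, 1]
SHIFT = 4  # divide by 16 with a right shift

# One 256-entry table per distinct weight (3 tables here, not 5).
LUT = {w: [w * v for v in range(256)] for w in set(KERNEL)}

def blur_row(row):
    """Blur one row of 0..255 values using table look-ups instead of multiplies."""
    radius = len(KERNEL) // 2
    n = len(row)
    out = []
    for i in range(n):
        total = 0
        for k, w in enumerate(KERNEL):
            # Clamp indices at the edges so border pixels are repeated.
            j = min(max(i + k - radius, 0), n - 1)
            total += LUT[w][row[j]]
        out.append(total >> SHIFT)
    return out


print(blur_row([0, 0, 16, 0, 0]))
# prints [1, 4, 6, 4, 1]
```

The memory cost is 256 entries per distinct weight, which is where the concern about sizeable kernels on a microcontroller comes from.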

If I recall correctly, God of War on PS3 used a coprocessor to do a post-processing Gaussian blur, and the horizontal operation in memory using SIMD was much faster than the vertical one. So they did it in several steps: a 1D horizontal pass, a complete rotate by 90 degrees, another 1D horizontal pass, and a rotate 90 degrees back. A key to this obviously was a fast rotate, but I feel like they were able to do that with hardware assist, like programmable DMA.

If you start with an image h x w with pixels (x, y)

Apply 1D kernel to columns (or rows), and output results to w x h array as pixel (y, x) = (x’, y’)

Apply 1D kernel to columns (or rows) of this intermediate w x h array with pixels (x’, y’), and output results to h x w array pixel (y’, x’) = (x, y)

For extra points, divide columns being done into n stripes, for distribution across n threads or processes.
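The steps above can be sketched roughly as follows, in Python. The function names and the edge clamping are assumptions, and no threading is shown. The same routine is called twice: each call filters along columns and writes its result transposed, so the second call ends up filtering along the other axis and restores the original orientation.

```python
def blur_columns_transposed(img, kernel):
    """Filter each column of an h x w image with a 1D kernel.

    The result is written transposed, as a w x h array, so that
    out[x][y] holds the filtered value for input pixel (row y, column x).
    """
    h, w = len(img), len(img[0])
    radius = len(kernel) // 2
    out = [[0.0] * h for _ in range(w)]
    for x in range(w):
        for y in range(h):
            acc = 0.0
            for k, weight in enumerate(kernel):
                # Clamp at the top and bottom edges.
                yy = min(max(y + k - radius, 0), h - 1)
                acc += weight * img[yy][x]
            out[x][y] = acc
    return out

def blur_2d(img, kernel):
    """Two transposing passes give a full 2D separable blur, back in h x w layout."""
    return blur_columns_transposed(blur_columns_transposed(img, kernel), kernel)


impulse = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
print(blur_2d(impulse, [0.25, 0.5, 0.25]))
# prints [[0.0625, 0.125, 0.0625], [0.125, 0.25, 0.125], [0.0625, 0.125, 0.0625]]
```

The outer loop over `x` is independent per column, which is what makes the stripe-per-thread split possible.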

Yeah that sounds about right. I do believe they did distribute it across several co processors as well.

Honestly wish those modules were cheaper.

So the programmable refresh rate on that sensor is up to 64Hz. I wonder if MEMS mirror arrays are reflective in the far infrared? If so you could conceivably increase the resolution a fair bit through scanning.

Interesting. Was also thinking about the ol’ fad of removing the IR filters from regular cameras. Was thinking it might be possible to subtract visible-light from the [nearby?] pixels to reveal only the IR part… but how? Was thinking two cameras, one lens, but there may be an answer here.

You can replace the IR-blocking filter with a visible-blocking (and IR-passing) filter, either on the sensor or on the lens (or anywhere in between, if that can work with your lens/camera). Low-cost absorbing filters (they look like black glass) are available in standard lens filter sizes. Higher-cost interference filters (they look like mirrors) are available from scientific optics suppliers (Edmund, Thorlabs, Newport). The higher-cost ones offer much better transmission and rejection, but have some other tradeoffs.

That’d be far too easy… that’s what I get for reading HaD before coffee.

But, now that I have, I take it normal CCDs don’t pick up a wide range in the IR spectrum, otherwise IR/thermal cams would be more common, eh?

For your hacking fix, a piece of exposed photographic film will block visible light, but not IR. You can get enough for a hundred cameras for free from any ‘one-hour’ photo shop.

I was looking at creating a single pixel imager in the IR at one point, and I went so far as to contact TI about their DMD devices. The mirrors will reflect fine until the wavelength gets to be on the order of the mirror dimensions. However, the problem is that the windows on the packages are not IR transparent, so the light won’t make it to the mirror anyway.

Hmmm, I have some gold TI TALP1000B MEMS micromirrors on my bench at home, definitely LWIR reflective and with a large enough active surface. I have already used one for a DIRCM fine-pointing actuator.

Oh, it looks like you’re looking at a larger mirror than I was talking about. I was talking about DLP mirror arrays, which have a hermetically sealed window that is NIR transparent, at best. Here’s a link to what I was looking at:

http://www.ti.com/dlp-chip/advanced-light-control/near-infrared/products.html

You can see that it only passes light up to 2.5 um, whereas thermal imagers are typically 8-14 um.

Why do you need gaussian blur instead of simple and fast bilinear interpolation?!

Maybe because bilinear (and Gaussian to an extent) just blur the image.

Here, this technique kind of uses weighted neighbor scaling. So you keep some sharpness while enlarging your image.