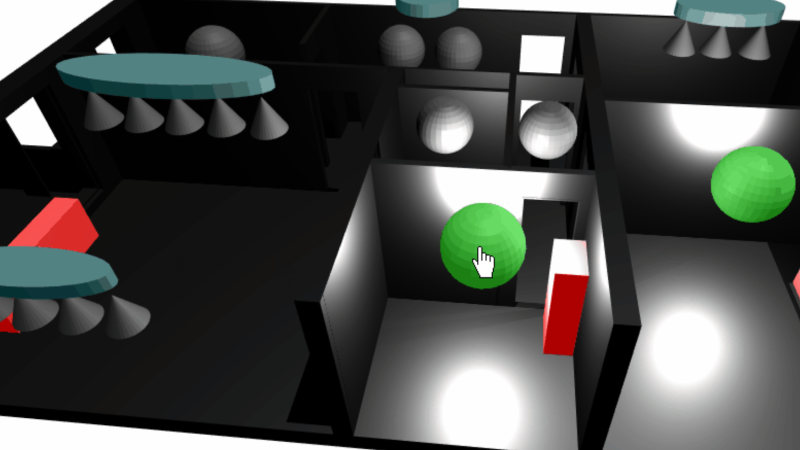

With an ever-growing range of smart-home products available, all with their own hubs, protocols, and APIs, we see a lot of DIY projects (and commercial offerings too) which aim to provide a “single universal interface” to different devices and services. Usually, these projects allow you to control your home using a list of devices, or sometimes a 2D floor plan. [Wassim]’s project aims to take the first steps in providing a 3D interface, by creating an interactive smart-home controller in the browser.

Note: this isn’t just a static render of a 3D scene; it’s an interactive 3D model that can be orbited and inspected, showing information on lights, heaters, and windows. The project is well documented, and the code can be found on GitHub. The tech works by creating 3D models and animations in Blender, exporting them in the glTF format, then visualising them in the browser using three.js. The browser app can then talk to Hue bulbs, power meters, or whatever other devices are required. The technical notes on this project may well be useful for others wanting to use the Blender to three.js/browser workflow, and they include a number of interesting demos, each isolating one of the project’s key concepts.
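As an illustration of the “talk to Hue bulbs” step, here is a minimal sketch of how a click on a bulb mesh could be turned into a request for the Hue bridge’s classic local REST API (`PUT /api/<username>/lights/<id>/state`). This is not taken from the project’s code: the helper name `buildHueLightRequest`, the bridge address and the username are placeholders.

```typescript
// Hypothetical helper: builds (but does not send) a Hue v1 "set light state" request.
interface HueRequest {
  method: "PUT";
  url: string;
  body: string; // JSON payload for the bridge
}

function buildHueLightRequest(
  bridgeHost: string,
  username: string,
  lightId: number,
  on: boolean,
  brightness?: number, // 0..1 from a UI slider, mapped onto Hue's 1..254 "bri" range
): HueRequest {
  const state: { on: boolean; bri?: number } = { on };
  if (brightness !== undefined) {
    const clamped = Math.min(1, Math.max(0, brightness));
    state.bri = Math.max(1, Math.round(clamped * 254));
  }
  return {
    method: "PUT",
    url: `http://${bridgeHost}/api/${username}/lights/${lightId}/state`,
    body: JSON.stringify(state),
  };
}

// Usage (not run here):
// const req = buildHueLightRequest("192.168.1.2", "<username>", 3, true, 0.5);
// await fetch(req.url, { method: req.method, body: req.body });
```

Keeping the request construction separate from the actual `fetch` makes it easy to swap the transport (direct from the browser, or relayed through a home server).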

We notice that all the meshes created in Blender are very low-poly; is it possible to easily add Subdivision Surface modifiers, or is the vertex count deliberately kept low for performance reasons?
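To put rough numbers on that trade-off: Blender’s Subdivision Surface modifier uses Catmull-Clark by default, so after each level the mesh has one vertex for every previous vertex, edge and face, and every n-sided face becomes n quads. The sketch below (hypothetical helpers `subdivide` and `exportedTriangles`) only tracks element counts, assuming the modifier is applied at export time and that glTF stores each n-gon as n - 2 triangles.

```typescript
// Element counts of a polygon mesh.
interface MeshCounts {
  vertices: number;
  edges: number;
  faces: number;
  sideSum: number; // sum of polygon side counts (4 per quad, 3 per triangle, ...)
}

// One Catmull-Clark step: vertices survive, each edge gains an edge point,
// each face gains a face point, and each n-gon is split into n quads.
function subdivideOnce(m: MeshCounts): MeshCounts {
  const faces = m.sideSum;
  return {
    vertices: m.vertices + m.edges + m.faces,
    edges: 2 * m.edges + m.sideSum, // split edges plus face-point-to-edge-point edges
    faces,
    sideSum: 4 * faces, // every new face is a quad
  };
}

function subdivide(m: MeshCounts, levels: number): MeshCounts {
  let current = m;
  for (let i = 0; i < levels; i++) current = subdivideOnce(current);
  return current;
}

// Triangles after export: an n-gon is fanned into n - 2 triangles.
function exportedTriangles(m: MeshCounts): number {
  return m.sideSum - 2 * m.faces;
}
```

For a cube (8 vertices, 12 edges, 6 quads, 12 exported triangles), two levels give 98 vertices and 192 triangles. For an all-quad mesh the triangle count grows 4× per level, so two levels on a whole house model multiply it by about 16.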

This isn’t our first unique home automation interface; we’ve previously written about shAIdes, a pair of AI-enabled glasses that let you control your devices just by looking at them. And if you want to roll your own home automation setup, we have plenty of resources: the Hack My House series contains valuable information on using Raspberry Pis in this context, we’ve got information on picking the right sensors, and even on enlisting old routers for the cause.

> vertex count deliberately kept low for performance reasons?

Highly likely.

I’m a former professional photographer, and I’ve seen Blender-generated images that I couldn’t tell weren’t photographs. And some of those were fairly high resolution.

(Although a lot very obviously weren’t… but most weren’t trying to be.)

What I don’t know is whether it’s because of the performance hit when generating the models in Blender, when exporting to the .glTF format, or when visualising them in the browser using three.js.

I chose to be cautious at the start. The main reason is performance, so I plan to add performance stats and run tests on multiple smartphones to check the fps for different models; then I’ll add details. I’m also learning Blender, so I started with the simplest shapes. I also wonder whether clear geometric shapes might be more user-friendly than realistic object models. Size, for example, would most likely need to be magnified; otherwise a bulb would be too small to click on.
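For the planned performance stats, a small frame-rate meter that averages over a sliding window is enough to compare models across phones. This is a sketch rather than the project’s code; `FpsMeter` and its default one-second window are assumptions. (three.js also ships the stats.js panel among its examples, if a ready-made overlay is preferred.)

```typescript
// Sliding-window frame-rate meter: call tick() once per rendered frame.
class FpsMeter {
  private readonly stamps: number[] = [];
  private readonly windowMs: number;

  constructor(windowMs: number = 1000) {
    this.windowMs = windowMs;
  }

  // Records a frame at `nowMs` and returns the average FPS over the last `windowMs`.
  tick(nowMs: number): number {
    this.stamps.push(nowMs);
    // Forget frames that have fallen out of the window.
    while (this.stamps.length > 0 && nowMs - this.stamps[0] > this.windowMs) {
      this.stamps.shift();
    }
    const spanMs = nowMs - this.stamps[0];
    if (this.stamps.length < 2 || spanMs <= 0) return 0;
    // n timestamps delimit n - 1 frame intervals.
    return ((this.stamps.length - 1) * 1000) / spanMs;
  }
}

// Browser usage (not run here): measure inside the three.js render loop.
// const meter = new FpsMeter();
// renderer.setAnimationLoop(() => {
//   const fps = meter.tick(performance.now());
//   renderer.render(scene, camera);
// });
```

Averaging over a window rather than reporting every frame smooths out the jitter that makes single-frame readings on phones hard to compare.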

glTF can embed mesh data inside the JSON file as text, pack everything into a single binary file, or reference external files, and it supports some compression, so the format is not the limiting factor here. The limiting factor would be the WebGL implementation on the least capable phones that need to be supported. Luckily, since everyone can use a different model, each user can adapt the level of detail to their phone or PC app.
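The storage options mentioned above can be read straight from a glTF file’s `buffers` array: a `data:` URI means the bytes are embedded as base64 text, a buffer without a `uri` refers to the binary chunk of a .glb file, and anything else is an external file such as a `.bin`. Compression shows up in `extensionsUsed`, for example `KHR_draco_mesh_compression` or `EXT_meshopt_compression`. The field names follow the glTF 2.0 specification; the function names are hypothetical.

```typescript
// Minimal subset of the glTF 2.0 JSON needed here.
interface GltfJson {
  buffers?: { uri?: string; byteLength: number }[];
  extensionsUsed?: string[];
}

type BufferStorage = "embedded-base64" | "glb-binary-chunk" | "external-file";

// Classifies where each buffer's bytes live.
function bufferStorage(gltf: GltfJson): BufferStorage[] {
  return (gltf.buffers ?? []).map((b): BufferStorage => {
    if (b.uri === undefined) return "glb-binary-chunk"; // only valid inside a .glb
    if (b.uri.startsWith("data:")) return "embedded-base64";
    return "external-file";
  });
}

// Mesh-compression extensions defined for glTF 2.0.
const COMPRESSION_EXTENSIONS = ["KHR_draco_mesh_compression", "EXT_meshopt_compression"];

function compressionExtensions(gltf: GltfJson): string[] {
  return (gltf.extensionsUsed ?? []).filter((e) => COMPRESSION_EXTENSIONS.includes(e));
}
```

Note that a compressed file also needs the matching decoder on the client (three.js provides `DRACOLoader` for Draco), which costs some extra download and decode time on slow phones.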

You can get very good performance out of that setup with waaaaay more detailed meshes. Could also bake some global illumination from Blender into textures, make two versions for each room (one with lights on, one off), it would look way nicer than the potato lights from the example. It’s a cool concept anyway, makes it easier for the less technically inclined to use a home automation system.
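With two bakes per room (lights off and lights fully on), intermediate dimming levels can be approximated by interpolating between them. Because light transport is linear in emitter intensity, the blend is in principle exact for a single dimmable light as long as both bakes are stored in linear color space (before tone mapping). With several lights, you would bake one “on” version per light and add up their contributions. A per-texel sketch with hypothetical names; in a real renderer this would be a couple of lines in a shader.

```typescript
// Linear-space RGB for one texel.
type Rgb = [number, number, number];

// Single light dimmed to `level` (0..1): off + level * (on - off).
function blendBakes(off: Rgb, on: Rgb, level: number): Rgb {
  const t = Math.min(1, Math.max(0, level));
  return [
    off[0] + t * (on[0] - off[0]),
    off[1] + t * (on[1] - off[1]),
    off[2] + t * (on[2] - off[2]),
  ];
}

// Several lights: start from the all-off bake and add each light's contribution,
// where perLightOn[i] was baked with only light i switched on.
function combineBakes(allOff: Rgb, perLightOn: Rgb[], levels: number[]): Rgb {
  const out: Rgb = [...allOff];
  perLightOn.forEach((on, i) => {
    const t = Math.min(1, Math.max(0, levels[i] ?? 0));
    for (let c = 0; c < 3; c++) out[c] += t * (on[c] - allOff[c]);
  });
  return out;
}
```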

Agreed. As I replied to “Canoe”, I’ll be adding more and more details; this project is just starting and I have a long list of planned features.

Baking textures in Blender is an excellent idea. It might not scale for rooms with multiple spotlights next to each other, but I had to configure those not to cast shadows anyway due to WebGL limitations. I’d planned to bake shadows mainly for sunlight, and I found out that it’s even possible to do that from the command line through Python. With the help of a server, the user can load a texture configuration that shows the current (or potential) sunlight impact in each room; combined with a light sensor, additional parameters can be taken into account.
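The command-line route could be driven from such a server roughly as sketched below. Blender’s `-b` flag runs it in the background without a UI, `--python` runs a script after the preceding .blend file is loaded, and everything after `--` is ignored by Blender and left in the script’s `sys.argv`. The script name `bake_sun.py` and its `--sun-elevation`/`--out` arguments are hypothetical; the bake logic itself would live in that Python script.

```typescript
// Builds the argument list for a headless Blender bake run (hypothetical script and args).
function blenderBakeArgs(
  blendFile: string,
  script: string,
  sunElevationDeg: number,
  outputDir: string,
): string[] {
  return [
    "-b", blendFile, // background mode; load the scene first so the script sees it
    "--python", script, // then run the bake script
    "--", // Blender stops parsing here; the rest is for the script's sys.argv
    "--sun-elevation", sunElevationDeg.toFixed(1),
    "--out", outputDir,
  ];
}

// Usage from a Node server (not run here):
// import { spawn } from "node:child_process";
// spawn("blender", blenderBakeArgs("house.blend", "bake_sun.py", 35, "bakes/"), { stdio: "inherit" });
```

Argument order matters: putting `--python` before the .blend file would run the script before the scene is loaded.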

I’ve been working on something similar but built with Unity

https://youtu.be/wHzdfeWEFRA

I have little time to carry on with it so will release the source soon.

I really like your example with Unity. We definitely have the same goal. I’m currently studying the Unity option, but why not. For me, the ideal case would be to provide a working concept that is not locked to any particular technology. The 3D rendering part could be performed with three.js, Babylon.js, or Unity, if they could all use the same interactive .glTF material. After all, the network bindings (Hue, IKEA, MQTT) are independent from the rendering engine.
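One way to keep the network side independent of the rendering engine is a small registry that maps glTF node names to device bindings; three.js, Babylon.js or Unity would then only need to report which node was picked. This assumes each interactive mesh keeps a stable name from Blender (the glTF exporter typically carries object names through to the nodes). `DeviceBinding`, `BindingRegistry` and the node names are hypothetical.

```typescript
// The renderer knows a node name; the network side knows how to drive a device.
interface DeviceBinding {
  protocol: "hue" | "ikea" | "mqtt";
  setOn(on: boolean): void;
}

class BindingRegistry {
  private readonly byNode = new Map<string, DeviceBinding>();

  bind(nodeName: string, binding: DeviceBinding): void {
    this.byNode.set(nodeName, binding);
  }

  // Called by whichever engine did the picking; returns false for non-interactive nodes.
  setOn(nodeName: string, on: boolean): boolean {
    const binding = this.byNode.get(nodeName);
    if (binding === undefined) return false;
    binding.setOn(on);
    return true;
  }
}

// Stand-in binding that only records calls; a real one would publish MQTT or call the Hue API.
function recordingBinding(protocol: DeviceBinding["protocol"], log: string[]): DeviceBinding {
  return {
    protocol,
    setOn: (on: boolean) => {
      log.push(`${protocol}:${on ? "on" : "off"}`);
    },
  };
}
```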

As you have experience with a similar project, your advice and recommendations are very welcome.

Looks nice and intuitive. However, in my view a smart home shouldn’t need an interface. How does a home become “smart” by just moving its light switches to a remote? It isn’t “smart” just because Google can control my lights. And it isn’t smart to control lights from a phone. At most, those are just fun concepts. My idea of a practical smart home is one where the lights turn themselves on when they are needed.

Decorative lights – ON when it’s dark. OFF by master switch or at a certain time at night when everyone at home should be in bed.

Outdoor lights – ON when it’s dark, always. No manual or timer OFF switch.

Room lighting – ON when someone is in that room, and for about 15-30mins after the last person left.

Master OFF switch located in my bedroom. Shuts down all lights in the house except for a few very weak always-ON lamps lighting a path to the bathroom.

That’s the way I’ve built my system anyway…
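The rules above amount to a pure function of a few inputs. Here is a sketch of that decision logic, assuming a darkness sensor, per-room occupancy, a night cutoff plus a morning time (the latter is an assumption, needed so the cutoff window can wrap past midnight), and the bedroom master switch. The 20-minute hold-over is picked from the 15-30 minute range mentioned; all names are hypothetical.

```typescript
interface RoomState {
  occupied: boolean;
  minutesSinceLeft: number | null; // null if nobody has been there recently
}

interface HouseInputs {
  isDark: boolean; // from a light sensor or sunset/sunrise times
  clockMin: number; // minutes since midnight
  nightCutoffMin: number; // decorative lights go off at this time...
  morningMin: number; // ...and are allowed again after this one (assumption)
  masterOff: boolean; // bedroom master switch
  rooms: Record<string, RoomState>;
}

interface LightPlan {
  decorative: boolean;
  outdoor: boolean;
  pathLights: boolean;
  rooms: Record<string, boolean>;
}

const ROOM_HOLD_MIN = 20; // assumption: chosen from the 15-30 min range

// True if `t` is in [start, end), where the window may wrap past midnight.
function inWindow(t: number, start: number, end: number): boolean {
  return start <= end ? t >= start && t < end : t >= start || t < end;
}

function planLights(i: HouseInputs): LightPlan {
  const rooms: Record<string, boolean> = {};
  for (const [name, r] of Object.entries(i.rooms)) {
    const recentlyUsed = r.minutesSinceLeft !== null && r.minutesSinceLeft <= ROOM_HOLD_MIN;
    rooms[name] = !i.masterOff && (r.occupied || recentlyUsed);
  }
  return {
    decorative: i.isDark && !i.masterOff && !inWindow(i.clockMin, i.nightCutoffMin, i.morningMin),
    outdoor: i.isDark, // not affected by the master switch or any timer
    pathLights: true, // weak always-on lamps on the way to the bathroom
    rooms,
  };
}
```

Keeping the logic free of device I/O makes it easy to test the rules before wiring them to real switches.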

Hi @arcturus,

I like your approach and I think there is room for both. I’ve even been working on headless automation from the beginning; see for example my “Home Smart Mesh” project (https://hackaday.io/project/20388-home-smart-mesh), where I used all sorts of small, low-power wireless sensors and switches with rule logic running on a Raspberry Pi.

Your partitioning of light classes is a really nice and pragmatic automation concept, but a completely headless smart home without any dashboard can hardly fulfill all use cases.

Depending on the mood, we might want a new color combination or a very particular dimming level; does a normal user have to sit at a PC and patch a config file for that? Automation is good, but it also has parameters that the user may wish to configure through a friendly interface. Sometimes I want the heating at 19 °C, other times at 20 °C or 21 °C. For the time being, automation can hardly read my facial expressions and deduce that. It is really challenging to create perfect automation rules, so there is often a need to check the current status and make adjustments.
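One common way to let a dashboard adjust automation without replacing it is a temporary override: the schedule supplies the default setpoint, and a value set from the UI wins until it expires. A sketch with hypothetical names (`ScheduleEntry`, `ManualOverride`, `effectiveSetpoint`); the expiry policy is an assumption.

```typescript
interface ScheduleEntry {
  fromMin: number; // minutes since midnight when this setpoint starts
  celsius: number;
}

interface ManualOverride {
  celsius: number;
  untilEpochMs: number; // the override is ignored from this moment on
}

// Entries must be sorted by fromMin. The last entry starting at or before `clockMin`
// applies; before the first start time, the previous day's final entry still applies.
function scheduledSetpoint(schedule: ScheduleEntry[], clockMin: number): number {
  if (schedule.length === 0) throw new Error("empty schedule");
  let current = schedule[schedule.length - 1];
  for (const entry of schedule) {
    if (entry.fromMin <= clockMin) current = entry;
  }
  return current.celsius;
}

function effectiveSetpoint(
  schedule: ScheduleEntry[],
  clockMin: number,
  nowEpochMs: number,
  override: ManualOverride | null,
): number {
  if (override !== null && nowEpochMs < override.untilEpochMs) return override.celsius;
  return scheduledSetpoint(schedule, clockMin);
}
```

With a schedule of 20 °C from 06:00 and 19 °C from 22:00, choosing 21 °C on the dashboard holds until the override expires, after which the automation quietly takes over again.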

For that, a “dashboard” is required. A dashboard should not replace any light switch or presence sensor. In my opinion, a dashboard has two scopes: the history and the present. For the history, we can’t beat Grafana (see its use in the linked project and many others).

For the status of the present time, a 3D app that shows everything is, in my opinion, an intuitive approach and a natural extension of the currently available device-list or 2D-map applications.

Smart Home is when Jarvis is running the setup.

Wink is dead. That idiot will.i.am killed it.

Too funny. Here I was thinking the light switch existed so I could have light in a room, not the switch itself being THE interactive virtual reality experience.

Does anybody really want a TV remote they can talk to? “Hey Siri, change the TV channel up one station” vs CLICK

This is a really deep thought; a similar one kept me busy for a long time at the beginning of this project.

My conclusion is that I’m trying not to provide any “3D button” in this app, since the concept of a button is the remote control of something else, which already creates a complex association in the user’s mind. Instead, any 3D object can become interactive with a click, a hold, or a 3D slider popup (see the “multiple parameters” entry in the Hackaday project’s update section).
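Letting any object react to a click or a hold, while the same pointer also orbits the camera, largely comes down to classifying each press by duration and movement. A sketch with assumed thresholds (`HOLD_MS`, `DRAG_PX`, both hypothetical values); in three.js, the object for a “click” or “hold” would then come from a `Raycaster` at the press position.

```typescript
type PressKind = "click" | "hold" | "orbit";

const HOLD_MS = 500; // assumption: presses at least this long open the slider popup
const DRAG_PX = 6; // assumption: more movement than this means the user is orbiting

interface Press {
  downMs: number;
  upMs: number;
  downX: number;
  downY: number;
  upX: number;
  upY: number;
}

function classifyPress(p: Press): PressKind {
  const moved = Math.hypot(p.upX - p.downX, p.upY - p.downY);
  if (moved > DRAG_PX) return "orbit"; // leave it to the orbit controls
  return p.upMs - p.downMs >= HOLD_MS ? "hold" : "click";
}
```

In practice you would start a timer on `pointerdown` so the popup appears while the finger is still down; classifying on release just keeps the sketch short.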

Along the same lines, going from 2D to 3D, we are immediately tempted to extrapolate to Virtual Reality and then Augmented Reality. There, acting on a real light would only require pointing at it with a finger or something similar; light switches would be a thing of the past :)

Some people like to talk, others don’t. I think that for some operations a spoken sentence could be quicker than expressing it with clicks and other geometric concepts, but certainly not the “channel up” function :)

The real power of smart homes comes when you can program a series of actions with a single command or action. I had a whole routine before bed of turning off various lights, checking the garage door, turning on some bedroom lights, etc. Now with a single verbal command, ALL the lights are either turned off or adjusted to preferred levels, the garage door is confirmed closed, the thermostat is set to a cooler temp, I am given a verbal reminder of what I need to do before bed, and soft relaxing music starts to play in the speaker next to my bed.

Now if only these current routine systems would let us start using more conditional and scheduled routines, that would be a step up.
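Conditional routines like the ones wished for here can be modelled as a list of steps, each with an optional guard evaluated against the current home state. This is a sketch with hypothetical state fields and step names, not any particular assistant’s routine format; the `run` bodies are placeholders for real device calls.

```typescript
interface HomeState {
  garageOpen: boolean;
  outsideTempC: number;
}

interface Step {
  description: string;
  when?: (s: HomeState) => boolean; // optional condition; the step always runs if omitted
  run: (s: HomeState) => void;
}

// Runs each step whose condition holds; returns the descriptions of the steps executed.
function runRoutine(steps: Step[], state: HomeState): string[] {
  const executed: string[] = [];
  for (const step of steps) {
    if (step.when && !step.when(state)) continue;
    step.run(state);
    executed.push(step.description);
  }
  return executed;
}

// Hypothetical bedtime routine.
const bedtime: Step[] = [
  { description: "all lights off", run: () => {} },
  {
    description: "close garage door",
    when: (s) => s.garageOpen,
    run: (s) => {
      s.garageOpen = false; // placeholder for the real door actuator call
    },
  },
  {
    description: "thermostat to 18 °C",
    when: (s) => s.outsideTempC < 15,
    run: () => {},
  },
];
```

Scheduling would then just mean calling `runRoutine` from a timer instead of a voice command.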

Patching in automation and voice recognition to an obsolete “channel” concept is crazy… as crazy as navigating the internet by IP address.

“Hey Google, play the next episode of Friends in the living room” is far easier than navigating through a video-on-demand system to find the list of Friends episodes, looking for the last one played, then finding the next in the series, and streaming that to the living room – regardless of how intuitive the interface is.

The ideal voice control system will be very natural-speech based, always on, interactive, and predictive. If it hears me say to my roommate “Hey, you wanna watch Friends?”, it’s already preparing the next episode on the last TV I watched it on, and on the closest TV. Then when my roommate says “Sure”, the system should be tracking my location in the house so that when I walk into a room with a TV, it prompts me “Ready to watch the next episode of Friends?”. If I say yes, it plays. But if I say “no, we’re going to watch a rerun in the bedroom”, then when I walk into the bedroom, a list of episodes should be waiting for me on the TV there.

But I digress. This project is great for remote control and observation of automation installations. When I’m not home, it would be fantastic to see the view of the house like this and be able to interact with the automation. Turn on and off lights easily, control the heat by bringing up a 3D interface to adjust the thermostat, and tap on a camera for a real-time view. I like it!

Thanks @Robert,

I do share the belief that AI is improving to the point where it will be able to act as you predict here. Will it at some point become smart enough to read our facial expressions in addition to speech, or even just the blink of an eye for a yes? Why not?

For the 3D web app, I don’t claim to provide the best 3D interface; I’d simply like to advertise the concept and encourage people to adopt it in their own software. I’m surprised that video games have reached such an advanced level of realism, yet when it comes to useful 3D apps, there aren’t that many.

Thanks again for your comment and encouragement.