If you look hard enough, most of the projects we feature on these pages have some practical value. They may seem frivolous, but there’s usually something that compelled the hacker to commit the time and effort. That doesn’t mean we don’t get our share of just-for-funsies projects, of course, which certainly describes this online 3D ASCII art generator.

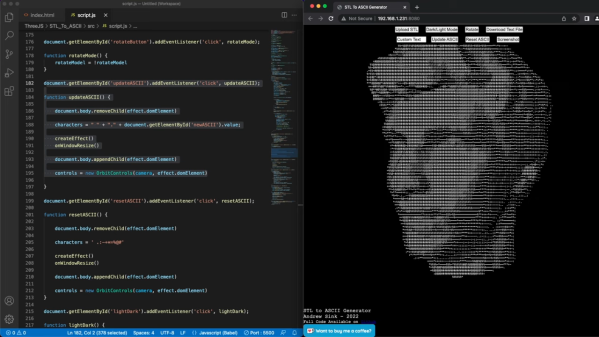

But wait, maybe that’s not quite right. After all, [Andrew Sink] put a lot of time into the code for this, and for its predecessor, his automatic 3D low-poly generator. That project led to the current work, which, like before, takes an STL model as input, this time turning it into an ASCII art render. The character set used for shading the model is customizable, though the shading is surprisingly good with the default set. You can also swap to a black-on-white theme if you like, navigate around the model with the mouse, and even export the ASCII art as either a PNG or as a raw text file, no doubt suitable to send to your tractor-feed printer.
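For the curious, the general idea behind shading with a character set can be sketched in a few lines. This is only an illustration of the common brightness-to-character technique, not [Andrew]’s code: the ramp string, the function names, and the `invert` flag standing in for a black-on-white theme are all assumptions made up for this example.

```python
# Hypothetical character ramp, ordered from "emptiest" to "densest" glyph.
# This is NOT the generator's default set, just a common choice.
DEFAULT_RAMP = " .:-=+*#%@"


def brightness_to_char(value, ramp=DEFAULT_RAMP):
    """Map a brightness in [0.0, 1.0] to one character from the ramp."""
    value = min(max(value, 0.0), 1.0)  # clamp out-of-range input
    index = min(int(value * len(ramp)), len(ramp) - 1)
    return ramp[index]


def render_ascii(pixels, ramp=DEFAULT_RAMP, invert=False):
    """Turn rows of brightness values into lines of ASCII art.

    invert=True flips the brightness, which is one way to get a
    black-on-white look: dark areas get the dense glyphs instead.
    """
    lines = []
    for row in pixels:
        if invert:
            row = [1.0 - v for v in row]
        lines.append("".join(brightness_to_char(v, ramp) for v in row))
    return "\n".join(lines)


if __name__ == "__main__":
    # A tiny made-up "render": a horizontal brightness gradient.
    gradient = [[x / 19 for x in range(20)] for _ in range(4)]
    print(render_ascii(gradient))
    print(render_ascii(gradient, ramp=" ░▒▓█"))  # swap in a custom set
```

Swapping the ramp string is all it takes to change the look, which is presumably why a customizable character set is such a natural feature for a tool like this.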

[Andrew]’s code, which is all up on GitHub, makes liberal use of the three.js library, so maybe stretching his 3D JavaScript skills is really the hidden practical aspect of this one. Not that it needs one — we think it’s cool just for the gee-whiz factor.