In the early days of the home computer era, many machines would natively boot into a BASIC interpreter. This was a great way to teach programming to the masses. However, on most platforms the graphics routines were incredibly slow, and this greatly limited what could be achieved. In 2020, the Color Maximite 2 makes such limitations a thing of the past. (Video, embedded below.)

The Color Maximite 2 is a computer based around the STM32H743IIT6 microcontroller, which packs a 32-bit Cortex-M7 RISC core and the Chrom-ART graphics accelerator. Running at 480 MHz, it’s got plenty of grunt, allowing it to deliver vibrant graphics reminiscent of the very best of the 16-bit console era. The Maximite 2 pairs this chip with a BASIC interpreter complete with efficient graphics routines. This makes it possible to develop games with fast, smooth movement, plenty of huge sprites, and detailed backgrounds.

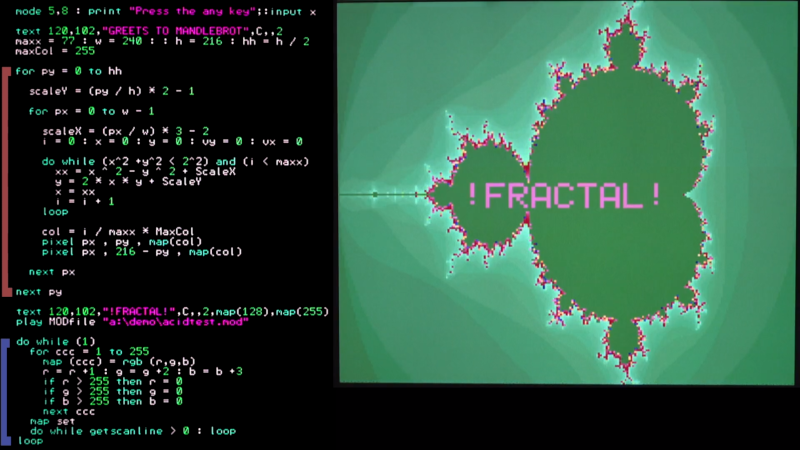

[cTrix] does a great job of demonstrating the machine, which was designed by [Geoff Graham] and [Peter Mather]. The video puts the computer through its paces with a series of demos that show off the impressive visual and audio capabilities of the hardware. The Maximite 2 serves as an excellent spiritual successor to BlitzBASIC from back in the Amiga days. Particularly enjoyable is seeing a BASIC interpreter with syntax highlighting, which makes reading the code far easier on the eyes!

We’d love to see this become an off-the-shelf kit, as it’s clear the platform has a lot to offer the retro hobbyist. It’s certainly come a long way from the original Maximite of nearly a decade ago. Video after the break.

Pretty neat, but for that much I could have a couple of Raspberry Pis.

It’s about the journey not the destination.

It has to be, because the destination is a computer for which you have to write every single piece of software yourself… in BASIC.

Just like the phone-booth-sized HP2000E I started out with in the 1970s. Back then we had to write our own software in the snow, which made data entry problematic.

We dreamed of snow…

WRONG. You can load any program others write with that SD card slot. If you don’t bother to read, why do you bother to comment? 🙄

TL;DR: sometimes we just want things to work.

It’s not even about the journey. It’s about removing the colossal operating system. It’s about the journey being a walk in the park, rather than an ordeal over treacherous terrain.

What people LOVED about 8-bit home computers was that they were simple but flexible. They were simple enough that you could learn to write a simple program in an evening, and flexible enough for you to step into a world where what you could do was limited only (mainly) by your imagination. Most home computers started up in a couple of seconds to a BASIC prompt, and by inserting a floppy disk, a cartridge, or a cassette tape, they could be made to run programs you either bought or wrote yourself. Some had rudimentary operating systems, like Apple DOS or CP/M, which added a thin layer of abstraction where you first had to load BASIC from a disk before you could program, but this was still within easy comprehension.

Then things got complicated, and in the name of ever-greater capabilities, we got more and more complex operating systems. Your Raspberry Pi is an example, as are computers running Windows, macOS, and various forms of Linux or Unix. They can spend minutes spouting arcane gibberish (or sitting in silence and darkness), doing things you probably don’t understand. Most of us have gotten used to the wait, and even the gibberish, but there are two possible outcomes: 1) the computer presents a familiar and welcoming environment from which you can continue with what you actually want to do, or 2) it never gets there, freezing either with a screen full of incantations or with a screen full of darkness, and whatever you wanted to do is replaced with either summoning a wizard or rolling up your sleeves to fight the dragon yourself. Hopefully you have another computer, because you’re going to need help either way.

There was a reason for all of this, and what we get in exchange are computers that are vastly more flexible than the 1980s home computers. All of that gibberish (whether seen or hidden in darkness) is the computer, which is really a complex array of interconnected subsystems, learning about itself and configuring itself for use. Much of this complexity also exists to make it possible for multiple programs to run simultaneously and independently of each other. The price we pay for that is a lack of access to the simplest parts of the computer.

So, surprise: many people long for the days when computers Just Worked but were still more interesting than appliances. Maximite and similar machines represent a return to machines that can be fully understood by individual people without that having to be the sole focus of their lives.

I think it’s great that, with the ARM architecture, a whole range of systems is possible, from simple to fully multitasking.

It’s more than just wanting a simple system to work. If that were all you wanted, you could write a Python program on your PC.

Nope, Python isn’t preinstalled in your UEFI, or even in your OS unless it’s Linux. Try again.

After you install Python, of course.

This basic computer doesn’t come preinstalled in my house either.

Or you could buy a used thin client with x86 architecture and double-digit gigabytes of RAM for even less. But both would probably just sit on the bench. When you wanna have fun with programming, it’s the limitations that make it interesting. I love that it boots straight into a BASIC environment. I miss fiddling around with computers like that in the old days.

Of course, you could probably put a similar image on a Pi, but ugh… I’m just kind of tired of Pis everywhere. The DIY hardware kit is also appealing.

I like the BASIC part, but even a $20 Orange Pi has HDMI output and USB, which would make a better foundation for an all-BASIC system.

Can you boot the Pi right to a BASIC prompt? The Max is for people who don’t want an OS getting in the way of programming. I own a few Raspberry Pis (a 2, a 3, and a 4) and they’re great, but the Max is for a whole different audience.

You can with RISC OS Pico, which gives you an experience like a BBC Micro on steroids.

Once you are done looking for the middle button on your mouse and have mastered the Acorn-specific quirks of RISC OS, you find yourself browsing the internet for the user manual on an ancient HTML-only browser. And even the disk format (the SD card format) is completely incompatible with what normal computers use, so sharing your work is impossible.

“That filet mignon looks delicious but for that much I could have a couple big macs.”

Why link to the Youtube video, but not to the original site?

Has humanity become so dumb that people need a video about everything because they can’t read?

http://geoffg.net/maximite.html

For me text is so much faster to understand than the slow knowledge transfer (if any) from a video.

I can’t STAND wading through video, trying to get the salient information, while someone monopolizes my attention and natters on and on before getting to the desired facts. Or worse, never getting to them at all!

I’m with you, I want to be able to skim at My speed, look for key words, read the parts I want, ignore the rest. Screw video.

“I’m with you, I want to be able to skim at My speed, look for key words, read the parts I want, ignore the rest. Screw video.”

Using video* to explain quantum mechanics vs text.

*Animation is part of this as well.

I think this answers your question :)

https://www.youtube.com/channel/UCJ2BSIyIU84ZtJ9MiLh0jZA

I’m also often frustrated by the fact that text tutorials are so hard to find now. Everything requires a YouTube video which is of course ten minutes and one second long for monetization purposes. And you have to scrub through that to find like four seconds of relevant information buried among idiocy and soyface. The internet has really gone downhill.

You said it.

Videos suck:

– You can’t search inside them.

– You can’t scan through them quickly to see if what you need is in there.

– Drawings are shrunk to fit the video resolution, so you get either diagrams without much in them or larger drawings squeezed in until they are smooshed, fuzzy, and illegible.

– You can’t copy and paste the code examples to try them out.

– It is boring as F to watch a video of some lunkhead waffling on instead of delivering information.

– You can’t click a link in a video to get to a datasheet or download for a program or library or schematic diagram.

———————–

For many of the same reasons, I hated school. It takes an hour of listening to a lecture to get the same information you can read in 5 minutes. You need teachers to help make the connections between the what and the why; books deliver the how. Unfortunately, at most places I went to school, the teachers just stood in front of the class and rambled on like the YouTube videos without providing anything more than what I got out of the books.

Yeah. Agreed. I definitely see the utility of videos for some subjects—like a detailed teardown of a mechanical object, like a motorcycle engine. If filmed and edited well, it can really provide a lot of information quickly that is difficult to precisely communicate in language or even static images. I’ve done some mechanical work and although mechanics are almost universally terrible photographers, they actually do keep their dialogue and editing surprisingly tight. Much better than developers do. But anyway, that’s another story.

I have to watch a lot of Maya and C4D tutorials, for example. The information I need is usually just the location of a specific menu item for this or that feature. Back in the day, I could look that up in a general text tutorial, hit Ctrl-F, and find the data instantly. Now I look it up, find two hundred YouTube videos (I’m sure Google has manipulated the results to favor their other platform, naturally) and each one starts “HEyyyEYYYyyyYY yOuTuUuUbE! Like and subscribe! Well first you have to boot your computer… oh, hmm, I have some Windows updates… oh well, let’s wait for that… okay now let’s log in… start Maya… Oh, whoops, I didn’t install Maya yet… okay I’ll show you the full process of installing the software itself… Now let’s open it and create a new file… even though this is a tutorial for one simple feature of the software, I’m going to build an example scene from scratch and make it needlessly detailed before getting around to the meat of the tutorial… okay, now that that’s finally all done, go to modify>convert>NURBS to poly>uncheck match render tessellation! Remember to like and subscribe!”

Dang it! Cut the beginning of the video out. I know you have video editing software. I’ve been bitterly staring at the icon in your task bar for ten minutes. For the love of Christ!

And the relevant bit is two seconds long and literally the only thing anybody needs. Hidden somewhere in the middle. What have we used all this miraculous bandwidth for? Garbage! I forgot the name of the law, but there’s some theory of the internet that states that you can skip the first third of any given youtube video without missing any relevant information. It holds up shockingly well.

“Yeah. Agreed. I definitely see the utility of videos for some subjects—like a detailed teardown of a mechanical object, like a motorcycle engine. If filmed and edited well, it can really provide a lot of information quickly that is difficult to precisely communicate in language or even static images.”

https://youtu.be/5Mh3o886qpg

That link is already in the article.

It’d be nice if people weren’t so fast to throw insults, especially when they’re guilty of the same thing.

To be fair: I snuck it in after the fact. Lewin really should have had that in there.

But whatever. Folks just wanted to air their anti-video grievances, so you gotta let them.

The BASIC-booting computers were a bit before my time, and I can’t help feeling that they were a cool thing I missed out on. This machine is really quite tempting, so I’ll just go ahead and remind myself that I currently have too many projects. Too bad.

They definitely were really fun. Maybe some day, but it is good to have the instinct to finish what’s already on the bench sometimes :)

Not entirely. When they existed, the reason BASIC was on them was that the platforms changed so damn much: any time you bought a computer, chances were there was zero software for it, so get crackin’.

Even then, the onboard BASIC was so limited and slow that about the only thing you could do with it was make stuff so simple you might have been better off doing it without a computer in the first place (oh look, a Rolodex or a recipe program, how pointless).

Like most collective nostalgia, it was a thing some people did but everyone remembers. The reality was that if you were into programming, that built-in BASIC wore out its welcome really fast and was replaced by other software, and if you were not, you probably never saw it, because you booted off cartridge, tape, or disk.

#Reality

I think most of us appreciate the progress made in computing, and wouldn’t want to actually go back and deal with all the limitations of old hardware to do anything particularly practical. That’s not to say you couldn’t do something practical with it, just like some great and useful things can be done with a 25-cent micro, but the Maximite form factor doesn’t exactly lend itself to it. But as a hobbyist machine, I think it’s pretty damn cool. It doesn’t supplant the variety of SBCs out there, but does carve out a niche which I think some people are glad exists.

I like my Maximite and I instantly ordered the Maximite 2 from Circuit Gizmos when I found out about it.

I would really, really like to see something like this done with Python and Pygame. A single board computer running an ARM processor that boots right to Python with VGA video out.

Direct link to site, please!

OK, I can Google it, but please, see all the love for video above? A site link would be way better.

But…will it run Doom?

Doom port on the STM32F429 Discovery board here:

https://www.youtube.com/watch?v=bRNcfsDIc2A

And now on the STM32F7 Discovery board here:

https://www.youtube.com/watch?v=-JVuIZn112A

The Maximite 2 has an STM32H7-series MCU, so it should be able to run Doom even better ;)

Comparing material prices, the STM32H747 Discovery board would be a cheaper choice for this project.

No. Just No.

I learned on the original Microsoft 4k Basic way back in the 1970s. I’ve been unlearning all the bad habits it taught me since then.

We have better alternatives now.

How about booting to Python?

That’s because Microsoft Basic was crap. You’re right that we have better alternatives now, and some of them are *also* Basic.

Disagree. There is a good reason Microsoft Basic was in so many computers in those days: there was nothing better for the memory footprint and target audience.

And the later Microsoft Basic versions were still used 30 years later, even in industrial environments.

I have respect for what they pulled off in the 70’s and 80’s.

That is not why it was used. It was used because hardware designers were cranking out their own computer designs left right and centre, and unable to use them without an OS. Licensing Microsoft Basic was a quick way to solve that problem, but it was always the bare-minimum choice.

Want to use the unique hardware features of your computer? Well MS Basic didn’t know about them, so good luck doing it with cryptic POKEs and machine code.

The computers that *didn’t* choose MS Basic and wrote their own (or got an existing Basic vendor to do a solid port to their architecture, supporting its features) often ended up with really good results. BBC Basic is lauded for its proper procedure syntax and integrated assembler, amongst a range of unique advantages. Locomotive Basic on my beloved Amstrad CPC had full graphics and sound commands, *and* interrupt support. There were other platforms that did good things too, but I’m less familiar with them.

Don’t judge the language by the *generic* option, just because you never experienced better.

Having been born in 1969 I am a child of the 70’s and 80’s. I started coding around 1979/80 in BASIC. Every machine at that time booted to some form of BASIC. I spent the better part of the 80’s coding games and my own electronic BBS in BASIC.

I now teach coding to kids, and I can say that BASIC as it existed in the 70’s, 80’s, and even the 90’s SUCKED as a language and should not be taught to kids today. It was great for what it was back then, but there are far better alternatives today. I belong to a bunch of retro computing groups, and I hear old guys like me scream all the time that we need to bring back BASIC. No, we don’t, at least not BASIC as it existed from the 70’s through the early 90’s. That said, this machine runs a much improved version of BASIC, without line numbers and with many structures of modern languages. This BASIC isn’t bad.

However, I would LOVE to see something like this that booted straight to Python with Pygame already installed, VGA out, and a bunch of GPIO exposed. I’ve often thought about trying my hand at building something like that, but I’m not sure my skills are up to par. Python has been ported to ARM Cortex processors in the form of MicroPython, which I absolutely love. Since this SoC has VGA out built in, it shouldn’t be too difficult to get it going.

So why exactly do you find BASIC so horrible? Because it has GOTO? I would love to see some assembly code without the equivalent of goto.

I just ported some C-code to Maximite 2 BASIC. Mostly all I had to do was remove semi-colons, change braces to equivalent structures, change comparisons such as == and != and change statements like d++ to d = d + 1. The structure of the code was unchanged.
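The mechanical part of a port like that can be pictured as a handful of text substitutions. The Python sketch below uses a hypothetical helper, `c_line_to_basic` (not part of MMBasic or any real porting tool), that applies only the changes listed in the comment to a single line: it drops the trailing semicolon, expands `++`/`--`, and rewrites `!=` and `==`. Turning braces into BASIC block structures still needs a human.

```python
import re


def c_line_to_basic(line: str) -> str:
    """Toy translation of one C statement into BASIC-style syntax.

    Handles only trailing semicolons, ++/-- increments, and the
    == and != comparison operators.
    """
    s = line.rstrip()
    if s.endswith(";"):
        s = s[:-1]  # BASIC statements need no terminator
    # d++ becomes d = d + 1, and d-- becomes d = d - 1
    s = re.sub(r"\b([A-Za-z_]\w*)\s*\+\+", r"\1 = \1 + 1", s)
    s = re.sub(r"\b([A-Za-z_]\w*)\s*--", r"\1 = \1 - 1", s)
    # BASIC uses <> for "not equal" and a single = for comparison
    s = s.replace("!=", "<>")
    s = s.replace("==", "=")
    return s


for c_line in ["d++;", "flag = (a != b);", "done = (n == 3);"]:
    print(c_line, "->", c_line_to_basic(c_line))
```

Leading indentation is kept as-is, so an indented block stays readable after the substitutions.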

Now Python, well what is so great about a language where invisible characters matter?

BASIC sucked back in the day because of its limitations. I grew up using typical 8-bit BASICs. I had a lot of fun with it, but also a lot of frustration:

– Variable names: The version on my TRS-80 only allowed like two character variable names. What was N1$ again? Oh, yeah, that’s the name of the current player in the game. Oh, no. That was in the other game I wrote. In this one, N1$ is something else – damifino what. The $ isn’t, by the way, part of the variable name. The $ tells the interpreter that N1 is a string.

– Variable types: string, integer, real, and arrays of the three. No classes, no structures, nada.

– No way to import libraries. If you had a common thing your various programs needed to do, then you had to implement that thing in every program. Since there was no way to copy and paste, you ended up typing in the same functionality in every program you wrote. Sucksville.

– Slow as F. BASIC was interpreted back then, with no JIT or other optimizations. Even comments in your code slowed things down, because the interpreter had to look at the line, decide it was a comment, and skip to the next line.

On the plus side, the simplicity made it possible to have a good grasp of the entire language and all its functions. I had a manual that described every single BASIC command available on my computer, and each command had a decent description with examples.

I think we do need a simple, limited functionality language for beginners to “get their feet wet.” I also think the 8 bit BASIC of yesteryear is a really bad place to start.

As much as I like Python, I don’t think it is the right starting place, either.

Ideally, you’d have a statically typed, interpreted language with classes and inheritance and a fairly restricted set of functions built into the language.

It’d take simple text files with a minimum of “magic incantations” to get it started. Look at the difference between Java and Python to see what I mean. The amount of boilerplate code needed to get a minimal Java program (“Hello world.”) to run is crazy. That has its place, but not for a beginner.

The idea being to introduce learners to the concepts of programming in an environment where they “see” all of the things available in the language and where the language can be completely documented in a compact manual that describes everything.

It needs a way to create and import libraries, but not a system for finding them wherever they may be in the file system. It also should not come with any libraries itself; libraries are strictly for users to create and learn from.

It should also be clear from the outset that the language is only for learning concepts; there should be a migration path from the kindergarten playground language to a regular, really useful language.

——

Climbs down off soapbox, looks around.

Sorry about that.

Oh, and the BASIC back then didn’t have functions. You had GOTO, and you had GOSUB. Both changed the execution path, but there were no functions.

Imagine writing an entire C program in “Main” and you have some idea what it was like.

There was not even any indentation to help visually separate functional blocks.

The screen on my old TRS-80 was only 64 characters wide and 16 lines high – anything that “wasted” a character on screen was a painful loss.

I don’t miss BASIC from back then, though I do miss the simplicity of the computer and the language.

Just because you can write an unstructured mess in BASIC doesn’t mean you can’t structure it. I mean that’s the main complaint Pascal zealots have about C, it doesn’t force structure.

Your GOSUBs are your functions and you can put them in distinct blocks of line numbers. Keep your main loop in a distinct block. Space it pretty with blank line numbers if you want.

In good BASICs you could save out a block of line numbers. So you could save out a subroutine, edit the line numbers if they clashed with your other program, and load it into the program you are working on. There you go, library functionality.
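The save, renumber, and merge trick is easy to picture in code. The Python sketch below uses a hypothetical `renumber_block` helper that shifts a self-contained subroutine to a new range of line numbers and rewrites the GOTO, GOSUB, and THEN targets inside it. It assumes every jump target lies within the block, and it ignores `ON ... GOTO` lists and any line numbers mentioned inside REM text. Many BASICs also shipped a RENUM-style command for renumbering a whole program.

```python
import re

# A jump keyword followed by a literal line number, e.g. "GOSUB 500".
JUMP_TARGET = re.compile(r"\b(GOTO|GOSUB|THEN)\s+(\d+)", re.IGNORECASE)


def renumber_block(lines: list[str], offset: int) -> list[str]:
    """Shift each line number, and each jump target, in a self-contained block."""
    shifted = []
    for line in lines:
        number, _, body = line.partition(" ")
        body = JUMP_TARGET.sub(
            lambda m: f"{m.group(1)} {int(m.group(2)) + offset}", body
        )
        new_number = str(int(number) + offset)
        shifted.append(f"{new_number} {body}" if body else new_number)
    return shifted


square_sub = [
    "100 REM SQUARE X INTO Y",
    "110 Y = X * X",
    "120 IF Y > 99 THEN 140",
    "130 RETURN",
    "140 Y = 99",
    "150 RETURN",
]
for line in renumber_block(square_sub, 9000):
    print(line)
```

After merging, the host program would call the moved subroutine with `GOSUB 9100` instead of `GOSUB 100`.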

I mean, you can pull up endless examples of horrible BASIC programming, and I can point to the obfuscated C contest to illustrate the horrible mess you can make with anything else if you try.

I think you need to go back and look at BBC BASIC to see what you were missing out on… but I totally agree on the general Slow as F…

BBC Basic had functions and procedures. Locomotive Basic had functions (but not procedures) and first-class interrupt support. Separating functional blocks was easy enough with blank comment lines.

What people don’t seem to understand is that this MMBasic computer can be used to create something out of the box. Try that with a Python box. Designing a Python box that you can really create something on WITHOUT adding libraries from the web is a challenge in itself.

And if you need access to the web, you end up with a Pi or similar. That already exists…

This is new

Volhout: YES. I hear this all the time – BASIC crippled and stunted a whole generation of would-be programmers. While it’s true that Python and Javascript are more capable computer languages than BASIC, they are FAR more difficult to learn and require WAY more resources than BASIC. Can you imagine running Python on an ATmega328?

I started with BASIC in 1971, then progressed to several different assemblers, then to C and C++. After decades of programming mostly in C, I succumbed to the hype and decided to pick up Python, thinking it would be better than C for simple projects, or for the front end for complex projects. I found it NOT ONE BIT easier than C/C++. All of the conceptual things that make learning full-featured compiled languages difficult to learn (things like variable scope, proper use of libraries, structured and/or object-oriented program design, for example) are the SAME. After a few months in Python, I realized that:

1) Python is WORSE at catching errors that a compiler always catches, because it doesn’t even catch syntax errors until the statement containing the error is executed.

2) Python error messages are just as confusing and/or misleading as C error messages.

3) Typing “make” and then “./project” is no harder than typing “python project”. Building programs – even at the command prompt – is not that difficult, and Makefiles are my friends.

4) Including libraries is no easier than it is in C.

5) Python is SLOW. Duh.
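On point 1, it helps to separate two kinds of mistake. CPython compiles a whole source file to bytecode before executing any of it, so a genuine syntax error is reported up front, even inside a function that never runs. What Python does defer to run time is name and type checking. The sketch below uses the built-in `compile()` and `exec()` to show the difference; the two source strings are made-up examples.

```python
SYNTAX_ERROR_SOURCE = "def never_called():\n    x = = 1\n"
NAME_ERROR_SOURCE = "def never_called():\n    return undefined_name + 1\n"


def when_is_it_caught(source: str) -> str:
    """Report at which stage CPython notices the mistake in `source`."""
    try:
        code = compile(source, "<demo>", "exec")
    except SyntaxError:
        return "compile time"
    namespace: dict = {}
    exec(code, namespace)  # defines never_called() without running its body
    try:
        namespace["never_called"]()
    except NameError:
        return "run time"
    return "not at all"


print("syntax error caught at:", when_is_it_caught(SYNTAX_ERROR_SOURCE))
print("misspelled name caught at:", when_is_it_caught(NAME_ERROR_SOURCE))
```

The misspelled-name case is the one a C compiler would reject and plain Python would not; static checkers such as pyflakes or mypy can flag it before the program runs.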

Once I learned all of this, I dumped this toy language and went back to C.

Python is not the answer, regardless of the question.

BASIC is the simplest language to learn, hands down. The claim that BASIC gives people bad habits is nonsense. It’s like saying that giving toddlers sippy cups makes it difficult for them to drink out of glasses. Or maybe you would suggest that students learn algebra while learning basic arithmetic, since arithmetic is so limited, and doesn’t teach the structure of logical proofs. Hint: they tried this in the 1960s. They called it “new math”, and it crashed and burned, because students didn’t understand what the objective was. I submit that the same thing can happen when students are taught sophisticated languages first: they trip over things like indentation and function declarations and punctuation, when they are trying to learn what is already a complex skill.

BASIC is a perfect first language, because it teaches you the MOST important part of programming: learning how to break a task down into steps. Eventually, the programmer learns its limits and moves on to a bigger and better world. If you think it’s a big step going from BASIC to C, just imagine going from nothing to Python in a single step.

BASIC is BASIC: Beginner’s All-purpose Symbolic Instruction Code. Kwitcher bellyachin’.

Does it have a SID chip in it too? Can we call it the Commodore 6400? Can we have the cool Commodore “ASCII” characters?

You can add a SID chip to it. It obviously doesn’t come with one because they’re rare and expensive.

To avoid color tints in the grayscale, they could just have used RGBI, with the last bit as intensity.

Or, with another 8 pins, a full 3x8-bit DAC, and they would have good old mode 13h!
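To see why an intensity bit helps with grays, the Python sketch below counts the exactly neutral shades (R = G = B) produced by two decodings to 8 bits per channel. The first is a 4-bit RGBI palette using CGA-style levels (0xAA per color bit plus 0x55 for intensity, ignoring CGA's special-cased brown). The second is a packed RGB332 byte, used here purely as a hypothetical comparison and not as a description of the Maximite 2's actual video modes. Because RGB332 gives blue only two bits, its blue steps rarely line up with the red and green steps.

```python
def rgbi_to_rgb(code: int) -> tuple[int, int, int]:
    """4-bit IRGB (bit 3 = intensity) to 8-bit channels, CGA-style levels."""
    boost = 0x55 if code & 0b1000 else 0

    def level(bit: int) -> int:
        return (0xAA if code & bit else 0) + boost

    return (level(0b0100), level(0b0010), level(0b0001))


def rgb332_to_rgb(code: int) -> tuple[int, int, int]:
    """8-bit RRRGGGBB to 8-bit channels, scaling each field to 0..255."""
    r, g, b = (code >> 5) & 0b111, (code >> 2) & 0b111, code & 0b11
    return (round(r * 255 / 7), round(g * 255 / 7), round(b * 255 / 3))


def neutral_grays(decode, palette_size: int) -> list[int]:
    """Brightness levels whose decoded color has R == G == B."""
    grays = {decode(c)[0] for c in range(palette_size) if len(set(decode(c))) == 1}
    return sorted(grays)


print("RGBI grays:  ", neutral_grays(rgbi_to_rgb, 16))
print("RGB332 grays:", neutral_grays(rgb332_to_rgb, 256))
```

Every other "gray" in the RGB332 case is a near miss with a slight color cast, which is the tinting the comment describes.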

It’s because of the resistor-ladder DAC, which will always be sloppy unless you hand-match the resistor values. You could also replace it with a proper DAC IC and get better results with no code changes at all.
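A quick model shows why resistor matching matters. In a binary-weighted DAC where each GPIO pin drives either VDD or ground through its own resistor into a shared node, Millman's theorem gives the node voltage as VDD times the summed conductance of the high pins, divided by the total conductance including the load. The Python sketch below assumes a hypothetical 3-bit ladder (1 kΩ, 500 Ω, 250 Ω) into a 75 Ω monitor input, plus an arbitrary mismatched set of values chosen only to illustrate the effect. It is not the Maximite 2's actual schematic.

```python
def ladder_levels(resistors_ohms, vdd=3.3, load_ohms=None):
    """Output voltage for every input code of a binary-weighted resistor DAC.

    resistors_ohms[0] belongs to the least significant bit. Each pin is
    either at vdd (bit set) or at 0 V (bit clear); the optional load goes to 0 V.
    """
    conductances = [1.0 / r for r in resistors_ohms]
    total = sum(conductances) + (1.0 / load_ohms if load_ohms else 0.0)
    levels = []
    for code in range(2 ** len(conductances)):
        high = sum(g for bit, g in enumerate(conductances) if (code >> bit) & 1)
        levels.append(vdd * high / total)
    return levels


def step_sizes(levels):
    """Voltage increase between consecutive codes (ideally all equal)."""
    return [b - a for a, b in zip(levels, levels[1:])]


ideal = step_sizes(ladder_levels([1000.0, 500.0, 250.0], load_ohms=75.0))
sloppy = step_sizes(ladder_levels([952.0, 476.0, 263.0], load_ohms=75.0))
print("ideal steps (mV): ", [round(s * 1000, 1) for s in ideal])
print("sloppy steps (mV):", [round(s * 1000, 1) for s in sloppy])
```

With the mismatched set, the step from code 3 to code 4 (where the most significant bit takes over) comes out noticeably smaller than the others. A proper DAC IC with trimmed internal resistors largely avoids that kind of uneven step.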

This is good. Thanks for sharing!

The 8-Bit Guy just posted a review: https://www.youtube.com/watch?v=IA7REQxohV4

The performance of this little beast is amazing.

The 4.77 MHz clock might have caused some of the slowness with the graphics routines…

In my 45 years’ experience since learning BASIC on HP-98xx desktop calculators, I’ve programmed in C, C++, Objective-C, PL/SQL, Haskell, and F#. The latter two are early adopters of newer programming paradigms (currying, lazy evaluation, deep recursion, etc.).

I’m practicing mmBasic in anticipation of receiving one of the Colour Maximite 2 computers during the pandemic, and was quickly able to implement a well-structured, complex-recursion, memoized version of Ackermann function evaluation. I also pretty quickly implemented a highly structured version of Conway’s Game of Life.
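For readers who have not met it, the Ackermann function is a classic stress test for recursion because its values explode even for tiny inputs. A memoized version caches each (m, n) result so that repeated sub-calls are looked up rather than recomputed. The sketch below illustrates the technique in Python, using `functools.lru_cache` as the cache. It is not the commenter's MMBasic code.

```python
from functools import lru_cache


@lru_cache(maxsize=None)  # memoize: each (m, n) pair is computed only once
def ackermann(m: int, n: int) -> int:
    """Two-argument Ackermann function."""
    if m == 0:
        return n + 1
    if n == 0:
        return ackermann(m - 1, 1)
    return ackermann(m - 1, ackermann(m, n - 1))


for m in range(4):
    print(m, [ackermann(m, n) for n in range(4)])
print(ackermann.cache_info())
```

Keep the inputs small. Something like `ackermann(4, 1)` still recurses far deeper than Python's default recursion limit, memoization or not.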

While mmBasic is missing some of the most recent concepts, I’m quite happy with the language. OTOH, its built-in editor is weird, and is a long, long way from Atom or Notepad++.

Gee, I actually wrote some real-world industrial, real-time applications in HC11 BASIC, which had real interrupt handlers and, with an 8-bit+ MCU @ 4 MHz, managed 20,000 instructions per second… Amazing what can be done in 512 bytes of EEPROM.