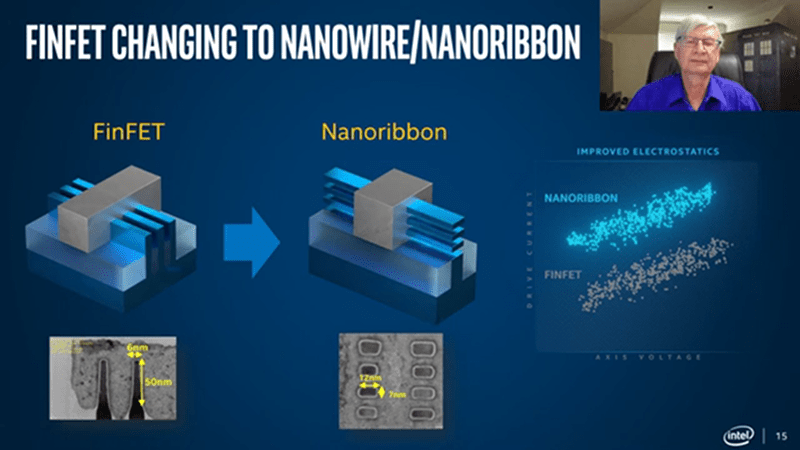

Intel’s CTO says the company will eventually abandon CMOS technology that has been a staple of IC fabrication for decades. The replacement? Nanowire and nanoribbon structures. In traditional IC fabrication, FETs are formed by doping a portion of the silicon die and then depositing a gate structure on top of an insulating layer parallel to the surface of the die. FinFET structures started appearing about a decade ago; in them, the transistor channel rises above the die surface and the gate wraps around these raised “fins.” These transistors are faster and have a higher current capacity than comparable planar devices.

However, the pressure to produce ever more sophisticated ICs will drive the move away from even the FinFET. Building the channel from multiple flat sheets or multiple wires lets the gate surround the channel on all sides, leading to even better performance. It also allows finer tuning of the transistor characteristics.

Of course, another goal of these nanostructures is density. With the fin topology, one section of the channel remains bound to the die. With the nanoribbon or wire structure, it is as if the fin were floating, which allows the gate to surround the channel.

We’ve seen a lot of work on nanowires lately. New structures and new materials will lead to devices that far exceed the performance of what we have today.

Now if only they could avoid side-channel attacks.

Pretty much never gonna happen if we want the maximum amount of performance squeezed out of a given chunk of silicon. There are so many timing-based tricks to exploit that are physically and fundamentally vulnerable to listening in from somewhere else on the die. I dunno if the people who demand security will ever economically outweigh those who demand a bit more out of a benchmark, at least not until side-channel attacks start affecting more people’s daily lives. Most users and most dollars just want a few more fps or a shorter processing time on some other intensive computation. It’s a trade-off. But I do generally side with you, and I wish there were more options besides a really expensive POWER9 workstation, which comes with a whole host of its own problems in real-world professional applications.

The problem is that it’s not a few percent. Intel made a long list of elements they’d need to disable, and it decreases performance to <1% of the original.

What bothers me is the incessant need to take net-facing servers and have them carry out sensitive tasks that don’t require net exposure… Put them on 2 different machines, and the issue evaporates.

Yes, the problem isn’t really with the hardware being insecure against side-channel attacks.

But rather that one shouldn’t run safety-critical stuff in an environment where one also runs arbitrary stuff…

In some cases, one will have to run stuff that needs security next to stuff one can’t really trust. But here the answer is simple: just run the untrusted thing in a runtime environment where we can interleave multiple processes. That way, each process can’t execute its commands fast enough to mount a side-channel attack as easily. (It would likely still have a fast enough serial execution rate to tell that it was waiting on an HDD/SSD rather than accessing something from RAM…) This isn’t a perfect solution either, though…

Though, if one had more granular control over what runs on what core in a system, then one could improve security a fair bit.

Though, in terms of timing attacks on cache, CPU makers could implement one of the following two rather simple solutions:

In regard to processes trying to access stuff they shouldn’t be allowed to access, that problem can be solved by having a fixed response time. If the response time is long enough, all one learns is that one wasn’t allowed to access that content. (The response time should of course be counted from when the process requests the data, not from the point where the CPU figures out it isn’t allowed to access it.)
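The fixed-response-time idea can be illustrated with a user-space software analogy. This is only a sketch under stated assumptions: a real version would live in the CPU, and `check_access`, `fixed_time_read` and `RESPONSE_DEADLINE_S` are hypothetical names with a made-up policy and deadline. Permitted reads return immediately; a denied read is released only once a fixed deadline, measured from the moment of the request, has passed, so how long the check itself took is hidden.

```python
import time

# Hypothetical latency budget; it must exceed the slowest possible denial path,
# otherwise the padding no longer hides anything.
RESPONSE_DEADLINE_S = 0.005


def check_access(pid: int, address: int) -> bool:
    """Hypothetical permission policy: even PIDs may read addresses below 0x1000."""
    return pid % 2 == 0 and address < 0x1000


def fixed_time_read(pid: int, address: int, memory: dict):
    """Return memory[address] if permitted; otherwise deny at a fixed deadline."""
    # The clock starts when the request arrives, not when the check completes.
    start = time.perf_counter()
    if check_access(pid, address):
        return memory.get(address)  # well-behaved reads are not delayed
    # Denied: whatever happened before the denial (cache hit or miss, fast or
    # slow check), the answer is only released once the fixed deadline passes.
    while (remaining := RESPONSE_DEADLINE_S - (time.perf_counter() - start)) > 0:
        time.sleep(remaining)
    raise PermissionError(f"access to {address:#x} denied")
```

A process probing forbidden addresses sees every denial take the same time, while processes that stay within their permissions never hit the padded path.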

Or one can simply have the CPU ask the kernel to switch out the thread, and stall it in the meantime. The process will suddenly have an exceptionally hard time doing a timing attack, since no matter how fast it tries, it’s as if it blacked out for a while. So the only conclusion it can come to is, “Some fairly unknown amount of time has passed…” A well-behaved process rarely, if ever, tries to access content outside of what it’s allowed to access, and would therefore not be affected by this security implementation. (Edge cases do of course exist.)
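The second idea differs from the first only in that the delay is unpredictable rather than fixed. Here is a minimal software sketch, assuming a random sleep stands in for the kernel descheduling the thread; `stalled_read`, `MIN_STALL_S` and `MAX_STALL_S` are hypothetical names and the stall range is illustrative, not a recommendation.

```python
import random
import time

# Hypothetical bounds for how long a faulting thread is descheduled.
MIN_STALL_S = 0.002
MAX_STALL_S = 0.010


def stalled_read(address: int, memory: dict, permitted: set, rng: random.Random):
    """Return memory[address] if permitted; otherwise stall unpredictably, then deny."""
    if address in permitted:
        return memory[address]  # well-behaved accesses pay nothing
    # Stand-in for "ask the kernel to switch out the thread": the faulting caller
    # sleeps for a random interval it can neither predict nor measure around.
    deadline = time.perf_counter() + rng.uniform(MIN_STALL_S, MAX_STALL_S)
    while (remaining := deadline - time.perf_counter()) > 0:
        time.sleep(remaining)
    raise PermissionError(f"access to {address:#x} denied")
```

Passing the `random.Random` instance in explicitly keeps the sketch testable; from the faulting caller’s point of view, all it can observe is that “some fairly unknown amount of time has passed.”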

Both solutions will have performance impacts on processes that occasionally try, by mistake, to access data they aren’t allowed to, but this is an edge case. Meanwhile, well-behaved processes won’t even know the security feature is there, and processes actively trying to poke at such content will be severely hampered in their attempts. (This of course doesn’t fix all side-channel and timing-based attacks.)

Ryzen “Zen 2” says hi.

So I get the idea that it’s gonna be all fine and dandy in the lab, but when they actually build something more than a toy tester, they’ll have a few million transistors in there and go “whoops, the substrate ain’t sucking the heat out like it does when we lay them flat.” Then they’ll end up depositing more insulator on top, getting all the thermal noise problems back, having to raise power a tad to compensate, and having horrible thermals again/still. Maybe they’ll end up sticking those thermal cavity LEDs on them, and you’ll need lightpipes to cool your CPU instead of heatpipes.

Hey, breakthroughs are never easy. But it’s not like it’ll be the first two steps forward/one step back situation that eventually gets there and takes over. Sometimes realistic expectations are just a march into the grave.

There is still the option to explore carbon-based semiconductors. IIRC they are growing large enough diamond ingots, but I don’t know whether dopants and insulators have been identified, or the processes to create them on a wafer.

The switch to diamond semiconductors has been talked about for quite a while, but we keep squeezing more out of silicon. That is good to some degree, because researchers can continue down more exploratory paths and build a large knowledge base rather than focus on production.

Don’t quote me on that, but I seem to remember having read somewhere that low-volume diamond electronics already exist for specialized/niche use. Maybe military or aerospace specs?

I’ll have to follow up, but that’s really cool news!

Production often seems to be the hardest barrier to break and if this tech has managed that to even a limited degree, then maybe it will be easier to build on if/when the industry can’t get any more out of silicon.

I imagine the heat transfer will be improved. And that Intel probably is on it.

Maybe use something like artificial diamond?

Now to see if this is actually gonna materialize in 2025, or if it’ll still be 5 years out.

They are critical in the deployment of flying cars.

Can’t trust their timetables these days. Might as well add carbon nanotubes for interconnect too.

Maybe Linux will become the dominant desktop, gaming and console OS by then!? :P

The devices as described are still FETs and the technology is still CMOS.

There’s a line in the AnandTech article summary: “before eventually potentially leaving CMOS altogether.” I think the Hackaday author saw that and didn’t understand the context. The discussed devices are of course CMOS FETs: GAAFET (nanowire) and MBCFET (nanosheet).

Came here to say the same. They’re not abandoning CMOS, just using a new more efficient topology for CMOS.

>Intel’s CTO says the company will eventually abandon CMOS technology that has been a staple of IC fabrication for decades. The replacement?

Oh come on guys. This is still CMOS, because there still will be N- and P-channel field-effect-transistors.

It’s actually not that difficult to understand why the new structure brings an improvement. Traditionally, transistors were flat, which means that only one surface can be used to control the current flow in the channel. A few years ago, Intel and others introduced the FinFET, where the silicon channel is enclosed by the control gate on three sides. This allows for much better control of the transistor.

Nanowire devices bring this to the logical conclusion and allow building transistors where the channel is controlled from all 4 sides, or is totally surrounded by the gate.
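To make the “one side, three sides, all sides” progression concrete, here is a first-order sketch of the gated channel perimeter (the effective width the gate controls) for each topology. It uses the usual textbook approximations, W for a planar device, 2·H_fin + W_fin for a fin, and 2·(W + T) per nanosheet, and ignores corner rounding. The class names and the dimensions in the test are made up for illustration and do not describe any Intel process.

```python
from dataclasses import dataclass


@dataclass
class Planar:
    width_nm: float

    def gated_perimeter_nm(self) -> float:
        # The gate sits on the top surface only: one side controls the channel.
        return self.width_nm


@dataclass
class FinFET:
    fin_width_nm: float
    fin_height_nm: float

    def gated_perimeter_nm(self) -> float:
        # The gate wraps both sidewalls and the top: three sides.
        return 2 * self.fin_height_nm + self.fin_width_nm


@dataclass
class NanosheetStack:
    sheet_width_nm: float
    sheet_thickness_nm: float
    sheets: int

    def gated_perimeter_nm(self) -> float:
        # The gate surrounds every sheet on all four sides.
        return self.sheets * 2 * (self.sheet_width_nm + self.sheet_thickness_nm)
```

With illustrative numbers, `Planar(40)` gives 40 nm of gated width, `FinFET(7, 50)` gives 107 nm, and `NanosheetStack(30, 5, 3)` gives 210 nm. Because sheet width and sheet count are both free parameters, the drive width can be adjusted more finely than by adding whole fins.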

The idea behind this is rather simple. The big challenge is actually manufacturing it at the nanometer scale. There will also be funny things happening due to the floating bulk.

Guided Vapor Deposition

Now if only they would get rid of the x86 architecture, implement something more sane and better, and ask Microsoft to do what Apple is doing now: port the OS to the new CPU/architecture, provide tools for everyone else to do the same, and provide a means of distributing the apps for the old and the new cpu/architecture.

Of course, with the motivation of making cheaper CPUs with at least the performance of the current ones, and ample room for future improvement of that performance. The x86 CPUs are expensive, and it’s only because they have so much bloat. It’s like a Christmas tree with all sorts of attached ornaments, making the tree groan under their weight.

If not, no problem. Apple will do it regardless, and will become as big as Microsoft and Intel together.

“Apple will do it regardless, and will become as big as Microsoft and Intel together.”

Keep dreaming.

Apple has at various times had technically better hardware, but has never come close to wiping out Intel and the PC.

Apple is simply too expensive for the vast majority of people.

Plus Apple goes for efficiency and not performance.

Plus, Apple goes for your money with closed patents, blocking open source/hardware, not allowing white-labeled hardware; they will want to approve your world. That happened to MS, and it is now rolling back to support open source/open hardware. At least you can run the same software on Intel or any other CPU maker’s chips, but can you run any software on an Apple device?

They also love sticking rather high-performance parts in inadequately cooled and powered shells, so they run like crap under any real load and die fast.

Then refuse to fix it…

I agree with the “keep dreaming” comment. Though for me it would be a nightmare, not at all an enjoyable dream. I don’t exactly like M$, but they have been getting less obnoxious in recent years. Apple, on the other hand, has got worse and worse as it became the fashionable thing to own…

While x86 does have its trade-offs, it’s not all bad compared to RISC-type chips.

For me, the biggest thing Intel needs to do is either do away with the all-powerful Management Engine bollocks or open it up to proper security scrutiny, so it’s possible to have faith in it and make use of it. More calculations per watt, and scalable power draw for efficient energy use at all loads, are definitely the metrics we should be prioritising rather than peak speed and bigger-numbers-better stuff, and it seems we are seeing a trend in that direction.

Yah, that’s particularly egregious, pay twice the money for the same CPU numbers as a real computer, then performance is slashed in half by the thermal throttling. Double Apple tax.

But the cultists be like, “But it looks so sleek sitting on the table next to my grande cappuccino macchiatio carburante idiota.”

Market caps: MS 1250 billion. Intel 230 billion. Apple 1300 billion. So Apple is pretty close to MS + Intel. Who’s dreaming?

Intel has tried to kill x86, and even had the aid of Microsoft. Remember Itanium?

And it takes a lot more than an ISA to be a computer.

I tried jumping out of the PC hardware world once, about 9 or so years ago. I learned that ARM is a development train wreck: you were targeting specific devices with specific SOCs. Microsoft couldn’t help wrangle that ecosystem, and Android has only been successful because they didn’t try until it became pretty well established (and it’s still not ‘PC-like’ yet). Even Linus has weighed in on some of the dumb things ARM OS devs tend to do.

I’m out of the PC hardware world for a second time, working at an SOC company, and it’s still a mess of proprietary SOCs and associated software that all need the IP owners’ blessing for the OS to work on a given SOC. If the company doesn’t want to do the legwork for the OS, you don’t get it. The same is true for security patches.

AMD even took a crack at this by releasing an ARM server platform spec. It’s been demoed by MS, but it’s largely ignored by SOC makers, who still build highly proprietary systems.

Here is the conclusion I’ve come to. If the OSS community wants Intel to go away or change, then they will have to make the hardware to make it happen. It needs to go beyond the ISA and the CPU; it needs to extend to system buses, peripheral interfaces, software to work all that (best if it all figures itself out at runtime), a common means of platform initialization and OS IPL, flexibility in OS IPL and storage location, and the ability for the platform to grow based on future needs.

Brave statements from a company that’s lagging so far behind in CPU technology.

Yeah, I was just thinking, “Gee, can’t manage 10nm and thermals; how about we catch up before going down a rabbit hole?”

Technology-wise, in x64 AMD is whipping their ass, dropping 7nm stuff all day long that almost matches or even slightly beats the performance of Intel’s 6th or 7th generation Gen 6 refresh (“gen 10,” as they claim) at 2/3 the price or cheaper… and in the ARM market, PHHHFT LOL

AMD is rumored to release 5nm chips in Q4 2020.

Are the nm comparisons really apples-to-apples comparisons? I read that “5nm” doesn’t always mean the same thing for different manufacturers’ processes (the spacing within a transistor vs. the spacing between transistors, etc.).