Apple computers will be moving away from Intel chips to its own ARM-based design. An interesting thing about Apple as a company is that it has never felt the need to tie itself to a particular system architecture or ISA. Whereas a company like Microsoft mostly tied its fortunes to Intel’s x86 architecture, and IBM, Sun, HP and other giants preferred vertical integration, Apple is currently moving towards its fifth system architecture for its computers since the company was formed.

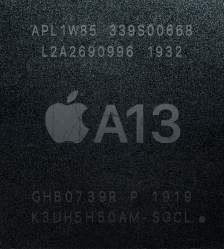

What makes this latest change possibly unique, however, is that instead of Apple relying on an external supplier for CPUs and peripheral ICs, they are now targeting a vertical integration approach. Although the ARM ISA is licensed to Apple by Arm Holdings, the ‘Apple Silicon’ design that is used in Apple’s ARM processors is their own, produced by Apple’s own engineers and produced by foundries at the behest of Apple.

In this article I would like to take a look back at Apple’s architectural decisions over the decades and how they made Apple’s move towards vertical integration practically a certainty.

A Product of its Time

The 1970s was definitely the era when computing was brought to living rooms around the USA, with the Commodore PET, Tandy TRS-80 and the Apple II microcomputers defining the late 1970s. Only about a year before the Apple II’s release, the newly formed partnership between Steve Wozniak and Steve Jobs had produced the Apple I computer. The latter was sold as a bare, assembled PCB for $666.66 ($2,995 in 2019), with about 200 units sold.

Like the Apple I, the Apple II and the Commodore PET were both based on the MOS 6502 MPU (microprocessor unit), which was essentially a cheaper and faster version of Motorola’s 6800 MPU, with the Zilog Z80 being the other popular MPU option. What made the Apple II different were Wozniak’s engineering shortcuts to reduce hardware costs, using various tricks to eliminate separate DRAM refresh circuitry and remove the need for separate video RAM. According to Wozniak in a May 1977 Byte interview, “[..] a personal computer should be small, reliable, convenient to use, and inexpensive.”

With the Apple III, Apple saw the need to provide backward compatibility with the Apple II, which was made easy because the former kept the same 6502 MPU and a compatible hardware architecture. Apple’s engineers did, however, put in limitations that prevented the emulated Apple II system from accessing more than a fraction of the Apple III’s RAM and other hardware.

The 32-bit Motorola Era

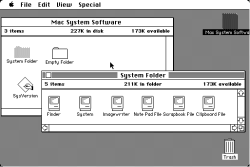

With the ill-fated Apple Lisa (1983) and the much more successful Apple Macintosh (1984), Apple transitioned to the Motorola 68000 (m68k) architecture. The Macintosh was the first system to feature what would become the classic Mac OS series of operating systems, at the time imaginatively titled ‘System 1’. As the first step into the brave new world of 32-bit, GUI-based, mouse-driven desktops, it did not have any focus on backward compatibility. It also cost well over $6,000 when adjusted for inflation.

The reign of m68k-based Macintosh systems lasted until the release of the Macintosh LC 580, in 1995. That system featured a Motorola 68LC040 running at 33 MHz. That particular CPU in the LC 580 featured a bug that caused incorrect operation when used with a software FPU emulator. Although a fixed version of the 68LC040 was introduced in mid-1995, this was too late to prevent many LC 580s from shipping with the flawed CPU.

The year before the LC 580 was released, the first Power Macintosh system had already been released after a few years of Apple working together with IBM on the PowerPC range of chips. The reason for this shift could be found mostly in the anemic performance of the CISC m68k architecture, with Apple worried about the industry’s move to the much better-performing RISC architectures from IBM (POWER), MIPS, Sun (SPARC) and HP (PA-RISC). This left Apple no choice but to seek an alternative to the m68k platform.

Gain POWER or Go Vertical

The development of what came to be known as the Power Macintosh series of systems began in 1988, with Apple briefly flirting with the idea of making its own RISC CPU, to the point where it bought a Cray-1 supercomputer to assist in the design efforts. Ultimately it was forced to cancel this project due to a lack of expertise in this area, which required a look at possible partners.

Apple would look at the available RISC offerings from IBM (POWER1), Sun, MIPS, Intel (i860) and ARM, as well as Motorola’s 88110 (88000 RISC architecture). All but Motorola’s offering were initially rejected: Sun lacked the capacity to produce enough CPUs, MIPS had ties with Microsoft, Intel’s i860 was too complex, and IBM might not want to license its POWER1 core to third parties. Along the way, Apple did take a 43% stake in ARM, and would use an ARM processor in its Newton personal digital assistant.

Under the ‘Jaguar’ project moniker, a system was developed that used the Motorola 88110, but the project was canceled when Apple’s product division president (Jean-Louis Gassée) left the company. Renewed doubt in the 88110 led to a meeting being arranged between Apple and IBM representatives, with the idea being to merge the POWER1’s seven chips into a single chip solution. With Motorola also present at this meeting, it was agreed to set up an alliance that would result in the PowerPC 601 chip.

Apple’s System 7 OS was rewritten to use PowerPC instructions instead of m68k ones, allowing it to be used with what would become the first PowerPC-based Macintosh, the Power Macintosh 6100. Because of the higher performance of PowerPC relative to m68k at the time, the Mac 68k emulator utility that came with all PowerPC Macs was sufficient to provide backward compatibility. Later versions used dynamic recompilation to provide even more performance.

Decade of POWER

The PowerPC era is perhaps the most distinctive of all Apple designs, with the colorful all-in-one iMac G3, the Power Macintosh G3, and the Power Mac G4 and G5 still being easily recognized computers that distinguished Apple systems from ‘PCs’. Unfortunately, by the time of the G4 and G5 series of PowerPC CPUs, their performance had fallen behind that of Intel’s and AMD’s x86-based offerings. Although Intel made a costly mistake with its NetBurst (Pentium 4) architecture during the so-called ‘MHz wars’, this didn’t prevent PowerPC from falling further and further behind.

The Power Mac G5, with its water-cooled G5 CPUs, struggled to keep up with the competition and had poor performance-per-watt numbers. Frustrations between IBM and Apple about whether to focus on PowerPC or IBM’s evolution of server CPUs called ‘Power’ did not help here. This led Apple to the obvious conclusion: the future was CISC, with Intel x86. With the introduction of the first Intel-based iMac and MacBook Pro in January 2006, Apple’s fourth architectural transition had commenced.

As with the transition from m68k to PPC back in the early 90s, a utility similar to the Mac 68k emulator, called Rosetta, was used. This dynamic binary translator supports translating G3, G4 and AltiVec instructions, but not G5 ones. It also comes with a host of other compromises and performance limitations. For example, it does not support applications for Mac OS 9 and older (‘Classic’ Mac OS), nor certain Java applications.
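Rosetta’s internals are not described here, but the general idea behind a dynamic binary translator can be shown with a toy sketch: guest code is translated one basic block at a time the first time execution reaches it, and the translation is cached so that loops reuse it instead of being decoded again. The guest instruction set, the function names, and the use of Python closures as stand-in “host code” are all hypothetical; a real translator emits native machine code.

```python
# Hypothetical toy guest ISA (not 68k, PowerPC or x86):
#   ("addi", reg, imm)    reg += imm
#   ("jnz", reg, target)  branch to target if reg != 0, else fall through
#   ("halt",)             stop


def make_addi(r, imm):
    """Return a host-level step that performs one guest 'addi'."""
    def step(regs):
        regs[r] += imm
    return step


def translate_block(code, pc):
    """Translate guest code from pc up to the next branch or halt into one
    host function. That function runs the block and returns the next guest
    pc, or None when the guest halts."""
    steps = []
    while code[pc][0] == "addi":
        _, r, imm = code[pc]
        steps.append(make_addi(r, imm))
        pc += 1
    end = code[pc]
    if end[0] == "jnz":
        _, r, target = end
        fallthrough = pc + 1

        def block(regs):
            for step in steps:
                step(regs)
            return target if regs[r] != 0 else fallthrough
    elif end[0] == "halt":
        def block(regs):
            for step in steps:
                step(regs)
            return None
    else:
        raise ValueError(f"unknown instruction {end!r}")
    return block


def run(code, regs):
    """Execute guest code, translating each block once and caching it."""
    cache = {}          # guest pc -> translated host function
    translations = 0
    pc = 0
    while pc is not None:
        block = cache.get(pc)
        if block is None:
            block = translate_block(code, pc)
            cache[pc] = block
            translations += 1
        pc = block(regs)
    return regs, translations


# r0 is a loop counter, r1 accumulates 10 per iteration
LOOP = [("addi", 0, 3), ("addi", 1, 10), ("addi", 0, -1), ("jnz", 0, 1), ("halt",)]
```

Running `run(LOOP, [0, 0])` translates only three blocks, however many times the loop body executes; that reuse is what lets a translator like this outrun a plain instruction-by-instruction interpreter on loop-heavy code.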

The main difference between the Mac 68k emulator and Rosetta is that the former ran in kernel space and the latter in user space, meaning that Rosetta is both much less effective and less efficient due to the overhead from task switching. These compromises led to Apple also introducing the ‘universal binary’ format, also known as a ‘fat binary’ or ‘multi-architecture binary’. The same executable can then contain binary code for more than one architecture, such as PowerPC and x86.
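As a minimal sketch of what this looks like on disk, assuming the Mach-O layout from Apple’s `<mach-o/fat.h>`: a universal binary starts with a big-endian header (the magic number `0xCAFEBABE` and a slice count), followed by one record per architecture giving its CPU type and where that architecture’s slice sits in the file. The helper names `list_slices` and `make_fat_header` are hypothetical, and the builder only produces a header for demonstration, not a loadable executable.

```python
import struct

# Constants from Apple's <mach-o/fat.h> and <mach/machine.h>
FAT_MAGIC = 0xCAFEBABE          # first four bytes of a universal binary
CPU_ARCH_ABI64 = 0x01000000     # flag OR-ed into cputype for 64-bit ABIs
CPU_NAMES = {
    7: "i386",
    7 | CPU_ARCH_ABI64: "x86_64",
    18: "ppc",
    18 | CPU_ARCH_ABI64: "ppc64",
    12 | CPU_ARCH_ABI64: "arm64",
}

FAT_HEADER = struct.Struct(">II")    # magic, nfat_arch (all fields big-endian)
FAT_ARCH = struct.Struct(">iiIII")   # cputype, cpusubtype, offset, size, align


def list_slices(data: bytes):
    """Return (architecture, offset, size) for each slice in a fat header."""
    magic, nfat_arch = FAT_HEADER.unpack_from(data, 0)
    if magic != FAT_MAGIC:
        raise ValueError("not a universal (fat) binary")
    slices = []
    for i in range(nfat_arch):
        cputype, _subtype, offset, size, _align = FAT_ARCH.unpack_from(
            data, FAT_HEADER.size + i * FAT_ARCH.size)
        slices.append((CPU_NAMES.get(cputype, hex(cputype)), offset, size))
    return slices


def make_fat_header(slices):
    """Build a fat header for (cputype, offset, size) triples (demo only)."""
    out = FAT_HEADER.pack(FAT_MAGIC, len(slices))
    for cputype, offset, size in slices:
        # align is stored as a power of two; 14 means 2**14 bytes
        out += FAT_ARCH.pack(cputype, 0, offset, size, 14)
    return out


if __name__ == "__main__":
    header = make_fat_header([(18, 4096, 1000), (7, 8192, 1200)])
    print(list_slices(header))
```

On a real Mac, `lipo -info` reports the same information. Java class files also begin with `0xCAFEBABE`, so a production tool would need further checks before trusting the magic number alone.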

All’s Well that Ends Vertically Integrated

A rare few of us may have missed the recent WWDC announcement where Apple made it official that it will be switching to the ARM system architecture, abandoning Intel after fourteen years. What the real reasons are behind this change will have to wait, for obvious reasons, but it was telling when Apple acquired P.A. Semi, a fabless semiconductor company, in 2008. Ever since Apple began to produce ARM SoCs for its iPhones instead of getting them from other companies, rumors have spread.

As the performance of this Apple Silicon began to match and exceed that of desktop Intel systems in benchmarks with the Apple iPhones and iPads, many felt that it was only a matter of time before Apple would make an announcement like this. There has also been the lingering issue of Intel not having had a significant processor product refresh since introducing Skylake in 2015.

So there we are, then. It is 1994 and Apple has just announced that it will transition from m68k CISC to its own (ARM-based?) RISC architecture. Only it is 26 years later and Apple is transitioning from x86 CISC to its own ARM-based RISC architecture, seemingly completing a process that started back in the late 1980s at Apple.

As for the user experience during this transition, it’s effectively a repeat of the PowerPC to Intel transition from 2006 onward, with Rosetta 2 (Rosetta Harder?) handling (some) binary translation tasks for applications that do not have a native ARM port yet, and universal binaries (v2.0) for the other applications. Over the next decade or so Apple will find itself straddling the divide between x86 and ARM before it can presumably settle into its new, vertically integrated home after nearly half a century of flitting between foreign system architectures.

“Although the ARM ISA is licensed to Apple by Arm Holdings, the ‘Apple Silicon’ design that is used in Apple’s ARM processors is their own, produced by Apple’s own engineers and produced by foundries at the behest of Apple.”

Trust only oneself. Netflix and others have shown what happens when you give control of one’s fate to others. Original content is the result. Microsoft could get away with Wintel, because Intel needed MS as much as MS needed Intel.

Please tell me where I can purchase the Netflix-manufactured set-top box that enables them to not depend on third parties?

Where is Apple going to start up their silicon and rare earth metal mines?

Go far enough down and you become dependent on someone.

California has fantastic rare-earth mines. Maybe they will be allowed to open again and the World won’t be dependent on China.

Thing about those mines is they pollute A LOT without costly measures to take care of that.

And factor in the livable wages for the workers too, and you now know why China’s the supplier.

Cheaper, and out-of-sight means out-of-mind.

The reason why they shut down those mines was because they had pipeline bursts regularly spewing out nuclear waste (uranium/thorium).

It’s the same problem in China – they just don’t care.

Do people actually mine silicon or just go to the beach?

It is dug up from open pit mines and dredged from beaches. While silicate rocks are everywhere, there’s actually relatively few places where you have (almost) pure SiO2. Most beach sand is too low grade to be used for electronics or glass.

Not for the lack of trying… https://www.engadget.com/2013-01-23-fast-company-offers-more-details-on-netflixs-abandoned-hardware.html

FYI, that would be “Roku” which was a spin-off from Netflix – https://venturebeat.com/2013/01/23/netflix-created-roku/

“As the performance of this Apple Silicon began to match and exceed that of desktop Intel systems in benchmarks with the Apple iPhones and iPads, many felt that it was only a matter of time before Apple would make an announcement like this.”

This is significant, because ARM as the underdog has always been the “lessor” brand when it compares to x86, their dreams of equal standing in the desktop and server space. Mainstream processors, much like political parties, need some competition, and the landscape will be ARM and x86.

It’s funny because ARM is both the lesser brand and, making their money by licensing their product, the lessor brand.

Once again people, please flush after leaving such droppings

“ARM as the underdog has always been the “lessor” brand when it compares to x86,”

What a joke; take a look at the embedded space, where the exact opposite is the case.

I don’t think he was ignorant of this, just noting that they are definitely underdogs in desktop and servers. As he says: “their dreams of equal standing in the desktop and server space”.

ARM and RISC is fundamentally about cycling simple instructions rapidly rather than having powerful complex instructions that allow extensive pipeline optimization and memory logistics to be handled by the CPU itself. It lends itself better to simplified and cheap implementation on silicon with greater electrical power efficiency, at the expense of raw processing power. All the design choices so far have been made on the assumption that it’s going to be put in battery powered devices with little need to do complex math or big data operations in a hurry.

That’s why it’s had so much success in the embedded market. ARM has basically been the Pareto-optimized CPU with 80% of the utility at 20% of the power budget, while x86 has been the 100% CPU that can run on 100 watts off the wall.

The current crop of ARMs like the A53 have deep pipelines that allow greater than 1 MIPS per MHz, with some averaging 1.5. But so far they produce too much heat to go over 2 GHz; most top out at 1.2 to 1.8 GHz. AMD and Intel are at more than 2 MIPS/MHz (per thread? or per core? I can’t tell from what I have read) and at 4 GHz. It looks like at least a 4x advantage over ARM. Use 4x the number of cores, solve the heat issue and you get – hmmmm – a Ryzen/i9? Maybe simpler though. The AMD and Intel chips have huge amounts of circuitry to boost instruction speed and handle the two-address architecture versus the ARM one-address architecture, popularly called “load-store” for some reason.
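Taking the figures in the comment above at face value, per-core throughput is just the instructions-per-clock figure (expressed as MIPS/MHz) multiplied by the clock rate in MHz. This is a back-of-the-envelope check under those assumptions, not a benchmark:

```latex
\begin{aligned}
\text{MIPS} &= \frac{\text{MIPS}}{\text{MHz}} \times f_{\text{MHz}} \\
\text{ARM, best case:}  &\quad 1.5 \times 1800 = 2700 \\
\text{ARM, worst case:} &\quad 1.0 \times 1200 = 1200 \\
\text{x86:}             &\quad 2.0 \times 4000 = 8000 \\
\text{ratio:}           &\quad \tfrac{8000}{2700} \approx 3.0 \quad\text{to}\quad \tfrac{8000}{1200} \approx 6.7
\end{aligned}
```

On these numbers the gap is roughly 3x against the fastest ARM parts and about 6.7x against the slowest, growing further if x86 really exceeds 2 MIPS/MHz; a 4x advantage holds only against the slower ARM parts.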

You bring out the load-store architecture, which means you need to use a separate instruction to load data from memory to a register, then more instructions to do ALU operations on them, and then again separate instructions to store the register contents back to memory. It’s called load-store because you keep spending half your instructions just loading and storing data.

On x86 and derivatives, pulling data from memory to a register and doing an ALU operation is typically a single instruction. The ARM needs to complete four instructions to load two values, add them, and store the result back to memory while the x86 may do the same in two instructions. That too matters when you’re comparing MIPS/MHz since the x86 architecture can so often do twice the work per instruction. It’s harder to optimize a RISC processor for this kind of efficiency because it doesn’t have these complex routines. You have to build an engine that tries to guess from the incoming stream of small atomic instructions what complex operation the code is trying to accomplish.
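A toy model can make the instruction-count point concrete. The sketch below assumes a hypothetical four-register machine with two-operand instructions (not real ARM or x86 encodings) and counts instructions and memory accesses for `a += b` written in load-store style and in register-memory style.

```python
def execute(program, memory):
    """Run a toy program; return (final memory, instruction count, memory accesses)."""
    regs = [0] * 4
    mem = list(memory)
    instructions = accesses = 0
    for op, dst, src in program:
        instructions += 1
        if op == "LD":        # regs[dst] = mem[src]    (load)
            regs[dst] = mem[src]
            accesses += 1
        elif op == "ST":      # mem[dst] = regs[src]    (store)
            mem[dst] = regs[src]
            accesses += 1
        elif op == "ADD":     # regs[dst] += regs[src]  (register-register ALU op)
            regs[dst] += regs[src]
        elif op == "ADDM":    # mem[dst] += regs[src]   (read-modify-write on memory)
            mem[dst] += regs[src]
            accesses += 2
        else:
            raise ValueError(f"unknown op {op}")
    return mem, instructions, accesses


A, B = 0, 1  # memory addresses of the variables a and b

# a += b on a load-store machine: ALU ops only ever touch registers
LOAD_STORE = [("LD", 0, A), ("LD", 1, B), ("ADD", 0, 1), ("ST", A, 0)]

# a += b on a register-memory machine: the add can target memory directly
REG_MEM = [("LD", 0, B), ("ADDM", A, 0)]

if __name__ == "__main__":
    print(execute(LOAD_STORE, [2, 3]))
    print(execute(REG_MEM, [2, 3]))
```

Both versions make three memory accesses; the register-memory version simply packs them into half as many instructions, which is why raw MIPS/MHz figures are not directly comparable between the two styles. For `c = a + b` into a third variable, x86 typically still needs three instructions (load, add from memory, store), since it has no memory-to-memory add.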

It’s like the difference between guiding someone through every single step needed to eat a bowl of soup, starting from “pick up the spoon…”, versus just saying, “eat this bowl of soup.” If you insist on using the reduced instruction set to describe the task, then even a smart person who knows that they can just drink the soup from the bowl will take more time to guess the ultimate intent, and so they can’t work efficiently.

Indeed, the ARM is a load-store architecture, and may need 4 instructions in total to do the ‘same work’ as one x86 instruction. Consider also that the ARM has 12 registers that can also be used to hold temporary results that never need to get stored back to memory; registers can be accessed in one clock cycle, as opposed to memory, which may take dozens. The x86 has maybe 6 registers to do the same, so you end up spilling more registers to memory, and wasting clock cycles waiting for the memory if you need to read it back. The caches help this some, but they can’t help in all cases. To get extra performance, the system designer has to throw a bunch of extra hardware outside of the processor itself at it. In the case of the ARM, a similar external cache could help even more, as the memory accesses will be more spaced out. If your compiler is also aware of the pipeline, it might be possible for it to interleave two or more code sequences to achieve even more ‘MIPS’…

If you want a thorough analysis of all of this, check out the books by Hennessy and Patterson. In the end it does depend on what mix of instructions you plan to use for your application. I would bet that for things that fit well in the 6 x86 registers, using an ARM or other RISC processor for the same job might end up having very similar performance. At some level of complexity, the RISC machine might pull ahead for the same memory architecture.

For the users it means choosing between two system vendors that are no longer directly compatible, so they’re not directly competing and the prices of both can go up since the users have to pick their team.

But we’ve been here before, with the home computer wars of the 80’s.

They weren’t compatible before, so nothing’s changed.

Except you could run Windows on a mac and make an intel PC into a hackintosh, so you had access to both as needed.

Running Windows on a mac is supported, but running MacOS on non-Mac hardware is not.

“supported” as in through layers of translation that make it slow and buggy. An Intel mac could run windows natively, and back in the XP/7 era nearly 2/3 of mac owners from what I’ve seen and heard were using bootcamp to have access to games and productivity software.

Yeah! They can re-use all that hydraulic-mining equipment gathering dust in Gold-Rush museums, and level the Sierras for good this time!

I’m also reminded that in prior migrations there were some very late arrivals to the native app scene. HP withheld driver support for scanners for a very, very long time after the PowerPC->Mac OS X->Intel migrations. Notably too, good ol’ Adobe.com was dead LAST in the mission-critical app migrations every time Apple decided to migrate. Here’s to a native version of Creative Cloud on Apple Silicon, which I predict we won’t see until 2030 at the earliest given past reluctance/recalcitrance.

Apple announced Adobe was already working on a native version of Creative Cloud and demoed Photoshop and Lightroom running on their Apple silicon at WWDC.

I think what they demoed was specifically iPad/iOS native apps running on the development Mac mini. That’s not quite what I’m describing here, because THOSE apps contain much less functionality compared to their native Mac OS X desktop versions running on 64-bit Intel. And CC apps are much more numerous than Lightroom and Photoshop. There’s Illustrator, InDesign, Premiere, etc., along with a multitude of other apps that need to be rewritten and recompiled by the time desktop versions of the A12Z or A14 (whatever) reach the market. I guarantee Creative Cloud will still be in process while MS and other makers of desktop apps have already done the work, the heavy lifting, and rewritten their apps for Swift/Metal on ARM64 rather than Objective-C on Intel/AMD64.

They already demonstrated a native version of the creative cloud in the keynote.

Here’s an interesting article which, I dare say, proves how FAR Photoshop for iPad is from the desktop version: https://appleinsider.com/articles/20/07/27/photoshop-for-ipad-comes-inches-closer-to-the-desktop-version . Adobe cannot expect to “inch” closer to the desktop version of Photoshop. They need literal leaps and bounds to achieve parity in time for the first release of an iMac running the A14 CPU and Big Sur. You can say anything you like, but Adobe is not there yet.

It will probably be a lot easier to prevent Hackintoshes this way. Why anyone here would buy an Apple appliance instead of a general purpose computing device is beyond me.

It will still be BSD; you will still be able to pop a shell window, compile programs, etc. just like on any other Unix workstation, so what ARE you talking about???

Lol, have you ever tried using current MacOS? It’s super locked down nowadays. You can’t even run GDB without disabling ‘system integrity protection’ (SIP), which is a core part of how MacOS secures itself from viruses.

Apple also keeps making it harder to install third party applications.

Given what a debugger does that sounds completely reasonable.

It’s not BSD and never has been. It’s Mach with pieces of BSD pasted in but that’s beside the point.

And I suppose you’re not wrong that you CAN write and execute your own code on a Mac as easily as on any other computer. But usually people go for a whole platform, don’t they? And just look at iOS. I don’t really have to explain why those devices are useless for hacking on, do I? Completely locked-down software installation process, no Bluetooth serial profile, no headphone jack. It’s just an appliance.

I seem to remember there being talk of locking OSX down just the same. Wouldn’t this be the perfect time to do it? Maybe that would be too much bad publicity for them but I doubt hackers and makers are their main target audience. They just might do it.

Besides all that, as a non-Mac user who has been gifted a few iOS devices, I’ve looked into just what it would take to run any of my own code on them. Apple is constantly obsoleting old versions of Xcode with its iOS updates and old versions of OSX with its Xcode updates, and it requires very recent hardware to run any newer OSX. Apple makes sure that nobody is getting any sort of good deal on a used Mac to build anything they might want to run on an iPhone or iPad. Thus the importance of Hackintosh.

But ARM is just a base specification. Pretty much every ARM chip has its own custom additions. No doubt Apple will make sure OSX does not run without special proprietary stuff they will cram into their ARM chips. I doubt there will ever be an ARM Hackintosh, except maybe one built from the floor scraps in one of their own factories.

It’s worth making another prediction based on this move by Apple: the whole personal computer industry will likely move to ARM (and perhaps eventually RISCv) in due course, and Microsoft will endorse this.

The reasoning is the same as for Apple: both silicon manufacturers and computer manufacturers would like to get away from Intel x86 / x64 dependency. All of us would also like to get away from all the legacy baggage that comes with the architecture. Microsoft tried with Windows CE-based systems and has a full Windows 10 that runs on ARM. Google supports ARM-based Chromebooks; it’s a natural companion to ARM-based tablets and phones.

The advantage ARM provides is greater scalability (from low-end MCUs to 64-bit multicore servers) and consequently product differentiation. The end of Moore’s law means, finally, that Intel’s huge engineering leverage won’t be enough to keep it ahead.

Welcome to the new computing era.

http://www.linuxmadesimple.info/2019/08/all-chromebooks-with-arm-processors-in.html

Every time the threat of ARM is brought up, I ask: where can I get an ARM computer on which I can install Windows 10, Windows Server, any desired Linux distro, FreeBSD or OpenBSD, or any software based on these OSes? If such an ARM platform does exist, can it also handle getting OS updates straight from the OS maintainers? Can it also support booting from multiple targets, like a combination of separate drives and network devices?

If such a platform does exist, can it also support third-party hardware like GPUs, storage controllers, network devices, etc.?

ARM is an ISA, the PC is a platform. While there have been proposals to create a platform utilizing the ARM ISA to compete with the PC, they never seem to reach enough adoption to really challenge the PC.

I’ve been watching this struggle for a decade and I’m not sure it will ever happen, I just don’t see any reason for companies to create a general ARM based platform targeted at the desktop.

The Pi 4 is the closest I’ve seen. Perhaps it’s fitting that it’s the board’s popularity that has helped it, which is a bit like how the PC came to be.

You’re looking for a board that supports SBSA, which is the ARM equivalent of a “stuff just works” x86 PC environment. I can’t think of any really cheap low-end ones, but the HoneyComb LX2K supports it, is intended for use as a workstation and costs 750 USD.

I’d rather just get away from Intel, Nvidia and Microsoft taking every opportunity to be a dick to consumers.

There is AMD/ATI and *nix for that, but the former has a tendency for nasty run-ins with a cursed monkey paw up until very recently (and during Intel’s P4 era), and the latter has the most popular variation (Linux) plagued with the Microsoft way of thinking with SystemD.

Windows NT ran on MIPS, Alpha, PowerPC and Itanium. They tried it before, and what killed it was the lack of third party support.

I actually ran it on a MIPS Magnum, and the performance was excellent. It just wasn’t enough to justify the 4-5x hardware price differential.

Interestingly, I’ve now found out Jean-Louis Gassée thinks exactly the same!

https://9to5mac.com/2020/07/13/switch-to-arm/

He even goes a step beyond and claims Intel will start making ARM cpus again!

Don’t forget that NeXT Computer, where Mac OS X came from, already had multi-platform binaries for NeXTSTEP and later OpenStep, so the knowledge was already in-house after the merger of NeXT and Apple in 1997.

Apple had support for fat binaries right from the transition to PPC, which preceded that by a few years. It’s fairly trivial to implement (especially given the dual-stream nature of mac files) so not something they would have had to acquire.

It’s also worth bearing in mind that the ARM processor started out as a desktop architecture about 8 years before Apple licensed it as an embedded device for the Newton. So, it’s not merely that Apple has turned full circle to switch from CISC to RISC (again), but that ARM has gone full circle back to being used as a mainstream desktop computer.

Personally, I can’t wait (though of course, I have to):

https://en.wikipedia.org/wiki/Acorn_Archimedes

It must be said of course, that ARM CPUs have rarely not been available as ‘desktop’ computers:

https://en.wikipedia.org/wiki/A9home#:~:text=The%20A9home%20was%20a%20niche,bit%20version%20of%20RISC%20OS.

And of course, the Raspberry-PI is a ‘desktop’ (albeit super-tiny desktop) device:

https://en.wikipedia.org/wiki/Raspberry_Pi

-cheers from Julz

I for one welcome our Apple Silicon overlords. Windows on ARM has relied on Qualcomm so far, and while I don’t have pricing data, their top-end chips seem to be expensive for the performance they offer. The reports of Apple’s A12Z dev kit beating a Surface Pro X in Geekbench, when one is running a translated x86 binary and the other is running native ARM code, are just mind-boggling. A little competition in the ARM space would be welcome to bring prices down and pump performance up.

Just a tidbit. I worked at Signetics back in the 1980’s. There was a story that Steve Wozniak and Jobs sought samples of the 2650 micro, but they were perceived by marketing as a couple of college students looking for freebies and were denied.

Classic misstep, if true!

That sounds a bit garbled. I can certainly imagine Steve Wozniak trying to get a sample, but the 6502 was out in 1975, and I didn’t hear about the Signetics CPU until later. It was seen as quirky, so I can’t see the appeal. Go for cheap, or go for standard, i.e. the 8080. There’s a reason I can’t name a 2650-based computer, though there were construction articles and a related kit.

Don’t forget the 65C816 in the Apple IIGS.

And I thought “fat binaries” dated from the PowerPC days? I vaguely remember doing something to strip off the unneeded code when I was running a 68000 Mac.

That’s because it was for PowerPCs. 68k apps had their code in the resource fork, and PPC code used the data fork.

I bought a //gs. I assert that every one of them sold was an act of fraud. Apple had absolutely no intention of properly supporting GS/OS since the Mac was already out.

The 65C816 was more like the 68000->68020 transition, which on Mac was mostly about going from the original 24-bit addressing (with flag bits buried in Memory Manager handles) to 32-bit-clean, and getting Color Quickdraw.

Also for both PPC and Intel, Apple supported first the 32-bit then the 64-bit versions of those, to the point where the 32-bit x86 is no longer supported. The ARM transition starts out at 64-bit on Mac, but they already went through the 32->64 transition with iOS. So you can easily double the count of architectures that Apple has supported.

And in my useless opinion, the IIgs should have been more like the LC + Apple II board, but in a single set of ASICs, and one or two slots for legacy Apple II hardware. Instead, both products became dead ends. And the Ensoniq sound chip may have been really cool back in the day, but it was basically just a really well-made wavetable synth, which is why it doesn’t have the nostalgia of classic chips like SID and POKEY.

The first Macs were 16-bit, not 32. When the processors went 32 there were issues because the internals had some 20-bit stuff. Specifically, I think it was in the RAM address bus, but only 16 bits were used externally to actually address RAM. Devs would use these extra bits for things because devs are clever, and if the bits aren’t mapped to RAM chip addresses then they don’t change the location being addressed. No harm no foul, until a 32-bit chip tries to run your app and it does try to address a different location, and then you have a bad day. “32-bit clean” was used in software marketing to show that the software didn’t have this bug.

It might have been a 20-bit data bus, I don’t remember.

The 68000 had a 24 bit address bus, 16 bit data bus, and 32 bit registers. So the top 8 bits of addresses would be unused, and the original system (and third party developers at the time) used them for flags.

That’s not quite true. The original Mac used a 68000 processor which Motorola termed a 16/32 CPU, meaning: it had a 16-bit data bus, but a 32-bit architecture.

Conventionally, a CPU is defined by its ALU architecture. So, a 68000 is a 32-bit CPU, because it has a full set of 32-bit operations.

The 68000 CPU compromised its 32-bit implementation by having a 16-bit data bus. This meant that it took two bus cycles to transfer 32-bit data values. In addition, the original 68000 had a 24-bit address bus, which meant it could only address 16 MB of data (or code). The address registers, including the Program Counter, inside the 68000 were still 32 bits, but the 68000 just ignored the top 8 bits when outputting the address.

The Macintosh operating system (System 1 to System 6) used the ‘ignored’ address bits to implement addressing flags. This was a major goof and why it took a lot of work, just over 4 years from when the Mac II came out to when System 7 was released in 1991, to update the OS to properly remove those addressing flags – and even then developers had to do the same.

https://www.folklore.org/StoryView.py?project=Macintosh&story=Mea_Culpa.txt
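The problem described in the comments above can be sketched in a few lines. This is a minimal illustration, assuming flag bits in the top byte of a 32-bit pointer modeled on the early Memory Manager’s master pointers (treat the exact bit assignments as illustrative); `bus_address` and `strip_flags` are hypothetical helpers, with `strip_flags` playing roughly the role that the Toolbox’s `StripAddress` routine served for 32-bit-clean code.

```python
# Flag bits stashed in the "unused" top byte of a 32-bit pointer,
# modeled on early Mac Memory Manager master pointers (illustrative layout).
LOCKED = 1 << 31
PURGEABLE = 1 << 30
RESOURCE = 1 << 29

ADDRESS_MASK_24 = 0x00FFFFFF


def bus_address(pointer, address_bits):
    """The address the CPU actually drives onto the bus.
    A 68000 outputs only the low 24 bits; a 68020 in 32-bit mode uses all 32."""
    return pointer & ((1 << address_bits) - 1)


def strip_flags(pointer):
    """What 32-bit-clean code must do before using such a pointer as an address."""
    return pointer & ADDRESS_MASK_24


block = 0x0001F400                 # where the data really lives
master_pointer = block | LOCKED    # the OS marks the block as locked in the top byte

if __name__ == "__main__":
    print(hex(bus_address(master_pointer, 24)))               # same location as block
    print(hex(bus_address(master_pointer, 32)))               # 2 GB away: the "bad day"
    print(hex(bus_address(strip_flags(master_pointer), 32)))  # fixed by masking first
```

On the 24-bit bus the flag bits simply fall off, so the trick works; with 32-bit addressing the same pointer refers to a location 2 GB away, which is why every piece of code that touched those flags had to be made 32-bit clean.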

It has got to be a huge expense for them to change to a new platform. Seems like there will be a lot of heavy lifting, besides going to a closed platform (all built in-house). They have the cash though, so it will happen.

Personally, I don’t see the draw of an expensive Apple desktop regardless of what CPU is in it, but that’s just me. Don’t get me wrong, I’d pay IF, and only IF, Apple gave me an overwhelming reason to do so…. That said, my RPIs run on ARM, and my desktops/laptops on x86_64. OS is Linux, same on both. On my desktops, I get to install motherboards, change CPUs, pick my own storage devices, etc. I’ve an X470 motherboard that I’ve upgraded from an AMD 1600, 2600 and 3600 to the current 3900X (I wonder whether an ARM platform would offer the same level of flexibility). I mean, why on Earth would a person want an Apple when you could have the ultimate flexibility of a generic PC or build it yourself? Then there is the UI. I can pick and choose what fits me, not be shoehorned into just one. Seems like a no-brainer. If I want to write a ‘C’ application, no problem (gcc or clang). Or Pascal, or Fortran, Python, Cobol, or even the new kid on the block, Rust… Debug with gdb if needed. LibreOffice runs across the board, etc… Apple just sounds too ‘restrictive’ for a PC platform. BTW, I did look into developing apps on the iPhone, but there were quite a few hoops to jump through, plus registering with Apple…. Didn’t have to do that with Android (at the time I was exploring this anyway). Bottom line, I just don’t get the Apple draw :) . Everything I read just pushes me further away.

“Bottom line, I just don’t get the Apple draw :) . Everything I read just pushes me further away.”

Read Steve Jobs’s biography. Agree or disagree, he was never shy about what his platform was all about.

I agree, a Mac is a product, not a hobby or a project. It’s designed for people who don’t care whether it’s x86 or ARM or something else. They buy a Mac because they want to be able to write a paper and get on the internet with a full keyboard, and maybe run Photoshop or something. Oh, and iTunes, and it needs to play movies on the plane.

But what keeps me buying Apple products is: 1) the design: everything (that a normal person would want to do) is easy; 2) it lasts a long time: my MacBook from 2008 still works fine; 3) no Windows. Whether it’s Mac OS or Linux or something else, anything’s better than that.

I see what you are getting at. Like a wristwatch, or a door, or even a cellphone: you just use it, and in time you throw it away. That part makes sense, I guess. It just isn’t for me. I like to upgrade parts now and then, like a new video card. I remember when I switched my home network to Gigabit Ethernet. I had to buy a PCI card because the motherboards only supported 10/100, but I didn’t have to buy all new computers.

Insignificant from the market perspective.

“No wireless. Less space than a Nomad. Lame.”

Time will tell. Just because Apple’s share of the desktop market is small doesn’t mean it can’t start a trend (I agree with the comment above that PCs might leave Intel behind).

“Microsoft mostly tied its fortunes to Intel’s x86 architecture”

…and 6502 (BASIC), Z80 (MSX), and ARM, MIPS, Alpha and PowerPC (CE / NT / Xbox)…

ARM (Advanced RISC Machines Ltd) was founded in November 1990 as a joint venture between VLSI, Acorn and Apple.

So Apple is not really changing architectures, just dropping an external supplier in order to use its own architecture.

Indeed, but the mass market preferred Intel. Windows 2000 (aka NT 5.0) was Microsoft’s first real OS to be available exclusively for the x86 architecture. Obviously, I am not counting anything based on MS-DOS as a *real* OS.

Note that the non-x86 versions (which you invariably possess if you possess an original Windows NT installation CD) included an x86 emulator, so you could run the usual software even if it was not compiled to native code for your non-x86 CPU.

I’d love to see a citation for kernel DBT (dynamic binary translation) being considered performant. I suspect the reason the Mac exokernel ran so fast was that PowerPC was so fast comparatively. Removing the user-to-kernel transition is often an optimisation, even before you get to the security benefits!

“Apple briefly flirting with the idea of making its own RISC CPU, to the point where they bought a Cray-1 super computer to assist in the design efforts.”

Sorry, no.

Firstly, the Cray-1 was released in 1975 and was largely supplanted by the Cray X-MP in 1982. The period you’re describing happened between 1988 and 1991 or so, with the first Power Mac being released in 1994. The Cray-1 was utterly obsolete by then. This period corresponded to the tail of the X-MP era and the start of the Y-MP range. This article notes they owned (possibly leased) several Crays in the 1980s and 1990s:

https://wiki.c2.com/?AppleCrayComputer

I’m a little dubious about the lack of sources in this, although I personally remember visiting Cray HQ in 1991 and being told they’d recently purchased a Y-MP.

Secondly, the Cray Research Incorporated PVMs (Parallel Vector Machines, i.e. the Cray-1 through the Y-MP) were really optimized for number crunching (nuke codes, weather sims, computational fluid dynamics) and bit bashing (crypto codes). Although they had a C compiler, it wasn’t great at vectorizing loops, so it was their FORTRAN compiler that got decent performance. I do recall there was a Cray port of FORTRAN SPICE, as that’s the only tool I can really think of that would be useful. Again, I can find no documented evidence to support this use, just repetition of rumors.

However, I actually know what Apple mostly used it for: Moldflow. This software is used for simulating plastic injection moldings to make the cases Apple used, which is a CFD task and perfect for the Crays. Moldflow was an Australian company, which was acquired by Autodesk in 2008.

BTW, Moldflow owned a Cray Y-MP/EL92 system in the 1990s, and when it was decommissioned, I saved the CPU and memory boards from the dumpster. They’re sitting right next to my filing cabinet as I write this.