FPGAs are somewhat like the IPv6 of integrated circuits: they’ve been around longer than you might think, they let you do awesome things that intrigue people at first, but they never really broke out of their niches until rather recently. There’s still a bit of myth and mystery surrounding them, and as with any technology that has grown vastly in complexity over the years, it’s sometimes best to go back to its very beginning in order to understand it. And who’d be better at taking an extra close look at a chip than [Ken Shirriff]? In his latest endeavor, he reverse engineered the world’s very first FPGA: the Xilinx XC2064.

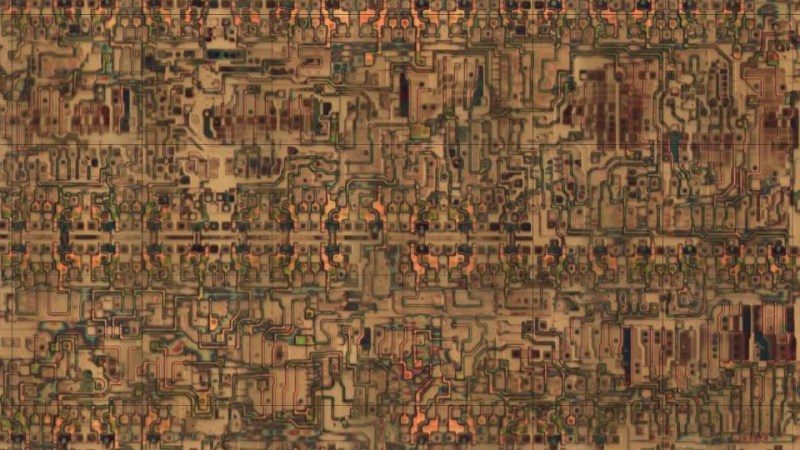

If you ever wished for a breadboard-friendly FPGA, the XC2064 can scratch that itch, although with its modest 64 configurable logic blocks, there isn’t all that much else it can do, certainly not compared to even the smallest and cheapest of its modern successors. And that’s the beauty of this chip as a reverse engineering target: there’s nothing but the core essence of an FPGA. After introducing the general concepts of FPGAs, [Ken] (who isn’t known to be shy about decapping a chip to look inside) continued in his usual manner, using die photos to map the internal components’ schematics to the actual silicon and make sense of it all. His ultimate goal: to fully understand and dissect the XC2064’s bitstream.
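To make the bitstream idea a bit more concrete: in a LUT-based FPGA, a logic block computes its output by looking it up in a small table, and the table’s contents are configuration bits loaded from the bitstream. The Python sketch below models a generic k-input look-up table under that assumption; it is an illustration of the general principle, not the XC2064’s actual CLB circuit, which [Ken]’s write-up covers in detail. The helpers `make_lut` and `bits_for` are hypothetical names made up for this example.

```python
from itertools import product

def make_lut(config_bits):
    """Build a k-input look-up table from 2**k configuration bits.

    Entry i of config_bits is the output when the inputs, read as a
    binary number with the first input as the most significant bit, equal i.
    """
    k = (len(config_bits) - 1).bit_length()
    if len(config_bits) != 2 ** k:
        raise ValueError("LUT size must be a power of two")

    def lut(*inputs):
        if len(inputs) != k:
            raise ValueError(f"expected {k} inputs")
        index = 0
        for bit in inputs:  # pack the input bits into a table index
            index = (index << 1) | (bit & 1)
        return config_bits[index]

    return lut

def bits_for(func, k):
    """'Compile' a Boolean function into LUT bits by enumerating its truth table."""
    return [func(*inputs) & 1 for inputs in product((0, 1), repeat=k)]

# Example: a 4-input function, (a AND b) XOR (c OR d)
f = lambda a, b, c, d: (a & b) ^ (c | d)
config = bits_for(f, 4)
lut = make_lut(config)
assert all(lut(*x) == f(*x) for x in product((0, 1), repeat=4))
print(config)  # [0, 1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0]
```

The point of the design is that the hardware never changes: any 4-input function fits in the same 16 bits, so "reprogramming" the logic is just rewriting the table.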

Of course, reverse engineering FPGA bitstreams isn’t new, and there’s little doubt that a toolchain built on such results helped put Lattice on the map in the maker community (which Lattice didn’t seem to value at first, but came around to soon enough). We probably won’t see the same happen for Xilinx, but who knows what [Ken]’s up to next, and what others will make of this.

Wish the FPGA tools weren’t such utter crap. The joy of getting your first bitmap out of DDR RAM and onto a VGA monitor doesn’t even begin to compensate for the pain of eternally waiting for the tools to do the simplest tasks.

The big vendors’ software is crap and bloated. Luckily, Project IceStorm produced some lean tools.

An all-in-one open source toolchain is apio (which bundles all the tools): https://github.com/FPGAwars/apio

There is a GUI for it: https://github.com/FPGAwars/apio-ide

Apio supports iCE40 and Xilinx ECP5 out of the box (and perhaps more).

Lattice ECP5, not Xilinx. Xilinx support is still WIP/beta.

Also, QuickLogic has embraced the open source toolchain concept and is now actively supporting SymbiFlow.

QuickLogic is fully on board with supporting and contributing to FOSS FPGA tools. This is just a start, and it will continue to get better over time.

You can see all the docs, tools and dev kit here: https://www.quicklogic.com/qorc/

Some of that may just be that the tools are trying too hard, attempting to work out optimal layouts, which is computationally unscalable. When that much raw CPU work is going on, putting effort into slimming down the rest of the tools may be seen as pointless.

Hmmm maybe you should finally ditch your old 486 Windows 95 system.

I can compile a complete PDP-8e CPU and system (memory, floppy disk interface, UARTs) written in Verilog in about 90 seconds on my Windows 10 Intel Xeon system using the Altera/Intel Quartus II toolset, download the image to the FPGA (Cyclone IV) in about a minute, and have a FOCAL-8 prompt pop up in my terminal window.

Point is, that’s still pretty slow, innit. Even on your kickass machine.

The iteration cycle times for HDL development are still pretty terrible (the “build”, “deploy”, “test”, repeat), compared to other modern software dev environments, where you can get the builds done in seconds, not minutes.

And don’t even get me started on the apparent lack of any free, sensible IDEs (or other productive tooling) for HDLs in general :/

I think the problem is comparing it to software development, which it absolutely is not. With compilers for software there are very clear ways to convert from the high level code to machine code, and for some languages like C it is more like just translating between the languages.

Compare that with synthesising an HDL, where the tool first has to examine what you’ve written and create an implementation of it as a hardware circuit, potentially using any hard features of the FPGA such as hard peripherals, DSP slices or block RAM.

Then it needs to map that circuit, including any of those specific features, onto physical parts of the FPGA in the best possible layout.

Then it needs to convert the result into a bitstream to program the FPGA with.
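The layout (placement) step is where a lot of the compute goes. As a toy illustration, and explicitly not how any vendor tool works, the Python sketch below places a handful of logic blocks on a grid by brute force, minimizing the total Manhattan wire length of their connections. The names `wirelength` and `best_placement` are hypothetical. Real place-and-route tools use heuristics such as simulated annealing or analytical placement and also have to route every wire, but even this toy shows the scaling problem: n blocks on n sites have n! candidate placements.

```python
from itertools import permutations
from math import factorial

def wirelength(placement, nets):
    """Total Manhattan wire length; placement maps block name -> (x, y)."""
    total = 0
    for a, b in nets:
        (xa, ya), (xb, yb) = placement[a], placement[b]
        total += abs(xa - xb) + abs(ya - yb)
    return total

def best_placement(blocks, nets, sites):
    """Exhaustively try every assignment of blocks to distinct sites."""
    best_cost, best = None, None
    for chosen in permutations(sites, len(blocks)):
        placement = dict(zip(blocks, chosen))
        cost = wirelength(placement, nets)
        if best_cost is None or cost < best_cost:
            best_cost, best = cost, placement
    return best_cost, best

blocks = ["A", "B", "C", "D"]
nets = [("A", "B"), ("B", "C"), ("C", "D")]            # a simple chain
sites = [(x, y) for x in range(2) for y in range(2)]   # a 2x2 grid
cost, placement = best_placement(blocks, nets, sites)
print(cost)                          # 3: every net spans one grid step
print(factorial(len(blocks)))        # 24 placements searched
print(len(str(factorial(64))))       # 90: digits in 64!, for 64 blocks
```

Four blocks take 24 tries; 64 blocks, the CLB count of the XC2064, would take more than 10^89, which is why real tools settle for heuristics and still take a while on big devices.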

The big vendors’ tools are as slow as they are because their commercial customers want to get the most out of the FPGA. They don’t want to have to buy a more powerful FPGA because the tools created a design quickly but suboptimally; they’d rather use a cheaper FPGA and cram as much into it as possible, and that requires tools that take a lot longer to generate a more optimal design.

FPGAs are only just taking off in the hobbyist world, which is why you see smaller companies and open source toolchains that run faster, but whose results probably aren’t as optimized, or that target much less complicated chips.

A lot of people complain that the IDEs for HDLs are bad and very slow, but remember that they probably aren’t made for hobbyists. They are made for people who know what they are doing and understand why the tools are the way they are. For example, instead of synthesising on your PC, Xilinx Vivado lets you generate scripts to run on a server, because a very complex design could take many hours on a PC, and if you are making a design that complex, you probably work for a company with access to a compute server.

Overall, hardware design and HDLs involve a lot more complexity than writing code for a microcontroller.

> for some languages like C it is more like just translating between the languages

I’m afraid you have no idea how compilers work :)

(or maybe you do have some ideas about that, but they are all wrong ;)

Software development and hardware development are very similar in nature, but unfortunately HDL tools are stuck in the past. The current trend of CPU companies buying FPGA companies is actually a very good thing, and may finally combine both approaches into a bright new “grey area” future of reconfigurable computing :)

This guy reproduced, then enhanced, the TMS9918A Video Display Processor in an FPGA https://www.eetimes.com/creating-the-f18a-an-fpga-based-tms9918a-vdp/#

Project X-Ray is actually working on something similar to Project IceStorm, but for Xilinx parts.

Oh fun! I’ve a couple of tubes of XC2018s waiting to do something useless-ish. :)