Currently, if you want to use the Autopilot or Self-Driving modes on a Tesla vehicle you need to keep your hands on the wheel at all times. That’s because, ultimately, the human driver is still the responsible party. Tesla is adamant about the fact that functions which allow the car to steer itself within a lane, avoid obstacles, and intelligently adjust its speed to match traffic all constitute a driver assistance system. If somebody figures out how to fool the wheel sensor and take a nap while their shiny new electric car is hurtling down the freeway, they want no part of it.

So it makes sense that the company’s official line regarding the driver-facing camera in the Model 3 and Model Y is that it’s there to record what the driver was doing in the seconds leading up to an impact. As explained in the release notes of the June 2020 firmware update, Tesla owners can opt in to providing this data:

Help Tesla continue to develop safer vehicles by sharing camera data from your vehicle. This update will allow you to enable the built-in cabin camera above the rearview mirror. If enabled, Tesla will automatically capture images and a short video clip just prior to a collision or safety event to help engineers develop safety features and enhancements in the future.

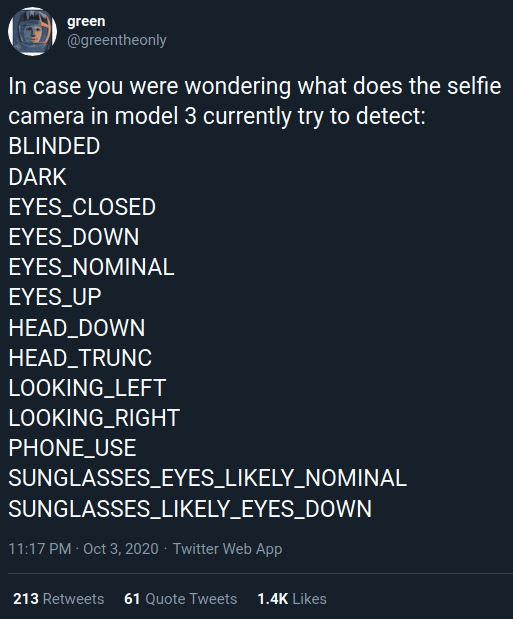

But [green], who’s spent the last several years poking and prodding at Tesla’s firmware and self-driving capabilities, recently found some compelling hints that there’s more to the story. As part of the vehicle’s image recognition system, which is usually tasked with picking up other vehicles or pedestrians, they found several interesting classes that don’t seem necessary given the official explanation of what the cabin camera is doing.

If all Tesla wanted was a few seconds of video uploaded to their offices each time one of their vehicles got into an accident, they wouldn’t need to be running image recognition configured to detect distracted drivers against it in real-time. While you could make the argument that this data would be useful to them, there would still be no reason to do it in the vehicle when it could be analyzed as part of the crash investigation. It seems far more likely that Tesla is laying the groundwork for a system that could give the vehicle another way of determining if the driver is paying attention.

Cadillac Competition

While Tesla certainly has the public’s eye and the Internet’s attention, they aren’t the only automaker experimenting with self-driving technology. General Motors offers a feature called Super Cruise on their high-end Cadillac luxury cars and SUVs that offers a number of very similar features. While Tesla’s vehicles undoubtedly know a few tricks that no Cadillac is capable of, Super Cruise does have a pretty clear advantage over the competition: hands-free driving.

To pull it off, Super Cruise uses a driver-facing camera that’s there specifically to determine where the driver is looking. If the aptly named “Driver Attention Camera” notices the operator doesn’t have their eyes on the road, it will flash a green and then red light embedded in the top of the steering wheel in the hopes of getting their attention.

If that doesn’t work, the car will then play a voice prompt telling the driver Super Cruise is going to disengage. Finally, if none of that got their attention, the car will come to a stop and contact an OnStar representative; at that point it’s assumed the driver is asleep, inebriated, or suffering some kind of medical episode.
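The escalation sequence described above can be sketched as a small lookup. This is only an illustration of the ordering given in the text: the names `Alert`, `ESCALATION`, `THRESHOLDS_S`, and `alert_for` are hypothetical, and the timing thresholds are invented placeholders, not GM's actual values.

```python
from enum import Enum


class Alert(Enum):
    NONE = "no alert"
    GREEN_FLASH = "flash green light on steering wheel"
    RED_FLASH = "flash red light on steering wheel"
    VOICE_PROMPT = "voice prompt: Super Cruise will disengage"
    STOP_AND_CALL = "stop the car and contact OnStar"


# Order of escalation as described in the article.
ESCALATION = [
    Alert.GREEN_FLASH,
    Alert.RED_FLASH,
    Alert.VOICE_PROMPT,
    Alert.STOP_AND_CALL,
]

# Hypothetical: seconds of continuous inattention before each step fires.
THRESHOLDS_S = [3.0, 6.0, 10.0, 15.0]


def alert_for(inattentive_seconds: float) -> Alert:
    """Return the strongest alert whose (assumed) threshold has been reached."""
    current = Alert.NONE
    for threshold, alert in zip(THRESHOLDS_S, ESCALATION):
        if inattentive_seconds >= threshold:
            current = alert
    return current


for seconds in (0, 4, 7, 12, 20):
    print(seconds, alert_for(seconds).value)
```

The point of the sketch is that the camera gives the system a continuous "eyes off road" signal to escalate against, something a steering wheel torque sensor alone cannot provide.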

With Super Cruise, GM has shown that a driver-facing camera is socially acceptable among customers interested in self-driving technology. More importantly, it demonstrates considerable real-world benefits. Physical steering wheel sensors offer a valuable data point, but by looking at the driver and studying their behavior, the system becomes far more reliable.

Falling Behind

Given the number of high profile cases in which users have fooled Tesla’s wheel sensors, it’s clear the company needs to step up their efforts. When police are pulling over speeding vehicles only to discover their “drivers” are sound asleep, something has obviously gone very wrong. Even if these situations are statistical anomalies in the grand scheme of things, there’s no denying the system is exploitable. For self-driving vehicles to become mainstream, automakers will need to demonstrate that they are nigh infallible; embarrassing missteps like this only serve to hold the entire industry back.

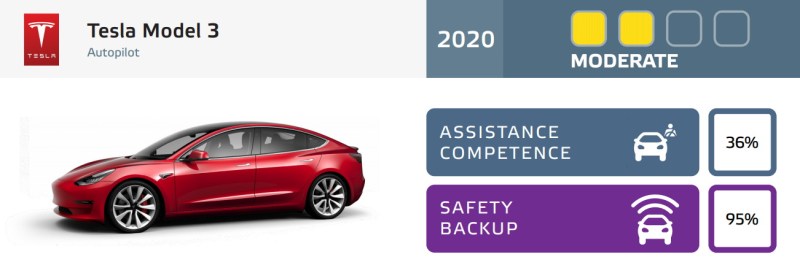

Tesla’s failure to keep their drivers engaged certainly isn’t going unnoticed. In the European New Car Assessment Programme’s recently released ratings for several vehicles equipped with driver assistance systems, the Tesla Model 3 was given just a 36% on Assistance Competence. The report explained that while the Model 3 offered an impressive array of functions, it did a poor job of working collaboratively with the human driver. It goes on to say that the situation is made worse by Tesla’s Autopilot marketing, as it fosters a belief that the vehicle can drive itself without user intervention. The fact that the Model 3 has an internal camera but isn’t currently using it to monitor the driver was also specifically mentioned as a shortcoming of the system.

While Tesla was an early pioneer in the field, traditional automakers are now stepping up their efforts to develop their own driver assistance systems. With this new competition comes increased regulatory oversight, greater media attention, and of course, ever higher customer expectations. While it seems Tesla has been reluctant to turn a camera on their own users thus far, the time will soon come where pressure from the rest of the industry means they no longer have a choice.

Information that I’m sure will find its way to insurance companies.

– Yep, or land you in much more trouble in the case of an accident, if it reports/shows anything other than you were paying 100% attention.

*Except* Tesla cars are by far the best in the world at preventing accidents *even* if you’re not paying attention. Also you are ignoring the statistical/probabilistic aspect of this … If you have an accident, it’s very likely it’s going to be the other person, and the Tesla’s (outside) cameras are going to be able to show that. It’s not like accidents are something magic that can only be figured out by looking at the eyes of the driver. If there’s an accident, somebody didn’t respect the rules. If somebody didn’t respect the rules, the outside cameras on the Tesla are going to detect that. Unless I think I’m more likely than the other guy to mess up ( say I’m a frequent drunk driver ), I’m going to turn on all the cams I can, because in case of an accident, the chances are *much* more likely it’s the other guy than me/you. Meaning what’s the most likely, by far, is that inside-facing camera will help show you did nothing wrong, because it’s extremely likely you didn’t.

From watching dashcam videos on YouTube I’ve learned that most accidents between two cars occur because someone makes a big rule break and someone else had been driving with a smaller rule break, driving too close to the car in front for example, or not reacting to an obvious hazard in front.

Which active safety measures make extremely unlikely to happen with a Tesla, yes. Tesla cars check many times a second whether the current happenings are going to cause an accident, and if they are, the car takes over and makes it so the accident is not going to happen. Therefore the only times accidents are going to happen is when a situation occurs that gives the ASM no possible method of avoidance, which must be incredibly rare ( ie “Giant rock falls off a truck right in front of your car” ). Other issues are predictable and therefore avoidable.

Except they’re not. Most accidents are caused by a relatively small number of drivers who should not be driving at all (drink, drugs, etc). The vast majority of sober drivers are much better than the mean average.

Tesla’s stats show them to be about the mean average, which means that they’re equivalent to a half drunk driver.

You compare things to the average, not to “the average, the worst of it removed”, that is not how stats are done, why not also remove the best drivers, which would put it back to the same average??

Also, drunk people do drive Teslas. No reason to exclude them from this comparison…

And how do you explain the fact that Autopilot is twice safer than using only Active Safety Measures, are ASM somehow drunk?

I’m an avid follower of self-driving car stats. Where is the data?

So far, the only public data we have is about fatalities, because those make the news. We don’t have good data on miles driven, miles driven in a given mode, etc. The data that Tesla submitted to the NHTSA, under subpoena, was incomplete and strange. Like they were saying “go ahead, sue us” and the Feds blinked.

If there is more out there, I’d like to see it.

> We don’t have good data on miles driven, miles driven in a given mode, etc.

I was just researching this for other comments here, and you can actually piece together quite a lot from presentations/articles from Tesla. They give news about how this is going, and for the past three years have at some point given the stats for miles driven on autopilot *so far* (so you need to subtract previous years), and the same thing for active safety measures.
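The subtraction described here is simple to sketch. The snippet below assumes made-up cumulative totals (they are placeholders for illustration, not Tesla's figures) and derives the miles driven between consecutive reports; `cumulative_miles` and `miles_between_reports` are hypothetical names.

```python
# Hypothetical cumulative Autopilot miles, in millions, as of each yearly report.
# These figures are invented for illustration; they are not Tesla's numbers.
cumulative_miles = {2018: 1000, 2019: 2200, 2020: 3300}


def miles_between_reports(cumulative):
    """Turn running totals into per-period totals by subtracting the previous report."""
    years = sorted(cumulative)
    return {
        later: cumulative[later] - cumulative[earlier]
        for earlier, later in zip(years, years[1:])
    }


per_year = miles_between_reports(cumulative_miles)
print(per_year)
```

The first report is left out of the result on purpose, since a running total on its own says nothing about how many of those miles were driven in that particular year.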

This means reporters can actually count the number of fatalities, and from those and the number of miles driven, can check if the numbers Tesla provides are correct or not. The fact there is no news article about how Tesla’s stats are a lie is a pretty good indicator the stats they provide are at least within the range of what’s true.

>Also, drunk people do drive Teslas. No reason to exclude them from this comparison…

The people who drive Teslas are not representative of the entire population, because of the exclusive price of a Tesla and its upkeep costs. This causes a bias for all sorts of factors such as your probability of driving drunk, or your probability of driving long commutes while tired, etc.

>You compare things to the average, not to “the average, the worst of it removed”, that is not how stats are done,

There are more than one “average” in statistics. “mean” gives the simple average over the population, “median” gives the most typical (majority) number, and “mode” gives the distribution peak number. Which average you use is determined by what answers you want to get out of the statistics and what kind of data you have. Using the simple average on data that is not a normal distribution (skewed, bi-modal, etc.) is basically fooling yourself into seeing things that aren’t true.

That is exactly what you’re doing.

>The fact there is no news article about how Tesla’s stats are a lie,

There were plenty of commentators and articles back when the data came out pointing out that the statistical power (significance) is low and the comparison is meaningless.

The only reason you can’t outright call it a lie is because you can indeed calculate these averages and numbers out of the data. The trick is that Tesla itself never said “we’re this much safer!” – they just gave out the numbers, and left the lying to all the pundits in the media. People like you, who don’t understand statistics and how to correctly apply it or what it really means, and consequently see in the data what you want to see in it.

> The people who drive Teslas are not representative of the entire population, because of the exclusive price of a Tesla and its upkeep costs.

You know, it would have taken you 30 seconds to Google this and realize it’s wrong. Not that poor people buy Tesla, but your assumption that poor people drink more is just plain wrong. And you’d know this if you looked things up instead of presuming. You presumed massive amounts of things about Tesla also in our conversations, in the same way.

If you don’t know, say you don’t know, making stuff up is not OK. Or look it up:

https://inequalitiesblog.files.wordpress.com/2011/05/bingeing_by_income_2009.jpg?w=640&h=386

Yes, Teslas are expensive and out of reach to poor people. You know what else is? Cocaine.

How about you present actual stats instead of assuming like you have always done so far.

> Using the simple average on data that is not a normal distribution (skewed, bi-modal, etc.) is basically fooling yourself into seeing things that aren’t true.

Then go ahead, do the *correct* math and show your results. I believe this is the third time I see you making claims about where the math would lead, and both times before when asked to show your math, you just ignored the request. I wonder why…

> People like you, who don’t understand statistics

I’m not going to again explain where you got the stats wrong and I got them right, at this point this is just bad faith from you.

Remind me, who drinks the most, rich people or poor people?

Remind me, you claimed removing bad drivers could get the average to reach/explain/approach the Tesla Autopilot numbers, but when asked to show your math, DID YOU?

Sorry, “mode” is the most frequent number, while median is the most typical number as defined by half of the population.
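To make the three averages concrete, here is a minimal sketch using Python's standard `statistics` module. The sample is hypothetical (ten invented per-driver accident rates with one outlier) and is only meant to show how a skewed sample pulls the mean away from the median and mode.

```python
import statistics

# Hypothetical accident rates for ten drivers (arbitrary units, invented data).
# Nine drivers are clustered at low rates; one outlier is far worse.
rates = [1, 1, 1, 2, 2, 2, 2, 3, 3, 20]

mean_rate = statistics.mean(rates)      # sum divided by count
median_rate = statistics.median(rates)  # middle value: half are at or below it
mode_rate = statistics.mode(rates)      # most frequent value

print("mean:", mean_rate)
print("median:", median_rate)
print("mode:", mode_rate)

# How many drivers are better (lower rate) than the mean?
better_than_mean = sum(1 for r in rates if r < mean_rate)
print("drivers below the mean:", better_than_mean, "of", len(rates))
```

In this made-up sample, nine of the ten drivers have a rate below the mean, which is the sense in which most drivers can be "better than average" when the distribution is skewed.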

In a case like road accidents, “mode” would return you the accident rate of the worst driver (who still dares to drive regardless), “median” would return you the most common driver, and “mean” would return you the expected total rate of the entire population.

If you place people on an axis from 0 – 100% according to their skills in driving, most people would be closer to 100% than 0%, and the accident rate would be the opposite. The peak distribution for accidents would skew towards 0% but not reach it, because people with zero or marginal skills don’t drive, or they don’t drive very far before they crash their vehicles or learn to drive better. As people drive more, they gain skill, and vice versa, moving them across the scale to the other end.

The falsehood of claiming that Tesla’s statistic show their autopilot is safer than people is that their “average” (mean) is a combination of the two distributions, and smack dab in the middle of it, which represents neither the worst drivers who cause most of the accidents yet drive the least miles, nor the majority of drivers who drive most of the miles and safely so.

By using the metric “average miles per accident”, you are in fact making the calculation as follows: (miles / person) : ( accidents / person) = miles / accidents. What you’ll notice is that the “person” term cancels out, which means the statistics they are using is not comparing the autopilot to human drivers, but to the system of traffic as a whole. That is fundamentally not the same comparison. It is a subtle difference that is often not easy to spot, and this is a very common way statistics is used to mislead people.
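The cancellation can be checked numerically. The sketch below uses two hypothetical four-driver populations with the same total miles and total accidents but very different distributions of accidents among the drivers; `miles_per_accident`, `even`, and `skewed` are illustrative names and the numbers are invented.

```python
def miles_per_accident(drivers):
    """drivers: list of (miles, accidents) tuples, one per driver."""
    n = len(drivers)
    miles_per_person = sum(m for m, _ in drivers) / n
    accidents_per_person = sum(a for _, a in drivers) / n
    # (miles / person) / (accidents / person): the per-person term cancels.
    return miles_per_person / accidents_per_person


# Hypothetical population A: every driver has exactly one accident.
even = [(10_000, 1)] * 4

# Hypothetical population B: three drivers have none, one driver has all four.
skewed = [(10_000, 0)] * 3 + [(10_000, 4)]

print(miles_per_accident(even))
print(miles_per_accident(skewed))
```

Both populations produce the same figure, so the metric on its own cannot distinguish "everyone is equally risky" from "a few drivers cause nearly all the accidents".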

> By using the metric “average miles per accident”, you are in fact making the calculation as follows: (miles / person) : ( accidents / person) = miles / accidents.

It’s not miles/accident they are showing, but miles/accident/person

>Remind me, who drinks the most, rich people or poor people?

Irrelevant comparison again. It’s not how much you drink, but whether you drink and drive. Lower income groups fall guilty of most DUI convictions – rich people take a taxi.

>It’s not miles/accident they are showing, but miles/accident/person

Same thing essentially. No statistics about the person are used, the numbers are simply normalized to scale it down to “per driver”.

>you claimed removing bad drivers could get the average to reach/explain/approach the Tesla Autopilot numbers, but when asked to show your math, DID YOU?

There is not enough data to make the calculation, because the Tesla data is apples to oranges. It would be meaningless to calculate it, since it’s still an irrelevant comparison.

I did however show you exactly how the principle works, of how using the average (mean) driver as a metric of merit causes multiple times the risk for most drivers while making the autopilot look good by eliminating the worst drivers. I also gave you a reference to a scientific paper where they studied the distribution of accidents and found that between 70-90% of drivers in the sample sets were in fact “better than average drivers”, which is roughly the same as the 80-20 Pareto distribution that I used in my example.

>How about you present actual stats instead of assuming like you have always done so far.

Scroll down more. The link to the paper with the driver accident rate distribution is under the other thread. This comment board doesn’t really work, especially when you start multiple branches of the conversation where you spam the same opinion over and over.

At this point you’re just spamming one irrelevant comparison after another, like equating the rate of drinking to the rate of drunk-driving, which is not the same thing. You’re not addressing my arguments at all, you’re just punching the air around – shadow boxing. If this is the way you genuinely think, it’s no wonder you believe all the things Elon Musk says.

> Lower income groups fall guilty of most DUI convictions – rich people take a taxi.

Of course that can’t have *anything* to do with the extremely well documented bias of the justice system against lower income classes. It’s definitely not the only factor, but simply hiring a better lawyer, and presenting better in court, and having more free time to prepare for it, are going to influence the outcome.

How about you present *actual stats* to show DUIs are done more by poor people ( and not talking about convictions, I’m talking about direct measure of DUIs versus means )? Do you realize I’m the one that keeps giving links/data, and you’re the one that keeps making half-assed excuses to try to ignore those?

Repetition is cheap though.

A simple example: assume 80-20 Pareto distribution. That means the average relative risk to 80% of the population is 25% of the mean risk, and 400% for the remaining 20% of the population. In other words, one in five is a “bad driver” who risks four times the average, and 4/5 are “good drivers” who on average have four times fewer accidents than the average.

Now, when you prevent the bad drivers from having accidents any more than the good drivers by evenly distributing the risk (everybody is using autopilot), it looks like the average just got four times better, but in reality this is just what the majority were already doing.

And this is somewhat close to what’s happening in the real world. The distribution is not that clean cut between the groups, and the causes of accidents are not 100% up to the driver, so the difference in risk is a bit flatter, but it’s about right to show the principle or “the math” of it.
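The arithmetic of this 80-20 example can be written out as follows. It is a sketch of the idealized split described above, not a model of real accident data; the variable names are illustrative, and exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction

# Idealized 80-20 split from the example: 80% of drivers cause 20% of
# accidents, and the remaining 20% of drivers cause 80% of accidents.
good_share_of_drivers = Fraction(80, 100)
good_share_of_accidents = Fraction(20, 100)
bad_share_of_drivers = Fraction(20, 100)
bad_share_of_accidents = Fraction(80, 100)

# Risk relative to the population mean (mean risk = 1).
good_risk = good_share_of_accidents / good_share_of_drivers  # 1/4 of the mean
bad_risk = bad_share_of_accidents / bad_share_of_drivers     # 4 times the mean

# Sanity check: the population-weighted risk is the mean itself.
mean_risk = good_share_of_drivers * good_risk + bad_share_of_drivers * bad_risk

# If every driver ends up at the "good driver" risk level, the new mean
# equals good_risk, so the headline average improves by this factor:
headline_improvement = mean_risk / good_risk

# ...while the change experienced by the 80% majority is:
majority_improvement = good_risk / good_risk

print("good driver risk:", good_risk)
print("bad driver risk:", bad_risk)
print("headline improvement:", headline_improvement)
print("improvement for the majority:", majority_improvement)
```

Under these assumptions the average looks four times better even though four out of five drivers are no safer than before.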

>> It’s not miles/accident they are showing, but miles/accident/person

>Same thing essentially.

No it’s not. Your math doesn’t work in this case, and works in the other, that’s quite the difference to ignore. Go ahead, show the equation that ends in miles/accident/person, and show us where things cancel out?

> There is not enough data to make the calculation, because the Tesla data is apples to oranges. It would be meaningless to calculate it, since it’s still an irrelevant comparison.

If you can’t do the math, you shouldn’t be reaching conclusions. If you’re reaching conclusions, you should be showing your math. This isn’t how science works, at all.

I’m not saying you’re wrong this is a factor, but I’m *extremely skeptical* it’s a factor strong enough to explain the Tesla numbers. You’re not going to show you’re correct without math. If you can’t present math ( be it because of incompetence *or* because of lack of data ), then you shouldn’t be reaching conclusions. If you don’t know, say “I don’t know”, making shit up isn’t a correct way of doing things.

> I did however show you exactly how the principle works

I already agreed the principle is sound, this is a straw-man. You keep answering as if I had asked “show me the principle is sound”, when my question really is “show me the principle reaches factors large enough to explain the Tesla numbers”. Answering a different question and ignoring the actual one is the very definition of a straw-man fallacy.

> Scroll down more. The link to the paper with the driver accident rate distribution is under the other thread.

That paper doesn’t explain away the Tesla numbers, at best it shows it could explain *part* of it, but you have done absolutely nothing to show it’s *enough* to explain Tesla’s numbers.

> And this is somewhat close to what’s happening in the real world. The distribution is not that clean cut between the groups, and the causes of accidents are not 100% up to the driver, so the difference in risk is a bit flatter, but it’s about right to show the principle or “the math” of it.

Still not doing anything to *actually* show this is *enough* to explain the Tesla numbers.

>Of course that can’t have *anything* to do with the extremely well documented bias of the justice system against lower income classes.

Of course it does have SOMETHING to do with it, but to claim it explains the difference entirely, or even a significant part is making up some absolute bull****

Unfortunately direct statistics about income group and DUI are hard to come by (it’s not PC to group people like that), if they exist, but we can use proxies. For example, the most common age group to drink and drive is 20-24 year old (NHTSA). The average age of a Tesla owner is 54 years old. The two don’t exactly match.

https://hedgescompany.com/blog/2018/11/tesla-owner-demographics/

> Unfortunately direct statistics about income group and DUI are hard to come by (it’s not PC to group people like that), if they exist, but we can use proxies.

No, we can’t. If we do, we’ll go into a rabbit hole of objections to objections to objections, EACH without an *actual measure* of impact. This is *completely* pointless. And that’s pretty much the issue with your entire complaint against the Tesla numbers, you don’t have *measures* of *how much* your objections explain the Tesla numbers.

If you don’t know, you should say “I don’t know”, not make shit up.

That’s the honest thing to do.

> this is *enough* to explain the Tesla numbers.

It doesn’t need to, because the Tesla numbers aren’t making the same comparison. The Tesla numbers are bogus, apples to oranges, and essentially arbitrary. The calculation would be meaningless, like explaining the number of frogs in France by counting cherries in Greece.

What I’m showing you is that even IF Tesla were to make an honest comparison, they would need to show many times greater average safety records just to get to par with the majority of drivers. Notice that these are two different complaints. 1) Tesla is using irrelevant data, 2) Showing higher miles per accident on average doesn’t directly prove it’s safer than people.

>No, we can’t. If we do, we’ll go into a rabbit hole of objections to objections

Yes we can, and I just did. What objection do you have with showing that the group of people who are most guilty of DUI does not really overlap with the group of people who own Teslas? It’s making the same point either way.

>you don’t have *measures* of *how much* your objections explain the Tesla numbers.

Most of those numbers are impossible to come by, for the major part that the data doesn’t exist, which by the same point means that Tesla doesn’t have that information either and cannot validate the claims of safety. They aren’t even trying.

What you’re simply trying to pull off is the continuum fallacy, by suggesting that because nobody can put an exact number on it means the opposite must be true.

What we do know is that the Tesla data is so sparse and so badly influenced by so many different biases that it cannot show what you’re claiming it to show. Everything from the socio-economic status of the owners, to where these cars are driven, to how the autopilot works or doesn’t work, to how the statistics work, it’s just not a valid comparison. If someone misses the barn door entirely and the bullet disappears in the grass, it’s irrelevant to ask “by how much?”.

> they would need to show many times greater average safety records

You mean like 9 times? And it’s increasing, expected to gain an order of magnitude in the coming few years with the complete rewrite of the system they just did, which is totally not what you’d expect to be happening if your argument was correct.

The future is going to hit your argument pretty hard I expect, but we’ll see if it does. Thankfully this isn’t Reddit, they don’t close comments after 6 months, so I’ll be back in a year :)

*You* would need to show your explanation explains the Tesla numbers, and it simply doesn’t. You are not capable of providing a quantification of it, and as such, you can’t claim it explains how high the Tesla data is. You just keep pointlessly repeating the same argument, without actually linking it to data/evidence.

You keep saying things like «many times», but are completely incapable of saying how much is «many». It’s crazy that with such a blatant flaw in your argument, you don’t stop and just keep repeating it in an obvious attempt at a strawman.

Reasoning without any link to reality is mental masturbation.

> What objection do you have with showing that the group of people who are most guilty of DUI does not really overlap with the group of people who own Teslas? It’s making the same point either way.

Sure, people are their age, and that’s it. No other factors matter or influence this. You are so full of shit.

This is all pointless, because you can’t actually *quantify* how strong the effect you are complaining about is, and you definitely have not done a sincere effort to take into account *enough* factors for this to be honest reasoning.

To be able to make your argument correctly, you’d need an actual peer-reviewed scientific paper demonstrating this, with all the care and attention to detail, and data, that a scientific paper has, and you don’t have it, all you have is comments and no sufficient data. And so, you make shit up. This is tiring.

> Most of those numbers are impossible to come by, for the major part that the data doesn’t exist,

And so, you making claims is pretty much you making shit up. Glad we finally got there. If you don’t have data, you don’t make claims, you say «I don’t know».

> which by the same point means that Tesla doesn’t have that information either and cannot validate the claims of safety. They aren’t even trying.

That’s not what their data is about. They aren’t *claiming* their data takes all these factors into account (it couldn’t, not without *a lot* of work), it’s just showing the massive difference that can be observed.

You however, are making a claim you can explain away this data, and have completely failed at doing so. Just giving factors that *could* influence this negatively, without quantifying them AT ALL, and ignoring any other factors (including possibly factors that would influence this positively), is just plain dishonest.

You *do not know* if your explanation explains away Tesla’s data, so stop acting as if you knew it did. I don’t know if it does, you don’t know if it does. Until it’s proven it’s true, I’m withholding belief, and going with the best data we have so far, which is Tesla’s, all caveats included.

> If someone misses the barn door entirely and the bullet disappears in the grass,

Of course, lacking data here, you have in fact not shown that the Tesla bullet disappears in the average driver grass. You’re just asking us to believe you at your word, and I’m not going to until you provide data, that you don’t have. Just because it seems obvious to you, for some reason, doesn’t mean it is. Not providing data but believing anyway is pretty much the recipe for being wrong about these sorts of things.

Those files will be taken in the discovery phase.

Unless you ask that no files be stored, which is apparently an option.

One problem is that the “self driving” feature has a somewhat unreasonable expectation – “The car will drive itself and should operate with no human attention, but the system isn’t good enough to actually work without a human checking it to make sure it’s not doing something insanely stupid.” Not an unreasonable thing to ask a company employee performing safety testing and debugging on your Autopilot system. But a customer is going to see the whole point of a self driving system as freeing the driver to not pay attention to what the vehicle is doing. It’s not like cruise control where there is still a clear expectation that the driver needs to be doing something. Average drivers will not see their role as beta testing Autopilot and checking it for circumstances where it can fail, and I’d argue that is not a reasonable request for the average driver.

Instead, Tesla needs to look at Autopilot from the rule of “Build it idiot proof, and someone will build a better idiot.” They need to anticipate what disasters are likely to happen with Autopilot in the hands of the “better idiot” instead of a proactive beta tester.

Cars running on autopilot are several times safer than cars being driven by humans. It is already idiot proof by quite a large margin. The complaints about what drivers “feel” about Autopilot completely ignore it’s currently the most advanced system in existence, and it’s making drivers many times safer. Sure, it’s a new feature, some users are going to think it does things it doesn’t, but how does that matter if the driver is safe anyway ??

“several times safer than cars being driven by humans. It is already idiot proof by quite a large margin” – great joke :-)

Have you actually looked at the numbers, or are we just talking about your imagination?

FYI https://cdn.motor1.com/images/custom/Tesla%20Safety%20Report%20-%20Q3%202019%20data.png , see comparison to US average. As I said, many times safer … (just active measures is 5 times safer than US average, Autopilot close to 9x )

What was this about a joke?

– Without even getting into Tesla data being self-reported, those are not apples to apples comparisons. A few items to throw out, and making a few assumptions since an infographic has no backing data details:

– Tesla compared to average is meaningless in this case, other than to make Tesla look good. ‘average’ more than likely includes all accidents – including learner’s permit drivers, newly licensed, unlicensed, parking lot incidents, other low-speed incidents where autopilot wouldn’t even be applicable, etc. Teslas are by no means a ‘cheap’ car, new or used, – and there is a relatively small subset of all drivers who would have one in the first place. Generally speaking (not everyone) these are more experienced (on the roadway), responsible people, who are able to afford and are interested in owning a Tesla. I’d bet you’d see a similar safety trend if you could chart accidents of this group of people (without a Tesla instead) against the general population – but this doesn’t, and we do not have such data (well, there may be something ‘close’ if you mined NTSB data deep enough…).

– Also as mentioned above, the baseline includes ‘everything’ – so comparing autopilot incidents (which many accidents in the baseline could not even apply to an autopilot scenario) again is irrelevant. It’s like comparing how many times I’ve bumped into someone (in person – anywhere – including a busy event/concert) to how many times I’ve bumped into someone while hiking in a park.

– And lastly – I don’t have any backing data obviously, but it seems ‘survivable’ autopilot crash stats are also worthless as compared to if they went full driverless mode. Most autopilot crashes aren’t an accidental lightly (or heavily) rear-ending the car ahead of you type of incident that may play heavily into ‘regular’ highway incidents – it is more ‘oops, ML algorithms didn’t navigate that construction zone properly, and would have put me into that bridge support / over embankment / etc’. ‘Epic fail’ level shortcomings due to edge cases the system can’t deal with. With a driver ‘supposed’ to be paying attention, the severity of these can be mostly mitigated, but without someone paying attention, Tesla autopilot fails quickly could tend towards the fatality side. And how many times has the Tesla driver paying attention just taken over for autopilot in a ‘situation’, when it would have been a fatal crash otherwise , or even a crash in general? – Those make no appearance in reporting here, and would be a huge issue if this ever went true driverless.

@my2c, your points about demographics and ML algorithms seem to be self-refuting, i.e. if the autopilot is already as good as the group of drivers who have the least accidents that’s a good thing, and if the ML algorithms are only making mistakes that are easily correctable by the human driver that’s also a good thing.

> including learners permit drivers, newly licensed, unlicensed

Teslas carry around learner’s permit drivers, newly licensed drivers, and unlicensed drivers too, therefore these do not make the statistics less relevant in any way, you’ve got your statistical science mixed up here.

> parking lot incidents, other low-speed incidents where autopilot wouldn’t even be applicable

But active safety measures are, and we are *also* talking about those here, it’s a combination of Autopilot and ASM we are evaluating.

> Teslas are by no means ‘cheap’ cars, new or used, – and there is a relatively small subset of all drivers who would have one in the first place.

This has no bearing on the significance of these statistics. Sure, it’s expensive *and* safer. That’s pretty common actually, for more expensive cars to be safer. Being more expensive doesn’t negate their safety, this is such weird thinking ( or obvious excuse-seeking… )

> I’d bet you’d see a similar safety trend

I’m sure there would be a difference, I think it’s completely unreasonable to expect this to completely or even significantly negate the effect we are seeing here. One very clear indicator of this is that it’s twice as safe to drive with Autopilot as to drive with just ASM, and both of these are driven *by the exact same population*, therefore this doubling of safety is in fact completely uncorrelated to what type of driver is using the machine. Again, you have your statistical thinking wrong here.

> to how many times I’ve bumped into someone while hiking in a park.

No it’s not. While autopilot is indeed limited to highway driving ( it’s soon not going to be, and I’m expecting we won’t see these stats lowering much, if at all, but you’re free to expect anything you want ), ASM are not, and they are used everywhere the average applies, and you can see there a very marked difference in safety.

> it seems ‘survivable’ autopilot crash stats a

I was not talking about autopilot crashes, I was talking about the crash tests all cars go through, at which Tesla has the best results in the world.

There are in fact lots of anecdotal pieces of evidence that autopilot is better at saving passengers than humans are, but this happens so rarely we don’t really have that much data on it yet.

When we talk about Tesla survivability, we talk about how the machine is structurally/mechanically designed ( it has an extremely low center of gravity, which has massive benefits, and it doesn’t have a motor in the front, and *only* has a crumple zone instead, which is also massively beneficial ). There are *known* good reasons why Teslas are safe. Search Youtube for the tests, and compare to those of other cars, the differences are impressive. In particular, check out tests where they try to get the car to do barrel rolls, and it’s incredibly resistant to those, and immediately dissipates the energy through deformation, instead of throwing its passengers around and risking their lives, all this thanks to the very low center of gravity ( thanks batteries ).

This isn’t something controversial, you’d know all of this if you had looked it up, it’s easy information to find, it’s public and they are forced to release it.

> ‘oops, ML algorithms didn’t navigate that construction zone properly,

You realize the media is *incredibly* hungry for these sorts of studies ( thanks Terminator ), and they are *incredibly* rarely seen in the media right? Have you *actually* looked up at data on autopilot accidents, or have you just presumed reality matches what you believe anyway? That’s not a really good way of thinking about things…

> ‘Epic fail’ level shortcomings due to edge cases the system can’t deal with

The system is designed very solidly so these only result in the user having to take back control. We have very few cases to study, but nearly all are not caused by the Tesla but by the other party. You really should browse the news for “tesla accidents”, it’s extremely clear.

> but without someone paying attention, Tesla autopilot fails quickly could tend towards the fatality side.

Well, you don’t understand how Autopilot works then. If the person is not paying attention, the car is going to stop on the side of the road. Even assuming the driver is not paying attention and tricking the car into thinking they are (which I don’t expect to be common), the car is driving itself, and is extremely good at not taking risks and protecting the driver, if somebody runs into it, it’s much better than a human at avoiding a crash and at minimizing damage/risk, it reacts *much* faster than a human can for example, and thinks better/faster about risk minimization.

> And how many times has the Tesla driver paying attention just taken over for autopilot in a ‘situation’, when it would have been a fatal crash otherwise , or even a crash in general?

If it didn’t happen, we can not know anything about it. What we can know is there are instances of users completely failing to pay attention ( ie sleeping, having a heart attack etc ), and in those cases the universal consequence is the car will safely pull them to the side of the road.

> Those make no appearance in reporting here, and would be a huge issue if this ever went true driverless.

They are obviously not going true driverless without this sort of issue solved, and they are saying this sort of issue is *already* mostly solved on highways, and the main limit now is regulatory.

You really seem to be taking a lot of your information from your imagination instead of from reality…

@Shannon thanks for saying exactly the same thing as me, but in a way that is 50 times more compact ^^

>What was this about a joke?

The fact that it is. The autopilot can only be engaged in specific conditions, so the comparison between “autopilot on” and “autopilot off” is entirely meaningless. It’s cherry picking those miles which are easy enough for the autopilot, and saying “look how safe we are going down a straight urban highway”.

The NHTSA statistics show that rural roads are twice as dangerous for the average driver with twice the road fatalities, because of poor road markings, no division of traffic, lacking signs, unmarked intersections, blind curves, animals on road… etc. meanwhile, Teslas rarely venture outside of the city and the vicinity of a supercharger. They’re literally playing on easy mode with the most difficult task being detecting a lane divider (which they sometimes fail and crash into)
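To put the road-mix objection above into numbers, here is a minimal sketch. Every figure in it is made up for illustration (none comes from Tesla or NHTSA), and it assumes the per-road accident rate is identical whether or not the autopilot is engaged. It only shows that a different mix of roads can, on its own, produce an apparent safety gap; it says nothing about how large that effect is in the real data.

```python
# Hypothetical rates in accidents per million miles, chosen for illustration only.
# The same rate applies with or without autopilot, i.e. no real safety benefit.
RATE = {"highway": 0.25, "other": 1.0}


def blended_rate(miles_by_road):
    """Accidents per million miles for a given mix of road types."""
    total_miles = sum(miles_by_road.values())
    accidents = sum(RATE[road] * miles for road, miles in miles_by_road.items())
    return accidents / total_miles


# Assumed mileage mixes (millions of miles): autopilot only engages on highways.
autopilot_on = {"highway": 100.0, "other": 0.0}
autopilot_off = {"highway": 40.0, "other": 60.0}

on_rate = blended_rate(autopilot_on)
off_rate = blended_rate(autopilot_off)
print(f"apparent safety ratio: {off_rate / on_rate:.1f}x")
```

With these assumed mixes the "autopilot on" miles look almost three times safer even though the system contributes nothing. Settling the argument either way would need the real per-road mileage split, which the infographic does not provide.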

> The fact that it is. The autopilot can only be engaged in specific conditions, so the comparison between “autopilot on” and “autopilot off” is entirely meaningless.

No it’s not. We have stats for “active safety measures only” versus “active safety measures with autopilot”, and these show a doubling of the safety, that is ABSOLUTELY meaningful and statistically significant.

Additionally, we have stats for ASM, which is IN FACT engaged in *all conditions* (that is the same conditions as the average driver, making comparisons with average driver stats meaningful ). And when we do these comparisons of average driver versus ASM, we do see that *in fact*, it is several times safer.

So, to recap: ASM is several times safer than driver average. And Autopilot is twice as safe as ASM. All these are statistically relevant and significant ( even though to compare autopilot to average driver you can’t do it directly, there is a step you have to take there, but that doesn’t remove significance ).

https://cdn.motor1.com/images/custom/Tesla%20Safety%20Report%20-%20Q3%202019%20data.png

> It’s cherry picking those miles which are easy enough for the autopilot, and saying “look how safe we are going down a straight urban highway”.

No it’s not. Not for ASM, and then you can compare ASM and Autopilot to get the rest of the way there.

Isn’t science neat?

> The NHTSA statistics show that rural roads are twice as dangerous for the average driver with twice the road fatalities, because of poor road markings, no division of traffic, lacking signs, unmarked intersections, blind curves, animals on road… etc. meanwhile, Teslas rarely venture outside of the city and the vicinity of a supercharger.

Evidence please? I’m sure the effect you describe exists, I have extremely strong doubts it’s strong enough to matter here. In fact the colossal majority of outside-of-city miles driven are driven on highways ( where Autopilot can be used btw ). The miles you describe, at least in the US, are the (comparatively) rare “last miles to home”.

> They’re literally playing on easy mode with the most difficult task being detecting a lane divider (which they sometimes fail and crash into)

More than humans do?

Neural networks are totally amazing, they are mind blowingly fast, but only when dealing with anything that was used in their training datasets. An unexpected event that was not part of the datasets can fool them into doing the wrong thing. Most of the time, they will get it right but errors can lead to constantly slamming on the brakes with things that would rarely fool a human e.g. https://twitter.com/chr1sa/status/1310350755103031296

Given we’re in 2020 Q3, it might be worth including more recent data. See https://www.datawrapper.de/_/uym2v/ for a more interactive view including up to 2020 Q2. What I don’t understand is why there’s such a big dip in Q4 2019.

> Neural networks are totally amazing, they are mind blowingly fast, but only when dealing with anything that was used in their training datasets. An unexpected event that was not part of the datasets can fool them into doing the wrong thing.

Imagine being so sure you’re right, you don’t have any interest at all in checking if maybe you might be wrong. That’s an impressive amount of “ignorant and happy to be so”…

It’s like you’re imagining a Tesla car is some cameras, a neural network, and some motors, and poof that’s it.

If you did any research on this, like read a paper or watch a conference, you’d know it has *a lot* of parts, including physics/prediction, a ton of different elements, and only *some* of those are in fact Neural Networks, that are designed to do very specific tasks, that are only a part of what the system does.

Also, you do realize they have billions of miles driven in their datasets, that’s easily large enough to contain all possibilities *within reason*.

If you were right, we would expect Tesla Autopilot to be *less* safe than a human driver, right? How come the facts then show the exact opposite? Oops!

> Most of the time, they will get it right but errors can lead to constantly slamming on the brakes with things that would rarely fool a human e.g. https://twitter.com/chr1sa/status/1310350755103031296

You do realize that’s an early release and the users are warned of it though, right? And of course, finding one anecdotal example of an issue means the whole system is fundamentally flawed, that’s sound reasoning there for sure!

Also, are you seriously suggesting this is something Neural Networks can’t figure out? Hilarious…

The new / redesigned version of Autopilot they are going to start previewing soon was in fact designed for/is expected to be much better in this kind of situation.

Also, oh my god, the car braked??? What a catastrophe! This must mean the car is unsafe, right? It’s not like we have stats that it’s several times safer than a normal driver!

> What I don’t understand is why there’s such a big dip in Q4 2019.

Accidents are so rare, they only have a few to go by, meaning this doesn’t have a ton of statistical significance, and when you’re in that situation, just random chance can make your result vary like this, it’s pretty much expected considering how few accidents they have to go by/with.
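The "few accidents means noisy quarters" point can be sketched with a little arithmetic. This assumes accidents in a quarter behave like a Poisson count, which is a modelling assumption and not something Tesla has stated; the counts and mileage below are hypothetical, since the report does not publish raw numbers.

```python
import math


def relative_spread(expected_accidents):
    """Relative standard deviation of a Poisson count: 1 / sqrt(mean)."""
    return 1.0 / math.sqrt(expected_accidents)


def plausible_range(miles, expected_accidents):
    """Rough +/- 2 sigma band for miles-per-accident in a single quarter."""
    sd = math.sqrt(expected_accidents)
    low_count = max(expected_accidents - 2 * sd, 1.0)  # avoid dividing by <= 0
    high_count = expected_accidents + 2 * sd
    return miles / high_count, miles / low_count


# Hypothetical expected accident counts per quarter.
for n in (25, 100, 400):
    print(n, f"{relative_spread(n):.0%}")

# Hypothetical quarter: 100 million miles, 25 expected accidents.
low, high = plausible_range(100.0, 25)
print(f"{low:.1f} to {high:.1f} million miles per accident")
```

Under these assumptions a quarter with around 25 expected accidents can swing by 20% either way from chance alone, so a single dip is weak evidence of anything. The same noise also limits how much confidence a single good quarter deserves.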

If someone is malicious and wants to fool the neural network, there is almost no protection:

https://www.youtube.com/watch?v=1cSw4fXYqWI

> If someone is malicious and wants to fool the neural network, there is almost no protection:

Oh my god, you can trick a car into carefully stopping, what an insane safety risk!!!

It’s not like you can do the exact same thing to human drivers with slightly different techniques.

Yes, if Coyote draws a tunnel on the side of a cliff, and redirects the road to it, Beep-beep will run into the wall. How does that in any way demonstrate an issue with Tesla’s self driving system?

You’re grasping at straws, man.

>We have stats for “active safety measures only” versus “active safety measures with autopilot”, and these show a doubling of the safety, that is ABSOLUTELY meaningful and statistically significant.

No it’s not, because these cases include different road conditions and different roads. Autopilot on only happens over certain areas, whereas autopilot off happens everywhere. It’s an irrelevant comparison.

The areas where you can turn the autopilot on have fewer accidents by default because of the type of road and traffic, while the autopilot off case includes all the fender benders that happen around parking lots and intersections that the autopilot simply isn’t equipped to handle and will not engage, plus all the accidents where the driver was intentionally speeding and crashed the car. This has been pointed out to you many times, but you refuse to acknowledge the fact; you’re applying fanboy logic over “statistical significance” while admitting that they only have a handful of cases to go by in the first place, which does not make it statistically significant. Quite the opposite.

Secondly, Teslas are all brand-spanking new: better cars, fewer accidents. Teslas are expensive: they’re mostly driven by a particular demographic that isn’t as prone to accidents compared to the general public. Teslas are mostly driven in sunny San Francisco, not up in snowy Seattle… etc. You have all these external factors and sampling biases skewing your statistics, plus the statistical sparsity and insignificance of it, plus the fact that Tesla got caught stuffing the dataset to make it look better.

What you’re doing there is just spreading false hype.

> No it’s not, because these cases include different road conditions and different roads. Autopilot on only happens over certain areas, whereas autopilot off happens everywhere. It’s an irrelevant comparison.

Yes, let’s conveniently ignore the fact that the vast majority of miles driven are driven on autopilot-capable roads, which completely demolishes this argument.

I had a job offer in the US recently, I had to find possible homes to live in two states in the US ( wasn’t sure where the office would be), both in ~50k people towns, one in Texas one in California. For *all* of the dozen houses I looked at, over 80% of the drive from home to work was on highways. That’s just the reality of how the US is built. Ignoring that is just pretty much a fallacy from you.

This is getting sad at this point, the FUD and excuse-seeking is just so plain and obvious…

As I’ve said many times, if you really think you’ve got a leg to stand on here, *do the math* and show it. I’m not holding my breath.

Yeah, that guy in the Tesla on my commute going 40 in 55 is a safety hero! Thank God for his ability to multitask while he is in traffic. I have seen this multiple times in the last year. Arrogant Tesla driver holding up traffic.

Thanks for the anecdote, surely you are not suggesting it’s representative of some general trend? I’ve seen people driving too slowly in many different cars, actually I don’t think I’ve ever seen a Tesla driving too slow … must mean Teslas never drive too slowly!

Early Autopilot had some situations where it would slow down in areas where it shouldn’t have (if uncertain about something, it will choose the safest option), but that has completely disappeared in the most recent versions.

Such as the video that came out a couple years ago, of a couple having sex in their Tesla while it was driving.

Well, you can have sex with your hands on the wheel, so as long as you’re paying attention to the road while having sex ( well, one of you pays attention at least ), it’s perfectly fine and within the accepted terms of use for the vehicle. Isn’t technology great? You can now have sex while driving.

Bah. That’s nothing new, you don’t need all that fancy technological assistance to do that.

My wife isn’t up for those kinds of risks but my ex, before her. Well, there’s a reason I always turned down manual transmission cars and yes, I do know how to drive them.

The thing is, this was never meant as a system to assist drivers. They have been after full self-driving, taxi-like function since the beginning. The “driver assist” part of this is there only because they *can* provide this to users while they are working on FSD, so they do, mostly as a way to give them a taste of what’s to come ( though at this point there are many reports that you can experience FSD while on highways, which is pretty awesome ). My point is, they don’t play well with the drivers because that was never the point of the system, the system is designed to get rid of the driver (ok that’s a bit more ominous than I intended :) )

Steps to fix this mess

1. Change the name from autopilot to something that doesn’t imply the thing can drive itself.

2. Admit that it is still not as safe as a human driver. They only release statistics when it favors them and the best I’ve seen is it being on par with human drivers who drive in the snow at night.

3. Actually try to see if the driver is there. Turn the steering wheel just a hair. If the driver’s hands are there you’ll get some resistance, if it’s just a clip like the “autopilot buddy” you’ll get next to none. But hey that would require paying my interns to even consider safety when designing this crap.
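The wheel-nudge idea in step 3 could look roughly like the sketch below. This is purely hypothetical: the function name, units, calibration value, and threshold are all assumptions made for illustration, and nothing here reflects how Tesla (or anyone else) actually implements hands-on detection.

```python
# Hypothetical sketch: every name, unit, and threshold is an assumption.
def hands_on_wheel(applied_torque_nm, measured_deflection_deg,
                   free_deflection_deg_per_nm=2.0, resistance_ratio=0.5):
    """Guess whether hands are holding the wheel after a small test nudge.

    free_deflection_deg_per_nm is an assumed calibration: how far the wheel
    turns per N*m of nudge when nothing (or only a clipped-on weight) holds it.
    """
    expected_free = applied_torque_nm * free_deflection_deg_per_nm
    if expected_free <= 0:
        raise ValueError("nudge torque must be positive")
    # Hands resist the nudge, so the wheel turns less than it would freely.
    return measured_deflection_deg < resistance_ratio * expected_free


print(hands_on_wheel(0.5, 0.2))   # strong resistance: likely hands
print(hands_on_wheel(0.5, 0.95))  # almost free movement: likely a clip
```

A real system would have to cope with road feedback, a driver who holds the wheel very loosely, and the lane-keeping controller's own steering inputs, so a single threshold like this is only a starting point.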

1. It can though. People have gone thousands of miles at a time without human intervention. The reason you’re still a part of this is both for legal reason ( regulators aren’t yet ready for autopilot ), and because there are still rare cases where the car will need you to figure things out, but those are not common at all ( on highways ). Outside those, it’s in fact driving itself.

2. https://cdn.motor1.com/images/custom/Tesla%20Safety%20Report%20-%20Q3%202019%20data.png

3. That sounds pretty annoying. I’d rather use the camera. But sure let’s make it an option, you get the wheel tugs every 15 seconds, I get the photon-based method :)

Also, the whole “hey that would require considering safety” is pretty funny when it’s pretty much the safest car in existence, both in terms of miles-per-accident *and* crash survivability.

Your information on this is clearly incredibly incorrect or outdated ( though part of what you’ve said has in fact never been true ).

So, the car has all these new features that have clearly been demonstrated to systematically prevent accidents in accident-involving situations, but your expectation is that despite all these features, the car is going to be about as unsafe as the average car. Does not compute. If the car learns to prevent accidents, and the others do not, its accident rate is (extremely likely) going to be lower than the average, that’s just what’s reasonable to expect.

1. But autopilot DOES NOT imply that it is autonomous.

Have you seen autopilots in most planes and ships?! The better ones can follow waypoints instead of just holding direction (and altitude in case of planes)!!

Only the modern airliners have auto-landing and other similar features but they are still what you would call DUMB.

To the general public, which this is marketed to, autopilot DOES imply autonomous. I’d wager the general public assumes autopilot in an airliner means no attention is needed while it is engaged. I’d also wager few people think autopilot can take off or land a plane – the assumption is ‘while it is engaged’ for cruising you do not need to pay attention – while there may be scenarios where you cannot engage it (such as takeoff and landing – or maybe a parking lot in tesla’s case).

If you’d done even the tiniest bit of research on this, you’d know Tesla cars actually explain to users what Autopilot *exactly* is, and what they can expect from it. Therefore what is implied doesn’t practically matter. Really, if somebody *buys* a Tesla with this feature not understanding what the feature actually does, it’s them not doing their due diligence, not Tesla failing in some way.

And yes, the same way few people think autopilot can land a plane, few people are going to think autopilot can take a Tesla everywhere. Autopilot doesn’t mean full self driving, be it on a plane or a Tesla, and the general public knows this.

Then they hand the car over to someone who didn’t hear the spiel and they crash it.

“If you’d done even the tiniest bit of research on this” – no research was needed, thank you. I was refuting the “But autopilot DOES NOT imply that it is autonomous” comment my reply was on – ‘autopilot’ (not Tesla’s feature) comes with connotations / public perception of plane being able to fly itself (even if only midflight).

-If a Tesla dealer and/or the car correct this perception, great, but that does not relieve it from guilt of a misleading marketing term. You also can’t reasonably compare the level of training / understanding commercial airline pilots have of ‘autopilot’ vs a Tesla owner’s training / understanding of their car’s ‘autopilot’ capabilities and shortcomings. And if the car explains it, (obviously arguable here – not having seen it myself), odds are it does about as much good as a license agreement you click through when you install software.

> Then they hand the car over to someone who didn’t hear the spiel and they crash it.

If you want to invent a situation where somebody mis-uses something and causes damage, that is possible for *any* tool, with nearly infinite possibilities. That doesn’t make it statistically significant, or in any way important.

Also, even if you let your idiot friend drive your Tesla (for which you have only yourself to blame, not Tesla’s fault, yours), the Active Safety Measures *will* prevent accidents much more efficiently than if an average driver was driving a car without ASM. This is safer *even* if you do it wrong, isn’t that impressive?

> no research was needed

Well, if you had researched this, you’d know Teslas do in fact explain to users what the limits of the car are, and how Autopilot works, which means that your expectation of users not knowing about this while driving is completely unreasonable.

> the general public assumes autopilot in an airliner means no attention is needed while it is engaged

I have no such assumption, and I do not believe that is a common assumption in the general public. I expect most people to know that pilots in fact have to pay attention to the plane even if it’s in autopilot mode. In fact if this wasn’t the case, I wouldn’t go on the plane, and I expect most of the “general public”, wouldn’t either, you have extremely weird expectations of what’s generally expected…

Therefore, Tesla’s Autopilot term, isn’t misleading, it’s in fact very similar to the term as used for planes ( not enabled everywhere, and still requires attention, this is true of both planes and Tesla )

There is also an argument to be made that Autopilot for cars doesn’t have to necessarily mean the same thing as autopilot for planes, these are different vehicles, meaning different technologies are going to have different properties. Nobody expects wheels on a car to have the same function as wheels on a plane ( «well, why would I need my wheels *while it’s moving*, planes don’t use wheels while they are moving, this is so misleading!!! » ). You can do this with many parts of many vehicles, just because it means something for planes, doesn’t mean it has to mean the same for other vehicles, that are running on completely different concepts. This is completely solved once the car *explains* everything to the driver before they can use the feature.

Also, yes indeed, Tesla *does* openly explain to customers what Autopilot is and what it does. If you buy a Tesla with the expectation Autopilot will drive you from point A to point B without requiring your attention, you are a complete idiot with fingers in their ears, there is no other possible scenario.

> obviously arguable here – not having seen it myself

But “no research was needed”. Yes, you haven’t completely missed the point, that’s for sure!

> “If you’d done even the tiniest bit of research on this” – no research was needed

Maybe go to the Tesla website, and take 10 seconds to try to buy a car. When you get to the options, and you try to purchase Autopilot, the website makes it very clear, I quote:

« Full Self-Driving Capability is available for purchase post-delivery, prices are likely to increase over time with new feature releases

The currently enabled features require active driver supervision and do not make the vehicle autonomous. The activation and use of these features are dependent on achieving reliability far in excess of human drivers as demonstrated by billions of miles of experience, as well as regulatory approval, which may take longer in some jurisdictions. As these self-driving features evolve, your car will be continuously upgraded through over-the-air software updates. »

No research needed you say? Even trivial amounts of research just showed you’re wrong.

>few people think autopilot can land a plane

Autopilot does land airplanes. They also take off fully automatically these days. The takeoff, cruise, and landing are technically not the exact same system and the pilots switch between modes, but they are covered by the general category of the plane’s “autopilot”.

Auto-land systems have been around since 1968 (SE 120 Caravelle). They’re used especially in poor visibility conditions.

Getting kind of tired of the aviation autopilot comparisons. Airliners operate in controlled airspace for every second of their flights, except across oceans, where there just aren’t any non-controlled aircraft just because DUH. Aviation autopilots do not identify objects in their aircraft’s path, nor do they do any kind of collision avoidance. Please everybody stop this!

>Therefore, Tesla’s Autopilot term, isn’t misleading

You’re applying the logic that because YOU don’t expect it, then nobody else does, which is a false argument. “Autopilot” as a marketing term is INTENDED to convey the idea of autonomous driving, which Teslas aren’t.

If I say “This knife cuts butter”, and then add a small print with, “This knife cuts butter at 100 F and above”, I am not technically wrong but I am engaging in false marketing. The same goes with Tesla’s “autopilot”. The concept of an autopilot is that it pilots the vehicle autonomously, which in a limited sense a Tesla does, and the disagreement here is between the public perception of how much autonomy is required to merit that badge.

Tesla’s use of the term is intentionally giving a far better impression of the system than what it actually does, which is why it is false marketing, and why they got slammed in court in Germany. In the US they have been settling the lawsuits out of court with money.

The reason it is false marketing is because you’re drawn in with promises that are later retracted. They keep saying “Full Self Driving feature” at the front and then backpedaling by saying it’s not really once they’ve got you reeled in, just so they wouldn’t be sued right out of the gate by the authorities. If they weren’t being sued over it, they wouldn’t (and in the past didn’t) bother with the full disclaimers; they’re toeing the line.

> Autopilot does land airplanes.

This entire conversation is not about what can be done by autopilot, but about what can be done *without driver/pilot attention*.

Automatically landing planes *definitely* still need pilots paying attention to them.

It’s pretty much all the same as a Tesla: automated driving, but requiring pilot attention. Exactly the same for a plane and for a Tesla: both can do their own thing, but both require driver/pilot attention…

> You’re applying the logic that because YOU don’t expect it, then nobody else does, which is a false argument. “Autopilot” as a marketing term is INTENDED to convey the idea of autonomous driving, which Teslas aren’t.

Planes autopilot: autonomous but still requiring users to pay attention, and be ready to take over as needed

Tesla autopilot: autonomous but still requiring users to pay attention, and be ready to take over as needed

So, planes have been abusing the term autopilot for decades, is what you’re saying? And the general public has been ignorant of the capabilities of those autopilots for decades too, getting to today with an incorrect view of what they are capable of? Are you serious? I know nobody that believes planes autopilots can fly without pilot attention.

I also think Autopilot does imply Autonomy despite all the protestations otherwise.

Some car magazine recently also marked down their assessment of Tesla based on the use of the term Autopilot because large numbers of people do consider it to be autonomous.

In marketing, the difference between “technically correct” and “false marketing” is fuzzy. Some level of hype is “allowed” because there’s no real way to stop companies from lying to your face.

Now that Tesla has got bad publicity about their autopilot not actually being autonomous to a great degree, this has devalued the term “autopilot” and people got wise about it. Previously, when Tesla started marketing the car, people took their claims on trust and we had youtube videos of people driving around going “look ma no hands!” – so Tesla had to fess up and tell them to stop doing that – especially after the autopilot killed a bunch of people.

After all, any car is “autonomously driving” if you just let go of the wheel. Sometimes for quite a long distance, before you end up in the ditch.

It did not «kill a bunch of people», what dumb fearmongering… you have zero shame.

How about you prove I’m being an idiot about this by demonstrating your claim with some evidence?

I’m not holding my breath waiting for it…

In Soviet Russia […] watches you!

(coffee hasn’t fully engaged this morning)

A car. It’s a car :) You’re welcome.

My Volvo sometimes decides that I need to take a stop and drink some coffee. I have tried to find what behavior prompts this message, I’ve tried driving over lane markings, trying to look as if I’m nodding off, looking out of the side windows (when it’s safe), taking my hands off the wheel and letting the auto lane keeping system do all the steering (on well marked motorways only), making snoring noises, nothing triggers the message. But if I give up the acting and drive normally I get the coffee warning message. It’s getting me down. I am sure that Volvo has put some terribly serious algorithm in place – I would love to know how it works, so that I could learn to drive like a good Volvo driver and drop the sarcastic comments about coffee.

AIUI, the Swedes know their coffee!

Their enhanced knowledge of coffee, obtained through centuries of consumption, genetics, auroras, and quite possibly a bit of Old Norse religion have achieved for them a consciousness of coffee unobtainable to non-Nordic minds.

So, don’t bother trying to understand it.

As the Kevin Spacey character said in K-PAX; “you’d only blow yourselves up, or worse, somebody else.”

In the same way that we deal with laptop cameras, a piece of tape will disable this feature.

The rating systems are manipulating Tesla into controlling drivers. As a Tesla owner I do not want big brother watching me, especially with the express purpose of analyzing me in the moments leading up to a crash. That actually puts the Tesla driver at a disadvantage, since there is not a camera watching the other driver in the moments leading up to a crash. When there is a crash there are usually two people who could have avoided it, and two people who were not paying enough attention. Usually the law backs up the person who was obeying the rules of the road, but once the camera says, well, this driver was looking at the air conditioner, then that driver is at a disadvantage legally. When I bought my Model 3 I immediately put a sticker over the in-cabin camera. I’m much happier keeping my hand on the wheel for a long trip than having a camera watch and study my engagement. I’m not one bit happy with Consumer Reports and the European New Car Assessment Programme manipulating the information to bully Tesla into watching me. Anyone who has tried the two systems KNOWS Tesla’s Autopilot (WHICH IS CORRECTLY NAMED, as it is in the airline industry) is superior and much more closely resembles the reactions a human would make while on the freeway. In full disclosure, Autopilot is not safe when crossing intersections on side streets because it tends to follow the lines of lanes merging in from side streets, but on the highway it is about as good as a 16-year-old driver.

It seems clear Tesla is going to give you the choice of activating that camera or not, so I wouldn’t worry about big brother or about what pressures Tesla to do what. Also, Tesla is not working on driver assist, it’s working on full self driving, and you’re just getting a demo of that *as* driver assist. So their goal is to get rid of the driver (no, not by jumping off a bridge), which would also make that camera not matter. This is all temporary.

> So their goal is to get rid of the driver

Well, they’ve succeeded on a few occasions, I give them that!

Actually, being 9 times safer than other cars just in terms of number of accidents per mile, and much safer in terms of crash survivability, they are in fact much worse than other cars at getting rid of drivers, which is ironic when autopilot’s job is to get rid of drivers :)

Is this pure fanboyism or are you actually paid by Tesla for your comments?

Yes, it can’t be I’m just stating facts and correcting somebody who’s saying obviously incorrect things.

It has to be a conspiracy, I have to be paid to say this, can be no other reason, can’t be you’re just wrong.

Can’t be this is an incredibly obvious https://en.wikipedia.org/wiki/Red_herring , trying to divert to talking about me, instead of talking about the facts, because you’ve got no data, no argument, no reasoning, no evidence for your position.

And so, you have to find *something* else to divert to, and what’s easier than a conspiracy theory?

What’s incorrect about what I’ve said? Wouldn’t pointing *that* out make more sense than talking about me, when I don’t matter, *at all* to this subject?

To be honest, that might apply to the USA, which isn’t really especially safe. It would be interesting to see data from Norway. Tesla is the second best selling brand there, close behind VW and far ahead of the third, and it has pretty much a perfect infrastructure with few small side roads and chargers EVERYWHERE. And Norway is one of the countries with the best road safety statistics in the world. It would be nice to compare to Sweden, where Tesla isn’t as common.

I believe Tesla wouldn’t have the same statistics there.

Another thing is which customers buy Teslas and how they drive them compared to average usage. This would bias the data in Norway, since Teslas have had benefits normal cars don’t, like being able to use bus and car lanes with less traffic, and no congestion tax or road tolls into cities. They’re driven by a lot of commuters.

My experience from talking with Norwegians and being in Norway is that Teslas might be driven more on the bigger highways into cities, compared to the average car.

But the main question? If Tesla really was much safer and better in Norway, and they had the stats to prove it, which they should with all the data collecting both they and the government do, including automatic tolls, why can’t I find it on Tesla’s Norwegian homepage?

Why aren’t they bragging with statistics?

> I’m just stating facts

As pointed out above, your “facts” are based on statistically irrelevant and insignificant data from the company itself. You’re not correcting anyone, you’re doing marketing and hype.

> based on statistically irrelevant and insignificant data

Yes, welcome to conspiracy theory land, where not only do advanced AI systems provide no improvement over normal driving, despite pretty much nobody in the scientific/AI community doubting their claims in any way, but Tesla is also just straight out lying to the world *about vehicle safety*, one of the legally worst/most dangerous things to lie about in all of the developed world.

Isn’t it quite extraordinary that, with accident data being public, and Tesla publishing the value for their claimed miles driven and miles driven on autopilot, no journalist has taken the time to do the math and notice it didn’t match their claimed figures? You live in a fantasy land if you think something like this would go unnoticed when this is math a 12-year-old can do.

No, it’s not statistically irrelevant and insignificant, it’s in fact the opposite. I’ve taken the time in another comment to write a very long explanation of what was wrong with your arguments against the data. If you just ignore that, I can’t do much about it; willful ignorance is pretty hard to solve.

> Why aren’t they bragging with statistics?

They are. Autopilot is twice as safe as Active Safety Measures alone. This is true even if you exclude bad/drunk drivers, and means no matter the group (including Norwegians), that 1:2 ratio is something you’re going to see there.

That’s plenty enough to brag about on its own.

Also, accident data is public, and accidents are rare enough to be counted. This means journalists can do the math themselves, and you better believe if the math didn’t add up to what Tesla is saying, you’d hear about it in the news… even the short sellers don’t claim Tesla is fudging the numbers.
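For anyone who wants to do that math, here is a rough sketch in Python. Every number in it is a PLACEHOLDER picked to keep the arithmetic easy to follow, not a real Tesla or government figure, and the function names are made up for this example.

```python
def accidents_per_million_miles(accidents: int, miles: float) -> float:
    """Accident rate normalised to one million miles driven."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return accidents * 1_000_000 / miles


def safety_ratio(baseline_rate: float, system_rate: float) -> float:
    """How many times lower the system's accident rate is than the baseline's."""
    if system_rate <= 0:
        raise ValueError("system_rate must be positive")
    return baseline_rate / system_rate


# PLACEHOLDER inputs (assumptions, not published data).
baseline = accidents_per_million_miles(accidents=200, miles=100_000_000)
system = accidents_per_million_miles(accidents=50, miles=50_000_000)
ratio = safety_ratio(baseline, system)

print(f"baseline={baseline:.2f} system={system:.2f} ratio={ratio:.1f}x")
```

Swap in the published accident counts and mileage to check a claimed ratio. Note that a raw ratio like this does not correct for where the miles were driven or who was driving, which is the objection raised elsewhere in this thread.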

If you had ANY experience with actual Deep Learning and AI you’d know that as for now, these systems have a high failure rate, and that is a defect of the CNN-based approach itself.

CNNs are a wonderful algorithm for classification in their training dataset space, but are awful algorithms when it comes to extrapolation: a CNN performs well and reasonably when it’s operating in the same domain it was trained in; God only knows what happens when it comes to exceptions and outlier data.

By definition, car crashes and car crash situations are an extremely under-represented class and training data is scarce, I would almost trust that a Tesla model has a reasonable output in “normal” driving situations but I’m SURE it would perform badly in exceptions, at least that’s my experience after years of study on the matter.
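To put the “under-represented class” point in concrete terms, here is a toy sketch in Python. The 1-in-1000 crash frequency is an invented number, and the “model” is a deliberately dumb stand-in that never predicts a crash; it has nothing to do with what Tesla actually ships.

```python
# Toy labels: 1 = crash-like situation, 0 = normal driving.
# The 1-in-1000 split is an assumption for illustration only.
labels = [1 if i % 1000 == 0 else 0 for i in range(100_000)]


def always_normal(_sample) -> int:
    """A degenerate 'model' that never predicts the rare class."""
    return 0


predictions = [always_normal(y) for y in labels]

# Overall accuracy: fraction of samples labelled correctly.
correct = sum(p == y for p, y in zip(predictions, labels))
accuracy = correct / len(labels)

# Recall on the rare class: fraction of real crash cases that were caught.
true_positives = sum(p == 1 and y == 1 for p, y in zip(predictions, labels))
actual_positives = sum(labels)
recall = true_positives / actual_positives

print(f"accuracy={accuracy:.3f} crash_recall={recall:.3f}")
```

The stand-in scores 99.9% accuracy while catching none of the rare cases, which is why a headline accuracy number says little about behaviour in the situations that matter most.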

> If you had ANY experience with actual Deep Learning and AI

I do, actually. I’ve worked over a year with them, and still frequently use them.

> CNNs are a wonderful algorithm for classification in their training dataset space, but are awful algorithms when it comes to extrapolation:

You’re making the incredibly classic mistake of thinking of Autopilot as cameras, a neural network, and wheels. It’s incredibly more complex and better designed than that.

This is incredibly clearly demonstrated by videos of how it does its job, which you can find easily online, and by the accident stats themselves (none of the mitigating factors people have brought up here gets us anywhere close to explaining all or even a significant part of the improvement).

> a CNN performs well and reasonably when it’s operating in the same domain it was trained in; God only knows what happens when it comes to exceptions and outlier data.

That also tends to be what humans have issues with / what causes accidents, though. And it’s been trained on *over a billion* miles driven…

And when a Tesla can’t figure out what something is (because yes, Neural Networks will make mistakes, but they’ll tell you how certain they are that they are right, so you can most often *know* when they are confused), they won’t cause a crash, instead they will stop or give the user the wheel back.

This means the risk in case the system ( which isn’t limited to just NN ) can not figure something out, is in the vast majority of cases, not a risk of fatality, but a risk of annoying the driver.
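The idea of acting on the network’s own reported confidence can be sketched like this in Python. The 0.8 threshold, the class names, and the `decide` helper are all hypothetical; this is an illustration of the general technique, not a description of how Autopilot is implemented.

```python
import math

CLASSES = ["car", "pedestrian", "clear_road"]  # hypothetical class names
CONFIDENCE_THRESHOLD = 0.8                     # assumed cut-off, not a real setting


def softmax(logits):
    """Turn raw network scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decide(logits):
    """Act on the top class only if the network is confident enough."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < CONFIDENCE_THRESHOLD:
        return "hand_control_to_driver"
    return f"act_on:{CLASSES[best]}"


print(decide([6.0, 1.0, 0.5]))  # one class clearly dominates
print(decide([1.0, 0.9, 1.1]))  # scores are nearly tied
```

One caveat: a softmax score can still come out high on inputs unlike anything in the training data, so a threshold like this catches ambiguity between known classes better than it catches genuinely novel situations.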

> By definition, car crashes and car crash situations are an extremely under-represented class and training data is scarce,

They do use some simulation data, as they have publicly said. Also, the crash avoidance system is based a lot on physics prediction; the NNs are there mostly to help recognize other cars, which they are incredibly good at (training data to recognize cars is NOT rare).

> I would almost trust that a Tesla model has a reasonable output in “normal” driving situations but I’m SURE it would perform badly in exceptions, at least that’s my experience after years of study on the matter.

You’re making the mistake of thinking this is *just* a NN. It’s not. If you’ve ever done complex work with NN/DL, you’ll find that those projects also typically involve a lot of human input/programming of the logic, with NN/DL being used for tasks that tend to be too hard to code.

And obviously, human detection/notification of errors does improve the dataset and the resulting NN, and you can be certain Tesla has put a lot of effort into that too.

Tesla autopilot in fact has, in the vast majority of cases, a high confidence in what it’s reading, and whenever it doesn’t, it doesn’t put the driver in danger. The media is watching like a hawk for any story that would indicate Autopilot isn’t a good idea/isn’t safe, and they can barely find one story a year, and those are always ambiguous, never clearly Autopilot’s fault. Google it. Autopilot is considered the likely cause of only 3 fatalities; that’s ridiculously small compared to the average for human drivers, and it’s constantly improving, with the completely redesigned system they are fielding in the coming weeks expected to provide an over 10x improvement over the coming years.

So you’ll see very soon what’s what here.

“When there is a crash there are usually two people who could have avoided it, and two people who were not paying enough attention”, true, but when we add in the Tesla AP that’s two people and a computer “who” could have avoided it, and if a Tesla hit another Tesla (has that happened yet?) that’s two computers.

I wonder if I’ll ever see a Tesla in the dock for causing death by dangerous driving….

The thing is, Autopilot is currently close to 10 times safer (less likely to cause accidents) than the average US driver, and it is expected in the coming years to become many times safer still. At some point, you have to take this into account. Yes, sometimes autopilot is going to cause deaths. The same way your brakes very rarely but *sometimes* do cause deaths. It’s hardware failure/lack-of-success of something that *otherwise* saves many, many more lives than it costs. That math matters, saving lives matters. Your brakes are not going to be in the dock if their failure was extraordinary/unavoidable, and neither is Autopilot.

The way the autopilot causes deaths is different from the way human errors cause deaths. Humans are bad at paying attention. We miss things, and then can’t react in time. But, while we lose attention randomly, we do not always do it and we deal with the edge cases in a flexible manner because we actually know what we’re doing, and we have various ways of improving our personal outcomes by e.g. not driving drunk, tired, paying more attention… etc.