News broke this morning that AMD has reached an agreement to acquire Xilinx for $35 billion in stock. The move to gobble up the leading company in the FPGA industry should come as no surprise, for two reasons. First, the silicon business is deep in an age of mergers and acquisitions. Second, and more importantly, AMD’s main competitor, Intel, purchased the other FPGA giant, Altera, back in 2015.

Primarily a maker of computer processors, AMD is expanding into the reconfigurable computing market. Field-Programmable Gate Arrays (FPGAs) can be adapted to different tasks depending on which bitstream (the configuration data written to the chip) has been loaded into them. This allows the gates inside the chip to be reorganized to perform different functions at the hardware level, even after the chips have been built into products already in customers’ hands.
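Most FPGA fabrics are built from small look-up tables (LUTs) whose contents come from the bitstream. The toy Python model below is a simplification that ignores routing, flip-flops, and real bitstream formats; the class and helper names are hypothetical. It shows how one cell becomes a different gate just by loading different configuration bits.

```python
from typing import Callable, List, Sequence


class LUT:
    """Toy k-input look-up table, the basic logic cell of most FPGA fabrics.

    The configuration for one cell is just its truth table: entry i holds the
    output for the input combination whose binary value is i (input 0 = LSB).
    """

    def __init__(self, k: int) -> None:
        self.k = k
        self.table: List[int] = [0] * (1 << k)  # unconfigured cell outputs 0

    def configure(self, bits: Sequence[int]) -> None:
        # Loading new configuration bits is the "reprogramming" step.
        if len(bits) != 1 << self.k:
            raise ValueError(f"expected {1 << self.k} configuration bits")
        self.table = [b & 1 for b in bits]

    def evaluate(self, *inputs: int) -> int:
        # The inputs act as the select lines of a multiplexer over the table.
        if len(inputs) != self.k:
            raise ValueError(f"expected {self.k} inputs")
        index = sum((bit & 1) << position for position, bit in enumerate(inputs))
        return self.table[index]


def truth_table(fn: Callable[..., int], k: int) -> List[int]:
    """Compute configuration bits that make a LUT implement fn."""
    return [fn(*[(i >> p) & 1 for p in range(k)]) & 1 for i in range(1 << k)]


cell = LUT(2)
cell.configure(truth_table(lambda a, b: a & b, 2))
print([cell.evaluate(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 0, 0, 1]

# Same cell, new "bitstream": now it is an XOR gate.
cell.configure(truth_table(lambda a, b: a ^ b, 2))
print([cell.evaluate(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```

Real LUTs typically have 4 to 6 inputs, and the bits that configure the routing between cells usually outnumber the LUT contents themselves.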

Xilinx invented the FPGA back in the mid-1980s, and since then the falling cost of silicon fabrication and the acceleration of technological advancement have made FPGAs increasingly desirable. Depending on volume, they can be a more economical alternative to ASICs. They also help with future-proofing: technology that did not exist at the time of manufacture, such as new compression algorithms and communications protocols, can be added to hardware in the field by reflashing the bitstream. Xilinx also makes the Zynq line of hybrid chips, which combine ARM processor cores and FPGA fabric in the same device.

The deal awaits approval from both shareholders and regulators but is expected to be complete by the end of 2021.

In my ideal world, every desktop CPU would have a small FPGA section and drivers that allow applications to configure the logic cells. I’d hoped Intel would go that route with Altera, but they only added FPGAs to some of their server CPUs.

Hopefully a little competition will get the ball rolling and bring FPGAs to consumer hardware.

Intel had the idea that an on-chip FPGA would be great for application-specific hardware acceleration.

But in reality, FPGAs are not all that fast, they use tons of resources for the job they do, and they end up with laughable power efficiency.

In the end, Intel stopped producing Xeons with FPGAs in them a couple of years back, since it is simply better to spend the resources the FPGA would have used on things like cache, cores, and more IO. That approach tended to outperform the “FPGA acceleration” itself…

The idea of “FPGA sections in CPUs” was frankly dead before it even left development. (Intel mainly pushed ahead with it for 2-3 years to keep investors happy about the purchase of Altera, even though those CPUs didn’t really sell well…)

FPGA add-in cards, on the other hand, are still a thing and have a decent market, mainly because they have their own off-system IO, which makes them suitable for all sorts of high-bandwidth, application-specific uses.

I think that’s a little unfair on them. It’s a perfect fit for many use cases, and in theory they could develop into using the FPGA for the Management Engine bollocks, so that patches for any security failings can actually be implemented.

I don’t think it’s a great fit for the core of their market, but reconfigurable hardware bolted onto the normal cores does have some serious potential for unbeatable IO speeds between them. PCI expansion cards are all well and good, but they run at ‘only’ PCI speeds.

It’s just too new a reinvention to get great traction yet. Most folks working on PCs now have probably never dealt with any co-processor other than a GPU, and developing and deploying for those use cases takes time.

Using them in the management engine is an interesting idea, a bit similar to how the car industry currently uses FPGAs. (ASICs are wonderful, but a flaw is hard to fix, unlike FPGAs, where it’s just a software patch most of the time.)

In terms of using them for IPC, I can say that dedicated switching hardware is going to be both more power efficient, and faster than an FPGA solution.

But the main downside of FPGAs is that they are slower and more resource-intensive than dedicated logic.

It doesn’t make much sense in many applications.

FPGA add-in cards only have a market for two tasks:

1. ASIC development.

2. Data gathering. (For example, gathering sensor data in various applications, like the LHC at CERN. Most sensor data isn’t “needed”, so the limited bandwidth of the PCIe interface isn’t a major problem.)

For general use, FPGAs have little practical value and can often be outperformed rather trivially by dedicated hardware.

I myself see little reason for AMD to buy Xilinx, other than “Intel bought Altera!”.

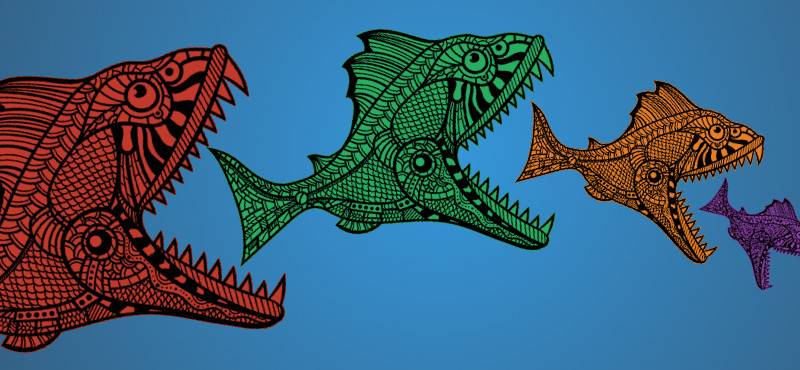

Indeed, FPGAs are an odd fish: by being so reconfigurable, they are superb in many situations. But most deployed hardware has no need for that ability, so the dedicated static hardware developed on the FPGA is probably what ends up being used (unless a stock FPGA that does the job works out cheaper than tooling up a new run of dedicated chips for your production run).

I think that with the future of edge computing and the IoT crap we are heading towards, FPGAs might actually have more deployment scenarios that make sense. And owning reconfigurable silicon to pair with an impressive general-purpose CPU makes sense to me for that alone.

But also, if you lean into it and support it properly, applications that want security can use their own hardware-level RNGs. That way, whatever flaw in randomness might be found in x or y mass-deployed RNG, you are not stuck with it, or even using it in the first place; a ‘worse’ RNG that is only used by you is going to attract less attention than the widely deployed ones, which is just another little bump in the road to cracking stuff. Or you can do on-the-fly encryption of any and all IO, disk reads, etc. that is invisible to the host OS. So you don’t need as much expensive tech support for security deployments across a company, and your users can remain ignorant to a large degree, because it just works like it does at home.

Yes, FPGAs have their downsides from a security point of view, but all of the ones I’m aware of require direct access to do anything with, so it’s really no worse than normal there: have the hardware in hand and you can get the content eventually.

The wonder of FPGAs is that, within their gate, memory, and I/O pin limits, they can do anything you might desire. So useful features just require the imagination to spot a way they can improve your systems, or an existing group of problems that is hard to solve with CPU/GPU cycles.

Reconfigurable logic in a CPU is “interesting”, but a full-on FPGA is likely to have little actual use. (Outside of areas where security is a concern, mainly the “management” system, crypto accelerators on FPGAs are generally slower than running code on a core. RNG is an interesting tidbit, but to be fair, an FPGA is not going to add much there compared to a software solution, since the logic fabric is likely not going to run freely but rather be referenced to some base clock or another.)

I remember back around 2010-2012, when I designed some mostly theoretical CPUs using reconfigurable logic, and frankly, it isn’t worth it from a performance standpoint. More cores, more memory, and more IO are a lot better in general as far as processing is concerned, almost regardless of the application.

Intel later bought Altera in 2015 and started producing CPUs with FPGAs in them, only to stop producing them 2-3 years later, citing the same reasons I had found myself half a decade earlier.

Cores are most of the time better than an FPGA.

This is due to the inherent downsides of FPGAs.

They need additional logic just to make reconfiguration possible. This means they contain more transistors in series for a given task, and since every gate in the fabric carries that extra logic, it can add a considerable amount of time to one’s processing. It adds not only time but also power demand. Not to mention the added challenge of getting good clock speeds out of one’s configuration, since control loops and ripple are a thing. So throughput can be lackluster.

The resources needed for such a logic array can be better spent elsewhere, mainly by building more cores, cache and IO, not to mention IPC to tie said resources together.

Outside of the CPU on the other hand, the picture can be a lot different.

FPGAs are generally cost-effective if one would only need very few ASICs (few as in fewer than a few tens of thousands). IO processing is one example. If one has, for example, a 100 Gb/s data stream from a sensor array that one wishes to prod at, it can be nice to have an FPGA doing the grunt work. (One can splurge on an ASIC, but if one only needs 1-1000 of these IO processors, an ASIC is going to cost hundreds of times more than even a 40-grand FPGA.)
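FPGA fabrics clock far below a CPU core, so they keep up with fast streams by going wide. A back-of-the-envelope check of what the 100 Gb/s example implies, assuming a 400 MHz fabric clock (an illustrative figure, not from the comment) and ignoring protocol overhead; the function names are hypothetical:

```python
import math


def datapath_bits(stream_bps: float, fabric_clock_hz: float) -> int:
    """Minimum bits per clock cycle needed to keep up with a data stream."""
    return math.ceil(stream_bps / fabric_clock_hz)


def next_power_of_two(n: int) -> int:
    """Round a bus width up to the next power of two (a common, not mandatory, choice)."""
    return 1 << (n - 1).bit_length()


# 100 Gb/s from the comment; the 400 MHz fabric clock is an assumption.
bits = datapath_bits(100e9, 400e6)
print(bits, next_power_of_two(bits))  # 250 256
```

At a 250 MHz clock the same stream would need a 400-bit datapath, which is why wide buses are the normal way FPGAs handle this kind of load.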

The same goes for other applications where an ASIC would make “sense” but the volume isn’t there to carry the cost. (For example, a single photomask can cost anywhere from a few thousand USD to well over a hundred thousand, depending on the manufacturing node and the design’s size and complexity. One typically needs 4-12 of them, plus a few at a larger node, as well as renting time in the factory, whose machines spit out 200-300 wafers an hour, each likely containing anywhere from 500 to 5000 chips. The setup time for the machinery is on the order of many hours, so that is a lot of chips to cover. ASICs get expensive unless one makes 100k+ of them.)
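The volume argument boils down to fixed (non-recurring engineering, NRE) costs versus per-unit costs. A minimal break-even sketch follows; the prices are hypothetical placeholders chosen only to land in the same range as the figures above, and the ASIC NRE here counts masks alone, ignoring design, verification, IP licensing, and fab setup.

```python
import math
from typing import Optional


def total_cost(units: int, nre: float, unit_cost: float) -> float:
    """One-off (non-recurring) cost plus per-unit cost."""
    return nre + units * unit_cost


def break_even_units(asic_nre: float, asic_unit: float,
                     fpga_nre: float, fpga_unit: float) -> Optional[int]:
    """Smallest volume at which the ASIC is no more expensive than the FPGA.

    Returns None if the ASIC's per-unit cost is not lower, since it then
    never catches up.
    """
    if fpga_unit <= asic_unit:
        return None
    return max(0, math.ceil((asic_nre - fpga_nre) / (fpga_unit - asic_unit)))


# Hypothetical figures: 10 masks at $100k each as the ASIC's only NRE,
# $5 per ASIC vs. $50 per FPGA, no FPGA NRE.
asic_nre = 10 * 100_000
volume = break_even_units(asic_nre, 5, 0, 50)
print(volume)  # 22223
```

With these made-up numbers the crossover lands around 22,000 units; adding the other NRE items or narrowing the per-unit gap pushes it toward the 100k+ mentioned above.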

If one desires a fully reconfigurable design for safety reasons, an FPGA makes sense yet again. For example, car manufacturers use a lot of FPGAs despite having order volumes in the hundreds of thousands of units annually, even millions at times. They could design and produce an ASIC with better performance and power efficiency, but FPGAs are fully reconfigurable and validated, giving the car maker the ability to easily amend any safety flaws in their design. If they make a semi-reconfigurable ASIC, there is a risk that they might not be able to fix a potential flaw, meaning that fixing it gets a lot more expensive, either through legal fines or through repairs and servicing. And if they go fully reconfigurable, they might as well just buy an off-the-shelf FPGA instead.

ASIC manufacturing also has one more downside: lead times. Getting fab time can be hard and is typically anywhere from 6 to 24 months out, sometimes longer… This alone can make an ASIC undesirable.

FPGAs, by contrast, can be ordered in fairly high volumes fairly trivially and are usually on one’s doorstep within a week or so.

But inside of a CPU, FPGAs aren’t interesting.

A CPU’s main job is to deliver cost- and power-effective computational performance.

An FPGA’s main job, on the other hand, is to replace low-volume glue logic where controlled response times and real-time processing are key. (An application area where multicore microcontrollers almost became a thing…)

As someone designing CPUs, I have to ask, why would you want an FPGA on your CPU? It makes no sense as far as resource allocation and end performance is concerned. It would make much more sense to sprinkle on a bunch of smaller cores for background tasks than it does to add an FPGA. (Just a handful of logic blocks in an FPGA uses more transistors than an Intel 8080….)

The reason you might want an FPGA on your CPU is kind of obvious, if you are not being deliberately obtuse. It’s properly reconfigurable and capable of tasks CPUs suck at. Yes, application-specific silicon outdoes an FPGA in many ways, except the big one: it can only do what it’s designed for. The FPGA bolted on the side of your CPU can do anything at all, and be told to do something new on the fly to fit the situation…

Sure, you get more out of the chip real estate by making it another CPU core or two, if and only if you are using the FPGA for CPU-like work. Make it do the tasks CPUs are really bad at and it will work far better, for example by being 100% reliable in function timing. This workstation might need to wait ‘ages’ to sneak a process onto a core if the system is working hard; faster cores, and more of them, don’t change the fact that CPUs need task schedulers and that lower- or equal-priority tasks have to wait for the current ones. The FPGA on your CPU, however, can for example run ALL the PID loops SIMULTANEOUSLY, keeping your machine working smooth as can be while it waits for updates from the CPU to change the target conditions… And being bolted directly to the CPU, the bandwidth between them can be much higher, and the latency much lower, than over just the PCI bus. Heck, the FPGA could actually be managing every bus on the CPU if you wished.
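To make the PID example concrete, the update below is the standard discrete PID form, written in Python purely for illustration. The class and function names are hypothetical, and it omits the output clamping and anti-windup a real motion controller would need.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PID:
    """Textbook discrete PID controller (no output clamping or anti-windup)."""
    kp: float
    ki: float
    kd: float
    setpoint: float = 0.0
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, measurement: float, dt: float) -> float:
        error = self.setpoint - measurement
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def tick(loops: List[PID], measurements: List[float], dt: float) -> List[float]:
    """Update every loop for one control period.

    In software this is a sequential loop; in an FPGA, each PID would be its
    own block of logic updated on the same clock edge, so adding loops would
    not delay the others.
    """
    return [loop.step(m, dt) for loop, m in zip(loops, measurements)]


axes = [PID(kp=2.0, ki=0.0, kd=0.0, setpoint=10.0), PID(kp=1.0, ki=0.0, kd=0.0)]
print(tick(axes, [4.0, 0.5], dt=0.01))  # [12.0, -0.5]

# The CPU only has to push new targets now and then.
axes[1].setpoint = 3.0
```

In fabric, the multiplies would typically map to DSP blocks and the values to fixed-point, but the per-loop arithmetic is the same.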

It also really doesn’t matter at all if the RNG is referenced to a base clock or two. It’s your FPGA, so run whatever algorithm you like; that one frame of reference an attacker might be sure of is of very little help in that regard. And as there are many clocks on a system, and you can always throw in some more if security is your goal, it’s not even going to be easy to know which one(s) to pick.
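As one illustration of running whatever algorithm you like, a linear-feedback shift register (LFSR) is a classic hardware-friendly pseudo-random generator. This Python model is only a sketch: an LFSR on its own is not cryptographically secure, and a real security design would pair a physical entropy source with a vetted generator. That pairing is an assumption here; the comment does not specify an algorithm.

```python
from typing import Iterator


def lfsr16(state: int) -> Iterator[int]:
    """Yield successive states of a 16-bit Fibonacci LFSR.

    Feedback polynomial x^16 + x^14 + x^13 + x^11 + 1, which is maximal-length:
    any non-zero seed cycles through all 65535 non-zero states.
    NOT cryptographically secure: it is linear and easy to predict from a
    short run of output.
    """
    if not 0 < state < 1 << 16:
        raise ValueError("seed must be a non-zero 16-bit value")
    while True:
        # XOR of the tapped bits; in hardware this is a couple of XOR gates.
        bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
        state = (state >> 1) | (bit << 15)
        yield state


gen = lfsr16(0xACE1)
print([hex(next(gen)) for _ in range(3)])
```

In fabric this is 16 flip-flops and a few XOR gates producing one new value per clock, which is why LFSRs show up so often in FPGA designs (test patterns, scramblers, dithering).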

Deploying a CPU core for security tasks is inherently inferior, as doing so requires your OS to be aware of it, so the attack surface is huge: the entire scope of the operating system and its programs. The OS need not even be aware of the ‘hardwired’ logic of the FPGA at all. Or it might, for example, know to instruct the FPGA to be in security mode x while doing y, but that does not mean it knows anything about how that mode works, meaning attackers need to know your specific FPGA logic. Even if they know via other methods that all Ethernet activity is encrypted and travels via the office server, where it is filtered, re-encrypted, and sent on, they can’t learn anything about the encryption from flaws in the OS, or even use normal access to the computer to transmit anything in a way they can read. Nothing is perfect, of course, but there is a lot you can do with an FPGA for security that is superior because it’s an FPGA (an ASIC can also be good, but it can’t be patched, only replaced).

Ever heard of little cores?

There is no reason a CPU can’t have a cluster of smaller cores for background tasks. These cores don’t need as many high-end features, and can implement a lot more instructions in microcode, should a background task ever need them.

No reason to have an FPGA for that.

Secondly, mapping logic to an FPGA is a fairly processing-intensive task in itself. And a stored bitstream would only be applicable to the specific FPGA fabric it is made for. That is, it might work fine on one CPU but not on another model.

Having constant response times for a task is a nice feature, but this would require a rather gigantic FPGA to be realistic in practice. Modern computers run far too many background tasks for FPGAs to be applicable in this regard. (One can make exceptions for specific background threads, but then one could just as well give them dedicated little cores.)

And when it comes to simultaneous throughput, this is generally not of great importance.

The main issue with FPGAs is that they are fully reconfigurable. This makes them slow and exceptionally resource-intensive, which in turn makes them rather ill-suited for use inside a CPU.

Handling various system buses via an FPGA is also an area where dedicated logic would have a strong upper hand. And bus management systems already have a fair bit of reconfigurability in them; they aren’t typically static monoliths. (Now, FPGAs have found rather widespread use as bus handlers in a lot of applications, but this is mainly because most of those applications exist in volumes too low for dedicated ASICs to be viable.)

FPGAs aren’t magic.

Building dedicated logic for the application is practically always better.

And one can build reconfigurability into such dedicated systems to “future proof” them without losing much performance or increasing the chip area by much at all, keeping the design much smaller than a competing FPGA solution. This is because in such semi-reconfigurable systems, we can put reconfigurability only where it logically isn’t redundant. Most of this, though, tends to get branded as firmware/microcode.

And yes, when designing such semi-reconfigurable systems, there is a risk of making a mistake. Having a tiny logic array for fixing such mistakes is still logical, but there is no need for the whole system to be made as an FPGA…

But to be fair, I generally don’t care too much if consumers are misinformed and stupid. It isn’t my money being wasted on hype. (Though I kind of get thrown under the bus anyway, due to companies making inappropriate investments in technology…)

I don’t think anybody is talking about whole systems on an FPGA here at all, just about highly reconfigurable, and thus performance- and feature-enhancing, co-processors.

I’d also say FPGAs are sort of like magic: being very much like any custom bit of dedicated silicon in functionality, but not trapped that way, is really, really damn cool. Yes, if you know you will only ever do x, adding a little dedicated core/co-processor that does only that is fine. It has security and functionality implications that might not be fine, but if it works right, it’s fine.

But an FPGA and a CPU bolted together is a really neat match, because it can do x, but also the other letters of every damn alphabet out there. So your clients can have the functionality they want, and only that functionality, which can easily work out more efficient than having lots of underused cores on the die.

Heck, some of the most popular dev boards I know of are ARM+FPGA. There’s no reason at all the same winning formula that makes ’em so good there won’t work as a more conventional high-power CPU replacement option.

One example of FPGA cards in use is the “anything I/O” cards from Mesanet, which are popular for generating step and direction pulses for LinuxCNC.
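Step/direction generation shows why an FPGA suits this job: pulse timing comes from a clocked counter, not from a scheduler. A common technique is the phase accumulator (also called a DDA or NCO), modeled below in Python. This is a generic illustration with hypothetical function names, not a description of how Mesa’s firmware actually implements its step generators, and it ignores the minimum pulse width and direction setup time real stepper drivers require.

```python
from typing import List, Tuple


def rate_for_frequency(step_hz: float, clock_hz: float, acc_bits: int = 32) -> int:
    """Accumulator increment that yields step_hz at the given clock."""
    return round(step_hz * (1 << acc_bits) / clock_hz)


def step_dir(rate: int, ticks: int, acc_bits: int = 32) -> Tuple[int, List[int]]:
    """Phase-accumulator (DDA) step generator.

    Every clock tick adds |rate| to an acc_bits-wide accumulator; each overflow
    emits one step pulse, so step frequency = clock * |rate| / 2**acc_bits.
    The sign of rate sets the direction output.
    """
    modulus = 1 << acc_bits
    if abs(rate) >= modulus:
        raise ValueError("rate must be smaller than the accumulator range")
    direction = 1 if rate >= 0 else 0
    acc, pulses = 0, []
    for _ in range(ticks):
        acc += abs(rate)
        if acc >= modulus:
            acc -= modulus
            pulses.append(1)
        else:
            pulses.append(0)
    return direction, pulses


# Hypothetical figures: 25 kHz steps from a 100 kHz tick (kept small so it runs fast).
rate = rate_for_frequency(25_000, 100_000)
direction, pulses = step_dir(rate, 8)
print(direction, pulses)  # 1 [0, 0, 0, 1, 0, 0, 0, 1]
```

A wide accumulator gives very fine frequency resolution, and in hardware the whole thing is one adder and one register per axis.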

After working with FPGAs professionally for the last few years I have to wonder. What would you want the FPGA to do for the home computer?

ASICs will fit the bill a lot better for hardware interfaces. You can’t change the connectors on the backplane no matter how many gates you change, so why waste money on an FPGA?

ASICs again fit the bill for crypto engines a lot better. FPGAs are extremely vulnerable to side-channel attacks because the gate logic basically screams out what it’s doing.

GPUs fit the bill lovely for anything graphics. Sure, FPGAs can do it too, but they are not designed for this role; they are meant for many parallel but different tasks at the same time. If you want many of the same task, use a GPU; it’s what they are for.

CPUs still reign supreme when it comes to versatility and adaptability. Sure, FPGAs are, well, field programmable, but it’s not the simple button press it is with CPUs.

I really would like to know where someone would want an FPGA to go. I would love to bring FPGAs to the home market but I just can’t find a place for them. They are great at high speed multi channel processing. So I guess a home movie system? Which CPUs can already handle just fine?

I can see a place for them where standards change, and throwing hardware away should be discouraged.

Considering that Intel stopped making CPUs with FPGAs in them due to the following reasons:

1. FPGAs are resource intensive.

2. FPGAs are slow.

3. Building more cache, cores, and IO with those resources gave overall better performance compared to what the FPGA could deliver in practically all FPGA “accelerated” tasks.

FPGAs in a CPU are largely pointless for those reasons.

The main area where FPGAs are actually good is when one has multiple independent inputs, where one wants the processing time to remain independent for each task handled by the device. (Since each task is handled by dedicated logic in the fabric.) FPGAs are also well suited for real time IO due to that reason.

This is rarely a requirement for applications running on a CPU. And if it is, then more cores is usually a sufficient solution regardless. (Especially together with more manual control over when/where threads run.)

The only thing I will disagree on is “FPGAs are slow.” To be clear, they are slow when used as a computer. When used for signal processing, or for parallel jobs that are all unique, FPGAs are the fastest thing on the market. But you need to carefully craft them for the job they fill.

Also, FPGAs are very useful in certain embedded applications. That’s why we have chips like the Zynq. Making a CPU out of LUTs is not very practical (although quite interesting), but if someone needs some weird peripheral, an FPGA can help a lot. Need something weird? Yep, you can have it. Need 20 UARTs for whatever reason? Yes, possible. High-speed serial? Yep. A LOT of GPIO? Also yes, especially if it is unusual and you need just a small quantity of final products (test equipment, specialized equipment, low-volume production).
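For a sense of how little logic one of those 20 UARTs needs, here is the transmit side modeled in Python, assuming the common 8N1 format (8 data bits, no parity, 1 stop bit). The function names are hypothetical.

```python
from typing import List


def uart_8n1_frame(byte: int) -> List[int]:
    """Line levels for one 8N1 UART frame: start bit, 8 data bits LSB first, stop bit."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must fit in 8 bits")
    data = [(byte >> i) & 1 for i in range(8)]  # UART sends the LSB first
    return [0] + data + [1]  # start bit is low, stop bit returns the line high


def serialize(byte: int, clock_hz: int, baud: int) -> List[int]:
    """Hold each frame bit for clock_hz // baud clock cycles, as a counter would."""
    cycles_per_bit = clock_hz // baud
    return [level for level in uart_8n1_frame(byte) for _ in range(cycles_per_bit)]


print(uart_8n1_frame(ord("A")))  # [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]

# "20 UARTs": in an FPGA each would be its own copy of this logic, running in parallel.
frames = {channel: uart_8n1_frame(0x30 + channel) for channel in range(20)}
```

Because `cycles_per_bit` is truncated, the actual baud rate drifts slightly from the target (100 MHz / 115200 gives 868 cycles, about 0.006% fast), which UART receivers tolerate easily.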

Well, and of course in places where you would normally use bare logic: triggers in oscilloscopes (the CERN detector application is basically just a massive, super-fast programmable trigger engine), and CPLDs used as small glue here and there. But yes, that is not tightly coupled with a CPU.

Also Cypress PSoC was/is an interesting concept.

“FPGAs are the fastest thing on the market.”

Ever heard of ASICs?

If built on a similar performing manufacturing node, then an FPGA is always slower, by the very nature of what FPGAs are.

One might argue that “ASICs aren’t on the market.”

But it isn’t all that hard to get in contact with an ASIC designer and have them draw up the chip based on one’s Verilog/VHDL code. Lead times, though, are typically long, and prices are steep. But if the application has a large enough volume, or requires high enough specifications, ASICs are a very logical option, at times even the only reasonable one.

FPGAs are always slow compared to what one can do with a manufacturing node.

When it comes to building CPUs, tossing an ASIC onto one isn’t actually all that beneficial.

I was surprised back when Intel bought Altera. I really thought the deal would be blocked by market regulators, considering the size of Intel and Altera.

Now, seeing AMD go for Xilinx is honestly surprising.

AMD is only a fraction of Intel’s size, but still, this means that the two largest FPGA vendors now also happen to be the two largest CPU vendors. Is this good for the market, or is it just a duopoly in the making?

Hopefully AMD will continue to embrace an open source stance as they have with AMDGPU drivers with their new acquisition.

That would really sell me on which FPGAs to buy in future…

A Zen 3, 4c/8t, 3 GHz SoC on a 7 nm die with an on-board Xilinx FPGA and a large shared L3 cache could make for a killer Pi-like multichip.

That would be the only way I’d ever consider Xilinx again.

Their toolchains have been notorious for being utter crap for over two decades now. Back when I was an undergraduate, one of the professors teaching a computer architecture course that covered logic design used Xilinx ISE.

His rationale for choosing it?

Students will have to work with shitty tools in the real world, so the course will be taught using the shittiest commercial tool the professor could find.

I wonder if this will do anything to the cost of FPGA dev tools. $4k for a fully unlocked license puts even the mid range stuff out of reach for any hobbyist.

AMD will open it, I’m sure. It’s kind of their thing.

This.

Chip Vendor : “Why don’t you use our new, cool parts?”

Also Chip Vendor : “Here’s a licensing fee that doesn’t generate any real income for us, but is going to suck for you every year when you have to spend three weeks writing emails to justify it to your management.”

Xilinx has been quite disappointing in the last 10 years or so. The hardware is great, but they went off chasing acceleration cards and haven’t really done much with their FPGAs for a while. Actually bothering to make Spartan7s is a positive step (although 2 generations back), but they’re still too expensive. They need to make low pin count, low cost FPGAs that don’t suck. They seem to have decided that’s not the market for them.

Software matters… development environments matter. Xilinx is my favorite FPGA to work with, but I pay a substantial price penalty to use the parts. My BOM costs are at least a little flexible for my products, so I can afford to use them in some places… but I’d like to use them more often than I do.

The fact that it’s very difficult to get any better pricing than 1-piece pricing is absolutely insane. I’ve got Avnet guys that can create quantity quotes, but it’s long lead time and not remotely like the quantity price curves of almost any other IC. They don’t get that much cheaper, even at 500-1000 qty. It’s crazy. I actually feel insulted every time I order Xilinx parts.

Altera almost seemed to stop cold on improvements to their FPGAs and software when they were bought. I’d hate to see Xilinx ignore their core FPGA market any more than they already have. We have already more or less lost Altera.

I mean, I get how everyone focuses on “FPGAs in the computer!!” thing, but… Intel didn’t. Why? Because it’s not the reason they bought Altera. FPGAs are one of the few cases where bleeding edge is basically always better (and it’s not “just pack mo’ bits”, like memory), so Xilinx/Altera have quite a bit of experience at bleeding edge nodes.

For instance, Xilinx had engineering samples of the 7 nm Versal out to customers in 2019, same year as AMD’s 7 nm designs came out (both through TSMC).

You’re not going to see FPGAs in the home computer. The synergy here is in silicon design, not products.

Not to mention that FPGAs make for a nice, stable income from the automotive industry.

(Car manufacturers love the reconfigurable nature of FPGAs compared to ASICs. One can be fixed very trivially; the other needs expensive servicing, that is, if a safety issue shows itself. Sometimes a safety issue can lead to rather severe fines, and even a ban on selling the vehicle. So it’s a rather nice feature, to say the least.)

To be fair, FPGAs also find use all over the place in other products: anywhere the volume isn’t sufficient for ASICs, or where ASIC lead times are too long for the product, an FPGA makes for a decent solution.

Though, I am frankly skeptical that it is good for the market in general that the two largest FPGA vendors are owned by the two largest CPU vendors. It’s a similar reason to why nVidia shouldn’t really buy ARM, to be fair. These giants should compete, not merge. Competition is needed on the market.

I am honestly surprised that regulators even let Intel buy Altera back in the day.

Again, you’re focusing on the products. The products aren’t that big a deal. It’s the skills and tools that matter, and I disagree it’s bad for the market.

Building nanometer chips is *hard*. Fragmenting that knowledge by pointless market sectors is counterproductive at this point.

It’s easy to say “more competition!!” but really, there’s not enough IC design talent on the planet to create another company to compete at these scales.

I hope you realize that there are currently a few larger semiconductor fabricators on the market.

To name a few that manufacture chips for others:

TSMC

GlobalFoundries

Samsung

UMC

Intel used to be on the list back in the day, and AMD too. (Though AMD spun off its manufacturing arm as GlobalFoundries a fair few years back.)

And this isn’t including companies that solely make their own products, like:

Infineon

ON Semiconductor

NXP

Texas Instruments

Microchip

STMicroelectronics

Intel

Etc….

There are a lot of semiconductor manufacturers in the world, all working with different technologies at different manufacturing nodes.

And my lists there aren’t remotely exhaustive. (Not even Wikipedia has a complete list; for example, it is missing Nordic Semiconductor (mainly RF), Silex Microsystems (MEMS), and a bunch of others.)

And smaller nodes aren’t inherently better. They are typically denser, but that isn’t the only thing of importance. Not that a modern “7 nm” node is all that much smaller than a “22 nm” node. (None of those are what it says on the tin; the actual features are huge in comparison…) There are a lot of other factors that play a major role in what a given manufacturer is capable of.

That AMD is buying Xilinx doesn’t directly affect what AMD can manufacture, but I’ll get into why below.

Both companies are fabless… They do interact with manufacturing, but generally the fab does a lot of the research into materials science and explores what can be done with a given manufacturing node, the resources it has at hand, and future resources it is looking into. This information and knowledge is generally not something customers like AMD, nVidia, Xilinx, etc. are privy to.

This is why fabs have their own transistor implementations. And their own power, RF, and analog solutions. Even memory fabrics, DDR controllers, PCIe drivers, and also a lot of other “high end” features for that matter.

If these manufacturing optimizations and considerations were let out of the bag, the customer could simply go to another manufacturer without really needing to respin the design (also known as the fab shooting itself in the foot).

Now, most fabs are “happy” to just take your finished photomasks and follow your recipe, but it will likely not be as good as if you let the fab design the photomasks based on your requirements.

Looking at TSMC currently producing both nVidia’s latest GPUs and AMD’s CPUs, seeing that Intel can’t keep up, and using that to paint broad strokes about the semiconductor industry in general is about as interesting as looking at NASCAR and saying that it is the pinnacle of motorsports technology. (Forgetting the fact that NASCAR is a stock car race…)

nVidia’s GPUs and AMD’s CPUs and even Intel’s processors are frankly mainly consumer oriented high performance computing products. A niche in the overall semiconductor manufacturing scene as far as manufacturing technology is concerned.

And Xilinx and Altera have historically just licensed IP from other manufacturers to include on their chips. Sometimes that IP is from the fab itself, other times from ASIC design houses, or even universities at times. They do of course have their own in-house IP as well.

In my view, it is more the system integration know-how of the FPGA designers at Xilinx that is of interest. After all, FPGAs have a lot of high speed interconnects, though how much of value this is for AMD of all companies is a dubious question. FPGAs tend to be monolithic in their implementation after all. And with a 250 nm interconnect layer, we can have 2000 traces per mm per interconnect layer, i.e., exceptionally wide buses to tie our device together. That is rather uninteresting as far as a multi-chip design is concerned.
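The 2000-traces-per-mm figure follows if “250 nm” is read as both the wire width w and the spacing s between wires, giving a 500 nm pitch. That reading is an assumption; the comment does not define the term.

```latex
N = \frac{1\ \text{mm}}{w + s}
  = \frac{10^{6}\ \text{nm}}{250\ \text{nm} + 250\ \text{nm}}
  = 2000\ \text{traces per mm per layer}
```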

“After all, FPGAs have a lot of high speed interconnects, though how much of value this is for AMD of all companies is a dubious question. FPGAs tend to be monolithic in their implementation after all.”

We’re thinking CPUs. AMD may be thinking GPUs.

https://www.anandtech.com/show/16155/imagination-announces-bseries-gpu-ip-scaling-up-with-multigpu

Ostracus

There is a major difference between the interconnect in an FPGA and the interconnect in a GPU or CPU.

In an FPGA, the interconnect fabric is statically mapped, there is no routing, no sharing of bus resources (unless one uses some of the logic fabric to implement such a feature), in short, the interconnect fabric in an FPGA is like a patch panel.

While the interconnects in CPUs and GPUs are much more dynamic in how they are shared between multiple resources. It is much more comparable to a network switch than just a patch panel.
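The patch-panel versus network-switch contrast can be sketched in a few lines: a static lookup that never changes at run time, next to a round-robin arbiter that decides cycle by cycle who gets a shared bus. Both are toy models with hypothetical names; real CPU and GPU fabrics layer packets, credits, and quality-of-service on top of arbitration.

```python
from typing import Dict, List, Optional

# Patch-panel style (FPGA routing): fixed when the configuration is loaded.
# Endpoint names are hypothetical.
STATIC_ROUTES: Dict[str, str] = {"adc0": "fir0", "adc1": "fir1"}


def static_route(source: str) -> str:
    """Every source owns a dedicated path; nothing is shared or arbitrated."""
    return STATIC_ROUTES[source]


class RoundRobinArbiter:
    """Switch style (shared CPU/GPU interconnect): one grant per cycle, rotating priority."""

    def __init__(self, n: int) -> None:
        self.n = n
        self.last = n - 1  # so requester 0 has priority first

    def grant(self, requests: List[bool]) -> Optional[int]:
        # Search starting just after the last winner, wrapping around.
        for offset in range(1, self.n + 1):
            candidate = (self.last + offset) % self.n
            if requests[candidate]:
                self.last = candidate
                return candidate
        return None  # nobody is requesting the bus this cycle


arbiter = RoundRobinArbiter(3)
print([arbiter.grant([True, True, True]) for _ in range(4)])  # [0, 1, 2, 0]
print(static_route("adc1"))  # fir1
```

The static version costs wires but no decision logic; the arbiter saves wires by sharing them, at the price of requesters sometimes having to wait.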

FPGAs simply toss tons of resources at the problem, so that everything can practically be given its own path, its own set of buses. (The added cost of doing this is not gigantic compared to spinning a custom ASIC for low volume products, ASICs are stupendously expensive in low volumes so it isn’t hard to compete with.)

AMD frankly already knows this. Just look at their rather basic caching system, where instead of developing a more efficient caching algorithm they just toss tens of MB of L3 at the problem. (A cruder algorithm has a lower upfront cost since it needs less R&D, but more cache is more expensive in production. I.e., at larger volumes it isn’t cost-effective, neither for AMD nor their customers.)

I.e., not all interconnect fabrics are made the same.

An interconnect fabric is simply a set of connections between multiple points. How it is implemented can vary wildly from one application to another.

The interconnect fabric in a Xeon isn’t even the same as the fabric in an i7/i9, let alone Ryzen’s Infinity Fabric, PCI/PCIe, CXL, NVLink/SLI, not to mention Ethernet/IP for that matter. They are all interconnects, but they are all different.

“I hope you realize that there currently is a few larger semiconductor fabricators on the market.”

Those are *fab* companies. Not *design* companies. Isn’t the difference clear? TSMC gives you design rules on how to build something, but doesn’t tell you *what* to build.

Yes, there is plenty of stock stuff at the fab house, but a *lot* of Xilinx’s stuff ends up being significantly better simply because they marry it to a programmable logic core and live-calibrate everything. That’s how you get the world-leading gigasample ADCs/DACs they have, as well as the high-speed transceivers and even the memory cores. That’s the “design” bits I’m talking about. As in, “oh, these end up with process variations, so you measure and calibrate them live.”

But *even ignoring all of that*, I’m simply talking about the *ability* to coordinate and design a large chip at nanometer scale. Including the tech resources and expertise for people to actually be able to work on them. It’s not like it’s 1 guy sitting in his basement designing these things anymore.

“These giants should compete, not merge. Competition is needed on the market.”

Has any company ever said, “man I need me some more competition”?

I remember many, many years ago when AMD made the Am2900 bit-slice chips. Wire up a few of those, and you could build a 16-bit minicomputer. All but forgotten now, but AMD are getting back into making chips that will let you make a CPU — and not an x86 CPU!

https://en.wikipedia.org/wiki/AMD_Am2900
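For anyone who never met bit-slice parts: the trick is that each chip handles a few bits of the word (the Am2901 was a 4-bit slice), and the carry from one slice feeds the next. A Python toy of that chaining for addition only, with function names of my own invention; it uses plain ripple carry, whereas faster designs added a separate carry-lookahead chip:

```python
# Toy model of bit-slicing: a 4-bit ALU slice (only ADD modelled here) whose
# carry-out feeds the next slice's carry-in. Four slices make a 16-bit adder.
# Function names are illustrative, not from any real part's documentation.

SLICE_BITS = 4
SLICE_MASK = (1 << SLICE_BITS) - 1

def alu_slice_add(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add the low 4 bits of a and b plus carry; return (4-bit sum, carry_out)."""
    total = (a & SLICE_MASK) + (b & SLICE_MASK) + carry_in
    return total & SLICE_MASK, total >> SLICE_BITS

def sliced_add(a: int, b: int, n_slices: int = 4) -> tuple[int, int]:
    """Ripple the carry through n_slices slices, least significant slice first."""
    result, carry = 0, 0
    for i in range(n_slices):
        shift = i * SLICE_BITS
        nibble, carry = alu_slice_add(a >> shift, b >> shift, carry)
        result |= nibble << shift
    return result, carry

s, c = sliced_add(0x1234, 0x0FFF)
print(hex(s), c)  # 0x2233 0
s, c = sliced_add(0xFFFF, 0x0001)
print(hex(s), c)  # 0x0 1  (16-bit overflow shows up as the final carry)
```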

I see lots of people asking what FPGAs can do for AMD, but (after a quick scan, maybe I missed someone) no one is talking about what AMD can do for FPGAs. Imagine an FPGA that had power requirements and speed similar to a CPU. I’d like one of those.

> But more importantly because AMD’s main competitor, Intel, purchased the other FPGA giant Altera back in 2015.

I was expecting to read:

But more importantly because AMD’s main competitor, Nvidia, agreed to purchase Arm just this year.

Deals of this size take years of negotiations. I wouldn’t be surprised to find that AMD started talking to Xilinx the day they found out about Intel buying Altera.

The real advantage I see in FPGAs is everything happening at once. If you have a task that requires 5000 things to happen at exactly the same time, either synchronously or asynchronously, it’s FPGAs all the way: you cannot beat that speed with CPUs, or even GPUs, in terms of power and price.
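To put the “everything at once” point in numbers, here is a rough latency-only model. The lane counts and clock rates are hypothetical placeholders, and it says nothing about the power and price side of the argument; it only shows that dedicating one circuit per operation finishes all 5000 in a single cycle, where a narrower machine has to loop:

```python
import math

# Illustrative latency model: n_ops independent operations, each taking one clock
# cycle, with `lanes` of them able to run per cycle. All figures are hypothetical.

def time_for_ops(n_ops: int, lanes: int, clock_hz: float) -> float:
    """Seconds to finish n_ops when `lanes` of them can run in each clock cycle."""
    cycles = math.ceil(n_ops / lanes)
    return cycles / clock_hz

N = 5000
fpga = time_for_ops(N, lanes=5000, clock_hz=200e6)  # one dedicated circuit per operation
cpu = time_for_ops(N, lanes=32, clock_hz=4e9)       # e.g. a few cores times SIMD width
print(f"FPGA: {fpga * 1e9:.1f} ns, CPU: {cpu * 1e9:.1f} ns")
```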

Now the question in my mind is what Intel and AMD see as the use case for these in 8 to 10 years’ time, when gate counts will be much, much higher than they are now. The only killer application that I can think of is artificial neural networks.

And you win the internet for spotting the connection between FPGA and AI.

Nvidia is AI crazy.

Intel talks about it a fair bit too.

AMD just wants a ticket on the hype train as well.

After all, how else is AMD going to sell CPUs to the AI-centric Amazon Web Services?

I gave up long ago on trying to understand how modern CPUs work (much more than a Z80 does not fit in my head), but I’m kind of curious about how much overlap there is between the internals of an FPGA and the microcode in a processor.

I think I’ve seen a few patches for the microcode fly by, so apparently it is changeable in some way.

I can also imagine that during development of next-generation processors, some kind of hybrid between existing processor blocks and FPGA logic may be useful as an intermediate step. It’s probably possible to emulate the “next generation” processor in software, but that may be too slow to run extensive test suites or benchmarks.

Microcode isn’t configurable logic. (It is just boring memory.)

You can think of microcode as a library of functions.

When the program calls an instruction whose opcode is linked to microcode, the decoder goes to the memory housing the microcode and reads out the sequence of micro-operations corresponding to that instruction, then executes them to get the desired result.
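A toy illustration of “microcode as a library of functions”: the ROM is a lookup table from opcode to a list of micro-operations that the sequencer steps through. The opcodes and micro-ops are invented for the example; real microcode words are wide bundles of control signals, not tuples like these.

```python
# Toy microcoded decoder: the "microcode ROM" is just a lookup table from opcode
# to a list of micro-operations. Opcodes and micro-ops are invented for illustration.

MICROCODE_ROM = {
    "INC": [("add_imm", "A", 1)],
    "DOUBLE": [("add_reg", "A", "A")],
    "SWAP": [("mov", "T", "A"), ("mov", "A", "B"), ("mov", "B", "T")],
}

def execute(opcode: str, regs: dict[str, int]) -> dict[str, int]:
    """Look up the opcode's micro-op sequence and run it step by step."""
    for op, dst, src in MICROCODE_ROM[opcode]:  # read micro-ops out of "ROM"
        if op == "add_imm":
            regs[dst] += src
        elif op == "add_reg":
            regs[dst] += regs[src]
        elif op == "mov":
            regs[dst] = regs[src]
        else:
            raise ValueError(f"unknown micro-op {op}")
    return regs

regs = {"A": 3, "B": 7, "T": 0}
execute("SWAP", regs)
print(regs)  # {'A': 7, 'B': 3, 'T': 3}
```

In this toy picture, a microcode patch just amounts to overwriting an entry in the table.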

A simple example is multiplication.

A microcode routine for it could just be a loop adding a number to the accumulator X times.

(Or get more flamboyant and use bit shifting to do it much faster.)
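Both multiplication approaches, sketched in Python as they might look in microcode using only add, shift and bit-test steps (unsigned operands assumed). The step counter shows why the shift-and-add version is “much faster”: it loops once per bit of the multiplier instead of once per unit of its value.

```python
# Two microcode-style multiply routines for unsigned integers.
# Both only use add, shift and bit-test operations, as a simple datapath would.

def multiply_repeated_add(x: int, y: int) -> tuple[int, int]:
    """Add x into the accumulator y times. Returns (product, loop iterations)."""
    acc, steps = 0, 0
    for _ in range(y):
        acc += x
        steps += 1
    return acc, steps

def multiply_shift_add(x: int, y: int) -> tuple[int, int]:
    """Shift-and-add: one iteration per bit of y. Returns (product, loop iterations)."""
    acc, steps = 0, 0
    while y:
        if y & 1:     # current low bit of the multiplier is set
            acc += x  # so add the (shifted) multiplicand
        x <<= 1       # multiplicand moves up one bit position
        y >>= 1       # examine the next multiplier bit
        steps += 1
    return acc, steps

print(multiply_repeated_add(123, 1000))  # (123000, 1000)
print(multiply_shift_add(123, 1000))     # (123000, 10)
```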

Intel back in the day built one of the world’s most powerful supercomputers to do CPU simulation.

It is honestly not all that crazy to do it in software, and it is way more true to life than FPGAs.

Since FPGAs have the following shortcomings:

1. They run far below the clock speeds of the final product.

2. They lack the gate density of the final product.

3. They also consume way more power, due to having a lot of interconnect logic that a CPU wouldn’t have. (CPUs tend not to be reconfigurable at every single logic gate.)

Having done a bit of CPU simulation myself, I can say that one doesn’t actually need all that much computing performance to model another CPU.

Obviously it won’t run in real time; how close to real time it gets will vary with how much one simulates and how optimized one’s simulator is. (Typically it will be a custom-made one. I wrote my own, for example; it’s really crude.)

The main limit is the system memory of the computer doing the simulation, since one needs a lot of it to keep track of all the logic states, not to mention the all-important logging. (Don’t log all transitions, by the way; use triggers and log the state of the triggers.)
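A minimal sketch of trigger-based logging, assuming a cycle-based simulator and a made-up 4-bit counter as the “circuit”: the state changes every cycle, but only cycles where a trigger condition holds get written to the log.

```python
from typing import Callable

# Hypothetical cycle-based simulation of a tiny circuit: a 4-bit counter with an
# overflow flag. Only cycles where a trigger fires are logged, not every transition.

State = dict[str, int]

def simulate(cycles: int,
             triggers: dict[str, Callable[[State], bool]]) -> list[tuple[int, str, State]]:
    state: State = {"count": 0, "overflow": 0}
    log = []
    for cycle in range(cycles):
        # Next-state logic, evaluated once per clock edge.
        nxt = (state["count"] + 1) & 0xF
        state = {"count": nxt, "overflow": int(nxt == 0)}
        # Check each trigger; only matching cycles are written to the log.
        for name, condition in triggers.items():
            if condition(state):
                log.append((cycle, name, dict(state)))
    return log

triggers = {"wrap": lambda s: s["overflow"] == 1}
for entry in simulate(40, triggers):
    print(entry)
```

Over 40 cycles this logs 2 entries (the wraps at cycles 15 and 31) instead of 40 full state dumps.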

How deeply one wants to simulate is the other limitation. More accuracy = more number crunching and more memory required.

Like do we want to simulate everything?

Propagation delay, crosstalk, wire resistance, switching response, parasitic capacitance, etc for the full chip…

Or do we characterize smaller building blocks and set up triggers for when a block has a potential crash?

Power consumption, crosstalk and propagation delay can be characterized as well. But then it is also a question of how fine a time step one takes, etc.
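One way to sidestep choosing a fixed time step is event-driven simulation of characterized blocks: each block is reduced to a logic function plus a propagation delay, and the simulator jumps from one scheduled event to the next. A Python sketch; the block names and picosecond delays are hypothetical:

```python
import heapq

# Hypothetical event-driven simulation: each block is characterized only by a
# logic function and a propagation delay in picoseconds. Instead of stepping
# time in fixed increments, the simulator jumps straight to the next event.

BLOCKS = {
    # name: (function of input value, delay_ps, downstream block or None)
    "inv1": (lambda v: 1 - v, 15, "inv2"),
    "inv2": (lambda v: 1 - v, 15, "buf"),
    "buf": (lambda v: v, 40, None),
}

def run(input_value: int, first_block: str = "inv1") -> list[tuple[int, str, int]]:
    events = [(0, first_block, input_value)]  # (time_ps, block getting a new input, value)
    trace = []
    while events:
        t, name, value = heapq.heappop(events)  # earliest pending event first
        func, delay, downstream = BLOCKS[name]
        out = func(value)
        trace.append((t + delay, name, out))    # block output settles after its delay
        if downstream is not None:
            heapq.heappush(events, (t + delay, downstream, out))
    return trace

for t, name, out in run(1):
    print(f"{t:4d} ps  {name} -> {out}")
```

Running it with a 1 on the input shows the output settling at 70 ps (15 + 15 + 40) without simulating any of the idle time in between.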

In the end, there are many ways to skin a cat.

But CPU simulation on a CPU isn’t all that impractical.

With a small computer cluster it will likely be easier, although node-to-node bandwidth might start eating into performance. (Thankfully, 100 Gb/s network cards are a thing.) Not that one should expect to get close to real-time performance regardless.

A simple simulation mainly dealing with logic can get fairly close to real time, though, even for fairly complicated processors. (Though “close” is a relative term: a benchmark that normally needs an hour would likely take all week, if not more, so you don’t want to run one.)

And it is nice to save a key frame (snapshot) of the system in a booted state; then one doesn’t have to reboot it for each simulation when stress testing things post-boot.
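The key-frame trick in miniature: deep-copy the simulator state once after the (expensive) boot, then restore that copy before each test. ToySim is a hypothetical stand-in for a real simulator’s state:

```python
import copy

# Hypothetical simulator state, saved once after "boot" and restored before each
# test, so the expensive boot sequence is only simulated a single time.

class ToySim:
    def __init__(self):
        self.cycle = 0
        self.regs = {"pc": 0, "sp": 0}
        self.memory = [0] * 16

    def step(self, n: int = 1) -> None:
        # Stand-in for real work: advance the program counter each cycle.
        for _ in range(n):
            self.regs["pc"] = (self.regs["pc"] + 1) % len(self.memory)
            self.cycle += 1

    def snapshot(self) -> dict:
        return copy.deepcopy(vars(self))  # deep copy so later writes don't leak in

    def restore(self, snap: dict) -> None:
        vars(self).update(copy.deepcopy(snap))  # copy again so the snapshot stays reusable

sim = ToySim()
sim.step(1000)             # "boot", simulated once
booted = sim.snapshot()

results = []
for test_len in (10, 20):
    sim.restore(booted)    # start every test from the booted state
    sim.step(test_len)
    results.append(sim.cycle)
print(results)  # [1010, 1020]
```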

I’ve heard Nvidia uses FPGAs as part of its GPU development.

An FPGA can typically be used to test different logic implementations at much greater speed than what a software simulation tool is able to.

I.e., one can test whether one logic solution is better than another in terms of work done per cycle over billions of cycles.

Even if the FPGA implementation runs at only 10-100 MHz, completing a 10-billion-cycle benchmark should only take 100-1000 seconds, in other words roughly 2-17 minutes, plus a little more for compiling the design and transferring the binary to the FPGA. (Any design alterations come on top of that, but those would be needed for a software simulation regardless, and should therefore not be counted.)

A software simulation on the other hand might take a couple of hours for a similar task.

And considering that we likely want to test a few different implementations, the savings do indeed scale.

If we only were to shave off 5 minutes from the simulation time, but have 50 simulations to do, then that is over 4 hours of saved time.
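The arithmetic above, spelled out. This just reproduces the quoted numbers (10 billion cycles at 10-100 MHz, 5 minutes saved on each of 50 runs) and ignores compile and bitstream-transfer time:

```python
# Back-of-envelope runtimes for a 10-billion-cycle benchmark on an FPGA prototype
# clocked at 10-100 MHz, plus the time saved across a batch of runs.

def runtime_s(cycles: float, clock_hz: float) -> float:
    return cycles / clock_hz

CYCLES = 10e9
for f_mhz in (100, 10):
    t = runtime_s(CYCLES, f_mhz * 1e6)
    print(f"{f_mhz:>3} MHz: {t:6.0f} s = {t / 60:4.1f} min")

saved_min = 5 * 50  # 5 minutes saved per run, 50 runs
print(f"saved: {saved_min} min = {saved_min / 60:.2f} h")
```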

This can speed up development rather noticeably, but large FPGAs are expensive, so it can be cost-prohibitive, especially since one will need a computing farm regardless for final simulation. (One can, though, just rent time at AWS or another cloud computing provider.)

No current FPGA can simulate the final product, nor be used to characterize the high-speed limitations of the final product. Even the world’s largest FPGA is tiny compared to the number of gates needed to simulate even one of Nvidia’s smallest current GPUs. (And the maximum fabric clock speed is also a bit “slow” compared to what their GPUs are able to reach.)

Not that the FPGA’s limitations are indicative of an actual manufacturing node’s limitations. I.e., FPGAs can simulate logic-level design; silicon-level behavior is not something they can capture. (So signal integrity, crosstalk, power plane stability, power consumption, transient response, etc. are all outside of what an FPGA can tell you.)

But in the end, software simulation is usually fast enough in most cases.

After all, it is rare that one needs billions of cycles’ worth of simulation to get useful data. So most software simulations can give a result before one has even finished compiling the design for the FPGA, let alone transferred the binary to it or logged the results from it. (Though longer simulations give the FPGA the upper hand. (Unless one is simulating something larger that has a longer compile time…))

Wasn’t AMD in the market before?

With medium-scale PALs (which they acquired from the original MMI, Monolithic Memories Inc.), and then the larger-scale MACH family parts. Both families had rather decent specs. Then two decades ago AMD pissed off and stranded their customers when they dumped their programmable logic offerings and sold them to Lattice. This allowed AMD to concentrate on CPUs, which they have done quite well with. CPUs and GPUs.

Lattice had some decent parts (at an engineering level) but, like many tech companies, was run by a bunch of assholes (at the corporate level).

I worry that in a few years AMD will get fickle again and dump or spin off their programmable logic division, again, leaving customers high and dry, again.

Niche use for FPGAs: accurate, reprogrammable, retro-gaming console emulation:

https://github.com/MiSTer-devel/Main_MiSTer/wiki