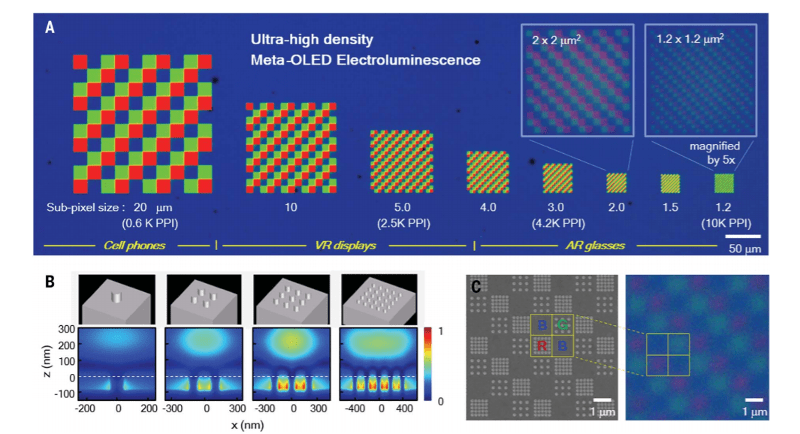

A good smartphone now will have about 500 pixels per inch (PPI) on its screen. Even the best phones we could find clock in at just over 800 PPI. But Stanford researchers have a way to make displays with more than 10,000 pixels per inch using technology borrowed from solar panel research.

Of course, that might be overkill on a six-inch phone screen, but for larger displays and close up displays like those used for virtual reality, it could be a game-changer. Your brain is good at editing it out, but in a typical VR headset, you can easily see the pixels from the display even at the highest PPI resolutions available. Worse, you can see the gaps between pixels which give a screen door-like effect. But with a density of 10,000 PPI it would be very difficult to see individual pixels, assuming you can drive that many dots.

Working with Samsung, the team added an extra layer to the bottom of a conventional OLED screen. The new layer — an optical metasurface — has very tiny corrugated mirrors that reflect white light into red, green, and blue components allowing the high density of pixels. A normal OLED panel uses white OLEDs also, but uses a filter to create the different pixel colors.

If you read the Stanford paper, you’ll learn about Fabry-Pérot cavities and the Purcell effect. We are sure if you spend all day thinking about OLED displays, this is stuff that makes sense to you, but we had to look up quite a few things on Wikipedia.

While this is not in production, the team did create a proof-of-concept device that not only had dense pixel packing, but also high color purity and twice the efficiency of a conventional display. Samsung is investigating scaling the technique to production.

We know you can make OLEDs at home, but probably not at that density. Of course, for serious organic lighting, why not consider a pickle?

The Sony A7S3 camera has an EVF with 9,437,184 dots at a size of 0.6″. So it’s already there, or am I missing something?

(3,145,728 pixels OLED to be precise)

Sensing light vs creating light at high intensity. I don’t think the challenge is just the size and packing of the elements but the power delivery and thermal management too.

Does pixel power density scale that way? I figure with more pixels they each use less power, but of course the relationship likely isn’t perfectly linear. Anyone know the math on that?

For any display, the power required is the desired intensity x the area of the display x the angular viewing area / efficiency. The number of pixels literally does not matter.

Now, the power it takes to calculate the intensity for each pixel? That scales linearly with the number of pixels. Sort of, assuming that each pixel takes the same arithmetic, and you don’t have some savings with calculations that apply to more than one pixel.
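The relationship described above can be sketched in a few lines of Python. This is only an illustration: the function names and every example value (radiance, panel area, solid angle, efficiency, operations per pixel) are assumptions, and "intensity" is treated here as radiance in watts per square metre per steradian.

```python
import math

def display_power_watts(radiance_w_m2_sr, area_m2, solid_angle_sr, efficiency):
    """Power = intensity x area x angular viewing area / efficiency (formula above)."""
    return radiance_w_m2_sr * area_m2 * solid_angle_sr / efficiency

def render_ops(pixels, ops_per_pixel):
    """Compute cost, assuming every pixel needs the same amount of arithmetic."""
    return pixels * ops_per_pixel

# Hypothetical panel: 0.01 m^2, emitting into an assumed pi steradians, 20% efficient.
total_w = display_power_watts(10.0, 0.01, math.pi, 0.20)

# Pixel count never enters the total; it only sets each pixel's share of it,
# while the rendering work grows linearly with the number of pixels.
for pixels in (1_000_000, 100_000_000):
    per_pixel_w = total_w / pixels
    ops = render_ops(pixels, ops_per_pixel=50)
    print(f"{pixels:>11,} px: {total_w:.3f} W total, {per_pixel_w:.2e} W/px, {ops:,} ops/frame")
```

So, under this model, each pixel does use less power as the count goes up, in exact inverse proportion, while the panel total stays put.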

I’m talking about the EVF, not the sensor.

OLED is a display technology as far as I’m concerned.

That is the entire field (array) of pixels, not a linear measurement like PPI is. 10,000 PPI is a field of 100,000,000 pixels per square inch.

And while one can “clock in” a speed, one cannot “clock in” a density.

That’s right, a 10,000 ppi display of any appreciable size would have a ridiculous pixel count. But if you’re saying that they must mean 10,000 pixels per square inch, that is almost as ridiculous, since that would be only 100 pixels per linear inch. Do the math.

That 3Mpix OLED display will have a linear density of about 2300 ppi, assuming a 1.6 aspect ratio. Pretty darned good, but still a long way from 10000 ppi: Your pixels are 18 times bigger (in acreage).
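For anyone who wants to redo this kind of estimate, here is a rough Python sketch that derives linear density from a total pixel count, an aspect ratio, and a diagonal. The function names are made up for illustration, and the inputs are the figures quoted in this thread (3,145,728 pixels, a 1.6 aspect ratio, a 0.6″ diagonal), not measured values.

```python
import math

def linear_ppi(total_pixels, aspect_ratio, diagonal_in):
    """Pixels per linear inch for a panel with the given pixel count and diagonal."""
    height_px = math.sqrt(total_pixels / aspect_ratio)
    width_px = aspect_ratio * height_px
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in

def pixel_area_ratio(coarse_ppi, fine_ppi):
    """How many times larger (in area) a pixel is at coarse_ppi than at fine_ppi."""
    return (fine_ppi / coarse_ppi) ** 2

# Inputs quoted in the comments above; treat them as assumptions.
evf_ppi = linear_ppi(3_145_728, 1.6, 0.6)
ratio = pixel_area_ratio(evf_ppi, 10_000)
print(f"EVF: about {evf_ppi:.0f} ppi, pixel area {ratio:.1f}x that of a 10,000 ppi panel")
```

With a 0.6″ diagonal this comes out nearer 4,400 ppi and an area ratio of about 5, so the 2300 ppi and 18x figures above would correspond to a larger assumed panel. Either way the EVF is still well short of 10,000 ppi.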

Yeah, you’re missing the glaringly huge difference; one is a CMOS sensor, the other is an OLED display.

He’s talking about an electronic viewfinder, not the camera sensor…

They said EVF. Electronic viewfinder. The small screen inside the eyepiece. Why do people seem so confused by this?

Sorry, but no.

I’m talking about the EVF display inside the camera.

I’m describing the OLED screen, please read again.

Ughhh, the EVF is a display, not the sensor.

Apparently the Sony’s EVF has ~10M subpixels, and I assume the research in the post is talking about RGB pixels. Also, 10,000 x 10,000 = 100,000,000, which is an order of magnitude larger than said EVF.

They mean 10,000 pixels per inch per line. So, if the display is 1 inch by 1 inch, that’s 100,000,000 (100 Million) pixels.

a little more than 5 square inches of this screen can equal human eye resolution.

Just use your phone to record video showing on a 5K display. Back off until the display fills about 1/2 inch on the phone screen. Done! :-)

“A normal OLED panel uses white OLEDs also, but uses a filter to create the different pixel colors.”

I think you’re confused. OLEDs have picture elements that are self-illuminating, and use PHOSPHORS that generate the different colors. They do not use white OLEDs with filters. You may be thinking of what are sometimes called “LED” displays, which are actually LCD panels with backlights that use white LEDs. Whole different technology.

And now I see that there ARE SOME displays that use white OLEDs with color filters. However, this is not what is used in the “normal” OLED displays you mention.

The largest OLED display manufacturer (LG) uses white OLEDs with color filters. Even better: they are the largest OLED display manufacturer because of that technology: they have a patent that protects it from use by others (i.e. Samsung). Using colored OLEDs is possible, but at the current state of the technology it ages worse, as the blue pixels degrade a lot faster than the reds and greens.

So are these made up of three white OLED emitters, each with a passive colour filter (R, G and B), to form a pixel? I hadn’t come across that sort of OLED before.

Even better: they use four pixels: red, blue, green and white. Pretty old but nevertheless interesting articles about this:

https://www.cnet.com/news/lg-says-white-oled-gives-it-ten-years-on-tv-competition/

https://www.cnet.com/news/what-is-oled-tv/

It’s more like they’re blue OLEDs with secondary red and green emitters depending on the pixel, so you only need to filter out the blue. When the blue OLED starts to fade, the secondary emitter is also producing less light automatically, so the color balance doesn’t shift.

If it was literally white pixels all around, then you’d waste 2/3rds of the light output filtering out two of the three color bands each time, whereas the secondary emitter converts most of the blue light into the other colors.

I KNOW. I was disappointed to learn that this is now a thing. I don’t know how efficient the EL (cough, “organic LED”) emitters are, but a white emitter with a filter in front of it is certainly going to be less efficient than an emitter that puts out the right color to begin with. So whatever efficiency advantage OLED may have had goes right out the window. If the industry takes this as The One True Way to make OLED panels, about the only remaining advantage over LCD with LED backlight is the improved contrast ratio. In fact, white OLED with filters is a step backward from newer LCD backlight technologies that, instead of using white LEDs and three color filters, use blue LEDs and red and green phosphors to down-convert that, eliminating the filters altogether.

The micro displays I’ve been working with are active matrix color filter OLEDs made by Sony with a 3.3 μm RGB × 9.9 μm dot pitch. They’ve been around for quite some time (before the modern VR industry was born). Kopin also makes some nice displays of the same type with a 2.88 x 8.64 μm color dot pitch in a .99″ diagonal, 2048 by 2048 package that I’d like to play with. Apparently LG’s patent must have some peculiar specifics.

The article’s subject is orders of magnitude beyond these.

“for larger displays and close up displays like those used for virtual reality, it could be a game-changer.” For larger displays? Are you sure that’s what you meant to say? Larger displays have the LEAST need for higher pixel densities.

It would be amusing to lay a microscope slide on a 10000 DPI display and point a camera at it and have a computer sweep a peculiar pattern on the display, then have the camera take many pics, then have a computer do CAT scan mathematics to generate a 3-d scan of the sample on the microscope slide, then look at the 3-d scan.

Yeah, the sample is mostly 2-d by nature of being thin, but the CAT scan VR model would be in perfect focus through the entire sample, not only in focus in a very narrow focal plane at any distance.

Essentially given enough computer power you’d have a microscope that doesn’t require focusing and can make full 3-d models of whatever little bug or cell is on the slide.

Cool idea for structured light scanning with microscope

Structured light is so cool

Great idea!

“Of course, that might be overkill on a six-inch phone screen, but for larger displays and close up displays like those used for virtual reality, it could be a game-changer.”

On the contrary, hi-res is required for a small display viewed up close, not for a large display viewed from a distance. This is all about the angular resolution of the eye. There is no point in a display having a higher resolution than the human eye.
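The angular-resolution argument can be put into numbers with a small Python sketch. It assumes the common rule of thumb that normal (20/20) vision resolves about one arcminute; the function name and the example viewing distances are hypothetical, and the close-up case ignores the magnifying lenses a real VR headset puts between eye and panel.

```python
import math

ARCMIN_RAD = math.radians(1 / 60)  # one arcminute, in radians

def max_useful_ppi(viewing_distance_in, acuity_arcmin=1.0):
    """PPI at which one pixel subtends the eye's assumed resolving angle."""
    pixel_pitch_in = viewing_distance_in * math.tan(acuity_arcmin * ARCMIN_RAD)
    return 1.0 / pixel_pitch_in

# Illustrative viewing distances in inches (assumptions, not measurements).
for label, distance_in in [("TV at 10 ft", 120), ("phone at 12 in", 12), ("bare panel at 2 in", 2)]:
    print(f"{label:>18}: about {max_useful_ppi(distance_in):.0f} ppi")
```

The useful density scales inversely with viewing distance, which is why a wall-sized display needs far fewer pixels per inch than something held next to the eye.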

Light field imaging requires a pixel for each angle you want to emit.

That’s what I came here to mention. The type of display that demands this kind of resolution is a light-field display, where, for each pixel (think X-Y location on the display), you want to control the light that comes out at different angles from it (rather than just emit the same color/intensity light at all angles).

The exact analog for this is the light-field camera. In fact, you can make a light-field display in the exact same way you make a light-field camera: You just take a very high-resolution [image sensor]/[display panel] and place a micro-lens array over it. The micro-lens array ensures that different pixels [receive]/[emit] light from different angles. It trades off spatial resolution for angular resolution.

With enough angular resolution, you can look at a light-field display just like you look through a physical window. Objects will appear at different depths of focus, and if you move your head right, you’ll see things that are more to the left (that were previously blocked by the edge of the window), etc.

Of course, computing images for such displays will require a couple orders of magnitude more computation. Completely new rendering algorithms will be needed to do this efficiently.

Okay, I’ve already been wrong more than once here, so I might as well throw this in: in this sense, what you’re calling a light field display is the extension of a lenticular display to an additional dimension. Which requires a LOT more pixels. Which adds cost not only to the display itself, but to whatever is calculating the pixels. What is the advantage?

u can be stuck in a spaceship and the simulated window looks better

when moving a 1D lenticular animation card, the grainy fade between frames can be improved with higher PPI print or display

with 2D lens grid display using display mounted camera to track 3d position of human eyes in front of screen allows beamforming of stereoscopic images to individual users

at a high enough resolution and lens perfection, it’s possible to sit next to someone at a tv and receive a completely independent stereoscopic view with no glasses on either person

have a nice day

A display that is visually indistinguishable from reality.

ray: I meant the advantage of a 2D “lightfield” rather than a 1D lenticular array, for the same pixel density. I wasn’t asking how increasing the pixel density helps – that part is obvious. The advantage of having a stereoscopic display that can be viewed by multiple people simultaneously, all without special glasses and with true “looking around edges” of objects works with lenticular screens as well, with a far lower number of emitters required. Your point about making the orientation of the display moot is valid, but I have doubts that this justifies the extreme increase in the number of pixels that have to be uniquely driven. A lenticular screen with 500 pixels/ .. and WHY on Earth are we measuring pixel densities in pixels per INCH, anyway? Anyway, a lenticular screen with 500 pixels/in vertically and 10,000 horizontally takes 20 times as many pixels as a plain 500 pixels/in display, while a 10,000 x 10,000 pixel/in display takes 400 times as many.
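The emitter counts in this comparison are easy to check in Python. The sketch below assumes the baseline is an ordinary 500 x 500 pixels/in panel, and the helper name is made up for illustration.

```python
def emitters_per_sq_inch(horizontal_ppi, vertical_ppi):
    """Number of individually driven emitters in one square inch of panel."""
    return horizontal_ppi * vertical_ppi

# Assumed baseline: a conventional 2D panel at 500 pixels/in in both directions.
baseline = emitters_per_sq_inch(500, 500)
lenticular = emitters_per_sq_inch(10_000, 500)     # extra density along one axis only
light_field = emitters_per_sq_inch(10_000, 10_000)  # extra density along both axes

lenticular_factor = lenticular // baseline
light_field_factor = light_field // baseline
print(f"1D lenticular: {lenticular_factor}x the baseline emitters")
print(f"2D light field: {light_field_factor}x the baseline emitters")
```

Read the other way, the same factors are the number of distinct viewing directions each 500 pixels/in picture element can address: 20 along one axis for the lenticular case, 20 x 20 for the 2D case.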

Yes, a lenticular display is a type of light-field display. They’re usually very crude, though, only offering a few unique angles (as few as just two for a basic stereoscopic-only display). They will alias as you over-rotate and see them from angles they weren’t designed to support. Instead of seeing additional viewing angles, the same views will repeat over and over.

The more angles a display supports, the better it can support both depth-of-field and wider viewing angles.

You’re kidding, right? My point was, how is this better than a one-dimensional lightfield display, i.e., a lenticular screen OF SIMILAR RESOLUTION? I wasn’t talking about 1960s novelty lenticular prints, or even 1980s 3D photos. Try to keep up.

Your lack of tact makes me hesitate to answer, but here goes:

With just one dimension, you can’t do true depth of field. In fact, it’s difficult to imagine what would happen if you had depth of field along one axis but not along the other. It would probably confuse your optic system and give you a headache, similar to how the convergence-accommodation disparity gives you a headache when using VR goggles..

Also, if you moved your head up or down, you would not be able to see around things like you could when you move your head left or right. Even a slight vertical movement of your head will make the image seem weird, since with left-right motion, objects would shift left or right according to their depth, while with vertical motion, objects remain locked to their height on the display.

With one dimension, you lose the stereoscopic effect if you turn your head at an angle to the display.

Try to keep up.

Okay, so that was a rational answer. Thank you. See how communications can work, if you pay attention to what the other person says before treating him like an idiot?

The most likely near-term use for this would be to just treat groups of the 10,000 dpi pixels as a single pixel. That’d make the addressing issue more tractable while still allowing significant increases in dpi.

This gets more interesting if you add analog interpolation between sub-pixels in a super-pixel. You could make a 1080P video look ultra smooth even when examined at minute range. It’d just blur out smoothly as you zoomed in.
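A minimal Python sketch of the grouping idea: each logical pixel drives a k x k block of physical pixels, so the source only has to address the coarser grid. The function names are hypothetical, and this is plain replication; the analog interpolation suggested above would need something smarter than copying values.

```python
def expand_to_superpixels(frame, k):
    """Replicate each logical pixel into a k x k block of physical pixels."""
    panel = []
    for row in frame:
        # Stretch the row horizontally: every value repeated k times.
        wide_row = [value for value in row for _ in range(k)]
        # Then repeat the stretched row k times vertically (fresh copies).
        panel.extend(list(wide_row) for _ in range(k))
    return panel

def effective_ppi(native_ppi, k):
    """Addressable density once k x k physical pixels act as one."""
    return native_ppi / k

logical_frame = [[1, 2],
                 [3, 4]]
for physical_row in expand_to_superpixels(logical_frame, 2):
    print(physical_row)
print(effective_ppi(10_000, 4), "addressable ppi with 4 x 4 groups")
```

Grouping by 4 on a 10,000 ppi panel still leaves 2,500 addressable pixels per inch while cutting the number of values to transfer by a factor of 16.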

Ok, so how much memory is required for a frame buffer?

What is the processing needed to transfer it?

how much power is needed to do all this?

Perhaps the display also becomes the framebuffer/s?

Pixels x ( RGBAbpp + zbuffer-bpp )
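To put rough numbers on that formula, here is a Python sketch. The bit depths (32-bit RGBA, 32-bit depth buffer), the 60 Hz refresh rate, and the one-inch-square panel are all assumptions chosen for illustration, as are the function names.

```python
def pixel_count(width_in, height_in, ppi):
    """Total pixels on a panel of the given physical size and density."""
    return round(width_in * ppi) * round(height_in * ppi)

def framebuffer_bytes(width_in, height_in, ppi, color_bits=32, depth_bits=32):
    """Pixels x (RGBA bits per pixel + z-buffer bits per pixel), in bytes."""
    return pixel_count(width_in, height_in, ppi) * (color_bits + depth_bits) // 8

def scanout_bytes_per_second(width_in, height_in, ppi, refresh_hz=60, color_bits=32):
    """Color data that must reach the panel every second, uncompressed."""
    return pixel_count(width_in, height_in, ppi) * color_bits // 8 * refresh_hz

# Assumed example: a 1 in x 1 in panel at 10,000 ppi.
buffer_mb = framebuffer_bytes(1, 1, 10_000) / 1e6
scanout_gb_s = scanout_bytes_per_second(1, 1, 10_000) / 1e9
print(f"frame buffer: {buffer_mb:.0f} MB, scan-out: {scanout_gb_s:.0f} GB/s")
```

Even a single square inch lands in the hundreds of megabytes per frame under these assumptions, which is part of why driving groups of pixels together, or pushing storage into the panel itself, looks attractive.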

This may open up interesting possibilities in the area of nanofabrication using UV cured resin printing, or just very high resolution printing at desktop scales.

“Stanford”

UV Resin based 3d printers will get a resolution boost!

SRI had this … on CRTs in their nuclear/conventional war simulator in the 1980’s … Heaven knows what those monitors cost, but they were stunning. Crisp would not begin to describe them.

As long as the fonts don’t get smaller…

Don’t get me started on phones with small fonts.

Or Linux Desktops running on 4k…

Between having to resize fonts on an app-by-app basis or using a MAGNIFYING glass to see it…

Well, it’s something the Linux community could agree on or enforce. I guess that is why Windows and Apple still make people choose that.

As of today, a fresh Ubuntu install on a 4K laptop does HiDPI beautifully with little/no setup. No tiny fonts, no resizing individual apps.

Source: tried it.

(I don’t mean this to sound snarky, sorry! Just, desktop Linux has gotten way better in the past few years. Give it a try!)

+1. I just installed Mint 20 on a 1920×1080 laptop, connected to a 4k monitor. It Just Worked, with native resolution on both screens and legible fonts.