It seems like just yesterday (maybe for some of you it was) we were installing Windows 3.1 off floppy disks onto a 256 MB hard drive, but hard drives have since gotten a lot bigger and a lot more complicated, and there are now many more options than spinning platters.

The explosion of storage options is the result of addressing a growing variety of niche uses. The typical torrenter downloads a file that is written once but read many times. For some people a drive is a backup that’s stored elsewhere and left unpowered. For others it is a server frequently reading and writing data like logs or swap files. In all cases, physics sets the limits of what storage media can do; if you choose wisely for your use case, you’ll get the best performance.

The jargon in this realm is daunting: superparamagnetic limit, LMR, PMR, CMR, SMR, HAMR, MAMR, EAMR, XAMR, and QLC to name the most common. Let’s take a look at how we got here, and how the past and present of persistent storage have expanded what the word hard drive actually means and what is found under the hood.

Grains of Data

You could punch your data into rolls of paper tape, or press it onto optical discs, but for today’s article we’re talking about rewriteable media and for that, magnetic storage is still king.

For all magnetic technologies, an important concept is the superparamagnetic limit: how small a grain of material (a distinct collection of atoms) can get while still reliably holding its bit. Below that size, ordinary thermal energy is enough to flip a grain’s magnetization at random, so the data fades on its own. If only a few atoms hold the magnetic state of a bit, the reader has a difficult time sensing its value, and the writer has a hard time writing the bit without disturbing nearby bits. For this reason the write head is often larger than the read head, so that the bit is written and read correctly despite the magnetic slop at such small sizes. This matters for tape and hard drives, but not for solid-state drives (SSDs).
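One common way to put numbers on the superparamagnetic limit is the Néel-Arrhenius relation: the mean time before thermal energy flips a grain grows exponentially with the ratio of its anisotropy energy (K_u·V, energy density times grain volume) to the thermal energy k_B·T. The sketch below assumes an attempt time τ0 of 1 ns, a typical textbook order of magnitude, and treats that ratio as the only knob; real media design involves much more.

```python
import math

K_B = 1.380649e-23                  # Boltzmann constant, J/K
TAU_0 = 1e-9                        # assumed attempt time, s (textbook order of magnitude)
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def retention_seconds(stability_ratio: float) -> float:
    """Mean time before a grain flips on its own: tau = tau0 * exp(KuV / kBT)."""
    return TAU_0 * math.exp(stability_ratio)


def required_ratio(years: float) -> float:
    """Smallest KuV / kBT that keeps the mean flip time at `years`."""
    return math.log(years * SECONDS_PER_YEAR / TAU_0)


if __name__ == "__main__":
    print(f"ratio needed for 10 years: {required_ratio(10):.1f}")
    # Shrinking a grain shrinks V, so the ratio drops and retention collapses exponentially.
    for ratio in (60, 40, 30, 20):
        years = retention_seconds(ratio) / SECONDS_PER_YEAR
        print(f"KuV/kBT = {ratio:>2}: about {years:.3g} years")
```

Under these assumptions a ratio of about 40 buys roughly a decade, while halving the grain volume (ratio 40 down to 20) drops the mean flip time from several years to about half a second.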

Magnetic Tape is Still a Thing

Tape is still well regarded by archivists and people with long-term storage needs, and it is still an actively developed technology. With an areal density of up to 201 Gb/in² (sorry, the industry uses this unit), achieved by Sony in 2017, and with tape prices dropping, it’s a great option for backups and rarely accessed data, and it lasts decades when properly stored. Data access isn’t fast, but it’s reliable, and if you don’t have the hardware, you can outsource your tape storage and retrieval to online companies.

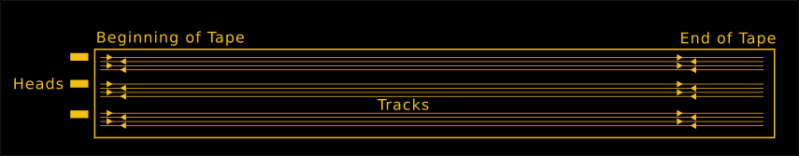

The tape stores data in linear tracks, so a set of heads can read and write as the tape passes by. Tracks can be packed so tightly that they are narrower than the heads themselves, which led to linear serpentine recording: the tracks are very dense, but the heads can be shifted sideways a smidge to reach the next one. The tracks run from the beginning of the tape to the end and then back again (why rewind the tape just to start the next track?), so the path ends up looking like a short, wide S.
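The back-and-forth order of serpentine recording can be sketched as a list of passes ("wraps"), each with a direction and a sideways head offset, plus a count of how much tape has to move to write them all. The one-track-pitch step per wrap and the tape length are hypothetical, chosen for illustration rather than taken from any real tape format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Wrap:
    index: int
    forward: bool     # True: beginning -> end of tape; False: end -> beginning
    head_offset: int  # hypothetical sideways head shift, in track pitches


def serpentine_order(num_wraps: int) -> list[Wrap]:
    """Alternate direction on every pass and nudge the heads over by one track."""
    return [Wrap(i, forward=(i % 2 == 0), head_offset=i) for i in range(num_wraps)]


def tape_travel_m(num_wraps: int, length_m: float, serpentine: bool = True) -> float:
    """Metres of tape pulled past the heads to write every wrap once.

    Serpentine: each pass starts where the previous one ended.
    Non-serpentine: every pass after the first needs a full rewind first.
    """
    writing = num_wraps * length_m
    rewinding = 0.0 if serpentine else (num_wraps - 1) * length_m
    return writing + rewinding


if __name__ == "__main__":
    for wrap in serpentine_order(4):
        direction = "->" if wrap.forward else "<-"
        print(wrap.index, direction, wrap.head_offset)
    print(tape_travel_m(8, 800.0), tape_travel_m(8, 800.0, serpentine=False))
```

With an assumed 800 m tape and 8 wraps, serpentine recording moves 6,400 m of tape, versus 12,000 m if every pass began with a rewind.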

The neat thing is that cartridges can be somewhat future-proof: in some cases the tape supports a higher track density than the drive it shipped for, so it works with newer hardware and even holds more data when formatted by that hardware.

Spinning Platters

Hard Disk Drives (HDDs) are the spinning platters we have known for a long time (recently covered in Maya Posch’s deep dive into the precision engineering of hard drives). As with CDs and even vinyl records, the data is stored as small bits along tracks that a head reaches by moving along the radius, although a hard drive uses many concentric circular tracks rather than a single spiral. Manufacturers have essentially hit the density limit imposed by the superparamagnetic limit, but this wasn’t immediately obvious to consumers because they could simply add platters within the same outer dimensions and so increase total capacity. Then they started replacing the air inside with helium, which reduced drag and turbulence and made room for more platters. The storage mechanism on the platters themselves has also changed fundamentally: several new technologies have been in development for the past few years and are now starting to reach the market.

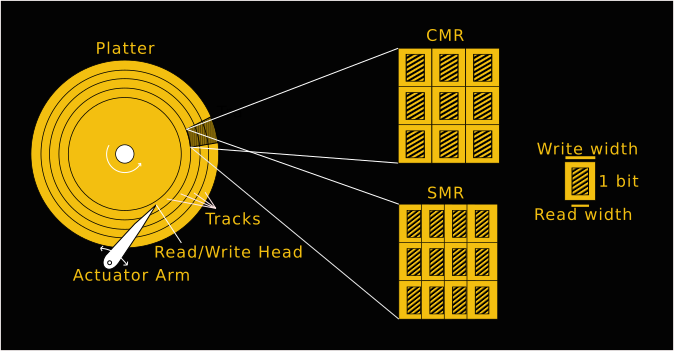

Hard drives originally used Longitudinal Magnetic Recording (LMR), where the magnetic poles of each grain lay flat along the surface of the disk. This took up a lot of space, so the grains were stood upright in Perpendicular Magnetic Recording (PMR) drives. In both LMR and PMR the disk has a series of concentric tracks with no overlap between them; the smaller read area sits snugly within the larger write area. The lab coats then increased areal density with the invention of Shingled Magnetic Recording (SMR), but since SMR is also a variant of PMR, they had to rename the earlier kind Conventional Magnetic Recording (CMR).

SMR keeps the wide write head but overlaps the tracks slightly so that the read areas can be packed more densely. That’s why it’s called shingled. However, this comes at a cost. Much like it is difficult to replace a single shingle in the middle of a roof without disturbing the shingle above it, it’s impossible to rewrite data in the middle of a band without overwriting the overlapping track next to it, so the drive needs a lot more data-management logic. It typically handles this by writing new data to empty parts of the disk, then later going back to the old area and reorganizing it for future use, clearing a whole section at once. This makes an SMR drive a poor fit for write-heavy workloads, because it needs idle time to reorganize tracks. It may offer more bytes, which is great for people who want large storage with rare writes, but as a primary drive it will likely have performance problems. Despite this significant downside, manufacturers haven’t been up-front about which drives use the technology, and they’ve been caught putting SMR drives in NAS appliances.
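Why a small in-place update is expensive on SMR can be sketched with a toy band model: each track partly overlaps the next, so rewriting track i clobbers track i + 1, which must then be rewritten, and so on to the end of the band. The band sizes and the "rewrite everything downstream" rule are simplifying assumptions; real drives hide most of this behind the caching and reorganizing described above.

```python
def tracks_rewritten(band_size: int, track: int) -> int:
    """Tracks that must be written to update one `track` in a shingled band.

    Writing track i overlaps track i + 1, so every track from i to the end of
    the band has to be read, held, and rewritten in order. The last track of a
    band is followed by a guard gap, so the cascade stops there.
    """
    if not 0 <= track < band_size:
        raise ValueError("track is outside the band")
    return band_size - track


def average_tracks_written(band_size: int) -> float:
    """Mean tracks written per single-track update, assuming uniformly random updates."""
    total = sum(tracks_rewritten(band_size, t) for t in range(band_size))
    return total / band_size


if __name__ == "__main__":
    # A band of 1 behaves like CMR: there is no neighbouring track to disturb.
    for band in (1, 16, 256):
        print(f"band of {band:>3} tracks: {average_tracks_written(band)} tracks written per update")
```

In this model a band of n tracks averages (n + 1) / 2 track writes per random update, versus exactly 1 for CMR, which is why sustained random writes hurt so much once the drive runs out of spare space to absorb them.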

Besides packing the tracks closer together, physicists are pushing the superparamagnetic limit with technologies like Heat Assisted Magnetic Recording (HAMR), Microwave Assisted Magnetic Recording (MAMR), and Energy Assisted Magnetic Recording (EAMR). All three share the same basic idea but get there slightly differently. You want each grain to be stable enough that it won’t flip on its own, from temperature variations or the influence of nearby bits, yet still easy to read and write. The field strength needed to flip a grain is its coercivity, and as grains get smaller and more densely packed, balancing stability against writability becomes more and more difficult. The XAMRs solve the problem by using a material with higher coercivity, so the small, closely packed grains stay stable, and then boosting the write with extra energy so a bit can still be flipped. In the case of HAMR, a laser briefly heats a grain by a few hundred degrees, the head writes it, and then it cools rapidly. MAMR uses a microwave field instead of a laser to make the grain easier to flip. And EAMR uses electric current to boost the energy of the write head.
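The stability side of that trade-off can be made concrete by solving the same thermal-stability condition for grain size: for a fixed K_u·V/(k_B·T) target, a material with higher anisotropy energy density K_u can use a smaller grain. The catch is that the same high anisotropy also makes the grain resist the write head, hence the laser or microwave assist. The two K_u values below are illustrative assumptions an order of magnitude apart, and the cube-shaped grain and the target ratio of 40 are simplifications.

```python
K_B = 1.380649e-23      # Boltzmann constant, J/K
STABILITY_RATIO = 40.0  # assumed KuV/kBT target, roughly a decade of retention


def min_grain_edge_nm(k_u: float, temp_k: float = 300.0) -> float:
    """Edge length (nm) of the smallest cube-shaped grain meeting the stability target.

    k_u is the anisotropy energy density in J/m^3. A higher k_u lets the grain be
    smaller, but also makes it harder for the write head to flip.
    """
    volume_m3 = STABILITY_RATIO * K_B * temp_k / k_u
    return volume_m3 ** (1 / 3) * 1e9


if __name__ == "__main__":
    # Illustrative values only: a lower-anisotropy medium and a much higher one.
    for label, k_u in (("lower anisotropy", 5e5), ("higher anisotropy", 5e6)):
        print(f"{label:>17}: {min_grain_edge_nm(k_u):.1f} nm")
```

With these made-up numbers the minimum grain edge shrinks from roughly 6.9 nm to about 3.2 nm (a factor of the cube root of 10), which is the density win; the higher-anisotropy grain then needs the extra write energy the XAMRs provide.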

Another area of improvement is in the motion of the head. The thinner the track, the more precisely the head must be positioned. This is being accomplished with piezoelectric flexing, so that the heads rotate slightly and stay better aligned with the track.

Doing Us a Solid (State)

Solid-state drives are growing in capacity as well, and with no moving parts to fail, their simplicity means more speed and a longer lifetime. Advances in density have come in two forms: levels and layers.

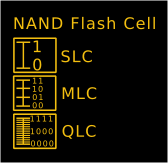

NAND flash is organized into cells, where each cell stores a voltage level that represents data. While it’s actually a transistor, you can think of each cell as a capacitor that is very good at holding a charge for a long time. Originally data was stored as a single bit per cell, which was very fast and reliable. Then engineers realized that the voltage in a cell can be treated as analog, so with analog-to-digital conversion they could store multiple bits in a single cell by using several voltage levels. We’re now at Quad Level Cell (QLC) technology, which means that a single cell can hold 16 different voltage levels that are decoded to 4 bits. This increased possible drive sizes significantly, but it came at a steep cost as well.
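The levels idea can be sketched as a quantizer: n bits per cell need 2^n distinguishable voltage levels, so a fixed voltage window gets sliced ever thinner. The 4 V window below is a made-up figure for illustration, and the Gray-code mapping (neighbouring levels differ in one bit, so a small drift corrupts at most one bit) is a common choice for multi-level flash, not necessarily what any given drive uses.

```python
WINDOW_V = 4.0  # hypothetical usable voltage window, volts


def level_spacing(bits_per_cell: int, window_v: float = WINDOW_V) -> float:
    """Voltage gap between adjacent levels when 2**bits levels share the window."""
    levels = 2 ** bits_per_cell
    return window_v / (levels - 1)


def gray(n: int) -> int:
    """Binary-reflected Gray code: neighbouring levels differ in exactly one bit."""
    return n ^ (n >> 1)


def read_cell(voltage: float, bits_per_cell: int, window_v: float = WINDOW_V) -> int:
    """Snap a sensed voltage to the nearest level (the ADC step), then map it to bits."""
    levels = 2 ** bits_per_cell
    step = level_spacing(bits_per_cell, window_v)
    level = min(levels - 1, max(0, round(voltage / step)))
    return gray(level)


if __name__ == "__main__":
    for name, bits in (("SLC", 1), ("MLC", 2), ("TLC", 3), ("QLC", 4)):
        print(f"{name}: {2 ** bits:>2} levels, {level_spacing(bits) * 1000:.0f} mV apart")
```

With the assumed 4 V window, the gap between neighbouring levels shrinks from 4 V for SLC to about 267 mV for QLC, so the same amount of leakage or wear that an SLC cell shrugs off can push a QLC cell into the wrong level.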

The tiny differences between voltage levels mean less reliability, especially as cells wear out over time and become less able to hold their voltage, and the extra analog-to-digital decoding slows things down. Compounding the problem, each cell is written more frequently, too (because it holds more data), which means it degrades faster. Finally, NAND flash cells don’t hold their charge forever without power; without regular refreshing, the cell voltages slowly drift, leading to bit rot. QLC is best suited as a low-cost drive that replaces HDDs in read-heavy applications, but it may not be ideal as an unpowered backup or a write-heavy drive.
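The wear side is often summarized with a back-of-the-envelope endurance estimate: total host writes ≈ capacity × rated program/erase (P/E) cycles ÷ write amplification. The cycle counts below are placeholder assumptions, not vendor specifications, and the formula assumes perfect wear levelling; it only shows how a lower per-cell rating eats into the total.

```python
def endurance_tbw(capacity_tb: float, pe_cycles: int, write_amplification: float = 1.0) -> float:
    """Rough total host writes (TB) before every cell has used its rated P/E cycles.

    Assumes perfect wear levelling. write_amplification >= 1 accounts for the extra
    internal writes the controller performs (garbage collection and so on).
    """
    if write_amplification < 1.0:
        raise ValueError("write amplification cannot be below 1")
    return capacity_tb * pe_cycles / write_amplification


if __name__ == "__main__":
    # Two hypothetical 2 TB drives; the cycle ratings are placeholders only.
    for name, cycles in (("TLC-ish", 3000), ("QLC-ish", 1000)):
        print(f"{name}: about {endurance_tbw(2.0, cycles, 2.0):,.0f} TB of host writes")
```

At equal capacity and write amplification, a cell rated for a third of the cycles gives a third of the total writes, which is why QLC is pitched at read-heavy rather than write-heavy work.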

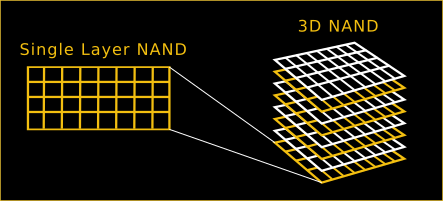

We typically think of chips as mostly flat things, but 3D NAND is another way that storage density has been increasing. The memory cell concept is the same, except a whole bunch of memory cells are stacked on top of each other, with some drives at 128 layers already. The cells in each vertical column are wired in series as a string, and each layer is its own word line, so a read selects a single layer across many columns at once rather than returning every cell in the stack. 3D NAND can be built either with single long strings, or with multiple strings stacked on top of each other and separated by an insulating layer. The easiest way to picture this is a multi-story hotel: the footprint stays the same, but adding floors multiplies the number of rooms. Room X01 isn’t one room but a whole column (101, 201, 301, 401… up to the top of the building), and a full address names both the floor and the room position. Some drives are combining the two technologies, using levels and layers, to get even higher density.
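How levels and layers multiply can be shown with simple arithmetic: raw bits on a die scale with the cells in one layer, the number of layers, and the bits per cell. The per-layer cell count below is a hypothetical round number; only the ratios matter.

```python
def die_bits(cells_per_layer: int, layers: int, bits_per_cell: int) -> int:
    """Raw bits on a die: footprint (cells per layer) x layers x bits per cell."""
    return cells_per_layer * layers * bits_per_cell


if __name__ == "__main__":
    CELLS_PER_LAYER = 2**33  # hypothetical round number of cells in one layer
    planar_slc = die_bits(CELLS_PER_LAYER, layers=1, bits_per_cell=1)
    for name, layers, bits in (("planar SLC", 1, 1), ("planar QLC", 1, 4),
                               ("128-layer SLC", 128, 1), ("128-layer QLC", 128, 4)):
        total = die_bits(CELLS_PER_LAYER, layers, bits)
        print(f"{name:>14}: {total // 8 // 2**30:>3} GiB, {total // planar_slc}x planar SLC")
```

Combining 128 layers with 4 bits per cell gives 512 times the raw bits of a planar SLC die with the same footprint, before any overhead for spare cells and error correction.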

Hybrid

Why choose when you could have both, and when the computer can optimize for you? Hybrid drives combine platters and flash chips, which gives large storage as well as speed. The drive analyzes which data is used most frequently and how, and chooses where to store it for the best efficiency. For laptops that only have room for one drive, this makes sense, but when you have space for two, you’ll get better performance and price by having one of each.
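The drive’s "analysis" can be pictured as a caching policy over disk blocks. The sketch below is a hypothetical frequency-count policy (keep the most-read blocks in a small flash cache); real hybrid-drive firmware policies aren’t public and almost certainly differ, and they work on blocks rather than files.

```python
from collections import Counter


class HybridCache:
    """Toy hybrid drive: a big, slow 'platter' store plus a small, fast 'flash' cache."""

    def __init__(self, flash_blocks: int):
        self.flash_blocks = flash_blocks
        self.read_counts = Counter()  # block number -> number of reads seen
        self.in_flash = set()         # blocks currently mirrored in flash

    def read(self, block: int) -> str:
        """Record the access and report which medium served it."""
        self.read_counts[block] += 1
        served_by = "flash" if block in self.in_flash else "platter"
        self._rebalance()
        return served_by

    def _rebalance(self) -> None:
        # Keep only the most frequently read blocks in flash.
        hottest = self.read_counts.most_common(self.flash_blocks)
        self.in_flash = {block for block, _ in hottest}


if __name__ == "__main__":
    drive = HybridCache(flash_blocks=2)
    pattern = [7, 7, 3, 7, 3, 9, 7]
    print([drive.read(b) for b in pattern])
```

The first read of any block comes off the platters; once a block proves popular it is served from flash, and a one-off read (block 9 here) never displaces the hot blocks.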

What’s right for ME?

The hard drive requirements for Windows ME are 480-645 MB of free space, and it does not specify the type of hard drive. For newer operating systems, performance is generally better with solid-state drives, but for data storage PMR drives win. We’re seeing larger and larger storage sizes, aided by advances in physics, but at the cost of ever more delicate mechanics, as well as slower access speeds. I’m holding out for the holographic crystal circuits you see in sci-fi all the time, with the clear acrylic cards. Realistically, though, the best idea is to evaluate your actual data needs and buy the appropriate hardware, instead of just looking at the number of bytes and buying the biggest drive you can afford, or assuming that SSD > HDD > Tape for every need.

I recently picked up an ’80s SF book that had a 3D crystal memory that could store “one gigaword per cubic centimeter,” and was amused at how far a modern SD card has eclipsed that, assuming a “word” is around 8 bytes.

Bit (1), Nibble (4), Byte/Char (8), Word/Short/Int (16), Long (32), Double (64) is how I learned it

I have learned that a word is the natural bit width of the CPU’s data bus. So a PDP-8 has a 12-bit word, a Z80 has an 8-bit word, and a 68020 has a 32-bit word. (Of course, I could be wrong about this.)

A word was historically either the width of a CPU’s registers or the width of data that could be read in a single fetch (which correlates with the natural bit width of the data bus). There were all manner of word sizes for CPUs in the past.

A 256 MB hard drive? Seems like an odd size. I recall sizes like 170 MB, 240 MB, and even 252 MB. I had a drive from Conner long ago; I think it was 540 MB.

I do have a 32 MB and 256 MB MMC card. But that’s solid state media, so the whole power-of-two thing makes some sense. For rotating platters, those sizes aren’t any more common than other random capacities.

You can only format a drive in sizes divisible by 2^n, and the sector size was 512 bytes, so you could technically have a drive of any size to within half a kilobyte, before they decided that a megabyte is 1000 kilobytes instead of 1024. Then it stopped making any sense.

Don’t know what system you are referring to. Plenty of PC-compatible drives were 10 MB, 20 MB, 42 MB, and various other capacities. There were limits on the number of clusters a drive could have (based on the bits in a field, so a power of 2).

But a given drive could have (and typically did have) fewer than the maximum number of clusters. (A cluster would typically be a few sectors.) [This is all in the PC DOS/MS DOS/etc. realm. Other OS’s may have done things differently.]

10 MB? Such luxury! My first hard drive was 5 MB.

Hahaha! Looks like I was gifted with my 40MB on my Atari ST40 :)

The first HD I used was 10MB. It was useful to have in the computer lab, but it didn’t get very much use because it had to warm up for over 5 minutes before you could use it, so floppies were still usually more convenient.

Yes, but were they ACTUALLY 10MB, etc.? I have had many drives that said one thing on the label, but the actual number was less.

all the 3.1 support I used to do was on MFM drives, HUGE 5 or 10 megabyte ones!!

my first Mac + had a whopping 20 Meg SCSI drive, all my friends were so jealous!

20 M of storage, 4 M of RAM!!

Until LBA came along drives were configured with Cylinders Heads and Sectors where like in old money, you could do some interesting maths to get the drive size. IIRC of the C, H and S, some were 0-based and some were 1-based, making the maths more than simply multiplying the 3 together.
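The CHS arithmetic is simple once everything is expressed as counts: capacity = cylinders × heads × sectors per track × bytes per sector. The wrinkle is that CHS addressing numbers cylinders and heads from 0 but sectors from 1, so converting the highest valid address into counts adds one to the first two only. The 615-cylinder, 4-head, 17-sector geometry below is assumed as a typical 20 MB drive of that era.

```python
SECTOR_BYTES = 512


def capacity_from_counts(cylinders: int, heads: int, sectors_per_track: int) -> int:
    """Capacity in bytes when every field is already a count."""
    return cylinders * heads * sectors_per_track * SECTOR_BYTES


def capacity_from_max_address(max_cyl: int, max_head: int, max_sector: int) -> int:
    """Capacity from the highest valid CHS address.

    Cylinders and heads are numbered from 0 and sectors from 1, so only the
    first two need +1 to become counts.
    """
    return capacity_from_counts(max_cyl + 1, max_head + 1, max_sector)


def chs_to_lba(c: int, h: int, s: int, heads: int, sectors_per_track: int) -> int:
    """Standard CHS-to-LBA translation (note the s - 1 for 1-based sectors)."""
    return (c * heads + h) * sectors_per_track + (s - 1)


if __name__ == "__main__":
    size = capacity_from_counts(615, 4, 17)
    print(f"{size:,} bytes = {size / 2**20:.1f} MiB")
```

615 × 4 × 17 × 512 comes to 21,411,840 bytes, about 20.4 MiB, which is why such a drive was sold as 20 MB.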

My 1st HD was 10 meg on an 8-bit plug-in ISA card, in my Walmart 8086 with 1M of memory. Ha.

“Track density can be so high that it exceeds the width of the heads” … tautology is wasteful storage technology for words !

> Perpendicular Magnetic Recording (PMR)

How can you mention this technology and *not* bring up the music video that Toshiba put out? https://www.youtube.com/watch?v=wVRh79LHgsU

+1

– Thanks for sharing :-)

Thank you. Well worth the three minutes of my day – made me smile!

While I’ve heard of a couple exceptions, all of the vinyl records I’ve encountered since I started paying attention in 1960 as a kid – had just two spiral tracks, one per side.

Disk drives have many circular tracks per side, whether magnetic or optical. They are in no way “just like vinyl”.

Just to show that that’s not true: Monty Python had a disc with 2 grooves on one side. Imagine my surprise when I played it for a friend and a routine I had never heard before played.

That sounds like the kind of prank they would do.

Nudge nudge wink wink….know what I mean?

https://en.wikipedia.org/wiki/The_Monty_Python_Matching_Tie_and_Handkerchief

Matching tie & handkerchief?

“They are in no way “just like vinyl”.”

Thank goodness. Not another vinyl vs digital argument.

Techmoan on YouTube has recently put up a video of records that contain 4 or more separate grooves per side – as part of a horse-racing game – so you get a different/random result each time you play it.

There have been a goodly number of albums that don’t have a groove on one side. Most often they were “three sided double albums” – a two disc album – one regular two sided disc, and one disc that had music only on one side, blank with no groove on the other.

Why is everyone using “bespoke” all of a sudden? Was there a tv show, movie, or pop culture movement I missed? What happened to “custom” or “custom-made”? Unnecessary wording, in my opinion.

The word has changed meaning recently (https://en.wikipedia.org/wiki/Bespoke). I wanted a word that implied the products are designed to meet very specific needs, but custom meant to me a single customer. I was trying to convey that storage needs are branching and getting more and more diverse, so technologies are being developed that address those smaller and more specific branches in ways that make those products unsuitable for generic use. People need to know more about the product they are buying. Decades ago you’d buy a plain white T-shirt, and there weren’t a lot of options. Now you have to choose between material, size, neck style, and fit, and not all the options will work for someone. They aren’t custom t-shirts that are made one-off, but they are designed for a certain niche, so I picked bespoke.

Words don’t change meaning; it’s people who use them incorrectly. Like, literally.

Ministry for funny words.

Oh most definitely. Thank you.

From that same article: “synonyms include custom-made and made to order”. And the conceit of the “recent change in meaning” portions of the article reeks of marketing language that was once unique (a new way of saying “custom” in a very specific context) and is now annoyingly blasé (very frequently, in my experience, used as marketing jargon for “custom, but expensive”).

“Bespoke” is a fine word.

B^)

Indeed, a fine word that means to make something for a specific customer! lol

I was also curious about the source of this newfound interest in misusing this word. It must have a reason.

I wish someone would bring that tape technology home without the enterprise-level pricing. Even used drives with useful capacities are in the thousands of dollars. The media pricing isn’t terrible, but come on!

The main reason is that it’s the only technology, other than another hard drive, for dealing with ever-increasing sizes. Advising backups as a strategy is easier when one has affordable and capable tools for doing so.

I work in a datacenter, and we use tapes mainly for the data density they offer (from 2.5 TB to 12 TB per tape depending on the technology) and their low cost: 12 TB is 100€, and when not accessed they don’t cost electricity ;)

The point is that data density on tapes has not kept pace with hard drives: a tape is 800 meters long, so if you extrapolate HDD density you would get huge capacities. Tapes sold today are 12 TB (uncompressed), and in 2017 IBM announced plans for 330 TB tapes, which should reach the market in ~10 years. So there is a lot of room for density improvements.

The system we have is an HSM (Hierarchical Storage Management) and works on the same principle as RAM and HDD in your computer: data moves from a “hot” place to a cold one, which is cheaper.

In our data architecture, when a file is “hot” it remains on HDDs (2 PB at 40 GB/s), and its data is copied to 2 tape libraries (each with 10 PB of data): its state becomes DUAL. When it becomes “cold” (not often used) AND the system needs to write some new files, the system frees the data from the HDDs, but the inode remains (basically, the user still sees the file with an “ls”): the file becomes OFFLINE. When a user accesses the file (read), the system automatically mounts the tapes and recalls the data: the file is DUAL again.
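The file lifecycle described here can be sketched as a small state machine. DUAL and OFFLINE come from the comment; the ONLINE state (on disk, not yet copied to tape) and the method names are hypothetical additions for illustration, not the API of any particular HSM product.

```python
from enum import Enum


class State(Enum):
    ONLINE = "online"    # hypothetical: data only on disk, not yet copied to tape
    DUAL = "dual"        # data on disk and on tape
    OFFLINE = "offline"  # data only on tape; the inode stays, so `ls` still shows it


class HsmFile:
    def __init__(self, name: str):
        self.name = name
        self.state = State.ONLINE

    def archive(self) -> None:
        """Copy the data to the tape libraries; the disk copy stays."""
        if self.state is State.ONLINE:
            self.state = State.DUAL

    def release(self) -> None:
        """Free disk space for a cold file; only safe once a tape copy exists."""
        if self.state is not State.DUAL:
            raise RuntimeError(f"{self.name}: cannot release without a tape copy")
        self.state = State.OFFLINE

    def read(self) -> str:
        """Reading an OFFLINE file triggers a recall from tape before the open returns."""
        recalled = self.state is State.OFFLINE
        if recalled:
            self.state = State.DUAL  # tape mounted, data copied back to disk
        return "recalled from tape" if recalled else "served from disk"


if __name__ == "__main__":
    f = HsmFile("results.dat")
    f.archive()
    f.release()
    print(f.read(), f.state)
    print(f.read(), f.state)
```

Refusing to release a file that has no tape copy is the key safety rule: the disk copy can only be dropped once the data is already DUAL.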

Talking about performance: each drive delivers 250 MB/s, and we have 10 drives in each library, so 50 TB can easily be recalled in about half a business day (users usually call us to recall big sets of files before running their jobs). Small recalls are transparent to users (the system pauses the “open” call during the tape access; a tape mount takes less than 30 seconds). We are currently working on improving our infrastructure, mainly to increase space capacity but also to increase HDD bandwidth.
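The recall estimate can be checked with simple arithmetic, assuming all ten drives in one library stream in parallel at their rated 250 MB/s, decimal units, and no time lost to mounts, seeks, or contention.

```python
def recall_hours(data_tb: float, drives: int, mb_per_s_per_drive: float) -> float:
    """Hours to stream `data_tb` back from tape with all drives running in parallel.

    Decimal units (1 TB = 1e6 MB); mounts, seeks and contention are ignored.
    """
    total_mb = data_tb * 1e6
    aggregate_mb_per_s = drives * mb_per_s_per_drive
    return total_mb / aggregate_mb_per_s / 3600


if __name__ == "__main__":
    print(f"{recall_hours(50, 10, 250):.1f} hours")
```

That works out to about 5.6 hours of pure streaming for 50 TB at 2.5 GB/s aggregate.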

Working with tape libraries is fun: I prefer those big robots (with names taken from Asimov novels ;) to the pizza boxes we usually see. Moreover, making the whole architecture work is interesting: we tune the policies and the machines to give users the best experience.

Having tapes at home? Well… I have one tape library ;) It’s a small 24-slot one with a single LTO4 drive. I use it with bacula to back up data from my family and some friends. When I need to repair a computer for a friend, I first dump the whole HDD to tape (need 1 TB more? just add two tapes!): in case of disaster I can still restore the HDD to its initial state. Performance is lower of course, but still good: 100 MB/s reading or writing (sequential access, of course). The tape library I bought cost me 400€ 5 years back, and the seller gave me 60 tapes with it (so 48 TB), so it was a good deal. An LTO4 tape drive costs 100€ today, LTO4 tapes are quite cheap, and software like bacula can manage it well (just change tapes when the software asks ;). If you just need to put data on a tape, you may also consider using tar (which stands for Tape ARchive) and mt commands (rewind, fast forward, …).

Oh, and I forgot to say that I am working on making my own tape library to deal with other drives … and because working with stepper motors, 3D printer, arduino is fun :)

The serial protocol the drive uses to talk to the library is known and documented, so this is an interesting project I hope to post on HaD one day as a “DIY tape library” ;).

The other part is developing the HSM software part … time is missing :)

I was researching this a while back (I routinely just copy everything from my devices to the NAS to back them up), but the cost of tapes put me off and I decided to stick with HDDs for now. It was cheaper to just buy a new 4TB HDD than the equivalent in tapes plus a drive. Not sure about data retention, but I’m expecting at least 10-15 years.

I would pay 400E in a heartbeat for such a deal, even today. Guess I need to look out for deals now.

Yes… A tape library (also known as an autochanger) is quite hard to find now, particularly small (12 to 24 slot) ones… That’s why I planned to build a DIY one… You may consider buying a standalone tape drive: an LTO4 can be found for 100€.

I did not even consider an autochanger, just a plain drive. But the cheapest LTO4 tapes I found were around 15€/TB (uncompressed), while HDDs can go even below 25€/TB.

I think the problem is volume, volume, volume. An enterprise can use dozens, hundreds, or thousands of $100 12TB LTO-8 tapes for its cold storage needs. Several or even tens of thousands of dollars for a drive amortized over all those tapes means the drive is effectively free.

For a home user, the needs are quite different. Even if that user has some big online storage at home, say 50 TB, three rotating sets of hard drives for backup would be cheaper and be sufficient for a long time. Unlike the enterprise, they wouldn’t likely need to create permanent archive snapshots which requires buying more backup medium on a regular basis. No doubt manufacturers prefer to cater to customers who would need to regularly buy more tapes.
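The volume argument can be put into numbers using the prices quoted in this thread: a used LTO4 drive around 100€, LTO4 media around 15€/TB, and hard drives around 25€/TB. Treating those as fixed is an assumption (prices move, and this ignores rotation sets and compression); the point is only that the drive is a fixed cost that needs many terabytes to pay off.

```python
def tape_cost(tb: float, drive_eur: float = 100.0, media_eur_per_tb: float = 15.0) -> float:
    """One-off drive price plus media for `tb` terabytes."""
    return drive_eur + tb * media_eur_per_tb


def hdd_cost(tb: float, eur_per_tb: float = 25.0) -> float:
    """Hard drives carry no separate drive cost, just price per terabyte."""
    return tb * eur_per_tb


def break_even_tb(drive_eur: float = 100.0, media_eur_per_tb: float = 15.0,
                  hdd_eur_per_tb: float = 25.0) -> float:
    """Capacity above which tape becomes cheaper than hard drives."""
    if hdd_eur_per_tb <= media_eur_per_tb:
        return float("inf")  # media is not cheaper per TB, so tape never catches up
    return drive_eur / (hdd_eur_per_tb - media_eur_per_tb)


if __name__ == "__main__":
    print(f"break-even: {break_even_tb():.0f} TB")
    for tb in (4, 50):
        print(f"{tb:>2} TB: tape {tape_cost(tb):.0f}€ vs HDD {hdd_cost(tb):.0f}€")
```

At these prices the break-even sits at 100 / (25 - 15) = 10 TB, so a 4 TB home backup favours a hard drive, while an enterprise buying petabytes barely notices the drive’s price.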

I used to have a Travan tape backup system at home and probably three dozen tapes. HDD capacities kept going up and prices kept going down so quickly it didn’t make sense for me to keep using tape for backup.

Yeah, I more or less gave up pursuing tape for home backup when SOHO tape gear was only a gigabyte, drives were 20GB+, and DVD-R was in the offing. There was a bit of a golden era a few years before that, when tapes exceeded the size of sub-$200 HDDs.

Some company needs to decide to fill the home and SOHO market for an affordable LTO drive. It’s one of those chicken and egg things. People need it but they don’t know they need it because nobody makes it for them *and* tells them it exists.

+1

Although I don’t see that happening with the “cloud”, data plan and stream everything mindset.

Why the reference to Windows ME? I get the comparison to times of old with Win 3.1, but the ME storage size is a bit perplexing.

Indeed. We should convert the storage density to Libraries of Congress per Olympic-sized Swimming Pool.

I think it is a pun: “What is right for ME” with the double meaning of “me” and “Windows scheisse ME”

Did anyone else put an RLL controller on an MFM drive, and suddenly discover an extra 50% capacity?
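The 50% figure falls out of the sectors-per-track numbers usually quoted for ST-506-style drives: 17 sectors per track with MFM and 26 with RLL 2,7, on the same cylinders and heads. The 615-cylinder, 4-head geometry is an assumed example, and the sketch assumes the platters tolerated the denser encoding, which was not guaranteed.

```python
SECTOR_BYTES = 512
SECTORS_PER_TRACK = {"MFM": 17, "RLL": 26}  # typical ST-506 controller figures


def capacity_mb(cylinders: int, heads: int, encoding: str) -> float:
    """Formatted capacity in decimal megabytes for a given controller encoding."""
    return cylinders * heads * SECTORS_PER_TRACK[encoding] * SECTOR_BYTES / 1e6


if __name__ == "__main__":
    mfm = capacity_mb(615, 4, "MFM")
    rll = capacity_mb(615, 4, "RLL")
    print(f"MFM {mfm:.1f} MB, RLL {rll:.1f} MB, gain {rll / mfm - 1:.0%}")
```

The same 615 × 4 geometry goes from about 21.4 MB to about 32.7 MB, a factor of 26/17, or roughly 53% more.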

+1

Yes and then I gave my plow horse some laudanum.

Yes. My first HDD was a 20 MB MFM. The first thing I did was put in an RLL controller and do a low-level format, and I got that 50%. The second change was replacing the 8088 with a NEC, which gave a 70% processing boost.

All that happened a long time ago (1986).

Right now I am still using that old PC case with a Core2Duo, 4GB RAM, a fixed-mounted 1TB HDD, and 2 removable HDDs, mostly 1 TB, but I also have 500 GB, 2 TB and even 8 TB drives.

3 or 4 times, on 386 or 486 boxen, you could get very nice performance with 3:1 or 4:1 interleave too.

Back in the late 80’s CDC supplied a hard disk that NCR Corp used in data centers that had HPT or Head Per Track. It provided fast access on 12 inch platters because the heads didn’t move. I have always wondered if that could be scaled down to consumer hard drives.

I doubt it. Ignoring the engineering challenge of keeping multiple heads aligned with today’s HDD track densities, SSDs have dropped so far in price and offer so much higher performance than mechanical drives that such a drive couldn’t compete either economically or performance-wise.

I thought I’d heard of some with extra sets of moving heads, like 2 or 4, provided they’re designed so the arms clear each other.

Anyone remember or use the good old Jumbo tape drives that connected via the parallel port? Being able to back up 120/250 MB of data was a lifesaver back in the day, especially if you had a Rodine hard drive. My buddy was constantly replacing and restoring those pieces of crap.

Thank you for mentioning good old Windows 3.1!

Surely it had its quirks, but it was otherwise such a friendly little environment that allowed the user to do everything he/she/they wanted to do.

Along with the rare Windows 3.0a MME, it introduced so many things we take for granted today:

Screensavers, TrueType fonts, MIDI, AVI, VCD/CD-i (Xing MPEG Player), FLI&FLV, MOV (QuickTime) etc.

Also, RAD environments like Visual Basic 1.x-3.x, Turbo Pascal for Windows, dBFast etc. made it easy to develop little programs at home, which served very well for simple tasks.

While much larger than TOS or AmigaOS in file size (Win 3.x, 5 to 10MB; DOS 6, ca. 6MB), it could work in little RAM:

It could start with less than 1MB if run in Standard Mode, although this was very taxing for it (it flew with 4MB).

Personally, I ran it on a 286-12 PC with an 80MB Conner HDD (AT-Bus, IDE or EIDE).

But due to the fixed CMOS table for HDDs, I had to operate the HDD with half the capacity.

Too bad XTIDE Universal BIOS (XUB) wasn’t available in these ancient days.. ;)

I was a bit underwhelmed after I ran 3.1 on a 286-12 with 1MB onboard and a 2.5MB LIM card; it was kinda snappy in /s or /2 mode. Then I got a 386-16 board, but only 2MB of SIMM RAM, and thought I was gonna be rocking that 2.5MB LIM as well, but nope, it didn’t play with EMM386.. and the sucky BIOS locked up that 384KB UMA too, so even running /3 without it, it was basically running on only 1.5MB and thrashy with VM set up. So at that point it felt like the 286-12 had been faster with the LIM. There were crashes running the 386 in /s or /2 without EMM386 and the LIM driver; maybe the RAM was too slow. But yeah, in general, with the same VGA, I/O, adequate RAM etc., 386es didn’t feel a lot faster than 286es on early 3.1 stuff. However, when it was all WinG, Win32s, SVGA and more demanding apps, they pulled ahead. It was actually on 286-16 machines that I first browsed the WWW, with a really early Mosaic version. They were tolerable on those early, mostly text sites with one or two small images. Only for about 6 months, though, until things moved on.

Though come to think of it, there always seemed to be something specifically horrible about 16MHz 386es; 20MHz ones felt twice as fast.

I had a 286 with 12 megabytes RAM. 512K in DIP on the motherboard and three Micron ISA cards. One had (IIRC) DIP chips and was used to backfill the low RAM to 640K plus more. The other two cards were a different design with ZIP chips on edge.

They had EEPROM for software configuration so I split them between XMS and EMS because I had some old software that needed real EMS.

I also had an 8bit soundblaster and a Pro Audio Spectrum 16 in it (a REEL Magic card minus all the video and SCSI parts) both operational with config.sys and autoexec.bat setup so Windows 3.11 (the OEM only version that had Standard Mode, not Windows For Workgroups 3.11) and DOS games with PAS 16 support would use that card, and DOS games without PAS 16 support would use the soundblaster.

The reason the REEL Magic card didn’t have the video and SCSI was because it was a secondary card meant to be paired with the full REEL Magic PAS 16/video/SCSI card. ‘Course I never encountered any software capable of dual wielding PAS 16 soundcards.

I desperately wanted a 386DX-40 with a 40 MHz 387 to go with it. I did eventually pick up a used Baby AT (more like micro AT) board with one, but no FPU, and by then there were triple-digit-MHz 486 boards. I wanted to try one of those 386-pin-compatible 486 DX2 upgrade chips to see how it’d run at 80 MHz, but it would’ve been too limited by the 30-pin RAM and ISA-only slots.

30-pin SIMMs weren’t a handicap in themselves; you could get 60ns modules by ’94 or so. But an older system might have had built-in wait states expecting 100ns or even 120ns. In a decent BIOS you could disable them, or maybe by jumper. However, by ’95 there were a handful of 486 boards supporting EDO and PCI. Power users were already on Pentiums, though. It’s a bit hard to nail down the comparisons, since there was so much overlap between generations. However, there were some nice Orchid ISA SVGA cards that were as fast as midrange PCI, and it was pretty much when consumer P54s (Socket 5) were shipping before ISA HDD I/O really became an issue, unless you were still rocking one bought at the beginning of the AT era. Particularly when the later ones tolerated the AT ISA bus at 10-12MHz, rather than 8 or lower.

Then you had a half dozen variations of the MakeIt486 theme, and it depended on the exact spec of what you were coming from. Versions based around Intel chips tried to be ultra compatible, down to inserting extra bus wait states, and they didn’t have high clocks anyway, so unless you were coming from a real plodder of a 386, they didn’t do much for you apart from apps with heavy 32-bit code. Which wasn’t most people in the early ’90s. Then there were the Cyrix-based versions, which had higher clocks, but the base Cyrix 486 lagged a bit behind the AMD and Intel ones. So for a start, the Cyrix at 80MHz was just a hair faster than late AMD or Intel 66MHz parts. Now, what might seem a bit weird to some, the Cyrix 387 FasMath was the fireball of math co-processors, performance-wise. However, maybe because of this, they thought they didn’t need to do much to it for a 486 FPU design, and the kludged-on 486 extensions took its edge off, so it wasn’t so competitive as part of the Cx486 DX line. Also, AMD tweaked up their late 386s, so they had an edge over Intel; in integer math they seemed to match a first-gen 486 running just a handful of MHz slower. So a good late AMD 386DX with a Cyrix FasMath 387, on a reasonable motherboard, at 40MHz, was probably doing everything as well as a 486DX33, and a little faster on those simple things where MHz mattered.

So upgrading that with a plug-in 486 chip or socket adapter kit would have been a bit unsatisfactory: the Intel-based ones would have been the same speed or slower anyway, and the Cyrix, with its non-cleansheet, kludged FPU, might have performed about the same in math-heavy stuff and given only a slight clock speed edge for the stuff that needed it. If you were stuck with a lesser 386 and no FPU or a slow one, then yeah, you could get 486-ish performance, probably feeling twice as fast. But you didn’t really “futureproof” yourself, since by the time games and apps were “486 required” for actual 486 instructions rather than speed, you wanted to be on a full 486DX-66 or better, which your hopped-up 386 board probably trailed, even with the max MHz upgrade. In other words, they could run with native 25-40 MHz 486 machines, but the DX2s parted company.

Also, it seemed to me that only a few months or so after these were widely available, you could pick up a 486 AT motherboard for $50 and get a DX33 thrown in practically free, or spend another $50 on an Intel DX2 or AMD/Cyrix DX4 and still have money in your pocket compared with the $150 to $200 retail price of the upgrade chips. So if your 386 or 286 motherboard was AT format, it was easily swapped out. Owners of proprietary systems, which might have had I/O, sound, and video onboard, may have found the upgrade chips more attractive, since it was $150 versus buying everything from scratch. The same went for people with special systems like lunchbox portables, and some of the clamshell luggables may have had space for the clip-over type too.

Anyhoooooo, some vintage enthusiasts like playing with them for the “what ifs” if they can snag them cheap on fleabay: you know, a 286 upgraded to a Pentium OverDrive through stacked adapters, that kind of hilarity.

In a kind of reverse of all this, I excitedly opened a Zenith “486” notebook on hearing it had a desktop CPU inside, hoping to retrofit a 5×86 … yeah, it was actually a design that originally took a desktop PGA 386, and they’d put the Cyrix 486-in-a-386-footprint upgrade chip in at the factory LOL … However, I might flip that back again, since it would go in the square PLCC socket, and I have an AST 386 COLOR VGA notebook, in far, far nicer cosmetic shape than the Zenith with its crappy mono LCD, that has its 386 in that socket. Unfortunately it’s a very back-burner, round-tuit thing, because despite looking perfect it acts totally dead, so it first needs resurrecting before I think about that.

That reminds me: back in the day I tested hardware and software for some articles that ran in Computer Shopper. I received copies of the first retail Windows 1.03 and Digital Research GEM. I ran Windows from two 360K floppy disks on a Xerox 6064 that had an Intel LIMS board, an NEC V30 replacing the stock 8086, and an Orchid EGA+ board that could handle the 6064’s 16-bit bus, whose byte order was the opposite of the PC/AT’s (the PC/AT came later). The 6064 was the same as the AT&T 6300 and the Olivetti M24, all of which were made by Olivetti. With a V30 installed, the Olivetti machines easily outbenched the IBM PC/AT. Windows was pretty meh in version 1.03, while GEM looked a lot nicer and had a pretty good word processor and paint program, sold separately. DR wound up kneecapping GEM’s visual interface to settle the fight with Apple over the look of the trash can. It shows that it isn’t always the best software idea that wins, but rather the best public relations combined with lower prices.

There’s no mention that pretty much every single read from ALL modern storage devices contains corrupt data at the very lowest level, which is automatically rebuilt from the error correction codes. But to be totally fair, after correction the error rates are much lower than they have ever been. Raw error rates that would have been considered totally unusable only 40 years ago are normal today, thanks to modern error correction codes. But then again, 40 years ago the data transfer rates in common use today would have been considered insane (MFM at 5 Mbit/s vs. NVMe at 32 Gbit/s, or even SATA 3.0 at 6 Gbit/s).
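To make “automatically rebuilt from the error correction codes” concrete, here is a toy sketch using a Hamming(7,4) code, which can locate and fix any single flipped bit in a 7-bit codeword. Real drives use far stronger codes over much larger sectors or pages (LDPC or BCH, for example), so treat this as an illustration of the principle, not of what any particular drive does. The function names are made up for this example.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword.

    Positions 1..7 are laid out as p1 p2 d1 p3 d2 d3 d4, with the
    parity bits sitting at the power-of-two positions.
    """
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c):
    """Fix up to one flipped bit; return (data_bits, error_position).

    error_position is 0 if every parity check passed, otherwise the
    1-based position of the bit that was flipped back.
    """
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # recheck positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # recheck positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # recheck positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s3  # the failed checks spell out the bad position
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], syndrome


data = [1, 0, 1, 1]
stored = hamming74_encode(data)
read_back = stored.copy()
read_back[4] ^= 1  # simulate one bit going bad on the medium (position 5)
print(hamming74_decode(read_back))  # ([1, 0, 1, 1], 5)
```

The cost is three parity bits for every four data bits; real codes spend proportionally far less overhead while correcting many more errors per block.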

How about the fact that every single read from an SSD degrades the data stored in adjacent pages (think Rowhammer, but for NAND), and after xxxK reads the drive’s internal Read Disturb Management rewrites the whole block.
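The read-disturb bookkeeping described above can be sketched as a per-block read counter that forces a rewrite once it crosses a threshold. This is a simplified, hypothetical model: `ToyFTL`, `Block`, and `READ_DISTURB_THRESHOLD` are invented for illustration, the threshold value is arbitrary (the comment only says “xxxK”), and real firmware is proprietary and may also react to error counts seen during reads.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; the real figure is firmware- and NAND-specific.
READ_DISTURB_THRESHOLD = 100_000


@dataclass
class Block:
    pages: list
    reads_since_write: int = 0  # read-disturb exposure since last program


@dataclass
class ToyFTL:
    """A toy flash translation layer that only models read-disturb handling."""
    threshold: int = READ_DISTURB_THRESHOLD
    blocks: dict = field(default_factory=dict)
    relocations: int = 0

    def write_block(self, block_id, pages):
        # Programming a block gives it fresh charge, so the counter resets.
        self.blocks[block_id] = Block(list(pages))

    def read_page(self, block_id, page_no):
        block = self.blocks[block_id]
        data = block.pages[page_no]
        block.reads_since_write += 1
        if block.reads_since_write >= self.threshold:
            # Rewrite the whole block before disturbed neighbors accumulate
            # too many errors. This toy rewrites in place; a real drive would
            # typically copy the data to a freshly erased block and remap it.
            self.write_block(block_id, block.pages)
            self.relocations += 1
        return data


ftl = ToyFTL(threshold=3)
ftl.write_block(0, ["a", "b", "c"])
for _ in range(7):
    ftl.read_page(0, 1)
print(ftl.relocations, ftl.blocks[0].reads_since_write)  # 2 1
```

Note that only reads trigger the rewrite here; the point is that a read-heavy workload still causes writes, and therefore wear, behind the scenes.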

Not to mention the best one: the cheapest NAND technologies currently deployed are rated for only hundreds of program/erase (P/E) cycles.
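To put “hundreds of P/E cycles” in perspective, a common back-of-the-envelope estimate multiplies capacity by the rated cycles and divides by the write amplification factor (the extra NAND writes the drive performs internally per host write). The sketch below assumes perfect wear leveling and a constant write amplification; the function name, the default factor of 2.0, and the example drive are all hypothetical.

```python
def rough_lifetime_writes_tb(capacity_gb, pe_cycles, write_amplification=2.0):
    """Very rough total host writes (in TB) before the rated P/E cycles run out.

    Assumes every block wears evenly and write amplification stays constant;
    the default of 2.0 is an arbitrary example, not a measured figure.
    """
    total_nand_writes_gb = capacity_gb * pe_cycles
    return total_nand_writes_gb / write_amplification / 1000


# Hypothetical example: a 1000 GB drive whose NAND is rated for 300 P/E cycles.
print(rough_lifetime_writes_tb(1000, 300))  # 150.0
```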

Unlike a mechanical magnetic HDD, an SSD is a wear item in your computer and slowly degrades with time and use.

It seems like just yesterday I was working on a Cromemco Z2 with a Morrow Designs Thinker Toys hard drive running CP/M. I forget the capacity of that drive at the moment; probably five megabytes. The drive was the size of a small microwave oven and drew about five amps at 120 volts. Those were the days! Oh yeah, the console was an ADM3 terminal with the lowercase option installed: the lap of luxury!