The Raspberry Pi platform grows more capable and powerful with each iteration. That said, the boards are still not the go-to for high-powered computing, and their external interfaces are limited for reasons of cost and scope. Despite this, people like [Jeff Geerling] strive to push the platform to its limits on a regular basis. Unfortunately, [Jeff’s] recent experiments with GPUs hit a hard stop that he’s as yet unable to overcome.

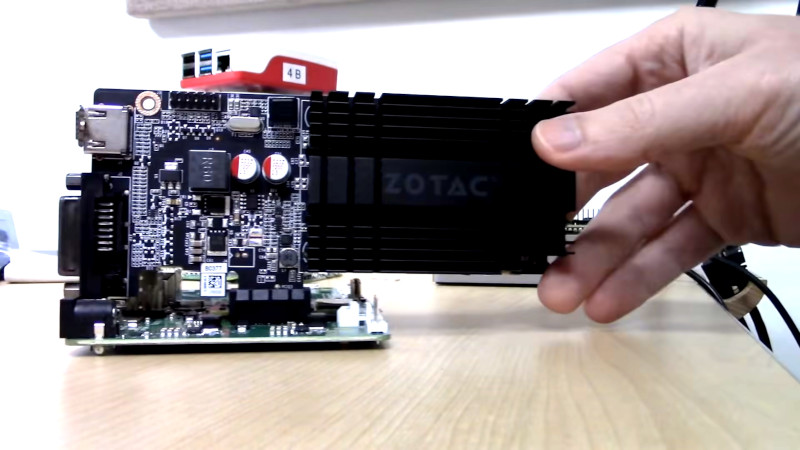

With the release of the new Compute Module 4, the Raspberry Pi ecosystem now has a device that has a PCI-Express 2.0 x1 interface as stock. This led many to question whether GPUs could be used with the hardware. [Jeff] was determined to find out, buying a pair of older ATI and NVIDIA GPUs to play with.

Immediate results were underwhelming, with no output whatsoever after plugging the cards in. Of course, [Jeff] didn’t expect things to be plug and play, so he dug into the kernel messages to find out where the problems lay. The first problem was the Pi’s limited Base Address Register (BAR) space; GPUs need a significant chunk of address space mapped through their BARs to work. With the CM4’s BAR space expanded from 64MB to 1GB, the cards appeared to be properly recognised and ARM drivers were able to be installed.
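To make the BAR problem concrete, here is a minimal Python sketch that adds up the memory regions a PCI device asks for and checks them against a window size. It assumes the three-column hex layout (start, end, flags) that Linux exposes in a device's sysfs `resource` file; the `SAMPLE` text and the helper names `parse_resource` and `fits_in_window` are made up for illustration and are not taken from [Jeff's] logs. It also ignores alignment, which a real allocator has to respect.

```python
IORESOURCE_IO = 0x00000100   # Linux resource flag bit for I/O port regions
IORESOURCE_MEM = 0x00000200  # Linux resource flag bit for memory regions


def parse_resource(text):
    """Return (kind, size_in_bytes) for each populated region in the text."""
    regions = []
    for line in text.strip().splitlines():
        start, end, flags = (int(field, 16) for field in line.split())
        if flags == 0 and end == 0:
            continue  # unused slot, nothing was requested here
        if flags & IORESOURCE_IO:
            kind = "io"
        elif flags & IORESOURCE_MEM:
            kind = "mem"
        else:
            kind = "other"
        regions.append((kind, end - start + 1))
    return regions


def fits_in_window(regions, window_bytes):
    """True if the summed memory regions fit (alignment is ignored)."""
    mem_total = sum(size for kind, size in regions if kind == "mem")
    return mem_total <= window_bytes


# Hypothetical device: one 256 MiB region and one 2 MiB region.
SAMPLE = """\
0x0000000600000000 0x000000060fffffff 0x000000000014220c
0x0000000610000000 0x00000006101fffff 0x000000000014220c
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
"""

if __name__ == "__main__":
    regions = parse_resource(SAMPLE)
    print("fits in 64MB window:", fits_in_window(regions, 64 * 1024**2))
    print("fits in 1GB window:", fits_in_window(regions, 1024**3))
```

With these made-up numbers the device does not fit in a 64MB window but does fit in 1GB, which is the shape of the problem described above.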

Alas, the story ends for now without success. Both NVIDIA and ATI drivers failed to properly initialise the cards. The latter driver throws an error due to the Raspberry Pi failing to account for the I/O BAR space, a legacy x86 feature. However, others suggest the problem may lie elsewhere. While [Jeff] may not have pulled off the feat yet, he got close, and we suspect with a little more work the community will find a solution. Given ARM drivers exist for these GPUs, we’re sure it’s just a matter of time.

For more of a breakdown on the Compute Module 4, check out our comprehensive article. Video after the break.

Wonder if it’s the BIOS on the GPU. I know back in the day Apple users had to flash PC Graphics Cards to become compatible.

Most graphics cards are made to connect to x86 CPUs. x86 CPUs have separate IO space for writing and reading control registers in peripherals like the old fashioned parallel port, serial port, and also graphics cards. ARM CPUs don’t have separate IO space, so this generates incompatibility issues. It would be nice if ARM CPUs could somehow simulate this IO space in hardware using a dedicated part of the normal memory space. Perhaps this is already hidden somewhere?

Most embedded pci-e controllers do support IO space. They just memory map it, like a normal BAR. Although I never used this, almost no devices require IO space (it’s mostly used by network/wireless hardware, and a serial or parallel port card will use it).
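For readers following the IO space discussion, the difference between the two kinds of BAR is visible in the low bits of the register itself. The sketch below decodes a raw 32-bit BAR value using the bit layout from the PCI specification: bit 0 selects I/O versus memory, bits 2:1 give the memory width, and bit 3 marks a prefetchable region. The function name `decode_bar` and the example values are hypothetical.

```python
def decode_bar(raw):
    """Decode the low bits of a raw 32-bit PCI Base Address Register value."""
    if raw & 0x1:
        # Bit 0 set: this BAR requests legacy I/O space.
        return {"space": "io", "address": raw & 0xFFFFFFFC}
    # Bit 0 clear: memory BAR. Bits 2:1 encode the address width;
    # other encodings are reserved, so width comes back as None.
    width = {0b00: 32, 0b10: 64}.get((raw >> 1) & 0b11)
    return {
        "space": "memory",
        "width": width,
        "prefetchable": bool(raw & 0x8),
        # For a 64-bit BAR this is only the lower half of the address;
        # the upper half lives in the next BAR register.
        "address": raw & 0xFFFFFFF0,
    }


if __name__ == "__main__":
    print(decode_bar(0x0000E001))  # an I/O BAR
    print(decode_bar(0xC000000C))  # a 64-bit prefetchable memory BAR
```

A card that exposes a BAR of the first kind is the case the article's I/O BAR error refers to.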

While I won’t say you are wrong, the fact the cards he is using had Arm drivers at all suggests the hardware should be useable. So to me that points elsewhere for the issues; as TCPMeta says above, the VBIOS on the cards might need an update with alternative config. It could also be the arm driver is supposed to be handling the communication mismatch but was written for a particular variety of ARM board that doesn’t happen to match the pi. Not sure how that could be true, or what would be different… I have no idea why the drivers actually exist for arm, can’t say I’ve ever seen a pci gpu on Arm…

Maybe it’s a simple issue of endianness of the transferred data?

I don’t know sh*t about PCI-E, but I do remember that endianness on ARM processors is (or used to be, at least) configurable. Some systems used big-endianness, some little-endianness. And some systems it can be switched on-the-fly, others not. Different variations for different arm processors.

Yeah Arm can go both ways in theory, but I don’t know of anybody doing it, and remember reading somewhere Debian doesn’t support both endians for arm – keeping it consistent with amd64 i386 stuff – so a good thought but I don’t think so.
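To illustrate what an endianness mismatch would look like, here is a small Python sketch using the standard `struct` module. PCI defines its registers as little-endian, so a host that read them as big-endian without swapping would see scrambled values. The register value `0x12345678` is just an example, and the helper names are hypothetical.

```python
import struct
import sys

REGISTER_VALUE = 0x12345678  # arbitrary example value

# The same 32-bit value laid out in memory in each byte order.
little = struct.pack("<I", REGISTER_VALUE)
big = struct.pack(">I", REGISTER_VALUE)


def read_le32(buf):
    """Interpret 4 bytes as a little-endian unsigned 32-bit integer."""
    return struct.unpack("<I", buf)[0]


def read_be32(buf):
    """Interpret 4 bytes as a big-endian unsigned 32-bit integer."""
    return struct.unpack(">I", buf)[0]


if __name__ == "__main__":
    print("host byte order:", sys.byteorder)
    print("little-endian bytes:", little.hex())
    print("big-endian bytes:   ", big.hex())
    # Reading little-endian data as big-endian gives the wrong number.
    print("misread value: 0x%08x" % read_be32(little))
```

Running this on the Pi itself and checking the `host byte order` line is a quick way to see which mode the OS is using.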

As mentioned the problem is likely to be with the Video BIOS. That the program located there isn’t executed and thus the graphics card is not initialized. (Not sure how this works in the modern EFI-world)

At one time X11 on SPARC-based system overcame this by emulating a x86 core which executed the Video BIOS instructions.
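One way to check the Video BIOS theory is to look at what kind of code the card's expansion ROM actually carries. The sketch below follows the PCI expansion ROM layout: the image starts with the bytes `0x55 0xAA`, a 16-bit pointer at offset `0x18` leads to a `PCIR` data structure, and the byte at offset `0x14` inside that structure is the code type. The names `rom_code_type` and `make_fake_rom` are hypothetical, the fake ROM exists only so the example runs without hardware, and only the first image in the ROM is inspected even though real ROMs can hold several.

```python
import struct

# Code type values defined for the PCI data structure.
CODE_TYPES = {
    0x00: "x86 PC-AT BIOS",
    0x01: "Open Firmware",
    0x02: "HP PA-RISC",
    0x03: "EFI",
}


def rom_code_type(image):
    """Return the code type name of the first image in an expansion ROM."""
    if image[0:2] != b"\x55\xaa":
        raise ValueError("missing 0x55 0xAA expansion ROM signature")
    (pcir_offset,) = struct.unpack_from("<H", image, 0x18)
    if image[pcir_offset:pcir_offset + 4] != b"PCIR":
        raise ValueError("missing PCIR data structure")
    code = image[pcir_offset + 0x14]
    return CODE_TYPES.get(code, "unknown (0x%02x)" % code)


def make_fake_rom(code_type):
    """Build a tiny stand-in ROM image for demonstration only."""
    rom = bytearray(0x40)
    rom[0:2] = b"\x55\xaa"
    struct.pack_into("<H", rom, 0x18, 0x1C)  # pointer to the PCIR structure
    rom[0x1C:0x20] = b"PCIR"
    rom[0x1C + 0x14] = code_type
    return bytes(rom)


if __name__ == "__main__":
    print(rom_code_type(make_fake_rom(0x00)))
    print(rom_code_type(make_fake_rom(0x03)))
```

A ROM that only contains an x86 image would need an emulator of the kind described above before a non-x86 host could run it.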

Note that I’ve also been trying to get a newer Radeon RX 550 working (which doesn’t have the old BIOS anymore, according to some AMD folks I’ve talked to), and am having new difficulties: https://github.com/geerlingguy/raspberry-pi-pcie-devices/issues/6

One other issue—many newer cards require more BAR space than the Pi will even allow, due to the driver being stuck in 32-bit land :(

In the end, the moral of my story could be “but the current-generation Pis are just not meant to run GPUs.” But it would be neat to get one working if for no other reason than to say it worked!

As you say, it looks like you’re close. I think between yourself and the other people throwing in their experience on Github, it should be doable!

I thought it was DEC Alpha that came with an x86 emulator in its firmware in order to run the video bios during startup. Could be wrong though, or maybe both

I’m still fighting with the error “GPU 0000:0b:00.0: RmInitAdapter failed!” while I’m trying to pass through my graphics card (2080 Ti but also 1060 Ti) to a Linux virtual machine running on ESXi 7. The error is the same, so the two situations are connected in some way. I created a post on the NVIDIA developer forum, but no one has replied yet:

https://forums.developer.nvidia.com/t/rminitadapter-failed-nvidia-smi-no-devices-were-found/159559

Just get a Jetson Nano

You’ve missed the point entirely. Congratulations.

What is the point?

What about the Nvidia P106 GTX 1060 mining card? I would think that since this card has no actual video output that it would not have an IO BAR… would be an interesting candidate if you were just trying to use CUDA.