Can you remember when you received your first computer or device containing a CPU with more than one main processing core on the die? We’re guessing for many of you it was probably some time around 2005, and it’s likely that processor would have been in the Intel Core Duo family of chips. With a dual-core ESP32 now costing relative pennies it may be difficult to grasp in 2020, but there was a time when a multi-core processor was a very big deal indeed.

What if we were to tell you that there was another Intel dual-core processor back in the 1970s, and that some of you may even have owned one without ever realizing it? It’s a tale related to us by [Chris Evans], about how a team of reverse engineering enthusiasts came together to unlock the secrets of the Intel 8271.

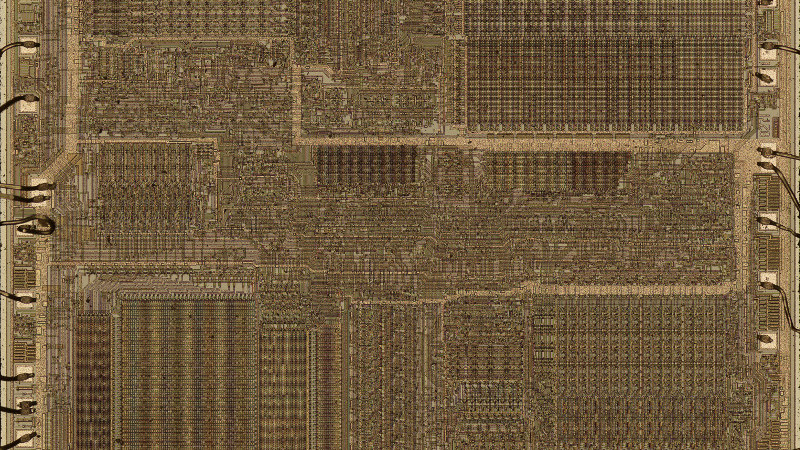

If you’ve never heard of the 8271 you can be forgiven, for far from being part of the chip giant’s processor line it was instead a high-performance floppy disk controller that appeared in relatively few machines. An unexpected use of it came in the Acorn BBC Micro which is where [Chris] first encountered it. There’s very little documentation of its internal features, so an impressive combination of decapping and research was needed by the team before they could understand its secrets.

As you will no doubt have guessed, what they found is no general purpose application processor but a mask-programmed dual-core microcontroller optimized for data throughput and containing substantial programmable logic arrays (PLAs). It’s a relatively large chip for its day, and with 22,000 transistors it dwarfs the relatively svelte 6502 that does the BBC Micro’s heavy lifting. Some very hard work at decoding the ROM and PLAs arrives at the conclusion that the main core has some similarity to Intel’s 8048 architecture, and the dual-core design is revealed as a solution to the problem of calculating cyclic redundancy checks on the fly at disk transfer speed. There is even another chip using the same silicon in the contemporary Intel range: the 8273 synchronous data link controller simply has a different ROM. All in all the article provides a fascinating insight into this very unusual corner of 1970s microcomputer technology.
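To give a feel for what calculating a cyclic redundancy check on the fly involves, here is a minimal Python sketch that updates a 16-bit CRC one bit at a time, the way a controller sees data streaming off the disk. It is not the 8271’s microcode. The polynomial 0x1021 with a preset of 0xFFFF is assumed here as the usual CRC for IBM-format floppies, and the function names are ours.

```python
def crc16_update_bit(crc: int, bit: int) -> int:
    """Shift one incoming data bit into a 16-bit CRC register (polynomial 0x1021)."""
    feedback = ((crc >> 15) & 1) ^ (bit & 1)
    crc = (crc << 1) & 0xFFFF
    if feedback:
        crc ^= 0x1021
    return crc


def crc16(data: bytes, crc: int = 0xFFFF) -> int:
    """Run the bit-serial update over a byte string, most significant bit first."""
    for byte in data:
        for shift in range(7, -1, -1):
            crc = crc16_update_bit(crc, (byte >> shift) & 1)
    return crc


if __name__ == "__main__":
    payload = b"123456789"
    check = crc16(payload)
    print(hex(check))
    # Feeding the stored CRC back through the register leaves zero when the data is intact.
    print(crc16(payload + check.to_bytes(2, "big")))
```

The update has to finish inside every bit time, for as long as the transfer lasts, which hints at why dedicating a second core to the job could be attractive.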

As long-time readers will know, we have an interest in chip reverse engineering.

> but there was a time when a multi-core processor was a very big deal indeed.

Because multi-core didn’t make sense when clock speeds were still increasing rapidly. Why add the complexity of a 2nd core when you can just double the speed of a single core instead?

This was indeed a large reason why a lot of consumer grade systems didn’t venture towards multi-core implementations as far as the user environment and central processing unit were concerned.

Though, dedicated processors have been rather common for IO tasks for quite some time, and graphics have had dedicated processors for a long time as well. So one could partly argue that computers have been multi-cored for a long time, even before the micro computer revolution in the 80’s.

But “multi-core” is generally only used in regards to the CPU(s), even though a disk controller or graphics processor, or even a floating point processor, technically is another core that lifts overall system performance rather noticeably.

Though, interesting to see an actual multi-cored chip from the 70’s.

But to be fair, it isn’t actually all that hard to make a multi-cored chip, though it does depend on the architecture.

And the consumer OSes at the time (pre 2000s) wouldn’t support multiple processors. It’s kind of a big deal for them too.

Indeed and THAT is why we didn’t see multi-processor systems until well into the 2000s. Only Linux, OS/2 and maybe BeOS ran well on multiple processors back in the 1990’s and early 2000s. Microsoft still had a cooperative tasking primary OS until Windows XP came along in late 2001 and it too was very poor at multi-processing. Microsoft didn’t allow vendors to benchmark their OS on multi-processor systems so there were very few examples showing how bad it was and how good the other OS’s were.

There was one motherboard vendor which made a nice dual processor board which could run two inexpensive Celeron processors and it sold like hot-cakes to Linux users and a number of remaining OS/2 users. Abit BP6 was the model.

So because the company dominating the OS landscape, Microsoft, could not produce an operating system which handled multi-threading well and most all of its software was single threaded, there was no advantage for users of those systems to run multi-processor let alone multi-core systems.

Just another bit of proof that Microsoft held back the industry about 10 years because of sub-par software and dominating marketing.

Windows 95 up had preemptive multitasking as did Amiga OS, Concurrent, and even Windows/386 since it could run DOS applications. NT and later got much better memory protection but yes even 95 had preemptive multitasking.

Windows 95 might have had pre-emptive multitasking but it was a hideous implementation. They tried to create a well threaded version of Explorer in the Chicago beta since OS/2’s UI and filesystem UI was very well written and threaded but it killed the performance of the whole Windows system. A later beta had a greatly stripped down Windows Explorer and the rest is history, with most all of Microsoft’s OS utilities being painfully single threaded.

“Microsoft still had a cooperative tasking primary OS until Windows XP came along in late 2001…”

Actually, Windows 2000, Windows NT 4.0, Windows NT 3.51, Windows NT 3.5, and Windows NT 3.1 were all preemptive multitasking and could all use multiple processors. Windows NT 3.1 was released in 1993 for x86 and within a year, there were versions for MIPS and DEC Alpha – all having multi-processor platforms available as I recall.

“…Microsoft didn’t allow vendors to benchmark their OS on multi-processor systems…”

Well, since Microsoft had no way of preventing any company (vendor, customer, or competitor) from doing whatever they wanted with Windows, I’m not sure this statement holds water. Perhaps you’re thinking of Microsoft’s desire not to have certain benchmarks *published* without their blessing.

“…so there were very few examples showing how bad it was…”

I don’t know where that comes from, but as someone who extensively used the multi-threaded features of the Windows NT family, I can say we extracted a *lot* of processing power from a second processor. Windows NT was fast and rock-solid and was the OS of choice for engineering/scientific workstations and servers of the day. (Yes, Novell was the more popular server software in the late 80’s and early 90’s, but the Windows NT line quickly surpassed it in functionality and popularity.)

“…most all of [Windows] software was single threaded…”

That’s true enough – any 16-bit software written for Windows 3.x would have been single-threaded. But as soon as Windows 95 came out (in 1995), true 32-bit multi-threaded apps were everywhere (because developers already had a head-start developing on Windows NT).

I’m aware of all the Windows NT versions and their performance and hardware requirements. The consumer hardware platform did not attain the capabilities to run Windows NT well enough until XP shipped. I was a beta tester of that platform and ran versions up to NT 4.0 and it worked but required lots more hardware than other OS’s or even Windows 9x to get the job done well enough. It was sad to see them move the graphics system into the kernel in 4.0 as prior to that it was rather stable for a Windows platform.

But again, the performance was poor compared to OS/2 or even UNIX on the same hardware. NT along with other 32bit OS’s did quite well on the Pentium Pro hardware but Microsoft was still peddling the mostly 16bit hacked Windows 9x platform and really screwed Intel over. Their partnership was legendary but it fractured from that point forward as Intel had to hack together a more 16bit tolerant CPU to run Microsoft’s primary OS, Windows 9x. And OOP frameworks made it easy to develop for different platforms in the early and late 90s. Java software was one bit which showed the cracks in Microsoft’s OS strategy and it took more illegal activity for them to protect their ecosystem.

I ran many OS’s back in those days and developed on Windows, UNIX and OS/2. The number of times memory leaks and bugs traced back into Microsoft APIs was disheartening. But marketing is king when management ignorance knows no difference and is fed lies and then dictates development policies. By the time NT 4.0 came out, I had given up on that platform as others were far better and far more advanced but mostly far more stable. But what really got my goat regarding Microsoft was their attacks on software development advances. CORBA, OOP frameworks, Java, etc were all attacked viciously by Microsoft as it worked to maintain control of developers and therefore the platform. By the time Windows XP shipped they had collapsed the software development market, stalled C++/OOP and hardware was fast enough to sell 32bit Windows to consumers and businesses alike. “Does anybody remember Windows?” was a famous quote from those days.

Only if by consumer you mean the home user. The business consumer had several options in the mid 90’s to use multiple processors via Windows NT Workstation, which iirc was the predecessor [in spirit] to the “Pro” versions of Windows XP and beyond given NT server DNA influenced Win2k/XP.

There was underlying hardware and firmware interfaces needed as well. It took quite a while for all the pieces to be put into place.

A key point is how those other cores were limited in the software they could execute. You couldn’t really deploy software to those other processors, only interface with their firmware.

The small amount and low bandwidth of RAM in a typical workstation at the time was starving even single core processors of data, and operating systems were still grappling with how to multitask. You were hitting swap just to load up Windows 98.

Having a multi-core processor, or a multi-processor machine, was a kind of pointless curiosity. I mean, we had those Pentium boards with slots for two CPUs, but outside of specialty programs made specifically for two CPUs, it made absolutely no difference. Your software still ran on one or the other CPU and it was just as slow as anything.

Single thread performance was the limiting factor for the basic stuff like how fast your word processor ran, which is easy to forget now that we have multi-GHz machines that can perform multiple instructions per cycle with branch prediction.

PS. People often forget how slow the old computers actually were. People are nostalgizing about how the old software wasn’t so bloated, but in reality back in the day you had to wait for the desktop icons to show up one by one while the hard drive was going crunch-crunch, and you could literally brew coffee waiting for Word to start up.

Yes, back in those days there wasn’t much room for bloat, and especially not the performance to spare for it.

While nowadays it is trivial to make a simple program become a behemoth without actually noticing it.

Be it consuming tons more processing power than needed or using excessive amounts of RAM, or just stalling about to no end due to inefficient APIs and the like….

Another thing was that most consumers couldn’t afford to buy a second CPU. If you did have multithreaded software running on a single CPU, it ran slower with more overhead from the thread switching, so software developers saw it pointless to even attempt.

Especially when Microsoft dominated the industry with a 16bit OS. Do you remember when Intel came out with a 32bit optimized CPU and Windows ran slower on it at the same 150MHz clock speed? Pentium Pro was the CPU name and while far better OSs ran much faster on it( Linux, UNIX, OS/2 ) it was a flop because Microsoft’s marketing sold Windows as being 32bit but it was operating mostly as a 16bit OS.

Intel had to backpedal and go back to a 16bit optimized processor design and to sell this ‘lesser’ hardware as ‘new’ they added a little bit of DSP capabilities (MMX). Pentium II it was called and it took almost 10 years before the Intel CPU architecture was dominated by 32bit instructions.

So most people don’t know their computers were slow because Microsoft held back the industry. It has little to do with backwards compatibility and more to do with marketing. We’ve seen Microsoft force developers down alley after alley in pursuit of crushing a competitor. They chose to keep the bloatware because it kept people upgrading as they slowly, very slowly, progressed unabated by competition because they controlled the distribution channels.

I ran multi-processing OS/2 on ABIT BP6 dual processor hardware in the late 1990’s.

Similar experience with dual Pentium Pro but used NT 4.0 Workstation. Don’t recall poor performance but that was a long time ago. I used Linux elsewhere, but neither it nor wine was fit for general purpose business computing inside a heavy windows shop at that point. I cringe at memories of installing Redhat 5.x via floppy and missing dependencies that you had to manually select and thus having to start over when the install failed after innumerable disk swaps.

I worked at a Solaris shop writing code used for Atlas V launch validation data acquisition and noticed the performance of the Solaris workstations was hideously slower than my BP6 machine at home. So I built up a box and brought it to work, got permission to put it on the LAN running Linux and our 10 person team developed and built on that before final build and testing on Solaris.

I also ran OS/2 at home running Lan Server kernel and while Windows NT worked, it wasn’t even close to the performance of OS/2 or Linux on those BP6s.

I also developed on OS/2 and was tasked at one point to find out why the developed software was not showing the performance boosts expected on the Pentium Pro hardware. It was because the data structures were not aligned to 32bit boundaries. Tons of 16 bit words mixed with bytes screwing up alignment. Before the Pentium II with that MMX was the 32bit optimized Pentium Pro and it was very fast at the same clock when the OS and software left the 16bit world behind. It wasn’t a huge task to clean up data structures but it did require major testing effort since some devs liked to do fun things with pointers back then.
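A rough illustration of that alignment problem, as a Python sketch rather than anything taken from the software in question: it lays out a made-up record twice, once packed tightly and once with each field padded to a multiple of its own size, and lists which fields end up off their natural boundary. The field names and sizes are hypothetical.

```python
def layout(fields, natural_alignment):
    """Return ({name: offset}, total_size) for fields given as (name, size_in_bytes)."""
    offsets = {}
    offset = 0
    for name, size in fields:
        if natural_alignment and offset % size:
            offset += size - offset % size  # pad up to a multiple of the field size
        offsets[name] = offset
        offset += size
    return offsets, offset


def misaligned(fields, offsets):
    """Names of fields whose offset is not a multiple of their own size."""
    return [name for name, size in fields if offsets[name] % size]


# Hypothetical record mixing bytes, 16-bit words and 32-bit values.
RECORD = [("flag", 1), ("count", 2), ("length", 4), ("kind", 1), ("address", 4)]

packed_offsets, packed_size = layout(RECORD, natural_alignment=False)
aligned_offsets, aligned_size = layout(RECORD, natural_alignment=True)

if __name__ == "__main__":
    print(packed_size, misaligned(RECORD, packed_offsets))
    print(aligned_size, misaligned(RECORD, aligned_offsets))
```

The padded layout costs a few extra bytes per record, but every 32-bit field then sits on a 32-bit boundary. The sketch ignores trailing padding, which a real compiler would usually add for arrays of records.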

I thought Microsoft should have made a big push to make a 32bit Windows back when the 80386 was introduced, abandon DOS completely. Instead they opted for a (too) slow transition. They went so far as to graft a chunk of their Windows 95 work onto Windows 3.1x with Win32s. Most software of the early 90’s that runs on 3.1x and 95 uses Win32s and usually includes that as part of its install.

But that hobbles it somewhat on 95 because it cannot use any 32bit stuff not included in the Windows 32bit subset – unless it’s written to have some features only available on 95.

Drive speeds were terrible. Earliest CD-ROM were worse. And don’t get me started on Laplink (especially serial), backpack drives, or tape backups via parallel port. But I disagree on the bloated debate being simply nostalgia. Some software changes, in the guise of being helpful, definitely slowed user operation, i.e. bloat. Best example I can quickly think of was the MS Office font list when it first started displaying an example of each font vs. the simple, faster listing of each font’s name that preceded it. Not a problem on the fastest systems when introduced, but something unneeded by the average user that really slowed down font changes on older systems. It is a cool feature, but how often does the general user use more than two or three fonts and thus need to see scores of font names rendered in their specific format?

You should have tried some of the word processors native to OS/2 then. The multi-threading of the OS/2 kernel was amazing and applications written to take advantage of that multi-threading were a pleasure to use. Some native graphics apps too. Even UNIX on the old 386, 486 and Pentiums was so much better a user experience because of the kernel multi-processing design than anything made by Microsoft. Cooperative tasking was a hideously bad choice even in the 1980s and that choice and that market power stalled the tech sector a good 10 years.

OS/2 was always on my todo list but I never got around to it. I almost mentioned it above but would have been hearsay from friends that used it.

>but how often does the general user use more than two or three fonts and thus need to see scores of font names rendered in their specific format?

Often when people do need to change the font, they want to browse for a font that looks the part, and they don’t remember exactly what “Foobar Sans Serif” looks like. Other times when the font doesn’t matter, they just stick with the default and don’t touch the font selector at all.

When you think about what the font selector is for, it’s like the color picker in a paint program. It changes the visual outlook of the font. Not seeing what the font looks like is like picking colors based on a text description such as, “olive green”, which could be anything. You then have to test it out and go back to the list if you don’t like it, which is inconvenient.

Maybe you’re just used to older word processors that weren’t WYSIWYG and printers that had their own fonts built-in, so what you had on screen had no relevance. What really killed the performance was the “feature” in Windows where having lots of fonts would cause the system and all the applications to bog down in general, and the font cache system made to help with that issue would sometimes corrupt and cause the system to boot up to a black screen.

Sorry, not that much slower. I have a very specialized program that took an entire weekend to run on my 7.16 MHz Amiga. The same program runs in less than an hour on my 2.1 GHz i7 machine. In fact it is 55 times faster than my old Amiga. But just looking at the clock speed it should be 300 times faster today. And we know the old 68000 wasted a lot of clock cycles to do even simple instructions.

Today I am fighting with how to connect my custom projects to my computer using USB, and most ports available give me horrible thru-put. On my old Amiga I could read 2 MB per second with hardware tied directly to the system bus, today I have to go thru so many junk interfaces I am lucky to get 200K per second and most times 1-2 KB per second is the more normal speed.

To me computers have gone backwards.

Sounds to me like you’re just not very good at programming for the modern machines.

It sounds like your software and your hardware interactions are dominated by round-trip times. A modern x86 should be closer to 10,000 – 50,000 times as fast (single-core), depending on whether you can take advantage of SIMD. That’s not an exaggeration.

I’m looking at Dhrystone MIPS. According to Wikipedia, an 8MHz 68000 pulls 1.4 DMIPS, and so a 7.16MHz 68000 should be about 1.25 DMIPS. It claims a per-core IPC of 0.175.

The Core i7 numbers I see on Wikipedia range in Dhrystone IPC from high 7s to low 10s. If we assume IPC=8 (since 2.1GHz sounds like an older i7), then it should pull around 16800 DMIPS. That’s about 13440× as fast.
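For anyone who wants to check the arithmetic, it can be written out as a short Python sketch. The inputs are only the figures quoted in this thread (1.4 DMIPS at 8MHz for the 68000, and an assumed Dhrystone IPC of 8 for a 2.1GHz i7); nothing here is a measurement.

```python
AMIGA_CLOCK_MHZ = 7.16
I7_CLOCK_MHZ = 2100.0
M68000_DMIPS_AT_8MHZ = 1.4  # figure quoted above
I7_DMIPS_PER_MHZ = 8.0      # assumed, as in the comment above

# Naive expectation from clock speed alone.
clock_ratio = I7_CLOCK_MHZ / AMIGA_CLOCK_MHZ

# Dhrystone throughput per MHz for the 68000, then scaled to the Amiga's clock.
m68000_dmips_per_mhz = M68000_DMIPS_AT_8MHZ / 8.0
amiga_dmips = m68000_dmips_per_mhz * AMIGA_CLOCK_MHZ

i7_dmips = I7_DMIPS_PER_MHZ * I7_CLOCK_MHZ
speedup = i7_dmips / amiga_dmips

if __name__ == "__main__":
    print(f"clock ratio: {clock_ratio:.0f}x")
    print(f"68000: {m68000_dmips_per_mhz:.3f} DMIPS/MHz, {amiga_dmips:.2f} DMIPS at 7.16MHz")
    print(f"i7: {i7_dmips:.0f} DMIPS, about {speedup:.0f}x the Amiga")
```

Without rounding the Amiga figure down to 1.25 DMIPS the ratio comes out slightly lower than 13440, but it stays in the same ballpark and far above both the clock ratio of roughly 293 and the reported 55.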

A math-heavy workload (particularly floating point) would see much larger gains, even if you had a 68881 back in the day.

Your code obviously isn’t Dhrystone. It’s either hitting memory latency or disk latency or something.

For your hardware interfacing: if you are bit-banging from the PC over, say, an FTDI single, I feel your pain. The RTT is killing you. You can try tuning the latency timer, but it only goes so low. You may be better off building a small microcontroller front end to bit-bang locally, and communicate the results back high speed, with something like an FT240X or FT2232H.

Something is wrong with your i7 and USB :-)

Running winuae on 14 year old Core 2 Duo gives you A500 running at around x1000 the speed. Running your magic “1 day” task should take ~1 minute in emulator on 14 year old computer, much less on i7.

> we had those Pentium boards with slots for two CPUs

Ah, those were the times when some needed to patch their BIOS to get it to recognize HDDs with more than 32GiB (if I recall correctly there was another barrier at 128GiB but irrelevant for my 80GB HDDs).

Those dual slot Pentium 3 boards were useful with Windows 2000 as (game) servers (for LAN parties). Not so much or at all with Windows 98(se) / ME

OS responsiveness. I had a series of dual socket computers starting with a dual Pentium MMX and Windows NT was just always responsive regardless of what else was going on.

Also because power consumption wasn’t 100+W per chip you could put two high end chips and not need specialized cooling.

The downside was even in the late 90s you’d still need single cpu only OSes to play games or run older software so you generally still needed “good processors” rather than two weaker CPUs to make this truly useful. Dual booting of course for work and play wasn’t too terrible but was still lost time.

That said I can see why they spent their transistor budget on higher clocks and IPC over time but what if Intel went with dual core Pentium 3s when the P4 launched for workstation and server uses, instead of the pentium 4 Foster based Xeons. Ensure the custom P3 had access to more bandwidth like P4 and it would have been impressive while fitting in the same die size as a single core p4.

The Core architecture is essentially an evolution of the Pentium 3. While the P4 was on the desktop, Pentium M was the laptop CPU, which was based on the P3. The Core architecture came from Pentium M. Shorter pipelines than the P4, more cache and more execution units. Higher IPC with a slower clock.

Intel was notorious for pushing up the MHz war and neglecting to add any front side bus bandwidth, which is part of the reason why the P4 bombed so hard. It was a very powerful processor trying to drink the ocean with a straw.

You look at the likes of an Amiga:

Motorola 68000 for the main CPU typically running at 7MHz

a co-processor for graphics, called the Blitter chip – it moved data about in RAM and modified it.

a co-processor for sound that could transfer audio to/from disk by itself.

And you could technically argue that it was a multi-core board, processor might be pushing it a bit.

Technically it was a chipset with hardware accelerators for certain functions. The starting point for the system was a game console / arcade machine where instead of the Motorola CPU you had a cartridge containing the game logic on an ASIC to control the chipset. The idea was to take the CPU out of the machine to make it cheaper to manufacture the device itself, and then part of the cost would be pushed to the game cartridges which could have faster or slower CPUs depending on the game.

When the video game market crashed, they put the CPU back in and retconned it as a personal computer. As a result, you got the weird Amiga architecture with boundaries like fast and slow RAM where the game cartridge would have been.

Never knew that, but that makes so much sense.

But you look at a modern embedded CPU like the NXP LPC4370 which has an ARM M4 and two ARM M0 coprocessors, one of the M0’s job is to handle data transfer from the 80MSPS ADC or USB 2.0 HS, to free up the main CPU for more urgent tasks.

The Amiga, with hardware accelerators for certain functions, is amazingly similar in a lot of ways to how some multi-core CPUs operate: they allocate cores for specialised functions, though now those cores can also operate as independent processors running code.

There are no coprocessors in Amiga transferring audio directly from disk to sound output. Disk reading requires CPU. Paula is just a dumb pll Data Separator, and the rest is handled by CPU (trackdisk.device) and Blitter to speed up bit decoding. This was very “clever” in 1985 (a1000), questionable by 1987 (a500) and plain stupid in 1992 (a1200) when Commodore already fired anyone with a first clue about phase-locked loops and was unable to update Paula to make it work with HD floppies.

Would be interesting to see what the difference is with the 1770 (the controller that was used in the BBC Master, and the B+ iirc). I know the 1770 could do some things the 8271 couldn’t and vice-versa.

I seem to remember Watford Electronics made an expansion board for the BBC Micros that had a 1770 on it.

Ah, memories. BBC Micros were such nifty machines! Shedloads of expansion (Tube, 1MHz Bus, User Port, Printer Port, Econet) just ripe for experimentation. It was fairly easy to make your own ROMs for it too, although that was back in the days of EPROMs which were a pita to use.

What I recall is the 8271 was about to go obsolete when Acorn designed it in to the BBC (or rather kept it from the earlier System 3 as they already had the software), so it was really expensive and could be hard to get hold of if you wanted just the chip and not a package including a drive. The cost alone made it worthwhile for third parties to do 1770/1772 plug-in boards and write their own filing system for it.

For me it was Athlon 64 X2 4200+ in 2005, great processor for the time.

Core2duo came a year later… If I remember correctly, there was Pentium 4 dual core as well, but these were just bad.

Similar here. In 2005 I had an Athlon 64 X2 3800+ (bit of a mouthful), and it absolutely blitzed my son’s 3 GHz P4 at the time.

My, how times have changed. Back then there was very little software out there that could take proper advantage of the second core, and that includes Windows. I suspect that until CPUs with multiple cores actually started appearing on the PC market, Microsoft didn’t have much incentive to make Windows utilize them properly.

Made a floppy disk controller once (8272 is in the big 2×20 DIL chip in the lower left corner on board, the rest is a full Z80 system compatible with both CP/M and ZX Spectrum). The disk controller chip was an 8272, the biggest brother of the 8271 floppy disk controller.

https://hackaday.io/project/1411/gallery#83d0e26d5dcf011373b9db3375d4cf11

:o)

Interesting decapping, thanks for the info! Never looked under the hood, but I’m quite intrigued: why use a dual core there, or a CPU at all, when a hardware state machine would have been more than enough for the job?

¯\_(ツ)_/¯

According to the article linked in the post 8272 was no brother of 8271, but Intel licensing NEC D765 and manufacturing it using inferior process node.

I just scrapped out an old dual Pentium graphics workstation from the 1980s. Pretty high end for that time window.

I scored $2.00 USD at the recycle center. Back room now clear.

Pentium from the 1980s? Was it left by a time traveler?

Being a decade off isn’t too bad now is it?

At least I wouldn’t complain if I find a computer from the 30’s on my doorstep.

Though, I don’t complain when people leave computers from the 10’s on my doorstep for that matter….

https://www.youtube.com/watch?v=SkUdjlQy3rk

Late 1980s.. Duh.

Intel released Pentium in 1993, so even late 80’s is too early…

Don’t jump to conclusions too fast, maybe it’s JERRY from Sphere.

Fully working. Original keyboard and mouse. 1994yr.

Integrated scsi, sb16, amd pcnet, matrox millenium 2MB.

Floppy with 32bit pcmcia adapter.

Whoa! How did I not know the first gen Pentium was released in early 1993? If someone told me to guess, I would have said ’95 or ’96.

It was, but they quickly found a math bug in the CPU and had to do a recall in late 1994. The bug cost Intel almost a billion dollars in today’s money, but it put the Pentium in the headlines right around 1995.

Why did they call it the Pentium instead of the 586? Because 585.9999999999 didn’t sound very good.

How many Intel engineers does it take to change a light bulb? .99999999999

Hehe, what a time to be alive

big brain play or weaksauce troll

1 no pentiums in 1980s

2 shitty clone vintage pentium system sell >$100

3 vintage high end dual Pentium workstations easily fetch >$300

Of course, there were schemes for popular 8bit CPUs for dual processing. Definitely the Z80 and 6809, and I think the 6502 too. But it was mostly schematics, not much software published.

Not the same as dual-core, but they beat dual Pentium machines by decades.

And there were hardware printer spoolers and “smart” external floppy drives and Gimix had serial and parallel boards that included CPUs. Amateur packet radio was generally done by adding a box with a CPU.

And OSI’s floppy controller used a USART, people wondering at the time why they didn’t use a floppy controller IC

I believe the Fairlight CMI used 2 6800s on the same bus.