This year has been the year of home video conferencing. If you are really on the ball, you’ve managed to put some kind of green screen up so you can hide your mess and look as though you are in your posh Upper East Side office space. However, most consumer video conferencing software now has some way to guess what your background is and replace it, even without a green screen. The results, though, often leave something to be desired. A recent University of Washington paper outlines a new background matting procedure using machine learning and, as you can see in the video below, the results are quite good. There’s code on GitHub and even a Linux-based webcam filter.

The algorithm does require a shot of the background without you in it, which we imagine needs to be relatively static. From watching the video, it appears the acid test for this kind of software is spiky hair. There are several comparisons of definitely-not-bald people flipping their hair around, using this method and other background replacers such as the one in Zoom.
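To make the role of that clean background shot concrete, here is a minimal sketch of the classic approach that matting methods improve on: naive background differencing followed by alpha compositing. This is not the paper's neural network. The function names and the `threshold` value are our own assumptions for illustration, and images are plain nested lists of RGB tuples to keep the example dependency-free.

```python
# Naive background differencing, NOT the University of Washington method.
# Images are lists of rows; each pixel is an (r, g, b) tuple of 0-255 ints.

def naive_alpha(frame, background, threshold=30):
    """Hard matte: 1.0 where the frame differs from the clean background shot.

    `threshold` is an arbitrary illustrative value (sum of absolute
    per-channel differences), not something taken from the paper.
    """
    alpha = []
    for frame_row, bg_row in zip(frame, background):
        alpha_row = []
        for (r, g, b), (br, bgr, bb) in zip(frame_row, bg_row):
            diff = abs(r - br) + abs(g - bgr) + abs(b - bb)
            alpha_row.append(1.0 if diff > threshold else 0.0)
        alpha.append(alpha_row)
    return alpha


def composite(frame, new_background, alpha):
    """Standard compositing: out = alpha * foreground + (1 - alpha) * new background."""
    out = []
    for f_row, n_row, a_row in zip(frame, new_background, alpha):
        out.append([
            tuple(round(a * f + (1 - a) * n) for f, n in zip(f_px, n_px))
            for f_px, n_px, a in zip(f_row, n_row, a_row)
        ])
    return out


if __name__ == "__main__":
    background = [[(10, 10, 10), (10, 10, 10)]]    # empty room
    frame = [[(200, 50, 50), (12, 9, 10)]]         # person on the left pixel only
    beach = [[(0, 0, 255), (0, 0, 255)]]           # replacement background
    matte = naive_alpha(frame, background)
    print(matte)
    print(composite(frame, beach, matte))
```

A hard 0-or-1 matte like this is exactly why hair looks bad in simple systems: a strand that covers only part of a pixel needs a fractional alpha, and estimating that fraction well is the hard part.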

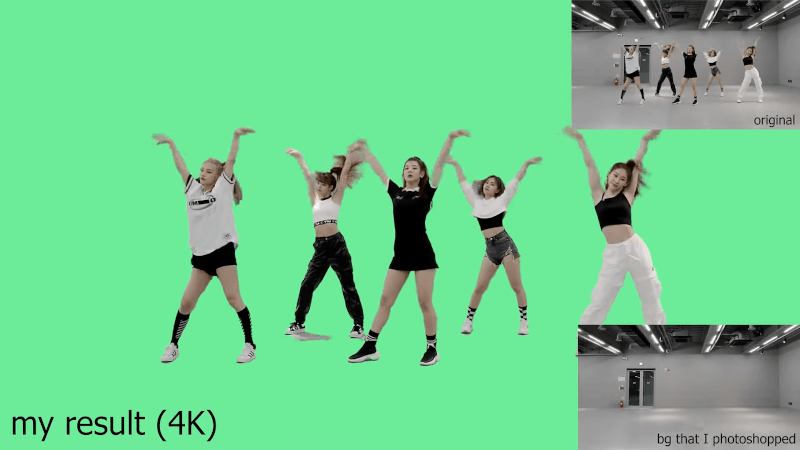

The quality of the results depends somewhat on how many pixels the filter can work with, so 4K video seemed to do better than lower resolutions. Even then, it isn’t perfect, but it does seem like it did a better job than some of the existing tools. If you try it yourself, we did read that you want to avoid blocking bright light, especially during background capture, and try to keep lighting constant. For prolonged use, it may be helpful to re-take the background image as light conditions drift.

As you might expect, you are going to need a big video card to do this in real-time. This is especially true since the algorithm seems to work better with 4K input. However, if you are doing video production in post, you can probably trade hardware for time.
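For a rough sense of why resolution matters so much here, this back-of-the-envelope sketch counts the pixels a filter has to touch each second. The 30 fps figure and the idea that cost grows roughly with pixel count are our assumptions, not numbers from the paper.

```python
# Rough pixel-throughput arithmetic. Assumes 30 fps and that per-frame
# work scales with pixel count, which is a simplification.

RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def pixels_per_second(width, height, fps=30):
    """Number of pixels that must be processed per second at a given frame rate."""
    return width * height * fps


if __name__ == "__main__":
    baseline = pixels_per_second(*RESOLUTIONS["1080p"])
    for name, (w, h) in RESOLUTIONS.items():
        rate = pixels_per_second(w, h)
        print(f"{name}: {rate:,} pixels/s ({rate / baseline:.2f}x 1080p)")
```

4K UHD carries four times the pixels of 1080p, so a filter that only just keeps up at 1080p would need roughly four times the throughput, or four times as long per frame when rendering offline.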

We’ve seen a similar effort with DeepBackSub. Once you have this working, you can build a mirror to improve your video conference eye contact.

So, if it is using a background-only frame, it is working exactly like many other systems have done for decades. It’s not even chroma keying, then. I’m wondering where the novelty is.

It works faster, can work in real time, and works better with hair, which is usually a pain to get right…

Still needs tweaking to deal with shadows, see foot of left girl, back row.

Did you not watch the video?

Cool to be able to do this on your own projects. I know this feature from Microsoft Teams. The only downside is the “halo” around people and “non human objects” disappearing.

Example: Try to show your phone or cool coffee mug.

Forget hair. Decades ago there was a promotional photo of the ultimate test by the “Ultimatte” chroma key system: pouring water from a pitcher into a glass, ALL transparent with the background through them. Can’t find the photo online, but there’s your challenge for background replacement.

Chroma keying works in real time, and simple background subtraction does as well. I still don’t see huge advantages over regular technology. People tout AI as alien or magic, while the gains here seem pretty dull.

So [Al Williams] is a K-pop fan. Who would have guessed?

I’m in the business and use a high-end green screen for many hours every day (BBC-patented Reflecmedia Chromatte). The system in the video seems to need about as much tweaking as a well-lit cheap greenscreen drape… It doesn’t seem dramatically better than standard-issue cheap greenscreen results. High-end work is moving away from green/blue walls or drapes and toward very large high-resolution video monitors that also provide perfect lighting for the talent in the shot. Maybe the “New AI” system would be very useful for non-professionals, like on Zoom calls and TikTok vids?