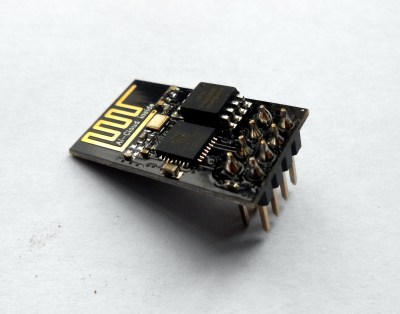

We are extraordinarily fortunate to live at a time in which hardware with astounding capabilities can be had for only a few dollars. Systems that would once have taken an expensive pile of chips and discretes along with months of development time to assemble are now integrated onto commodity silicon. Whether it is a Linux-capable system-on-chip or a microcontroller, such peripherals as WiFi, GPUs, Bluetooth, or USB stacks now come as part of the chip, just another software library rather than a ton of extra hardware.

Beware The Blob!

If there is a price to be paid for this convenience, it comes in the form of the blob: a piece of pre-compiled binary software that does the hard work of talking to the hardware and presents a unified API to the software above it. Whether you’re talking to the ESP32 WiFi through an Arduino library or booting a Raspberry Pi with a Linux distribution, your own code may be available or even open source, but the blob it relies upon to work is closed source and proprietary. This presents a challenge not only to Software Libre enthusiasts in search of a truly open-source computer, but also to the rest of us, because we are left reliant upon the hardware manufacturer’s willingness to update and patch their blobs.

An open-source advocate would say that the solution is easy: the manufacturers should simply make their blobs open source. And it’s true that were all blobs open source, the Software Libre crowd would be happy, and that openness would ease the creation of updates and patches. So why don’t manufacturers release their blobs as open source? In some cases it may well be a closed-source mindset of never releasing anything to the world in order to protect company intellectual property, but to leave it at that is not a full answer. To understand why, it’s worth looking at how our multifunctional chips are made.

Chips Aren’t Made Like They Used To Be

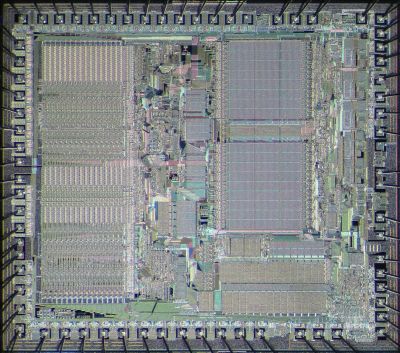

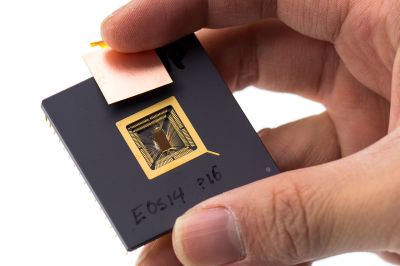

Decades ago, a new microcomputer and its range of peripheral chips would have been designed in-house in their entirety by a team of engineers employed by the company. Chips such as Intel’s 8086 or Motorola’s 68000 were produced in this way, and in many cases would even have been put on silicon by in-house chip fabs. Today’s semiconductor industry is much more fragmented, and works in an entirely different fashion. While some large companies might still do the whole job in-house, it’s far more likely that they will instead buy in the components of their new products as pieces of IP, delivered as designs written in VHDL, Verilog, or similar hardware description languages. It’s entirely possible to design a complete SoC in this way without owning any of the IP yourself, and companies such as ARM have made themselves into dominant industry players by selling their cores to chip developers. Such a chip assembled from off-the-shelf IP can then be sent off to a third-party chip fab for production, meaning that a complete product line of chips can be marketed without direct ownership of either the IP or the factory.

A chip assembled from multiple pieces of commercial IP will of course be subject to all of the individual licensing agreements for its constituent parts. Individual IP owners will have many reasons for inserting restrictive clauses in their agreements, but at the most basic level they are anxious not to reveal any trade secrets to their competitors in a cut-throat industry. It’s thus a given that the blob controlling a peripheral on that chip will be bound by a licensing clause restricting the dissemination of information about its hardware. The blob remains a closed-source precompiled binary, and no amount of railing at the chipmaker about open source will change that. Even chips that contain open-source components such as a RISC-V core can still contain closed peripheral IP, as is the case, for example, with the Bouffalo Lab BL602 WiFi SoC.

It’s All In The Updates

So open-source advocates have an answer to why blobs exist and why they won’t be going away any time soon; while it might not be to everybody’s taste, it is at least a valid one. But the problem with blobs doesn’t stop there, and perhaps our community needs to think a little about it too, because even if you have no problem with your hardware requiring a blob, its presence can still come back to bite you. The reason may have as much to do with the open-source world as it does with the IP holder or the manufacturer.

If you own a Raspberry Pi, you may well have updated your copy of Raspbian or Raspberry Pi OS several times with new versions that included major updates to their Linux kernel. The Raspberry Pi’s Broadcom SoC is just like all the other chips in that it comes with a blob, and when they release a new kernel it will be in a firmware package customised for use with that blob and will also come with any appropriate blob updates. The Raspberry Pi folks will have the sources to the closed-source bits but they are prevented from releasing them by their agreement with Broadcom that granted them blob source access. Thus the Raspberry Pi has up-to-date software, but it’s an uneasy mix of an open-source operating system that relies on a closed-source component to work.

Now compare the Raspberry Pi to a lesser-known single-board computer, say a ten-dollar board with a name that follows the {SomeFruit}Pi naming scheme. The off-brand board may boast a more exciting spec than the Raspberry Pi, but if you examine the operating system that comes with it you’ll find a very similar customised kernel that relies on a blob. The difference becomes apparent as you continue to use it: over time no new kernels may be released, and after a while you will be stuck on an ancient kernel version with no prospect of an upgrade.

Even if you don’t have a no-name board, you’ll recognise the same problem if you have an Android phone. It’s a powerful Linux-capable computer running a customised Linux distro, but after a few years your chance of a new Android version is very slim indeed, and you have next to zero chance of installing another Linux distribution on it without tricks such as a userland chroot running on whatever stale old Android kernel it shipped with.

Both these examples have the blob at the root of their problems, in that both come from manufacturers with no interest in releasing new custom kernels for their blobs, so both slowly drift into obsolescence.

What Do You Think Open Source Is For?

In asking what can be done to alleviate this situation, it’s worth considering what role open-source software can play. We have established that semiconductor industry IP licensing leaves essentially zero chance of manufacturers making their blob code open source, but how can the open-source world react to that? It comes down to a question of open-source philosophy that is probably reflected in licence choice: does open source exist to make software that works alongside closed-source components, or to extend open source to all corners of computing and exclude closed source? Linux veers toward the latter, as the interface the blob must talk to can change with each kernel version, forcing a blob developer to update or let their distribution age into irrelevance. The Raspberry Pi developers put in that effort because ongoing software support is a key feature of their products, but it is not a priority for many hardware vendors. If other operating systems such as Microsoft Windows can retain some low-level driver compatibility across their versions, why can’t their open-source alternatives?
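To make the compatibility question concrete, here is a toy model in Python. It is not a description of how Linux or Windows actually checks drivers; it assumes a hypothetical driver interface identified by a (major, minor) version number and compares two loading policies. Under an exact-match policy, any interface change orphans a blob that is never rebuilt; under a stable-ABI policy, the blob keeps loading as long as the major version is unchanged. The names `Blob`, `loads_strict`, and `loads_stable` are purely illustrative.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical interface version: (major, minor). Not a real kernel version number.
Version = Tuple[int, int]


@dataclass(frozen=True)
class Blob:
    name: str
    built_against: Version  # interface version the vendor compiled the blob for


def loads_strict(host_abi: Version, blob: Blob) -> bool:
    """Exact-match policy: any change to the interface orphans the blob."""
    return blob.built_against == host_abi


def loads_stable(host_abi: Version, blob: Blob) -> bool:
    """Stable-ABI policy: same major version, and the host is at least as new."""
    host_major, host_minor = host_abi
    blob_major, blob_minor = blob.built_against
    return blob_major == host_major and blob_minor <= host_minor


if __name__ == "__main__":
    # A hypothetical vendor blob that is never rebuilt after the board ships.
    wifi = Blob("vendor_wifi", (3, 0))
    for host in [(3, 0), (3, 2), (4, 0)]:
        print(host, "strict:", loads_strict(host, wifi), "stable:", loads_stable(host, wifi))
```

Under the strict policy the blob stops loading at the first interface change; under the stable policy it survives minor revisions, but the host must then keep every older minor-version behaviour working, which is exactly the maintenance cost of a stable ABI.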

There is of course another potential outcome. Semiconductor manufacturers prefer things that cost them less money, and as can be seen with the current slow appearance of RISC-V cores, they are showing signs of becoming willing to dip their toe in the water when it comes to open-source hardware chip components. A quick look at OpenCores or LibreCores will reveal a wealth of parts that can be freely added to designs, so there is at least a possibility of an SoC with no proprietary IP that would need a blob. It will take more than the mere existence of such resources to persuade a manufacturer to take the plunge, though. For a fully open-source chip to be a practical proposition, there must not only be components for all on-chip functions, but they must also be reliable enough for production. Even with the best of intentions, those two things may take a little while to happen.

I hope this article gives some food for thought as to the role of the blob on modern chips and its relationship with open-source software. The issue goes beyond a simple demand that manufacturers release their blob sources; if the blob situation can’t be changed, should the open-source world adapt itself to deal with it? As always, we’d like to hear your views in the comments.

It’s not just the IP for the silicon that has restrictions; some of the standards themselves require association membership and non-disclosure to be allowed to use them, maybe even per-unit license fees. Therefore the frequent whine of “I want a fully open source board with ALL the modern interface standards, no blobs” ain’t gonna happen. Maybe you can do an open-core board with VGA, RS-232, USB 1.1, 10 Mbit Ethernet annnd not a lot else, but as soon as you want HDMI, PCIe, SATA, SDHC, etc., it ain’t gonna happen: all blobs.

There are reasons for this: some technologies have multiple associated patents, and the interested parties in the association have pooled those patents for the purpose of implementing the technology, so a per-device fee of 25 cents or so covers all the patent licensing required to use the standard.

So what are you going to do if you want modern interfaces on blob-free boards? Pioneer new interface standards that a) are 100% open and b) avoid even smelling like they use any currently valid patent.

Or ignore entirely the fact that something is patented and proceed full steam ahead. What are they going to do? Take all your money. ‘A’ would be nice, but there’s always inertia.

I think a few of the standards you listed have standards bodies but no license fees or hidden parts of the standards, just a membership cost if you want any say in future development. But either way it’s a mess that doesn’t lend itself to useful, fully open devices.

It would be interesting to see whether an open-source HDMI competitor could ever catch on, actually appearing on displays so you could choose to use it.

What are you talking about? SATA, USB 3, and PCIe are open standards. And while HDMI is not, it would be trivial to implement it through DVI.

But things like adaptive refresh over HDMI are secret, and you must sign an NDA to read about them.

Then death to HDMI? What about the other standards? DisplayPort?

DVI (or even VGA in some cases) would be fine by me.

I fully agree with what you say, but unfortunately, Joe Average buys said monitor with HDMI, and before too long, all *you* can buy is a monitor with only HDMI. We are being screwed by the sheep of the world.

MIPI stuff (for cameras and embedded displays) is also under license, though many times the people doing the integration don’t seem to have to pay because, e.g., the chip vendor and panel vendor already have. Either that, or there are a lot of people out there skipping out on paying MIPI: I’ve seen a lot of MIPI-based devices but don’t know anyone outside the IC business who is a member. The biggest impact is probably in blocking open soft (FPGA) designs implementing the protocols, which is a shame, since the relevant bridge chips are technically available but you usually need a lot of buying power to get your hands on them in bare IC form.

Hardware is not easy, or else everyone would already be making it for themselves. The low-hanging-fruit days are pretty much gone. One can’t hope to do anything without standing on the shoulders of others. That’s why there are things like standards. Getting a standard accepted by others is not an easy task (been there, done that). A lot of engineering goes into a good standard: figuring out what can be manufactured, how things interoperate, forward and backward compatibility. All of those activities and the administration cost money, so they aren’t “free”.

Hardware has costs associated with the fabrication and prototyping stages. Software has a lousy track record on forward/backward compatibility and on caring about standards. The same open-source software model should not be applied.

Governments also make standards that cost a lot of money, but are released to the public for “free” afterwards.

The key is to finance the standardization process in another way, that does not require restriction of access.

It’s quite similar to the question of open access to research papers.

DisplayPort is an open standard, and more capable than HDMI.

Death to HDMI then! :D

Except for the annoying feature where, unlike with DVI/HDMI, the host computer does not communicate with the EDID chip directly through the cable, because the data is now transmitted through a generic multi-purpose “AUX” channel that requires active participation from both devices. So when the display powers down, the operating system loses information about the monitor and has to assume it was disconnected; it has no way of knowing whether the monitor is still there.

This then results in annoying side effects, like having all your windows moved to a second monitor so they’re not stuck on a non-existent desktop. To “fix” that, display manufacturers started adding the option (or default) to leave the logic board on even when you turn the monitor off.

There are also instances where government regulation (say FCC regulations for the use of various radio frequencies) require things to be locked down so they can’t be modified in ways that could cause problems.

For example, it’s a good bet that a totally open-source cellular radio would violate all sorts of laws.

I don’t think that’s an accurate statement. Manufacturers have been using the excuse of “we had to do it to get it certified” to lock down their hardware. But I challenge you to find where the FCC requires that.

I wonder about that too, because if you lie to the software about which country you are in, the cellular or WiFi radios configure themselves for alternate band plans. (Compare Japanese WiFi channels with US ones, for example.)

Maybe Linux would be better off with more stable ABIs. Why does a kernel have to be customized for a blob? Why can’t the blob be customized to the kernel, and the kernel be stable enough to keep using the same blob for the life of the hardware?

Some people seem to think Linux purposely makes changes all the time specifically TO throw off proprietary blobs that they’d rather not exist at all, and probably especially to throw off unmaintained blobs.

If that were never a thing, could we have had a one size fits all devices Android image like Windows and Linux have always had, running on most any machine?

“one size fits all devices Android image”

When was that though? Refresh my memory.

That was his point…

Ohhhh, one of them thar re-heretical questions, like does the pope crap in the woods?

If your idea is to turn the blob into the driver, it is a bad idea. Just look at the video drivers in Linux: they started with FBdev, a very simple and generic graphical framebuffer. Then they moved to DRM (nothing to do with digital rights management), which means that devices that don’t support DRM can only use the old FBdev (which, by the way, is 100% obsolete today). Inside DRM you have the old DRI, DRI2, and recently DRI3: three different interfaces.

Doing what you want would mean keeping four (or even more, whenever the developers decide to change the internal API again to fix whatever problem they discover with new hardware with new characteristics) different APIs alive, because the blob can use any of them, and you must make sure that all of them work fine and that you can do the same things with all of them (which is problematic, because you are implementing a new interface precisely because you can’t do something with the old one)…

Bad idea.

It’s also true that, in some cases and in some people’s minds, this is a design feature: it strongly incentivizes upstreaming driver development. It’s not strictly a technical decision; it’s also social and political. (Though it certainly does have technical advantages. Stable APIs/ABIs are a lot of work and a lot easier to break than to maintain, seemingly exponentially so as their size increases.)

It’s a terrible policy, because anyone who makes hardware then has to entrust driver support to third parties who have no interest whatsoever in providing that support.

If you use some standard/generic chip in the product, you get the minimum support for that, and nothing specific to your device because the upstream is not interested in the peculiarities of your product. For example, if you have a printer, the upstream is happy to say “it prints”, and doesn’t give a hoot about your paper configurations, color calibrations, the integrated scanner… etc.

Just to be clear: there *are* ~15 USD Pi boards which don’t use any kind of blobs for the SoC (external modules like WiFi/BT excluded) when using mainline U-Boot and Linux. Such boards are based on Allwinner and Rockchip SoCs (that I know of). Such a complete open-source stack supports most features present in the SoC, including HDMI, DRAM, VPU, and GPU. Most of the time, standard cores are used in such SoCs, so there is a good chance that a driver already exists in Linux and only a few platform-specific bits must be added. For example, a commonly used HDMI controller is the Synopsys DesignWare HDMI, which is used at least on Allwinner, i.MX, Rockchip, R-Car, and Amlogic SoCs, and a completely open-source driver has existed for it for quite some time now. But it’s true that, at least in Allwinner’s case, that’s mostly community work; a lot of reverse engineering was done to get to this point.

I see vendor-supplied blobs as a ticking time bomb: you have no idea when, but someday, with constant bit-rot, they will become a gaping security hole that can only be fixed if the vendor feels like providing long-term support (a loss) or pushing a new product (additional profit).

I can’t BELIEVE nobody here has mentioned the Libre SoC project! Or Raptor’s blob-free workstations.

https://libre-soc.org/

It’s basically the perfect open-source chip. Exactly what people want.

You can bet your @$$ I’ll be in first in line to buy one.

“If other operating systems such as Microsoft Windows can retain some low-level driver compatibility across their versions, why can’t their open-source alternatives?”

This is one of the key things that needs to happen for “Linux on the Desktop” to really take off. But it’s not just the Linux kernel… Having to deal with things like graphics drivers being required to match very specific kernel, Xorg, etc. versions is a huge pain in the ass.

There is Ndiswrapper for example. Ndiswrapper is a shim that allows Linux to use Windows drivers for wireless card support. Similar shims could exist for almost any other part, except for (probably) a high-performance video card.

I remember using that like 15 years ago trying to get my crappy HP laptop online. Please don’t speak of this ever again.

If you use AMD (assuming we’re talking x86 desktop here) or Intel, this headache is long gone. The official driver is open source, high quality, and upstreamed. Only the Nvidia driver still has these problems due to being a blob. Graphics on Linux are way better than what they used to be 10-15 years ago, it’s awesome. (And I do open-source VR/XR development so I end up using some pretty leading/bleeding edge driver features. It’s pretty cool that I can report issues and feature requests on the Intel and AMD drivers by filing issues on Mesa on freedesktop GitLab.)

There are a few out-of-tree drivers I still end up compiling per kernel (mainly for super cheap USB WiFi adapters based on stuff like the RTL8188FU, and I used to do it for better it87 embedded controller drivers), but most of the time dkms works fine to build them automatically once set up, and none of them will keep you out of your GUI. I do wish ARM vendor kernels were all as easy.

Whoa, that Raspberry Pi is not just a Raspberry Pi 1, but also one of the first batch of 10k! Only that very first batch has the JTAG header mounted. Someone forgot to tag it as “do not mount” for the first batch.

Well spotted. Yes, it was ordered on Feb 29th 2012, and arrived in May 2012. It’s not one of the first 1000 with the rework, but the following batch that were made in China.

“If other operating systems such as Microsoft Windows can retain some low-level driver compatibility across their versions, why can’t their open-source alternatives?”

Windows 95 (don’t know about 98 or Me) retained some compatibility with 16 bit drivers. Its printing system was fully 16 bit compatible, to the point where it would work with Windows 3.1x printer drivers.

After build 1607, Windows 10 only accepts drivers signed with the newest method introduced in Windows 8.1. Some Windows 7 drivers have been signed with it and will work in 8.x and 10, but some 8.x and 10 drivers were not signed with the latest method and will not install on builds later than 1607.

What happens is the driver installer will run and finish but Windows 10 will silently block all copying of the files to where they need to go, and Registry additions also get blocked. If you can extract the driver files and use Device Manager to attempt a manual install, it will lie to you with a BS claim of being unable to find the file.

All that in the name of “security”. Has there ever been an “in the wild” security breach of any PC running Windows, using a hacked video driver, or any other driver? IMHO that change by Microsoft is just a ploy to push new PC sales.

There is a workaround. Install Build 1607 with all the “insecure” old drivers required, then update to 1909, then 20H2, or 2004 if your PC for some reason isn’t sympatico yet with 20H2. THEN MAKE A DRIVE IMAGE BACKUP.

Why the pitstop at 1909? Because 2004 will only update from 1809 or 1909. A fact the updater will not tell you when you run it on any build older than 1809. It will go through doing the install then tell you it has to roll it all back (wasting up to several hours of your time), rather than doing a version check as the very first step. Nope. It lies to you saying all is good to go for the update. Then once you’ve updated to 1909 the 2004 update may again say all is good, go through the install, and find that “PC Settings” or some other issue prevents it from installing again.

There are lots of security issues with graphics drivers. I haven’t heard of publicized uses of security flaws in desktop drivers, but in mobile the GPU situation is notoriously buggy and any given exploit is likely to use a GPU driver bug.

In 20 years we’ll be wondering how we got by with just this stuff, and we’ll joke about using an ESP32 instead of 555s.

I think we should make “blob” an acronym.

e.g. Blocked Library Of Binary

Base Loaded Obscure Binary

Boycott Losers Outta Business

Others?

It is already an acronym: Binary Large OBject…

But I like your proposals.

I think that a lot of Chinese blobs exist to hide the fact that they contain stolen IP.

I get a smile reading the G. Skoll site. Not that I think they’re doing anything bad. Just that a country that historically has had a rather lax policy on IP is now concerned about piracy and counterfeits. Sucks to grow up I guess.

It sucks buying a product on ebay that’s “new”. You scratch off the validation code, type it in, and see that it’s been validated 10 million times already.

The people making these chips get to do what they want; they’re their chips. You can use them or not, or make your own. You won’t always get everything you want just handed to you for free. Tight control over the internals protects the company and its product. If anybody can go in and customize, the company no longer knows what its product does, and neither do the other people who got the customized versions. Sometimes there are trade-offs: the chips are configured to perform best with select peripherals, but some features aren’t comparable, or as reliable, and get locked. They sell several versions of the same hardware, just in different configurations and at different prices. Anybody who wants to reconfigure the chips is likely looking to save some bucks, and doesn’t care so much about how well the chip performs.

If they sell their chips at a loss intending to make it up with restricting access to a blob they write, that is extortion, and they deserve to have their stuff bypassed. If it were an item of produce, it would be called ‘dumping’.

Hardware manufacturers should be selling their hardware at what it costs plus reasonable profit.

“Post-sale monetization” is a sad reality of the modern market. Smart TVs aren’t everywhere because there’s such huge demand for them, it’s because they can sell them cheaper and make up the money on referral payments, ads, data sales, etc. (This is even the case for non-physical purchases: Robinhood, and possibly all major brokerage firms with consumer businesses, make at least some of their money on your “free” trades by payment for routing your trades through people who can make money from knowing what you’re buying and selling.) It really sucks not to be able to just buy something outright and not end up being the product at some point in the transaction.

“A chip assembled from multiple pieces of commercial IP will of course be subject to all of the individual licensing agreements for its constituent parts”

Well said! For even a medium complex SoC, there’s no way to disentangle the web of licenses binding the chipmaker.

If you have a hard need to avoid blobs, perhaps for transparency or security reasons, then currently the only way forward is to define one’s own silicon. OpenTitan does this, and, thankfully, it’s being developed under an Apache License.

When it comes to wireless aren’t there also issues with open source and getting FCC or equivalent certifications? I.e. those agencies across the globe not being fond of radios that can easily be hacked to emit at frequencies other than the ones they are licensed for.

Of course nobody will release their blob sources voluntarily. That’s why they should be forced to do so.

Trade secrets are economically damaging; they prevent building one innovation on top of another, they often interfere with users who want to make the most efficient use of the technology they infect, and they severely damage the functioning of markets by creating asymmetries of information. Trade secrets are environmentally damaging; in addition to their general efficiency problems, they often prevent the reuse of old equipment. In the case of computers, trade secrets are damaging to security; they reduce incentives to avoid bugs, and they interfere with the response to bugs, but they don’t make it hard enough to discover bugs that you can rely on those bugs not getting discovered by motivated bad guys. Trade secrets not infrequently conceal malfeasance. Trade secrets interfere with human dignity by preventing people from communicating, sharing information, learning, and building on top of what they have learned.

Any attempt to keep a trade secret should therefore be banned as a matter of public policy. If you put something on the market, you should be required to disclose *everything*, or at least everything you have written down or on a computer, about what it is, how it works, how you make it, and for that matter how you sell it. There should also be no positive legal protection for trade secrets, agreements to keep them should be unenforceable, and retaliation for their disclosure should be illegal.

Yes, I know this will not happen.

No, those of you who will now go ballistic and start to complain that business could not exist without trade secrets do not, in fact, know what you are talking about. It’s perfectly possible to adapt to doing business in a world in which trade secrets don’t exist.

No, you do not have some kind of natural human right to keep secrets about technology, or to “own” ideas of any kind in any way. That’s just an insane idea. And a corporation *definitely* doesn’t have any such natural rights.

True, disclosing your secrets puts you at a competitive disadvantage… but if your competitors don’t have any secrets, either, there’s still a level playing field. This does, of course, imply that the whole thing would have to be done by international cooperation, which is one of the reasons it will never happen even though it definitely should.

No, disclosure laws would not be unenforceable. Making and selling things in significant volume is a very visible activity that’s easily regulated and *is* already heavily regulated. Given worldwide agreement about the laws, on the same order as the present worldwide agreement about the far less justifiable drug laws, you could easily enforce disclosure laws more than well enough to make the system work. There would be some details to work out. They’re not any harder than the details of lots of other regulations that work just fine.

If you want to “own” some piece of technology, there are patents for that. That is, of course, assuming that there’s any reason that the public should in fact let anybody own technology by any means at all. The actual evidence that patents create more innovation in practice is pretty thin at best, and the idea that everything should be ownable is a matter of religious faith, not economic fact.

>It’s perfectly possible to adapt to doing business in a world in which trade secrets don’t exist.

It’s one thing to say so…

Easiest when one has nothing to protect themselves.

The most appealing SoC in the pipeline at the moment has to be https://libre-soc.org/

kernel (supervisor) space will be entirely PowerISA (OpenPower 64)

Userspace RISC-V

UC Berkeley politics (and money) mean that they cannot really do it any other way if they want to use RISC-V and integrate high-performance graphics (ref: https://www.crowdsupply.com/libre-risc-v/m-class/updates/nlnet-grants-approved-power-isa-under-consideration and this is interesting as well: https://www.crowdsupply.com/libre-risc-v/m-class/updates/openpower-eula-released-fosdem-and-more ).

The main SOC portion of Libre-SOC is a quad-core open source SOC with a GPU, VPU, and open source VLSI design cells. Open source down to VLSI Cells.

Very well put, and so true. We are slowing ourselves as humanity down by “protecting innovation” etc. I’m sure the evil aliens will also be very happy to steal all that very valuable paper/digital money!

This article gets a few things slightly wrong: while blobs can be an impediment to open source, they are *not* the reason for lack of kernel updates on affected machines. After all, you can boot the latest Linux kernel on x86-64 CPUs that have blobs too, even if Intel doesn’t specifically support that particular kernel version on that machine.

The reason for the kernel version limitations is most non-x86 systems lack the PC ACPI standard that makes hardware components discoverable by host kernels in a standard way, regardless of whether the device code is open or closed source. ARM has something similar called the SBSA (and some server ARM motherboards support ACPI, IIRC), but those are only just very recently becoming mainstream, and even then only in the server market.

The other option, as you alluded to, would be to simply have every OEM in the hardware stack upstream their drivers, but as you said that’s unlikely.

Now, *all of that said*, for the most part binary blobs are an absolute non-issue unless you’re super anal about privacy and security or under state actor attack *AND* are either technically qualified to audit device code or have another entity you trust to do so. Or, if you do hardware research. And even then, the computing industry at large’s solution to that challenge has been effectively black box devices that are highly tamper and tinker resistant, e.g. iPhones, Macs, Surfaces, and Secured-Core PCs. You still don’t get to see the code, but you can have a fair amount of confidence it hasn’t been compromised.

But yeah, this is a non-issue for vast majority of (even FLOSS OS) users.

This is a great article and explains a lot about the industry.

There is another reason for closed blobs, the same reason that datasheets for more complex chips are often available only under NDA: in these complex chips there are always things to hide:

Errors and bugs, which are secretly covered up in the blob by workarounds. Sometimes these workarounds could even lessen performance below the theoretical values, but of course strongly depending on the application.

Internal trade secrets, as the way the hardware is programmed and used reveals details about its internal structure.