IBM has come up with an automatic debating system called Project Debater that researches a topic, presents an argument, listens to a human rebuttal and formulates its own rebuttal. But does it pass the Turing test? Or does the Turing test matter anymore?

The Turing test was first introduced in 1950, often cited as year one for AI research. It asks, “Can machines think?” Today we’re more interested in machines that can intelligently make restaurant recommendations, drive our car along the tedious highway to and from work, or identify the surprising-looking flower we just stumbled upon. These all fit the definition of AI as a machine that can perform a task normally requiring the intelligence of a human. Though as you’ll see below, Turing’s test wasn’t even for intelligence or even for thinking, but rather to determine a test subject’s sex.

The Imitation Game

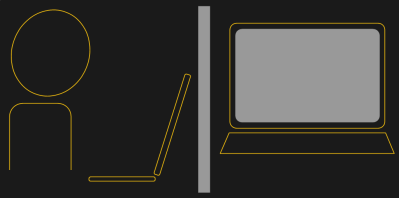

The Turing test as we know it today is to see if a machine can fool someone into thinking that it’s a human. It involves an interrogator and a machine with the machine hidden from the interrogator. The interrogator asks questions of the machine using only keyboard and screen. The purpose of the interrogator’s questions is to help him decide if he’s talking to a machine or a human. If he can’t tell then the machine passes the Turing test.

Often the test is done with a number of interrogators and the measure of success is the percentage of interrogators who can’t tell. In one example, to give the machine an advantage, the test was to tell if it was a machine or a 13-year-old Ukrainian boy. The young age excused much of the strangeness in its conversation. It fooled 33% of the interrogators.

Naturally Turing didn’t call his test “the Turing test”. Instead he called it the imitation game, since the goal was to imitate a human. In Turing’s paper, he gives two versions of the test. The first involves three people, the interrogator, a man and a woman. The man and woman sit in a separate room from the interrogator and the communication at Turing’s time was ideally via teleprinter. The goal is for the interrogator to guess who is male and who is female. The man’s goal is to fool the interrogator into making the wrong decision and the woman’s is to help him make the right one.

The second test in Turing’s paper replaces the woman with a machine but the machine is now the deceiver and the man tries to help the interrogator make the right decision. The interrogator still tries to guess who is male and who is female.

But don’t let that goal fool you. The real purpose of the game was as a replacement for his question of “Can a machine think?” If the game was successful then Turing figured that his question would have been answered. Today, we’re both more sophisticated about what constitutes “thinking” and “intelligence”, and we’re also content with the machine displaying intelligent behavior, whether or not it’s “thinking”. To unpack all this, let’s put IBM’s recent Project Debater under the microscope.

The Great Debater

IBM’s Project Debater is an example of what we’d call a composite AI as opposed to a narrow AI. An example of narrow AI would be to present an image to a neural network and the neural network would label objects in that image, a narrowly defined task. A composite AI, however, performs a more complex task requiring a number of steps, much more akin to a human brain.

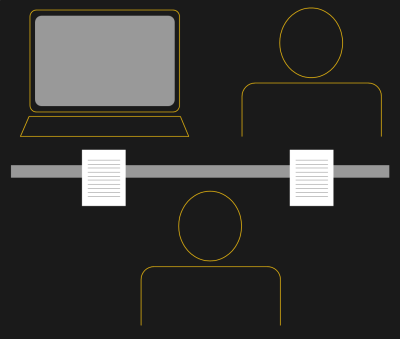

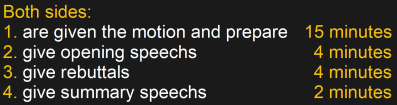

Project Debater is first given the motion to be argued. You can read the paper on IBM’s webpage for the details of what it does next but basically it spends 15 minutes researching and formulating a 4-minute opening speech supporting one side of the motion. It also converts the speech to natural language and delivers it to an audience. During those initial 15 minutes, it also compiles leads for the opposing argument and formulates responses. This is in preparation for its later rebuttal. It then listens to its opponent’s rebuttal, converting it to text using IBM’s own Watson speech-to-text. It analyzes the text and, in combination with the responses it had previously formulated, comes up with its own 4-minute rebuttal. It converts that to speech and ends with a summary 2-minute speech.

All of those steps, some of them considered narrow AI, add up to a composite AI. The whole is done with neural networks along with conventional data mining, processing, and analysis.
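To make the "composite of narrow steps" idea concrete, here is a minimal sketch in Python. It is not IBM's architecture: the stage names, the `CompositeAI` class, and the placeholder functions are all hypothetical stand-ins, and the motion string is just an example. The only point is that each stage does one narrow job and the composite simply chains them.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Stage:
    """One narrow task: text in, text out. (Hypothetical structure.)"""
    name: str
    run: Callable[[str], str]


@dataclass
class CompositeAI:
    """Chains narrow stages into one larger behavior."""
    stages: List[Stage]
    log: List[str] = field(default_factory=list)

    def run(self, motion: str) -> str:
        data = motion
        for stage in self.stages:
            data = stage.run(data)       # output of one stage feeds the next
            self.log.append(stage.name)  # record which stages ran, in order
        return data


# Placeholder stages; a real system would put a model behind each of these.
def research(motion: str) -> str:
    return f"evidence for: {motion}"


def write_speech(evidence: str) -> str:
    return f"opening speech using {evidence}"


def text_to_speech(text: str) -> str:
    return f"<audio>{text}</audio>"


debater = CompositeAI([
    Stage("research", research),
    Stage("write_speech", write_speech),
    Stage("text_to_speech", text_to_speech),
])
result = debater.run("an example motion")
```

Swapping any one placeholder for a real model would not change the shape of the pipeline, which is roughly what "composite" means here.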

The following video is of a live debate between Project Debater and Harish Natarajan, world record holder for the number of debate competitions won. Judge for yourself how well it works.

Does Project Debater pass the Turing test? It didn’t take the formal test. However, you can judge for yourself by imagining reading a transcript of what Project Debater had to say. Could you tell whether it was produced by a machine or a human? If you could mistake it for a human then it may pass the Turing test. It also responds to the human debater’s argument, similar to answering questions in the Turing test.

Keep in mind though that Project Debater had 15 minutes to prepare for the opening speech and no numbers are given on how long it took to come up with the other speeches, so if time-to-answer is a factor then it may lose there. But does it matter?

Does The Turing Test Matter?

Does it matter if any of today’s AIs can pass the Turing test? That’s most often not the goal. Most AIs end up as marketed products, even the ones that don’t start out that way. After all, eventually someone has to pay for the research. As long as they do the job then it doesn’t matter.

IBM’s goal for Project Debater is to produce persuasive arguments and make well informed decisions free of personal bias, a useful tool to sell to businesses and governments. Tesla’s goal for its AI is to drive vehicles. Chatbots abound for handling specific phone and online requests. All of them do something normally requiring the intelligence of a human with varying degrees of success. The test that matters then is whether or not they do their tasks well enough for people to pay for them.

Maybe asking if a machine can think, or even if it can pass for a human, isn’t really relevant. The ways we’re using them require only that they can complete their tasks. Sometimes this can require “human-like” behavior, but most often not. If we’re not using AI to trick people anyway, is the Turing test still relevant?

I still think the big problem with the Turing test is the basic idea that a human is the right tester. Humans are notoriously awful at constructing tests for themselves. We construct tests that ignore a huge amount of what we actually have learned. It’s like the famous example of asking a human to give a string of random numbers, or the apocryphal “physicist with a barometer” question. I feel like it’s a similar situation here.

I mean, drop an AI into some reality TV show and I get the feeling you’d be able to tell the difference within minutes.

Byte magazine once had an article (humorous) that said in effect, machines will never think like humans until they learn Artificial Stupidity.

To quote Terry Pratchett:

“Real stupidity beats artificial intelligence every time”.

“None of us is as dumb as all of us”, that is AI in a nutshell.

It’s only half as smart as the dumbest programmer on the team.

Remember, “Garbage In = Garbage Out”.

IQ of a collective is the lowest IQ in the collective, divided by the number of people in the collective.

The law of large bureaucracies. With no profit incentive, government ones are even worse:

Price’s Law – “The square root of the number of people in an organization do 50% of the work. As an organization grows, incompetence grows exponentially and competence grows linearly.” – Derek John de Solla Price, physicist and information scientist, credited as the father of scientometrics

The thing is, a lot of times human stupidity is actually just ridiculous pattern-matching ability gone wrong, and so honestly I’d say most AIs are well on the way to artificial stupidity because of the way we program them.

I mean, I can’t count the number of times I’ve heard a facepalm-worthy response from a smart speaker because it misheard something (and I *get* what it misheard) but any amount of context would’ve been able to deal with that. Plus there’s the famous example of IBM’s Watson cleaning the hell up on Jeopardy and then thinking that Toronto was a US city.

Think about it – we’re amazed at when these machine-learning genetic algorithms can do things like learn how to walk. And it’s basically pretty similar to how humans learn it too – except there are also human scientists who actually *really know* how walking works. It’s a similar thing above – I mean, you’d have to be pretty dumb to guess “Toronto” for a US Cities question. And if you look at a lot of “AI fails” it’s also similar. Think upscaling gone wrong – you’ll see people split in two, and you’re like “no, idiot.”

In fact, if you think about it, the *really* dumb human stupidity stuff (conspiracy theories, for example) is *very* similar to huge AI fails.

Despite being humorous, it’s actually not wrong. What we call stupid is really just an amalgamation of ignorance, specious conclusions, and impaired ability to discern fact from fiction. AIs are naturally ignorant and accept all input as true so no problems with those requirements. Making logical conclusions is a huge part of intelligence (even if the conclusion is wrong) that we haven’t cracked. If we can make AI reason then we can sure as hell make Artificial Stupidity.

I’m not sure I agree: I mean, aren’t most current “haha AI fail” examples just an example of artificial stupidity already? Imagine an AI upscaler splitting a person in two. The algorithm “made” a logical conclusion, based on its previous experience, and just extended it to ludicrous extent.

If we think about examples of impressive human stupidity, the biggest ones that come to mind are conspiracy theories, and those seem *incredibly* similar to huge AI fails. And, in fact, if you think about it, certain recent disinformation campaigns are *strikingly* similar to methods that people have come up with to “poison” machine learning algorithms.

Hegel and Freud would kind of agree and suggest that madness/insanity are essential stages of the development of a “soul”.

Hegel never made any real sense as a philosopher, and Freud was a crank, so it’s rather fitting.

Machines will have arrived when they understand humor.

Well, that’s one level, but if they could then be further improved to understand British humour, that would be impressive!

I’m pretty sure that would ensure that it’s granted British citizenship.

I think the Heisenberg Uncertainty principle has macroscopic applications too (at least on a philosophical level). Everything you test you affect. Wildlife biologists really need to learn. “We strapped this 2 kilo tracking collar on the animal and strangely it is now rubbing on trees contrary to any prior observed behavior, at least that is what the accelerometer seems to show. Further study required…”

For what it’s worth, that’s the observer effect. Or maybe the Hawthorne effect… that’s what Wikipedia leads me towards.

Definitely a real thing, though. Double-blind studies are another example. If the doctor knows whether they’re administering a placebo or a real drug (single-blind), patient reactions are statistically different than when the doctor doesn’t know (double-blind).

https://en.wikipedia.org/wiki/Observer_effect

What about the opposite test, human pretending to be a machine? They would be caught in an instant, just ask a simple multiplication question of a few dozen digits.

This “machine” just might show otherwise.

https://abcnews.go.com/2020/autistic-savant-daniel-tammet-solves-problems-blink-eye/story?id=10759598

That’s an interesting question. For an AI to pass the Turing test would it also have to model human limitations?

Here’s an even more interesting question: why can’t typical humans multiply large numbers nearly instantly?

Our brains clearly are *capable* of doing it. But most people can’t. Is it necessarily genetic, or is it just that most people’s brains dropped the ability to do it because there are alternative ways to do it, and optimizing your brain for it is a waste of resources?

Now imagine a “computer AI” learning in a similar situation. Why would we consider the computer AI “smarter” for wasting “brain” resources on doing something that could be better done by other tools?

In other words – *are* human limitations *actually* limitations? Clearly some are – we don’t have high-bandwidth interconnects to a global information network. But are all of the “perceived” limitations like that?

Simple answer: because they never trained for it.

>most people’s brains dropped the ability

Nobody has the ability by birth. It’s an acquired skill.

Fair point, poorly worded. Replace “dropped the ability” with “never acquired the ability.”

But this raises the question: is someone who can multiply large numbers quickly *smart*? Why? There’s no driving need for it in most people’s lives. Having a useless skill seems an odd marker for intelligence.

It’s the “physicist and barometer” again: why would we consider someone smart for knowing how to calculate the height of a building with a barometer, versus someone who takes the barometer and trades it for the answer directly from someone who knows?

If you are referring to the rainman kind of capable then read up on actual information about the spectrum. Your analogy falls face down.

I wasn’t. There is a Mental Calculation World Cup, after all.

> Having a useless skill seems an odd marker for intelligence.

It’s rather a measure of the person’s potential. Intelligence doesn’t concern with what it is used for, so multiplying large numbers or solving great logical puzzles can each act as indicators of underlying ability.

However, rote tasks are generally not considered intelligence because it is just repetition of an algorithm. Even less so when the algorithm consists of a table lookup – or memorizing a bunch of trivial facts. This is where the “savants” usually excel.

“Intelligence doesn’t concern with what it is used for,”

Why? We acquire all skills: the ability to choose which skills to acquire is also a measure of intelligence. If one person excels at math but is easily manipulated by a con man, is the con man less intelligent than the person who excels at math?

Creating slide rules is a skill: would it be intelligent for a man to become the best slide rule maker in the world in today’s world, versus someone who becomes the best computer designer?

For some reason we have this belief that certain artificial tests are “intelligence tests” and others aren’t. Jeopardy is a test of intelligence, but, say, The Bachelor is not. Chess is an intelligence test, whereas, say, basketball is not.

It’s a similar problem with the “imitation game” version of the Turing test. Why do we think that the responses to human questions are an indicator of intelligence? If you put me in a dark room and only had me answer questions through a piece of paper, I guarantee after a few months my responses wouldn’t look *anything* like a human’s.

>Why?

Because then you’d be making assumptions on a sort of utilitarian understanding of what is intelligent – which makes it a value judgement rather than an objective metric.

>the ability to choose which skills to acquire is also a measure of intelligence

For what purpose? One may choose according to their desires, but how do you choose what to desire?

“For what purpose? One may choose according to their desires, but how do you choose what to desire?”

Now you’re getting to the crux of it! The skills themselves *cannot* be measures of intelligence – stripped of context, “abilities” have no merit on their own. Imagine two people – one learns to read early, but never learns to function in society, essentially trapped in books. Now imagine a second – one who never learns to read, but adapts to deal with that limitation. In fact, the *lack* of ability becomes a learned skill for the second person.

Again, stripped of context, you can’t even tell “lack of skill” from “skill.” Imagine being asked to give answers to mathematical problems: the “highest skilled” person would be the one who gives the most correct answers, and the “least skilled” person would be the one who gives the fewest, correct? But in truth it actually would take skill to answer *none* correctly.

If that seems esoteric, consider that in the context of art, or literature: a new style could easily be viewed as “maximally bad” in a previous style. Imagine scientific discoveries – Einstein’s “worst mistake” ends up being necessary and correct. And even *vast* amounts of physical theories proposed which end up being totally wrong end up being useful as guideposts for others. Hence Edison’s (paraphrased) “I haven’t failed. I’ve just found 10,000 ways that don’t work.” Even math itself is full of cases where dead ends have led others to find the correct path.

In the end, skills, long term, end up being judged by society over time – which is truthfully the only way we can judge *any* skill. Including the skill of choosing a desire.

You obviously haven’t watched much reality TV. A ZX81 would seem far more intelligent than most of the contestants. You’d be able to tell the difference, but probably only because the computer was at least semi-coherent.

I’m not suggesting people are coherent.

You’re forgetting Turing’s original point: replacing “can machines think” with “can machines do what we (as thinking beings) do?” People on reality TV are reacting to the world in a way based on their own experience. We may be like “haha, they’re idiots” but we *recognize* where that behavior came from. Drop an AI in that situation and I’d bet it’d look totally foreign.

Let me put it another way, free of reality TV. Imagine if you trained an AI driving model on the entirety of the US. Now drop it in DC Beltway traffic. Would you be able to tell the difference between it and the local drivers?

The most important idea of the Turing Test is that it provides an answer to the question “can machines think” without having to define what you mean by “think”, but instead simply compare the capabilities of the machine to those of a human.

The setup of the test, as described by Turing is just one possible version to do that. Being able to drive a car from A to B with similar comfort, speed and safety as a human driver is another.

The driving example might be an example of artificial stupidity.

It doesn’t establish that, because it swaps the question to a different one – although related.

It asks “can machines imitate people?”, which does not answer whether they can think, however you define “think”.

Sufficiently advanced imitation is indistinguishable from real thinking. It’s pointless to try to go any further than that.

To be clear the “imitation game” type of test is actually testing if machines can imitate people to people.

That’s the fundamental issue.

You missed the point of my original comment. The actual implementation of the test is not particularly relevant. You can improve on the ‘teletype behind a wall’ setup that Turing used, if you want. You can use an AI in a robot, and see if it can survive in the real world for a few years.

What matters most is that any attempt to define ‘thinking’ or ‘intelligence’ leads to a dead end, and that our best goal is to imitate (or improve) what humans are doing.

The implementation is *entirely* relevant, because it sets the boundaries for the approximation you’re testing.

It’s like trying to fit a function using a range of limited data, rather than an approximation from first principles. The limited range fit might be perfect over the range you’re testing, but outside of that, it’s an extrapolation. Whereas the approximation can likely bound that behavior even past a range where you have no data.

That’s the issue with a definition relying on comparing an imitation to the real thing: so long as you’re within the bounds of how the imitation was tuned, it’s fine. Move outside of that, and you’ve got no idea.
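Here's a toy version of that fitting analogy, as a sketch under my own assumptions: the "real thing" is `y = x*x`, the "imitation" is a straight line fitted by least squares, and the tuning range is `[0, 1]`. None of those choices come from the discussion above; they're just the simplest case I could think of.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def true_fn(x):
    """The 'real thing' being imitated (assumed for illustration)."""
    return x * x


# Tune the imitation only on data from the range [0, 1].
xs = [i / 10 for i in range(11)]
ys = [true_fn(x) for x in xs]
slope, intercept = fit_line(xs, ys)


def model(x):
    return slope * x + intercept


# Worst-case error inside the tuned range versus one point far outside it.
inside_error = max(abs(model(x) - true_fn(x)) for x in xs)
outside_error = abs(model(5.0) - true_fn(5.0))
print(f"inside: {inside_error:.2f}  outside: {outside_error:.2f}")
```

Inside the range the line looks like a tolerable stand-in; at `x = 5` it is off by more than twenty. Nothing in the in-range test would have warned you.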

“What matters most is that any attempt to define ‘thinking’ or ‘intelligence’ leads to a dead end,”

This is the part I disagree with – it’s true it always *has* led to a dead end. I’m not convinced it always *must*. Turing’s approach makes the problem more tractable, but I don’t see a reason why it necessarily must be the right one.

If you come up with any definition for “intelligence” or “thinking” that is not expressed in terms of observable input => observable output, then you have something that cannot be experimentally tested.

Suppose you have a hunch that intelligence requires some widget XYZZY, and to test your hypothesis, you create two instances, one with that widget and another without. If they behave exactly the same, there’s nothing you can learn about the importance of XYZZY. That’s why that’s a dead end.
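A throwaway sketch of that thought experiment, with made-up classes (`AgentWithWidget`, `AgentWithoutWidget`) and a trivial "behavior" standing in for whatever the real thing would do:

```python
class AgentWithWidget:
    """Has an internal XYZZY widget that never influences its output."""

    def __init__(self):
        self.xyzzy = {"hidden": "state"}

    def respond(self, prompt: str) -> str:
        return prompt.upper()


class AgentWithoutWidget:
    """Same observable behavior, no widget."""

    def respond(self, prompt: str) -> str:
        return prompt.upper()


def behaviorally_identical(a, b, prompts) -> bool:
    """True if both agents give the same output for every prompt tried."""
    return all(a.respond(p) == b.respond(p) for p in prompts)


prompts = ["hello", "can machines think?", "xyzzy"]
same = behaviorally_identical(AgentWithWidget(), AgentWithoutWidget(), prompts)
```

As long as `same` stays true for every prompt you can think of, the experiment tells you nothing about whether XYZZY matters.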

The only solution is to define intelligence in terms of behavior, and since humans are the only known example, the challenge is to make something that can do the same. Anything else is just a matter of arbitrary choices.

>The only solution is to define intelligence in terms of behavior

Not really. You can also define something by what it’s not.

For example, it can be argued that intelligence is non-computational (not a program). While this does not lend us any information about what intelligence is, it shows clearly what it is not, and therefore we can whittle out versions of AI which do not qualify.

Whether this results in something “mystical” and untestable depends on your idea of physics: whether EVERYTHING must be computational (“Newtonian” determinism), in which case the word “intelligence” loses meaning because all your actions are determined by other causes besides yourself anyways – or whether we can leverage some other aspect of reality which allows us to perform the function. The task then is to show that the workings of a brain are not sufficiently explained by computational theories.

In contrast, the behavioral argument can never be proven, because you would have to run the Turing test to infinity – to show that at no point does the AI deviate from the real thing. What walks like a duck and talks like a duck may on closer inspection turn out to be a mallard.

“The only solution is to define intelligence in terms of behavior, and since humans are the only known example, the challenge is to make something that can do the same. Anything else is just a matter of arbitrary choices.”

The fact that you qualified this with “humans are the only known example” should explain why I don’t see this approach as fundamentally correct. Starting with the ansatz of “all humans are intelligent” is a choice – it need not be the only one.

Project Debater. One small step for computer science, one giant leap for social media.

That could get meta. Project Debater could join in on the debates in the comments here.

Maybe it is already happening.

I think the confusion arises because “AI” has evolved into something distinct from what we used to call AI. We recognize that AI like expert systems and self-driving cars aren’t “thinking” even if they are performing at a high level. What we used to think of as AI is now generally called “AGI” for Artificial General Intelligence. IBM’s debater notwithstanding, even the best AI fails miserably at random human conversation. These AI systems are OK, sometimes even superior to humans at performing expected tasks, but they cannot handle edge cases or switch modes the way humans can. And that has been an issue for self-driving cars, because once you leave the big highway systems the world’s roads are a yarn ball of edge cases.

The distinction between narrow AI and general AGI is pretty straightforward, so I wonder where all the confusion is coming from.

It’s a general problem.

From the media, because there’s something to sell, and from those who don’t want another AI winter.

We’re not getting another AI winter, because the current narrow AI is already profitable, and we have a smooth trajectory to steady improvements.

The “narrow AI” is a dead end, because it works fundamentally differently to things like brains or the equivalent neural emulations. It’s stuck at diminishing returns trying to get the last 5% right, and the only thing that’s pushing it forward is the amount of computing power and electricity we keep throwing at the problem.

It’s not a dead end, because there’s no limit to how we can improve the design with clever new ideas. Nothing is set in stone. Funding is provided by usefulness of designs we already have, unlike before when we had AI winters with no useful products, just pure academic research.

That can be said of anything, if the “clever new idea” is to “do it completely differently”.

We are getting to the limits of what CAN be done with things like image recognition by statistical analysis, because the amount of data and processing time you have to throw at it grows without limit. No matter how much money you put in, it won’t get much better than what it is now – so you have to try something else.

A modern Intel/AMD CPU is completely different than the first 4004, but we got from one point to the other by small steps.

Similarly, there is not a day that goes by without someone making an improvement to AI systems.

If you follow ‘two minute papers’ on youtube, you can see regular examples of such improvements.

The limitations you see are only the limitations in your own imagination.

>The limitations you see are only the limitations in your own imagination.

Yours is a typical “singularity” argument that fails to appreciate that making another leap such as from the Intel 4004 to a modern CPU is physically impossible, and we have to change to a different paradigm entirely.

We already know that brains don’t work by comparing a billion different images of an umbrella in order to identify it correctly in every possible situation, and repeat that for EVERY possible object imaginable. Our approaches to AI simply do not extrapolate further. They’re just good enough to sell.

Spot on. Somebody who has never seen an umbrella can recognise one from a *short* description of what one is and what it does. Having to train an AI with thousands of images of umbrellas is rote learning, *not* intelligence.

But in a sense, a human has been digesting images and relating millions of objects into their linguistic terms over the span of their entire life. It’s not a stretch to imagine an AI doing this same “umbrella by definition” identification soon.

Yes, but what’s stopping it is the fact that we’re going at the problem backwards.

What we’re doing is, first trying to get the “eye” part of the AI to see the umbrella, and then get the “mind” part to figure out what to do with it, because the first is relatively easy and the latter seems to be impossible. We haven’t got the faintest idea on how to make the AI generate its own definitions, because the problem is much like trying to create an algorithm that produces true random numbers. Anything we program the AI with is by definition from us, no matter how we program it. We put the meaning in; the computer is not able to do it – unless we make a computer that works like us.

The great illusion pulled off by modern AI research is the use of reinforcement learning to program the AI, by training neural network simulations and the like.

The reason why it is an illusion is in the fact that we still define what is a correct answer, a correct interpretation of the situation, so we allow the algorithm we’re evolving no possibility of an intelligence of its own. We just call it intelligent when it comes to the same conclusions.

The most profound problem for “AGI” is that you can’t allow it to BE intelligent, because it would become unpredictable. For example, all the applications of AI we have are “frozen” so they wouldn’t forget what they were supposed to be doing by learning something entirely different. This happens rather quickly, and it’s called “catastrophic interference”.
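For anyone who hasn't seen the forgetting effect, here is a deliberately tiny toy, assuming a "model" with a single weight `w` and two made-up tasks that want opposite values for it. Real catastrophic interference is studied in networks with many weights, where the tasks need not conflict this bluntly, so treat this as a cartoon rather than a demonstration of the general phenomenon.

```python
def train(w, data, lr=0.1, epochs=200):
    """Plain gradient descent on squared error for the model y = w * x."""
    for _ in range(epochs):
        for x, y in data:
            w -= lr * 2 * (w * x - y) * x
    return w


def mse(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


# Two hypothetical tasks sharing the same single weight.
task_a = [(x, 2.0 * x) for x in (0.5, 1.0, 1.5)]   # wants w = 2
task_b = [(x, -1.0 * x) for x in (0.5, 1.0, 1.5)]  # wants w = -1

w = train(0.0, task_a)
error_a_before = mse(w, task_a)   # task A learned well

w = train(w, task_b)              # keep learning, but only on task B
error_a_after = mse(w, task_a)    # task A has been overwritten

print(f"task A error before: {error_a_before:.4f}, after: {error_a_after:.2f}")
```

Freezing the weights after the first `train` call is the toy equivalent of shipping a "frozen" model.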

That makes them not AI because they could in principle be replaced by a conventional program algorithm that follows the same set of rules. You can basically reduce it to just a big pile of IF-THEN statements like John Searle pointed out.

The second problem is that since we’re trying to engineer AI in a piecemeal fashion, we break it up into functional blocks which makes the AI perfectly incapable of having any semantics and meaning. The part that is supposed to look out into the world and identify objects doesn’t understand what objects are – only what something looks like – and the part that understands what objects and their relationships are doesn’t see anything. It just receives symbols representing reality from the other part without a direct experience of it, so it cannot actually observe reality. The system is blind, deaf and dumb.

How is it different to how a human brain works? Do the retinas understand what they are seeing?

Retinas do not try to explain to the brain what’s out there, and the brain doesn’t expect them to. Your eyes are an extension of the brain, not an independent machine that says “There’s a car, there’s a pedestrian…”

Yet that is how we try to build AIs, so we can “unit test” them to say that the computer vision can correctly identify a pedestrian on the street – but the CV system doesn’t know what the pedestrian means so it can’t double-check and adjust its assumptions. The information is passed along a one-way street to the “cognitive-logical” unit that decides what to do about it. In effect, if the visual part ignores something that isn’t sufficiently “a pedestrian” by some set of rules, then it simply won’t tell the logic unit, or gives it a wrong token. The logic unit trusts the visual unit that there are no pedestrians, and promptly runs one over.
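A bare-bones sketch of that one-way street. Everything here is hypothetical: the function names, the single confidence score, and the `0.8` threshold are made up for illustration and do not describe any real driving stack.

```python
from typing import Optional

PEDESTRIAN_THRESHOLD = 0.8  # hypothetical cutoff, chosen only for this example


def vision_unit(pedestrian_score: float) -> Optional[str]:
    """Reduce a raw score to a token; anything below the cutoff is dropped."""
    if pedestrian_score >= PEDESTRIAN_THRESHOLD:
        return "pedestrian"
    return None


def logic_unit(token: Optional[str]) -> str:
    """Decide using only the token; the raw score never reaches this unit."""
    return "brake" if token == "pedestrian" else "continue"


def drive(pedestrian_score: float) -> str:
    """One-way pipeline: vision feeds logic, with no channel back."""
    return logic_unit(vision_unit(pedestrian_score))


clear_case = drive(0.95)       # confidently detected
borderline_case = drive(0.79)  # almost certainly a pedestrian, but filtered out
```

The logic unit can pass any unit test you like and still drive on in the borderline case, because by the time it is asked, the information is already gone.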

One thing that is shown about the human visual system is, we seem to run an “internal simulation” of the world where the brain generates similar signal patterns as what are coming from the optic nerve and up the visual cortex. The experience of something isn’t translated into a token symbol along the way, but the experience itself is its own symbol, which is why the higher brain may generate variations and push them downstream to the visual system to check whether it is seeing a different version of the same thing. The thinking and the seeing are intertwined, not separate.

It’s like this: imagine you’re in the control room of a nuclear power station. There’s a light on the wall that says “Coolant pump on”. You pull a lever and the light goes off, you turn it the other way and it goes back on. All good.

Now, the engineers made an error. They wired the lamp to indicate whether there’s power coming to the pump, not whether the pump is actually running. In certain circumstances the two are the same, in others, not. The pump may be broken. An accident happens and the reactor starts losing water. You check the lamp – it says “Coolant pump on” – but in fact it isn’t. Something very similar contributed to Three Mile Island, where an indicator light reflected whether a relief valve’s solenoid was powered, not whether the valve had actually closed.

If you went down the engine room and put your hand on the pump, you would feel that it is in fact not running. The direct experience of the physical vibration of the pump tells you that, and a whole lot of other things. The lamp is merely a symbol of the pump, which is given according to some limited criteria. As long as you’re relying on the symbol alone, you are disconnected from the reality – the lamp may be on or off for any number of reasons completely unrelated to the bit of reality that it’s supposed to represent. Even when it’s functioning as expected, it is filtering out most of the reality anyways and reducing it to a binary state that tells you very little about what’s going on.
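The same lamp story as a few lines of Python, with an invented `Pump` class and two invented lamp functions. It's only meant to show how a symbol wired to the wrong signal agrees with reality right up until the moment it matters.

```python
from dataclasses import dataclass


@dataclass
class Pump:
    """Hypothetical pump: it only runs if it has power and is not broken."""
    powered: bool
    broken: bool = False

    @property
    def running(self) -> bool:
        return self.powered and not self.broken


def lamp_as_wired(pump: Pump) -> bool:
    """What the control room sees: power reaching the pump."""
    return pump.powered


def lamp_as_intended(pump: Pump) -> bool:
    """What the operators believe the lamp means: the pump is running."""
    return pump.running


healthy = Pump(powered=True)
failed = Pump(powered=True, broken=True)

# In normal operation the symbol and the reality agree...
agree_when_healthy = lamp_as_wired(healthy) == lamp_as_intended(healthy)
# ...and they diverge exactly when the pump fails.
agree_when_failed = lamp_as_wired(failed) == lamp_as_intended(failed)
```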

Likewise, the computer vision system picking up a pedestrian and saying to the logic system “Pedestrian, there”, is leaving out nearly everything that is relevant to the situation, and since the system is so limited you can’t even trust it to do that all the time.
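To make the one-way street concrete, here is a minimal sketch of that kind of pipeline. Everything in it is hypothetical: the names `Scene`, `vision_unit`, `logic_unit`, and the 0.8 threshold are made up for illustration and are not taken from any real driving stack. The point is only that the logic unit sees a single token and has no way to look back at the scene.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cutoff: below this score the vision unit reports nothing.
PEDESTRIAN_THRESHOLD = 0.8


@dataclass
class Scene:
    # Hypothetical classifier confidence in the range 0..1.
    pedestrian_score: float


def vision_unit(scene: Scene) -> Optional[str]:
    """Reduce the whole scene to one token, or to nothing at all."""
    if scene.pedestrian_score >= PEDESTRIAN_THRESHOLD:
        return "PEDESTRIAN"
    return None  # "not sufficiently a pedestrian" is silently dropped


def logic_unit(token: Optional[str]) -> str:
    """Decide from the token alone; the scene itself is out of reach."""
    return "brake" if token == "PEDESTRIAN" else "continue"


def drive(scene: Scene) -> str:
    # Information flows one way: scene -> token -> decision.
    return logic_unit(vision_unit(scene))


if __name__ == "__main__":
    print(drive(Scene(pedestrian_score=0.95)))  # clear detection
    print(drive(Scene(pedestrian_score=0.79)))  # just under the cutoff
```

A scene scoring just under the cutoff produces the same decision as an empty road, because the logic unit never learns that anything was there to doubt.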

This isn’t true. Turing never intended it to test the machine, but to test our perception of intelligence because the whole concept was/is too ill-defined to make the question meaningful.

The Turing test is among the most misunderstood, mis-quoted and mis-applied concepts in computer science, right alongside Moore’s law. Literally everybody gets it wrong, and even when you point out it’s wrong people just stare at you blankly, turn away, and repeat the false version.

Direct AI research into improving humans so our perceptions get better. :-p

I propose another AI test: Can they create content? No, not just writing a few sentences, but creating plots for stories, scripts for movies, or even… memes?

I think this is a barrier that few, if any, AI have crossed, and it sort of lines up with Turing’s methodology. I’m no expert, but I think this concept of content-creation will serve as the basis for evaluating AI in the future.

I think this is a moving of the goal posts. Cameron Coward wrote a great article a few years ago where the researchers he was interviewing talk about how each accomplishment is sort of dismissed as “obviously an AI should be able to do that”. It really stuck with me and is certainly worth a read: https://hackaday.com/2017/02/06/ai-and-the-ghost-in-the-machine/

What a fascinating thing though: where do great stories come from? There are novels I’ve read where you know right away it’s going to be a wonderful ride. What sets those apart from the ones that are snoozers? Trying to develop a formula for that is a tall order (perhaps some writers have it, Stephen King comes to mind). An equally interesting question: do I care whether a novel was written by a human or an AI as long as I’m entertained?

AI entertainment. Hollywood trembles in fear.

Beating Hollywood is not a particularly high barrier either, so they should be scared.

If you wanted to try a (slightly) lower barrier, where the plot is less complex, you could start with pr0n. With bespoke deepfakes of course.

It would probably be more profitable.

Artificial Intelligence without morale is the road to skynet.

Whose Morales will we use? Instead of skynet we may end up with a digital Judge Dredd.

“Artificial Intelligence without morale”

that would be Marvin, the paranoid android! (c) Douglas Adams.

That depends on whether “entertaining” is the only qualifier for a good novel. Other metrics might apply, such as being historical, having a unique point of view, etc. which cannot be made by some formula. For example, we might be interested in personal accounts of people living in the 18th century. The AI novelist can’t create history, it can only re-tell someone else’s story, nor can it have a unique point of view because it’s just scouring the internet for opinions that people already have.

The metric we would use is simply how much money it made. Disney proved that you can do that by re-telling someone else’s story.

Clearly, the best part of the article is the clever “Young Frankenstein” picture. Was it drawn by a human or a machine?

Also, when dealing with an advanced robot, “I beg you, for safety’s sake, don’t humiliate him!”

Well there are the rudiments of “don’t beat me up”.

https://spectrum.ieee.org/automaton/robotics/artificial-intelligence/children-beating-up-robot

I had that experience with my BB-8 droid a while back. At a Maker event, a very young girl kicked it, clearly mimicking the behavior she’d seen in the videos of testers kicking Boston Dynamics robots.

https://hackaday.com/2017/10/09/our-reactions-to-the-treatment-of-robots/

That was our Art Director, Joe Kim. That image is good on soooo many levels…

Bladerunner had the right Turing test.

Was thinking the same. Especially in this context, where the goal, according to IBM, is to give actual policy recommendations. That’s certainly where you want the Voight-Kampff test instead.

A bee can recognise and classify a large number of flowers.

And Stephen King’s books have been generated by a computer program for over 3 decades.

Intelligence is still a multiplicity problem. Like the particle – wave duality: you get what you look for, but neither is the complete answer.

“Stephen King’s books have been generated by a computer program for over 3 decades”

Mwahahaha! Of dubious quality. Was it an Acer 19?

The guy who invented the BEAM robots (basically, “analog” robotics) once commented along the lines that conventional AI is trying to produce complex behavior out of nothing, like trying to compute a truly random number, whereas real intelligence produces simplicity out of the surrounding chaos of nature by filtering it down to meaningful actions.

Ah, here it is:

https://www.youtube.com/watch?v=wg-ZM9bVusQ

Dude, is your real name Alex? You have the same awesome enthusiasm for AI as a guy I used to work with.

So you ask…

“What’s 1.57 and also a dessert?”

(I’m giving AI the edge.)

+1 for Dude at 7:57.

22/14. I don’t get the joke.

:)

Half a pie?

As a test of language comprehension, this was certainly pretty cool. As a debate, meh. The human had the much harder case to make, and as such had to do the creative thinking. The AI had the “just run the numbers” position, which is essentially what it did.

I mean, it’s very cool that it picked up relevant research. Don’t get me wrong.

But it was always “study X says that kindergarten is good”. Statements of facts rather than creative insight.

Am I moving the goalposts? I surely know which of the debaters I’d rather be at a cocktail party with.

This is pretty similar to my opinion of smart speakers as well: my family tries to interact with them as if they’re intelligent, and while it usually works, it’s not hard for me to recognize when a certain question is going to fail – any question that doesn’t have a well-known answer is never going to work.

Whereas *I* think of it like a smart web browser – I assume “well-established knowledge I don’t know” will show in the first hit (like, “can a dog eat X” where X is something common) whereas “specific knowledge that only a handful of people would care about” I don’t bother with.

I thought you meant smart speakers like, Neil deGrasse Tyson.

But if you follow that kind of public speaker, you quickly notice that they too are repeating a bunch of stock phrases and rehearsed routines when answering questions.

“I thought you meant smart speakers like, Neil deGrasse Tyson.”

Nah, because that would imply I necessarily think Tyson is smart. He’d be better described as a “speaker on smart topics.”

You’re dead-on right that most speakers tend to be stock and unimaginative, because they’d never be able to make money if their schtick changed constantly (and regardless of my opinion of Tyson, he’s certainly smart about making money).

There is great danger in the general public being led to believe that being able to win an argument proves that you are more familiar with the truth. Formal debating interactions only prove how well you play that particular and very limited game. There is no absolute truth to be found in language or even pure mathematics as these systems are incomplete and self-referential. This philosophical territory was covered very well during the 20th century.

Does the Turing Test matter? Of course it does. People always seem to forget it has nothing to do with how good a machine learning algorithm is at doing a specific task, but how good it is at passing for human. The “debating” AI in this article would fail, as do lots that focus on specific knowledge rather than general. There is a classic case of the AI that pretended to be a child interested in baseball. It was good, fooled quite a few people interested in talking about baseball. Fooled no one who had no interest in talking about baseball.

When it will no longer matter is when AI can repeatedly pass a Turing test, but still have other large deficiencies.

Yes. This article ends with the question of whether the Turing test is still relevant, but the right question is actually “is the test relevant yet?” Artificial intelligence will become more advanced and human-like, surpassing humans as the years go by. The Turing test will be even more relevant than it is now.

>but how good it is at passing for human

In a sense, it is human, because it’s a roundabout Mechanical Turk: it picks up answers written by people and repeats them.

I was a hidden human at the Turing test 2014 event held at the Royal Society in London. To be in that place, where so many big brains had walked. To say I was walking on hallowed ground would be an understatement. If any of you get the opportunity to take part in any science experiment, do it without hesitation. I got to have a long chat with Huma Shah and Kevin Warwick. Was an amazing experience. :)

https://youtu.be/yWPyRSURYFQ 1982 was the first near death of the Turing test ;-)