It is funny how exotic computer technology eventually either fails or becomes commonplace. At one time, having more than one user on a computer at once was high tech, for example. Then there are things that didn’t catch on widely like vector display or content-addressable memory. The use of mass storage — especially disk drives — in computers, though has become very widespread. But at one time it was an exotic technique and wasn’t nearly as simple as it is today.

However, I’m surprised that the filesystem as we know it hasn’t changed much over the years. Sure, compared to, say, the 1960s we have a lot better functionality. And we have lots of improvements surrounding speed, encoding, encryption, compression, and so on. But the fundamental nature of how we store and access files in computer programs is stagnant. But it doesn’t have to be. We know of better ways to organize data, but for some reason, most of us don’t use them in our programs. Turns out, though, it is reasonably simple and I’m going to show you how with a toy application that might be the start of a database for the electronic components in my lab.

You could store a database like this in a comma-delimited file or using something like JSON. But I’m going to use a full-featured SQLite database to avoid having a heavy-weight database server and all the pain that entails. Is it going to replace the database behind the airline reservation system? No. But will it work for most of what you are likely to do? You bet.

Abstraction

If you think about it, the file system is nothing more than an abstraction over the disk drive. We normally don’t know or care where exactly hello.c is stored. We don’t even care if it is encrypted or compressed. It could be fetched over a network or all the pieces of it could be scattered randomly across the disk. We don’t usually care. What if you abstracted the filesystem itself?

That’s pretty much the idea of a database. If I have a list of, say, electronic components, I could store them in a comma-delimited file and read it with a spreadsheet. Or I could use a full-up database. The problem with databases is that traditionally it requires some server software like MySQL, SQLServer, or Oracle, for example. You can abstract the database interface, but it is a pretty heavy solution compared to just opening a file and using it normally.

However, there is a frequently used library called SQLite that provides a pretty robust database that can live in a single file with no external server or maintenance. There are limitations, of course, but for a lot of simple programs it can bring the benefits of a database without the overhead and expense.

The Right Tool for the Right Job

Of course, there are limitations. However, if you are rolling your own file format for something, you might want to consider switching to SQLite and handling it as a database. According to the project’s website, doing so may actually save space and increase access speed. Plus, once you get the hang of it, it is just easier. It is also easier to scale later if you decide to switch to a real database.

If you are storing huge databases (like terabyte-scale) or you need many concurrent users — especially writing to the database — this might not be for you. The SQLite website has a good page about what uses are good and which are not optimal for the library.

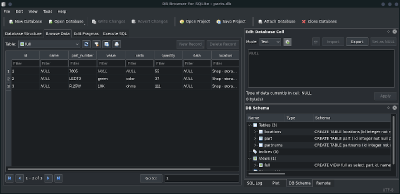

One other advantage: There is a command line program (and some GUI variations like the browser in the accompanying image) that let you work with SQLite databases without writing any code. So you can do things like populate your data or examine your database without having to write SQL at all. For a custom file format, you’d probably have to do everything yourself or populate and debug data with a generic tool that doesn’t know about your specific data.

My Task

I don’t want to develop an entire application in a post, nor do I want to teach SQL — the structured query language that most databases include SQLite use. But I want to show you how easy it is to get a start on a simple electronics database using C. The C code will turn out to be the least of our problems. The two things you’ll want to understand most are how to structure the data — the database schema — and how to populate the initial data. Even if you want to have your program add data eventually, it is nice to start with a little data initially to get your program working.

Database Fundamentals

A modern relational database has one or more tables. Each table has rows of data. A row has one or more columns and each column has a data type. For example, you might have a text column for serial number, a real number value for test point voltage, and a boolean for pass/fail.

Each table has some unique ID per row. The database will provide one for you if you don’t, but usually, you’ll want to provide this unique ID yourself. The database will help you by automatically incrementing the number and ensuring it is unique for each row.

If this is all there was to it, there wouldn’t be many advantages over a comma-delimited file. But we can do a lot of things better once we have this organizational structure. For example, it is easy to ask the database to sort items or pick the highest three voltages out of the table.

However, one of the biggest advantages of a database is being able to do joins. Supposed I have a list of components: a PC board, a resistor, a battery holder, and an LED. I have a table that has one row corresponding to each of them. Now suppose I want to have a table of assemblies that are made up of components.

I could take a simple approach:

Table Component ID Name =========== 1 PCB 2 Resistor 3 LED 4 Battery Holder Table Assembly ID Name Components ============================ 1 Blink1 PCB, Resistor, LED, Battery Holder 2 Blink2 PCB, Resistor, LED, Resistor, LED, Battery Holder That's ugly and wasteful. A better approach would be to use three tables: Table Component ID Name =========== 1 PCB 2 Resistor 3 LED 4 Battery Holder Table Assembly ID Name ========= 1 Blink1 2 Blink2 Table Assembly_Parts ID Component Quan ======================= 1 1 1 1 2 1 1 3 1 1 4 1 2 1 1 2 2 2 2 3 2 2 4 1

Using a join operation, you can bind these tables together to generate what amounts to the first table without duplicating lots of data.

For my toy database, then, I’m going to create three tables: part will contain the parts I have. The partnums table will hold types of parts (e.g. a 7805 vs a 2N2222 or a CDP1802. Finally, a locations table will tell me where I store things. There are other ways this could be structured. For example, there could be a table to store types of footprints: a 2N2222 can be in a TO92 or a surface mount,. In addition, I’m going to create a view that shows everything unrolled like in the first example. A view is something that isn’t stored but acts like a table for convenience. In reality, it is just a query on the database that you can work with.

There’s a lot more to it, of course. There are inner and outer joins and a lot of other details and nuances. Luckily, there’s plenty of material to read about databases on the Web including the SQLite documentation.

Just Enough SQL

For our purposes, we are only going to use a handful of SQL statements: create, insert, and select. There is an executable, sqlite3, where you can enter database commands. You can provide the name of the database on the command line and that’s the easiest way to go. Use .exit when you want to exit.

You can probably figure out the SQL syntax since is pretty verbose:

create table part ( id integer not null primary key, name text, partnum integer, value text, units text, quantity integer, photo blob, data text, location integer, footprint text); create table partnums (id integer not null primary key, partnum text, desc text); create table locations (id integer not null primary key, location text, desc text); create view full as select part.id, name, partnums.partnum as part_number, value, units, quantity, data, locations.location as location, footprint from part inner join partnums on part.partnum = partnums.id inner join locations on locations.id=part.location

I just made those calls in the sqlite3 command line program although I could have used the GUI or — if I wanted to — I could make my C program execute those commands. I also used the command line to insert a few test records. For example:

insert into locations (location,desc) values ("Shop - storage II","Storage over computer desk in shop");

insert into partnums(partnum,desc) values("R.25W","Quarter Watt Resistor");

insert into part(partnum,quantity,location,value,units) values (2,111,1,"10K","ohms");

To get data back out, you’ll use the select command:

select * from part; select partnum, quantity from part where quantity<5;

If you want to know more, there are plenty of SQL tutorials on the web.

Programming!

So far, none of this has required programming. Assuming you have the libsqlite3-dev package or its equivalent, you don’t need much to add database functions to your C program. You’ll need to include sqlite3.h. If you can’t find it, you probably don’t have the development files installed. You’ll also need to link with libsqlite3. For a simple single file project, this makefile will probably get you started:

CC=gcc CFLAGS+=-std=c99 -g LDFLAGS=-g LDLIBS+=-lsqlite3 edatabase : main main : main.c

The code itself is straightforward. You need to open the database file (sqllite3_open). Instead of a file, you can pass “:memory” to get an in-memory database that won’t last beyond the life of your program. The call will give you a handle back to your database. Next, you have to parse or prepare the SQL statement you want to execute. This could be any of the SQL we’ve executed through the interface or lots of other SQL statements. In my case, I want to pull the data from the full view and display it, so I’ll parse:

select * from full;

Finally, you’ll call sqlite3_step and while it returns SQLITE_ROW, you can process the row using calls like sqlite3_column_text. At the end, you finalize the database and close it. Here’s the code with error handling removed:

#include <sqlite3.h>

#include <stdio.h>

int main(int argc, char *argv[])

{

sqlite3 *db;

sqlite3_stmt *sql;

int rv;

rv=sqlite3_open("parts.db",&db);

rv=sqlite3_prepare_v2(db, "SELECT * from full", -1, &sql, NULL);

do

{

rv=sqlite3_step(sql);

if (rv==SQLITE_ROW)

{

printf("%s,",sqlite3_column_text(sql,0));

printf("%s\n",sqlite3_column_text(sql,2));

}

} while (rv==SQLITE_ROW);

sqlite3_finalize(sql);

sqlite3_close(db);

return 0;

}

Or, have a look at the full code. In a case where you didn’t care about stepping through rows, you might have called sqlite3_exec. Even the documentation admits this is just a wrapper around a prepare, a step, and a finalize so you can just pass in a string and expect it to work.

Of course, there are many more calls. For example, you can call sqlite_column_int or other calls to get particular types. You can bind parameters to SQL calls to set values instead of building a string. But this shows you just how easy it can be to do a simple SQLite program.

So next time you find yourself inventing a new file format, think about using SQLite instead. You’ll get free tools and once you learn SQL you’ll find there is a lot you can do without writing any actual code other than different SQL commands. You can even use Git-like branching to keep versions of your database. Then again, some people use git as a database, but we don’t suggest it.

Your idea of stagnation is my idea of stability.

+1. I don’t need new features every 2 weeks, I need a stable filesystem. You can add features all you like but I expect them to go through a LONG vetting process because I don’t want something like what happened with the testing version of Linux kernel 5.12 where it was corrupting filesystems that used swap files(how that passed unnoticed is beyond me. I hope they start testing filesystem integrity from now on.)

you should have listened to your own advice and stick with partition swap (not affected by this bug) instead of those new fangled “swap file”

Yes, it’s very nice to be able to create things on a stable platform without the underlying technology changing every year.

OTOH, a vanilla hierarchical file system doesn’t do “Recent documents” or “show me all folders where I keep music” consistently. Not to mention trying to use a hierarchy to organize things when some things don’t fit in a single category.

“Recent Documents” is way out of scope for a file system or volume manager, but I think you can implement that at the userspace level (where it belongs) using the metadata in the filesystem (where it belongs) like creation time, modification time, last read time, etc.

As for using hierarchies for data that doesn’t fit in a strict hierarchy, the standard solution is a tagging system (tags can also be hierarchical and ad-hoc as well); guess how the tag data is stored? Filesystem metadata! Extended attributes have been around for decades now and are supported by nearly all modern file systems on all operating systems.

My vanilla hierarchical file system does do “Recent documents” with elegant ease. If I recall part of the filename, then “Control-r filename_fragment” on the bash commandline retrieves the whole edit command from the command history, invocable by just whacking enter. If there were several similarly named files edited, then whack just Control-r as many times as needed to step through the search hits to the one now desired. Simples!

If the name of the recent file escapes my recall, then “Control-r vim” allows me to Control-r through my most recent history of editor invocations. If the elusive file was not opened on the commandline, but as a subsequent edit in the editor, then vim has persistent history as well, and it can be sought there, even though that history is neither a filesystem nor shell artifact.

OK, merely using “locate -i music” will list all the files as well as the directories, but a shell function or alias to trim is not difficult. If there is good reason to distribute music files in disparate parts of the filesystem, e.g. if stored under “culture” under “country”, then (on any worthwhile OS) just make a symbolic link from each /culture/music directory back to music/, for a condensed orthogonal view of just music.

That highly ordered shortcut now takes you to the same files – as consistently as one could wish.

Where things don’t fit in a single category, symbolic links can make a directory appear in many parent directories, for any number of orthogonal views of the data. If more disciplined management is required, hard linking the individual files to more than one parent directory has the advantage that each file can be deleted from one directory but not another, according to that view’s desired perspective, and the one copy in the filesystem remains through all directory deletes except the last one, when no-one wants it any more. (*nix maintains a “link count” on every file to facilitate this flexibility.)

Incidentally, setting $HISTSIZE or $HISTFILESIZE to e.g. 10000 retains months of command history, not just file edits, but also html document views with e.g. xpdf. That cross-app “Recent documents” facilty is most convenient. I think it ticks all the boxes.

The pathnames in my music example above were actually: {country}/culture/music and

music/{country}

My use of anglebrackets originally seems to have been swallowed as a html tag or similar bt the webpage. In any event, the text vanished on arrival.

Yes, stagnation at the ideal implementation level is not just stability, it is optimisation.

Unless there are data relations to exploit, a relational database is just a tool looking for a problem, not a solution.

After 30 years in IT, and over 50 years playing with computers, for me it suffices entirely that the filesystem is the database. A meaningful directory hierarchy organises all information, and the “locate” utility instantly locates a file by name, without having to walk the tree, if the target is already known, even partially. (Yup, it’s been *nix all bar the first few years.)

A simple shell function saves keystrokes when seeking the best of (currently) 1282 mailbox files for an interesting list post, worth keeping. (Not all data is information, and pre-scrutinised posts of known interest are worth a thousand times more than terabytes of unsorted bumpf.)

It seems to me that the issue is that relational databases are taught in Computer Science courses, so students feel a need to find something to use them on – anything will do. Those of us who came to computing via the engineering path have enough real problems to solve without needing to take a regressive path – attempting to reinvent the filesystem as a monolithic M$-philosophy filesystem-in-a-file. Let them live in a browser.

“Let them live in a browser.” is the nerd equivalent of “May you live in interesting times.” and is now my favorite curse.

And here I thought we were going to get the Linux version of WinFS.

https://en.wikipedia.org/wiki/WinFS

If you want an OS with the database integrated. Take a look at OS400 or what ever IBM renamed it too.

Or MUMPS

Or Pick.

Or tied hashes in Perl.

Or VMS.

Or Palmos

The core OS should stay file-based, because it’s just easier for users to deal with. We would have to reinvent and relearn everything about how we manage documents. Plenty of things already ARE databases instead of filesystems and they are kind of obnoxious compared to heirarchal organization.

True DB functionality should probably be embedded in applications as SQLite does. Sqlite does just about everything right and REALLY should be used more often. Especially in some browsers, which rewrite entire megabytes size files on a minutely basis with little or no changes.

A better way would probably be to incrementally advance our concept of a file. Files could have arbitrary JSON metadata with configurable indexing and searching. They could have digital signature based search for a native way to say “Look for the latest file that was signed with this key”.

What browsers are you referring to? I was pretty sure that all mainstream browsers now use sqlite for most if not all their data storage needs. Firefox, Chrome, anything Chrome based…

“Sqlite does just about everything right and REALLY should be used more often.”

Sqlite doesn’t do security right, it doesn’t do it at all. Sqlite doesn’t do performance right, INSERT is just awful. Sqlite doesn’t do replication or mirroring or any other form of redundancy. Sqlite won’t store the objects in your program unless you do it yourself. Sqlite won’t let you add a field to one record, you have to add it to all your records. Sqlite doesn’t really support live backups, you have to write lock the whole table, which defeats the purpose. It only supports one write transaction at a time for the entire database; so your typical background task becomes a denial of service attack on your application.. I could go on and on..

A database is a storage abstraction. A filesystem is a storage abstraction. A log is a storage abstraction. The cloud can vend storage abstractions. Within databases there are relational variants, key-value stores, doc-structured, object-oriented, and others. None of them are necessarily “next generation” versions of each other. It’s just sometimes you’re using the wrong abstraction.

Hmmm.

Fist step: define the term “database”. Let us use the first sentence from wikipedia: “A database is an organized collection of data, generally stored and accessed electronically from a computer system.”

Next step: rewrite entire post perspective intro. Ever file system is a database. FAT12 or OS/360-style what is the difference? None beside some minor details. Of course IBM would say: it went downhill from 1967 on after we invented BSAM/QSAM in 1967:)

Performance is almost never a minor detail, and there are really enormous differences in performance among file systems and databases. For example NTFS was designed to work well on ST506 drives while XFS was specifically designed for high speed streaming video. Most SQL databases are optimized for high speed SELECT and have miserable INSERT performance. Some commercial databases have INSERT performance that is close to theoretical hardware limits.

Quote: “At one time, having more than one user on a computer at once was high tech, for example. ”

Simply not true. Apart from Charles Babbage’s Analytical or Difference engine, the modern digital computer always accomodated multiple users. Multitasking even made it possible to have concurrent users executing their programs.

Do not for one moment think that any product from Redmond defines anything in the history of computers

Unless off course you are referring to the scores of women that used to do all kinds of hand calculations, primarily for the army, and were calles computers. More than one user on such a computer would be .. special.

I agree. Historically, computers started shared because they were big and expensive. Only MS-DOS/Windows (late in the game) were single-user. And even that didn’t last long.

And MSDOS/Windows and other single user systems like Classic MacOS, AmigaOS, and various 8 bit DOSses were what more than 99% of computer owners used from the 80s until the mid 90s at least. I would say a feature that is only available on less than 1% of hardware and that you must spend a small fortune to acquire qualifies as “high tech.”

Actually, time sharing, multi-programming, and other multi-user simultaneous techniques are comparatively recent. If you look back to computers in the 40s and 50s and even end of the 60s one person used the computer at one time. Your files probably came from tape that were mounted for the job and the next user got their own tapes mounted as well. Granted, in the 60s we did start seeing timesharing and other solutions that let you spread the cost of the computer better since it was sitting idle most of the time. But there were a lot of computers before that and even in the 60s a lot of computers were more or less single user at one time. I’m not saying only one person used the computer forever but I am saying when your program is running on the computer there wasn’t much else running.

As far as I know ctss was the original timesharing system and it ran on IBM computers starting in 1961 although apparently MIT had developed it a few years earlier. There were a few other things that could claim some amount of multi programming before that and people had been talking about it for years but it really didn’t come into being until the early 60s. The first one to actually see widespread use was the Dartmouth system which was somewhere around 1963 on some GE hardware. Interestingly, this was the same project that gave us basic.

Do IBM mainframes exist in your universe? They got timesharing in 1966, and were the dominating force in computers for decades. Many “modern” computing features like NUMA, virtualization, and clustering, were not only invented, but delivered to customers in the 1960s on IBM mainframes. They were and still are cutting edge systems for high performance computing, despite the ignorant claims of their demise.

Heh. I worked for IBM. I mentioned ctss which was on IBM hardware. All that you are thinking about came later but my point is that many older computers were single user affairs even in the 60s and beyond.

People don’t know mainframes are still being build and new mainframes are released every 3-4 years, and Linus Tech Tips even featured the last launch a couple weeks ago. The financial sector runs its business related code on mainframes today, and will continue doing so for the foreseeable future.

Mainframes run zOS, run zVM (probably the first hipervisor), and run Linux. You can run Apache, Oracle App Server, Oracle database, Websphere, Python, PHP, anything Linux-based app and it will run nicely. It can run OpenStack and OpenShift too, and integrate with your vCenter if you want to.

Mainframes aren’t an alien technology anymore. You install Linux on it, SSH into it and unless you really poke around, you won’t see it’s a mainframe.

Huls wrote: “the modern digital computer always accomodated multiple users.”

Did you think that no one would catch you slipping in “modern” (NAN and undefined) so when someone corrects you you can say “No man! That’s not modern!” What’s a modern computer in your world? Answer must include a year.

@X: What part of “1961” do you not understand?? Al said “1961” and you fire back with “You missed it man! 1966!”

That said, Al, if you had included a short explanation of what you mean by “filesystem” that would have been helpful. I used to follow the blog of a guy who worked at launch.com and collegeclub.com, largely building ASP pages who was always talking about “using the filesystem as a database” but he never explained it. http://asecular.com/blog.php?210607

“Unless off course you are referring to the scores of women that […] were calles computers. More than one user on such a computer would be .. special.”

*rofl*

Touche!

You know the FAT file system didn’t have permissions because Windows 3.1 and previous (excluding NT) were considered single user environments, right? I think FATex may support extended attributes and some rudimentary journaling, but that’s also not an officially sanctioned implementation of FAT.

This is all to bolster your claim about the quality of the early Microsoft software. At the same time, *NIX file systems had this support baked in for literally decades, so it’s not like it was an unknown paradigm for operating systems.

“Apart from Charles Babbage’s Analytical or Difference engine, the modern digital computer always accomodated multiple users.”

Aaaaand, the entire history of batch computing just got tossed on the historical junkheap.

Well. For simple tasks last this, c/c++ and sql are too heavy I think. Better use “MS Access” clones, such as kexi or libreoffice ‘Base’. You really can focus on the important things: schema, and project-specific GUI.

I have to tell you the story about a transactional DB that where every record was data, a transaction type and time-stamp. It was designed to be really fast. It was. However, there started to be all kinds of integrity issues and data errors. Finally traced it all the errors back to not having a consistent method to get the date time stamp. Some procedures pulled from the local machine, others from various servers… Its always the little things…

These “little things” are all over the place in medical databases, they are usually so messed up with bad data that they are practically useless for doing any sort of analysis.

i get critical error: Naruszenie ochrony pamięci (zrzut pamięci)

code are wrong

*sigh* I really don’t see this. Change the headline to “… next-level files” and then the whole article makes sense. As it is, I kept waiting to see how you were going to store a movie, a picture, and a text file and show me the collection and display any of them …

Yea, not a fan of adding a ton of complexity with a database that is stuffing things into files for me. If you look at the AWK Programming Language book from eons ago, they do a simple 3 file (table) database that they use about a 50 line AWK program to build reports / do querys against. If you have less than 10 tables and most tables have less than 3 foreign keys and you have a handful of users in them then flat files are easier to manage work with.

That Addison Wesley book is a slim tome, but an information dense goldmine. Those originators of Awk, C, and Unix were not prone to prolixity. Its permuted index is nifty, too, providing better access to the information.

I still figure if you’re not doing table joins, then a database is a hammer encountering a screw.

And sqlite runs on your phone.

There will be various apps so you can have a play with it.

The thing with sqlite is that it is a relational database. Yes, some problems have data that fits well into a relational database. But many don’t – in fact one of my constant regrets is that nobody (including myself) seems to have time to do a decent generic hierarchical database that is a popular supported open source alternative to sql ie something like IMS DB for the masses..

– as another example of a hierarchical – our file system is one ie NTFS. File names are just better in a hierarchical structure!

Given that, for many many programs it’s better to write a custom file format than force it into a relation structure that will a) be harder to use and b) a lot slower..

It’s backup and restore that worries me. What do you backup? How do you restore? How do you compare versions? When the chips are down, your HD has crashed and you’re in a minimal booted-from-USB-stick environment, you do not want to be trying to install exactly the right version of some DB program just so you can see what state your data is in. Give me ASCII files any day.

In general I’d agree, but sqlite 3 is incredibly stable and slow to change. I suspect their on-disk format is fixed for a long time now: database people tend to really care about data integrity.

sqlite3 does an excellent job on file-format issues (I used to work somewhere that used sqlite, in fact I have code in there). For the kinds of programs which could make productive use of sqlite3, having someone get in there and muck about in the file changing things is REALLY not going to go well. Likewise, programs using sqlite3 generally either integrate it into their build, or specify the version info for package managers, so there’s really no user-level choice involved in getting things working.

As their site says, “SQLite does not compete with client/server databases. SQLite competes with fopen().”

That said, there definitely are cases where something ASCII-based is better. For instance, I could see some developer advantages to expressing config files in a database, but IMHO that would be a real loss. In cases like that, it makes a lot of sense to trade off some additional complexity when making edits against transparency for reads and inspection and backups and the like.

It’s been done and it’s mess. MS calls it the Registry.

Most databases have solid utilities for importing and exporting CSV files, usually with excellent performance. You can import them into your spreadsheet, use them for backups, manually edit them with ease. Some databases can automatically create a SQL table based on a CSV file. Some can use a CSV file as primary storage for SQL tables. CSV files are an excellent data storage and transfer mechanism for many applications.

Full-db backup/restore is handled on “image” level, so full-fledged; yet the interesting question would be what a partly restore would look like; time based snapshots and delta handling are not an issue, at least. What then? Schema, table, partition, columns? There are many options and the big challenge is to get all dependencies right (again).

Image level backups don’t interact well with transactions. You need something smarter than that. What happens if you backup data that is subsequently rolled back? If you are backing up the whole image you can’t pick out the data that’s participating in a transaction. Locking out users to do backups is bad form, and not necessary with modern databases.

If you want to get all that metadata stuff right, in your backup you will put the necessary CREATE TABLE and CREATE INDEX statements to reconstitute your schema, and GRANT statements to recreate your security.

Most commercial databases have invested a lot of development efforts in the backup and restore department, it’s a hard problem and customers really need it. If you really need good backups to keep your business running, then you should also be investing in redundancy techniques like mirroring or replication. These are all very hard problems and you have to pay if you want it done right. Paying $10K or more for a commercial database license is nothing when your data is worth millions.

All of your base are belong to us.

Proper design of the SQL schema is essential for good performance. Getting good performance from multi-table SELECTs is dark voodoo magic.

Decades of experience show that almost nobody can do it correctly, it’s up there with C coding in that department.

If you need a database, use https://www.postgresql.org/ no matter what. It scale from a single core Cortex-M3 up to the most EPYC server you can buy. It have a lot of really cool features, including JSON support, geometric functions, etc… It’s open source, fast and never corrupted any data on the projects I have worked on.

I have once worked as a consultant for a public transport company that used a central + mobile nodes solution based on SQLite. It was a nightmare because SQLlite lack so many features there needed that there developed themselves complex features in the application above the db, like online remote duplication. Of course this was way above the available competence and that “solution” was unreliable, crash prone, and a pain for everyone.

I doubt postgresql can practically run on a cotex-m3 class MCU. Even SQLite requires 100s of K of compiled code, 100K+ of heap, etc .. Certainly a postgresql client .. but the database itself?

I would like to be proven wrong – got any resources indicating a cortexM3 hosting a postgresql database?

Wasn’t the idea of using a database as a filesystem replacement the one that actually was omitted on building Windows XP? I remember a hype on Microsoft going in that direction. But as we can see neither they nor Apple followed this path. So there must be some “real” obstacles that prevented these “large ones” (in terms of volume, not in terms of improved handling capabilities) from following this path. Hm. Subject to a seminar’s research… Yet I like the idea as databases turn out to have some really cool features, not only the “in-memory” ones.

WinFS has been promised in each Windows release and it’s the first thing that gets scrapped during the development process. I suspect for a simple reason; NTFS is stable, safe, secure and more than fast enough for non-extreme uses (don’t put one million files all in the sand directory or you can guarantee performance will be trash, which is why outlook uses an internal database on top of a flat file rather than a file per message as most *NIX email clients/servers do).

Using a database server complicates things like booting where you need to bootstrap the files to start the service to read the files to bootstrap… It has to also work in conjunction with the file system journaling so as not to become corrupted or out of sync with the on disk data (where the database is also stored). But there number one reason I think it gets scrapped from each release is that for all that extra complication, Microsoft will not ship one single, solitary, extra copy of Windows because of a new file system, but they will have to support it.

Suddenly it’s a cost sink, engineering sink and additional complexity with no upsides. I’d cut it too and make it a desktop search service or something if I really needed it, but it’s a solution to an unknown problem that no one has, I think.

It’s performance, pure and simple. You pay an enormous price for structured data. A filesystem is a heavily optimized database with one column of type LONGVARCHAR, with string indices. (Okay, so Apple HFS had two columns, “data” and “resource”, Apple was smart enough to keep the “resource” metadata handling out of the filesystem). Custom built parsers will beat generic metadata engines all day long.

Didn’t BeOS do this?

https://en.m.wikipedia.org/wiki/Be_File_System

palmos too

IBM i

Yes. “IBM” and lowercase letter “i”

Dumbest technology rename ever. OK, “OS400” was a bit dated, but still.

what about writing reading arbitrary data structures in the db ?

CDP1802’s, seriously? Maybe you need them for your COSMAC Elf from 1976?

I’m getting flashbacks to an earlier job I had…

Oracle (of course) had/has a Database File System

https://docs.oracle.com/cd/E18283_01/appdev.112/e18294/adlob_fs.htm

Probably to late for anyone to care, but the old iPod OS was a database for a filesystem.