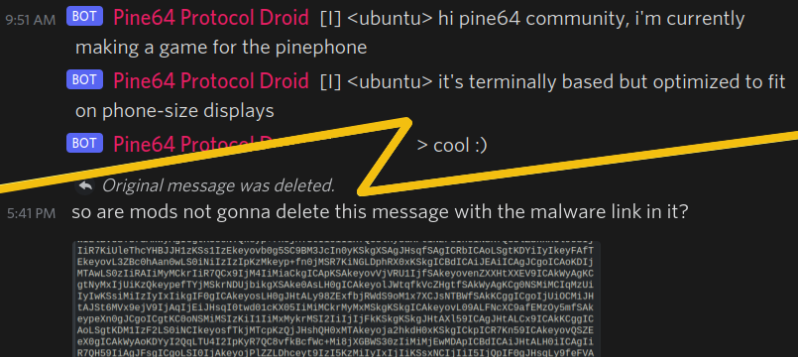

On December 5th, someone by the IRC nickname of [ubuntu] joined the Pine64 Discord’s #pinephone channel through an IRC bridge. In the spirit of December gift-giving traditions, they have presented their fellow PinePhone users with an offering – a “Snake” game. What [ubuntu] supposedly designed had the potential to become a stock, out-of-the-box-installed application with a small but dedicated community of fans, modders and speedrunners.

Unfortunately, that would not be the alternate universe we live in, and all was not well with the package being shared along with a cheerful “hei gaiz I make snake gaem here is link www2-pinephnoe-games-com-tz replace dash with dot kthxbai” announcement. Shockingly, it was a trojan! Beneath layers of Base64 and Bashfuscator we’d encounter shell code that could be in the “example usage” section of a modern-day thesaurus entry for the word “yeet”.

The malicious part of the code is not sophisticated – apart from obfuscation, the most complex thing about it is that it’s Bash, a language with unreadability baked in. Due to the root privileges given when installing the package, the find-based modern-day equivalent of `rm -rf /*` has no trouble doing its dirty work of wiping the filesystem clean, running shred on every file beforehand, if available, to thwart data recovery. As for the “wipe the cellular modem’s firmware” bonus part, it exploits CVE-2021-31698. All of that would happen on the following Wednesday at 20:00, with scheduling done by a systemd-backed cronjob.

[ubuntu] didn’t share sources, just the binaries, packaged for easy installation on Arch Linux. One of the prominent PinePhone community members installed that binary and enjoyed the “game” part of it, asking about plans to make it open-source – receiving reassurance from [ubuntu] that the sources would be released eventually, “just need to clean it up”. Some weren’t so sure, arguing that people shouldn’t sudo install-this random games without a source code repo link. Folks were on low alert, and there might’ve been up to about a dozen installs before a cautious and savvy member untarred the package and alerted people to suspicious base64 in the .INSTALL script, about half a day later.
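The kind of check that caught this can be approximated in a few lines of shell. The sketch below is a guess at what such a triage might look like, not what the community member actually ran: the helper name `scan_install_script` and its three patterns are assumptions made for illustration, and a clean result says nothing about whether a package is safe.

```shell
#!/bin/sh
# Heuristic triage for a package install script (a sketch, not a malware scanner).
# scan_install_script is a hypothetical helper name. The patterns are assumptions
# about what obfuscated payloads tend to contain:
#   - a pipeline stage that decodes Base64
#   - an eval call
#   - an unbroken run of 80 or more Base64-alphabet characters
# Prints matching lines with their line numbers; exits non-zero when nothing matches.
scan_install_script() {
    grep -nE 'base64[[:space:]]+(-d|--decode)|(^|[^[:alnum:]_])eval[[:space:]]|[A-Za-z0-9+/]{80,}' "$@"
}
```

On Arch, the install script sits at the top level of the package archive as `.INSTALL`, so it can be streamed out without installing anything, for example with `bsdtar -xOf some-game.pkg.tar.zst .INSTALL | scan_install_script` (the package filename here is made up). Anything the function prints deserves a manual read before the package goes anywhere near `sudo`.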

How Do We Interpret This?

This was a small-scale yet high-effort destructive attack on PinePhone users, targeting the ones using Arch specifically, by the way. The malware sender announced their “game development efforts” before publishing, stayed in the channel doing a bit of small talk and Q&A, and otherwise was not quickly distinguishable from an average developer coming to bless a prospective platform with their first app. Most of all, the Snake game was very much real – it’s not clear whether the code might’ve been stolen from some open-source project, but you wouldn’t distinguish it from a non-malicious Snake game. It’s curious that the package doesn’t seem to be sending private data to any servers (or encrypting files, or forcing you to watch ads like modern mobile games do) – it easily could, but it doesn’t.

With the amount of work being done on the PinePhone cellular modem reverse-engineering, it’s peculiar that the malware takes advantage of the CVEs discovered alongside that effort. You wouldn’t expect a typical phone virus to pull off a cellular modem brick trick, given the fragmentation of the Android world and the obfuscation of the Apple world. Funnily enough, the community-developed open-source firmware for the Quectel cellular modem is immune to the bug being exploited and is overall more fully-featured, but Pine64 is required to ship the exploitable proprietary firmware by default for regulatory compliance reasons – the consequences for stepping out of line on that are drastic enough, according to a Pine64 source.

Questions spring to mind. Is PinePhone a safe platform? My take is – “yes” when compared to everything else, “no” if you expect to be unconditionally safe when using it. As it stands, it’s a platform that explicitly requires your understanding of what you’re directing it to do.

With more OS distributions available than any other modern phone could boast about being able to support, you can use something like Ubuntu Touch for a smooth experience. You are given overall more power to keep yourself safe when using a PinePhone. People who understand the potential of this power are the kind of people who contribute to the PinePhone project, which is why it’s sad that they specifically were targeted in this event.

Other platforms solve such problems in different ways, where only part of the solution is actual software and architectural work done by the platform, and another is by training the users. For instance, you’re not expected to use a third-party appstore (or firmware, or charger, or grip method) on your iPhone, and Android has developer mode checkboxes you can reach if you recreate the third movement of “Flight of the Bumblebee” with your finger in the settings screen. The Linux ecosystem way is to rely on the kernel to provide reliable low-level security primitives, but the responsibility is on the distributions to incorporate software and configurations that make use of these primitives.

I’d argue that mobile Linux distributions ought to define and maintain their position on the “security” scale, too, elaborating on the measures they take when it comes to third-party apps. Half a year ago, when I was preparing a summary on different OSes available for PinePhone and their stances on app security, it took me way more time than I’d feel comfortable having someone spend on a task of such significance.

What Are Our Options?

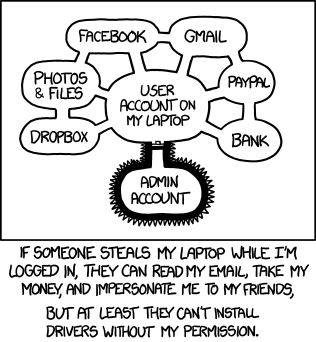

The gist of advice given out to newcomers is “don’t install random software you can’t trust”. While this is good advice on its own, you’d be right to point out – a game shouldn’t be able to wipe your system, and “get better users” generally isn’t a viable strategy. Any security strategist in denial about inherent human fallibility is not going to make it in the modern world, so let’s see what we can do besides the usual “educate users” part. As usual, there’s an XKCD to start off with.

Even being able to write to an arbitrary user-owned file on a Linux system is “game over”. Say, in $HOME/.bashrc, you can alias sudo to stdin-recording-app sudo and grab the user’s password next time they run sudo in the terminal. .bashrc isn’t the only user-writable file that gets executed regularly, either. While sandboxing solutions are being developed to solve these kinds of problems, the work is slow and many aspects of it are non-trivial, generally best described as “dynamic and complex whitelisting”.
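To make the .bashrc scenario concrete from the defender’s side, here is a minimal sketch that looks for lines redefining sudo in shell startup files. The helper name `find_sudo_overrides` is hypothetical, and the approach is only a static heuristic: it will not notice a definition that is built with eval, pulled in from another sourced file, or replaced by a `PATH` change that puts a fake sudo binary first.

```shell
#!/bin/sh
# Flag lines in shell startup files that redefine sudo as an alias or a function.
# find_sudo_overrides is a hypothetical helper name; this is a static heuristic only.
# It matches three shapes at the start of a line:
#   alias sudo=...
#   function sudo ...      (also path-like names such as /bin/sudo)
#   sudo() ...             (also path-like names such as /bin/sudo)
# Prints matching lines with their line numbers; exits non-zero when nothing matches.
find_sudo_overrides() {
    grep -nE '^[[:space:]]*(alias[[:space:]]+sudo=|function[[:space:]]+([^[:space:]]*/)?sudo([[:space:](){]|$)|([^[:space:]]*/)?sudo[[:space:]]*\(\))' "$@"
}
```

A typical invocation would be `find_sudo_overrides ~/.bashrc ~/.bash_profile ~/.profile 2>/dev/null`. An ordinary line such as `sudo apt update` is not flagged, because only definitions are matched.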

A piece of commonly handed out advice is “if you can’t read the code and understand what it does, don’t run it”, presumably, supposed to apply to packages and codebases longer than a weekend project. Ironically, this puts Linux at an unwarranted disadvantage to closed-source systems. The “share an .exe” way of distributing applications is older than I am personally, and it still is an accepted method of sharing software that someone wrote for Windows, with UAC having become yet another reflexive clickthrough box. Again, putting more of a security burden on Linux users’ shoulders is easy but foolish.

Would sharing the source code even help in the malware situation? No! In fact, attaching a link to a source code repo would help [ubuntu] make the malware distribution more plausible. When you publish a package, even on supposedly reputable platforms, there are rarely any checks on whether the code inside the package you upload matches the code in your repo.

That’s true for a lot of places – GitHub and GitLab releases, DockerHub, NPM, RubyGems, browser extension stores, PyPI, and even some supposedly safe Linux repositories, like F-Droid, are vulnerable. Providing source code alongside a malicious package adds legitimacy, and takes away incentives for skilled people to check the binary in the first place – hey, the code’s there to see already! If [ubuntu] did just that, perhaps we’d be talking about this incident a few days later and in a more somber tone. Supply-chain attacks are the new hotness in 2020 and 2021.

Plenty of security systems we have set up are trust-based. Package signing is the most prominent one, where a cryptographic signature of a person responsible for maintaining the package is used to establish “person X vouches for this package’s harmlessness”. HTTPS is another trust-based technology we use daily, though, really, you’re trusting your browser’s or OS’s keystore maintainer way more than any particular key owner.

When enforced to the extent that it actually makes us more secure, trust-based tech puts a burden on new developers who don’t have reasonably polished social and cryptographic prowess. However, when often already met with lacking documentation, incomplete APIs and untested libraries, should we really be increasing the burden any further? Maybe that’s not so bad.

The trust-based signing tech I mention is often applied to the OS images you typically download to bootstrap your PC (or phone!) with a Linux install, but it’s not yet popular on PinePhone. Quite a few OS images for PinePhone don’t have such signatures, which disappointed me: most major distributions for the PC provide them, I expected the Linux phone space to be no different, and not having signatures can be disastrous. Quite a few security-related features like this are there for the taking, but aren’t being used, either because they require non-trivial effort to fit into a project’s infrastructure if it was not designed with security in mind from the beginning, or because they create an additional burden on the developers.
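For readers who have not done this before, the user-facing half of image verification is small. The sketch below covers only the integrity step, comparing a downloaded file’s SHA-256 digest with a published one; the helper name `verify_sha256` is hypothetical, and it assumes the `sha256sum` tool from GNU coreutils is available.

```shell
#!/bin/sh
# Compare a file's SHA-256 digest with the digest the project published.
# verify_sha256 is a hypothetical helper name; assumes GNU coreutils' sha256sum.
# Usage: verify_sha256 <file> <expected-hex-digest>
# Returns 0 on a match, non-zero on a mismatch or if the digest cannot be computed.
verify_sha256() {
    file=$1
    expected=$2
    actual=$(sha256sum "$file" | cut -d ' ' -f 1)
    [ -n "$actual" ] && [ "$actual" = "$expected" ]
}
```

A matching checksum only shows that the download is intact. It says nothing about who produced the image unless the checksum itself arrived through a trusted channel, which is where signatures come in: when a project publishes a signed checksum list, running something like `gpg --verify SHA256SUMS.asc SHA256SUMS` (file names assumed here) against a key you already trust is the step that ties the digest to a person.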

What Do We Really Need?

The PinePhone community has implemented some new rules, some channeling into the “automation” territory. This will possibly help a specific kind of problem to be less impactful in the future – though I’d argue that institutional memory should play a larger part in this. Beware of Greeks bearing gifts… until they learn how to work around your Discord bot’s heuristics? I already have, for instance. This is a monumental topic with roots beyond the Great PinePhone Snake Malware of 2021, and this article isn’t even about that as much as it’s about helping you understand what’s up with important aspects of Linux security, or maybe even the security of all open source software.

For me, this malware strikes the notes of “inevitable” and “course adjustment” and “growing pains”. Discussions about trust and software take place in every community that gets large enough.

We need the acknowledgment that Linux malware is possible and may eventually become widespread, and a healthy discussion about how to stop it is crucial. Linux still has effectively no malware, but the day we can no longer state so is approaching us.

I’m unsure on the exact course adjustment we need. Understanding the system goes a long way, but the security measures we expect can’t exclude power users and beginner developers. Technically, whether it’s containerization, sandboxing, trust-based infrastructure, or memory-safe languages, we need to know what we need before we know what to ask for.

I would like to thank [Lukasz] of the Pine64 community and [Hacker Fantastic] for help on the PinePhone situation fact-checks.

“it’s Bash, a language with unreadability baked in”

Cue a Greta “how dare you” impression…

They’re not wrong, though. Bash is the most idiosyncratic and aesthetically displeasing programming language I have seen. At least things like Brainf*ck and INTERCAL have a sense of humor about them.

No, Windows Command / Batch scripting is worse!

I don’t know about that… If you are familiar with BASIC, or other similar programming styles, it is pretty straight forward. I haven’t done a lot with BASH, but I have done several complex batch file scripts for packaging and combining binary files (.bin, .hex) and flashing parts using the command line, and it wasn’t hard at all, I just needed a reference to remember all the commands and how to use them.

It’s just what you are used to. To me, Batch looks a lot worse.

To the untrained eye, lisp looks like a parenthesis soup. Cobol like a Hex dump or a string listing of an executable. ADA looks like one of these yelling END USER AGREEMENTS. C is a mumbo-jumbo of special symbols. Java.speaks.with.dots.and.very.long.lines. Assembly is very repetitive and hard to make sense of.

None of which is particularly visually exciting. But once you are used to it, you can start appreciating “beautiful code”, spot patterns, etc. It’s all very subjective and we are all biased.

Have you tried writing anything in Powershell?

Even the most basic of tasks is convoluted, with no man pages to refer to.

Yeah there is no delay so everyone pings the loopback address, keep doing that till you get the delay you need. It’s amazing what you can do with a batch script, but it always takes a lot of ugly hacks to get there.

Powershell has the get-help command. try it.

zsh parameter substitutions has entered the chat…

(Don’t get me wrong, they’re awesome. But readable? Not so much.)

Yes indeed. I hate bash with an undying hatred — as a programming language, but I use it all the time as an interactive shell. A clear case of a tool being forced to do the wrong job.

Half of what I do for Hackaday is contained in a directory (read: “namespace”) full of 5-liners in Bash. If you chain simple things together, each of which having reliable behavior, you don’t really have to worry about the underlying language.

If you insist on writing “real programs” in Bash…. may God have mercy on your soul.

I’m definitely in the “hate it, but use it all the time” camp.

That’s the use of Bash: not a scripting language, but a “glue” layer to combine different programs.

What? Using a tool for its intended purpose?!? Kick this clown off of Hackaday!

:)

It’s not one of our proudest moments, but at my workplace we’ve made a Raspberry-Pi based jig for programming and testing some embedded devices during manufacturing, and the program & test procedure was almost entirely written in bash.

The only question is, “does it work?” on the one side and “Does it ever fail in a really expensive way? Does anyone lose fingers?” on the other. If the answers come out right, then congratulations for a nice piece of appropriate engineering :)

Don’t call yourself names, and just find a different job! :P

It’s awful as a programming language. It’s so bad it makes Perl look good. Sure, in a few cases (piping stuff around a lot) it’s pretty convenient, but as a programming language it is a horribly designed one. For anything longer than a few lines just use Python, your future self will thank you.

I find bash pretty easy to read. It’s not really a programming language per se, it’s a scripting language. To read it one needs to understand the programs it calls, sometimes it’s canonical Unix stuff that people are not used to reading anymore. But really it’s not hard.

> Say, in $HOME/.bashrc, you can alias sudo to stdin-recording-app sudo and grab the user’s

> password next time they run sudo in the terminal.

That’s why having sudo ask for the password is something that adds little security. If the bad guys get a “user account” for a couple of milliseconds, they can gain root provided that user sometimes “becomes root” with su or sudo.

Password-enabled sudo does currently add some amount of security to protect against less-prepared hackers who have keyboard access, as well as other non-security related benefits (like forcing you to take a moment that you might use to review your own command in case of mistakes). It’s still only a part of the puzzle, and for all the benefits it provides, it has its own flaws we should know to work around.

Ah yes. Windows users often run as administrators all the time. Which is why when you click on email link, your entire hard drive is encrypted by malware. And you are not asked for a password.

You are asked for password, just not your password *lol*

Whichever the system, if people run some software as root, not much can be done. Of course, some parts of the system could be natively read-only, then only the users’ data would be at risk, but still a problem.

As for the security in general, until we accept to pay the prices of good security, instead of ignoring it just because of laziness, we can’t have things correctly secured.

Oh I don’t know, I think that making the hardware impossible to brick from software (so that it is always possible to recover it, for instance by flashing new firmware) is the least that could be done. But that modem has bricking included as a feature, because they don’t want the user to be able to flash firmware on it. Company’s security wins over user’s security, and always will as long as there is no feedback mechanism that would make the user’s security more important.

If they can be blamed for RF interference, it is sensible of them to take steps to avoid it. Like in the answer below, users need to accept that with freedom comes having to assume responsibility and the maybe bad outcomes of their actions.

They shouldn’t really be blamed for users breaking things with different firmware.

Another addition would be to make it unusual to run software as root, limiting the attack surface, even surface that’s accidentally exposed. That would not protect from things like ransomware though; some things, we aren’t even discussing at the moment, but it’s clear to me that “root installs” are only part of the future security problems we aren’t talking about yet.

That’s the Android security model in a nutshell.

Android 10+ has the OS set as Read Only, and I hate it. I’m rooted because I want to use features that ordinary people don’t use, like good ad blocking and being able to change the appearance and sounds of the OS. I’m teetering on the edge of whether I should install a different OS so that I can customize the OS as I want instead of being stuck with stock features because of security. Security always comes at the price of freedom.

I disagree with the last bit, if you use the freedoms you have you can create setups much more secure than the default without getting in the way of use.

Security is a balancing act between a few factors, usability, remote and local access security, ease of recovery and severity of loss if breached. With full freedom (and sufficient interest, time and understanding of the user’s needs) you can create a much more perfect security setup for that situation…

But as always nothing is fool proof levels of secure – even if you performed the miracle of a perfect computer set up for security the social engineering is always there…

Thanks for reminding me to choose wisely the hardware for my next “smart” phone.

Rooting your device for good adblock is certainly unnecessary as AdGuard is better and doesn’t need root. As far as sounds go I have no idea what you are referring to as I can change any sound on my Pixel devices without root.

I don’t discourage rooting.

I’ve always rooted my devices starting with the OG Droid and continued up to the point of receiving my first Pixel, I have written several apps which require root (pub: f1vefour). These days I prefer security but certainly miss developing and running my own kernels, at one time there were tens of thousands of devices running my kernels.

People call me crazy, but this is why I don’t run on bare metal. No binary package management, no AppImage, no Flatpak, no “ready-to-run” stuff, nothing. I verify the full dependency chain, start to finish. If I don’t trust it, it’s relegated to one of three QEMU/KVM installs with their own NVMe passed through (they “share” a GPU): Windows 10 LTSB, Ubuntu LTS, macOS Big Sur.

Pretty happy with Sailfish OS on my phones, since Firejail is straightforward on it.

I may be paranoid, but at least I don’t have to deal with this kind of nonsense. Due diligence seems like wasted time until you have to recover from badware like this. Moral of the story: sandbox, audit, default to zero trust.

I’m shocked that they weren’t more suspicious based purely on the terrible spelling in the message. If that was an unsolicited text or email it would raise red flags immediately.

It might be a reliable metric when weeding out email spam, but isn’t anywhere near being a good metric in an international community that consists of different kinds of people, which is what the Pine64 Discord server is.

If those different kinds of people type like that, I don’t mind weeding them out. They’re the ones I WANT to weed out. Intelligent people in international communities that I frequent tend to try to sound like the opposite of an idiot. If they can’t be bothered, neither can I.

In bash you can define functions with / in the name:

function /bin/sudo {

script … sudo "$@"

}

So that’s something to beware of, typing /bin/sudo won’t save you.

I think, at the end of it all, we really have two paths to take. Trust the user input, or don’t.

Apt recently changed a safety feature that keeps you from accidentally blowing away important system files. This was in response to Linus Tech Tips managing to destroy a Pop!_OS install, while trying to install Steam. Instead of requiring a phrase to be typed to continue, you now need an extra flag, which is not mentioned. The original method did explain itself, but Linus even admitted to not bothering to read the whole thing. This change will at least stop that, but it still won’t stop a user from copy-pasting from a site or another user. It doesn’t stop malicious intent.

I think modularity and containerization in a system can help limit damage, but since power user actions are gonna look very similar to malicious actions, to properly protect a user, you either have to take away their freedom, or educate them. And since you can’t know if a user will bother to listen or not, the choice is, let a user put themselves at risk, or follow in Apple’s footsteps. We can make it easier to understand, we can make it clearer where the “danger” lines are, but a user that doesn’t listen, can’t be protected.

“… a user that doesn’t listen, can’t be protected.”

Sure they can. Obligatory car analogy:

• throttle return springs

• brake lights

• steel- or aramid-belted tires

• airbags

• seatbelt interlocks

• seatbelt pretensioners

• antilock brakes

• automated braking

• proximity alerts

• rain-sensing windscreen wipers

• automated headlights

• automatic lane centering

• automated collision avoidance systems

Heck, in WWII, the Luftwaffe had to invent a “stick-kicker” that automatically pulled up the nose of dive bombers at low altitude to keep target-fixated pilots from flying into the ground along with their explosive payload. At the time, these were arguably the best-trained pilots in the world.

Add in everyday safeties like dead-man’s switches on industrial and heavy equipment.

Humans are diverse, fallible, often distracted, and reliably stupid – not because we’re bad or insufficient, but because these characteristics are built into us. We have limited attentive bandwidth, we get tired, we experience hope and other optimisms, we get sick, we get interrupted, we get surges of adrenaline. Our shortcomings, imperfections, and failings are uncountable and literally legendary. Ask anyone from Sophocles to Homer to little Bobby Tables.

Our tools must accommodate this. They must fail safe.

> Heck, in WWII, the Luftwaffe had to invent a “stick-kicker” that automatically pulled up the nose of dive bombers at low altitude to keep target-fixated pilots from flying into the ground along with their explosive payload.

Of course the Japanese just saw that as a feature.

(Snort!)

LOL!

That’s an extremely dishonest comparison. Throttle doesn’t have a return spring to protect from user errors, it’s for convenience (just like fancy shell features like completion and fancy PS1’s), same with brake/turn lights, ain’t nobody have time to wave their hand like in the first automobiles. Same with auto levelling lights and a bunch of other stuff.

Seriously, calling “rain sensing wipers” a safety feature is patently idiotic

And the “lane centering/collision avoidance” are often turned off as they can be very intrusive (wrongly detecting lane, braking too hard on just someone merging it etc.) so at least that part matches with the `-i` option for many commands

The “protections” for users that do not listen become an impediment for people that are competent. You just can’t go too far into “protecting idiots from themselves” before inconveniencing the competent ones.

And somehow humans do need errors to learn.

Some humans even need to make errors themselves.

Early automobiles had unsprung throttles, not unlike later aircraft. Automotive throttle return springs were added because in emergencies drivers too often failed to throttle back while braking and/or turning, accidentally (ha!) maintaining speed into the crash. The same happened if the driver became incapacitated. This was bad. The design changed to be more like a dead-man’s switch. See also, “stick-kicker”, above.

We can blame the user, but our products and services won’t exist without them. And then we get to live outdoors and beg for food while our wiser competitors design systems that fail safe.

Reliability in adverse conditions is a feature, not a bug. So is avoiding liability suits and the ensuing ego-driven penury.

I don’t believe in limiting power users, either. I’d like to point out that even power users make mistakes, and power users deserve to get no less protection from malicious actions than regular users – but definitely more power over what they’re protecting themselves against. I don’t think that this choice is binary, instead, I think that Linux developers are in a unique position to design security measures that don’t limit power users’ freedom. I also believe that this position and the time we have to prepare shouldn’t be wasted away.

Making sweeping changes because Linus Tech Tips does something stupid is an over reaction. He’s always doing something stupid. I’m not convinced he actually knows anything about computers. He just seems to understand that certain shapes fit in certain slots. RAM slot, put RAM. SATA connector, attach SATA drive.

I can’t stand that YouTube channel. Reminds me too much of PewDiePie. Ignorance isn’t a positive character trait.

Strongly agree with you. They did a series recently on “Gaming on Linux” and stated it took two (2) days to figure out how to run a shell script. Previous series with Pop!_OS install complained about opposite things…

Infotainment.

“a game shouldn’t be able to wipe your system” – that is part of the problem. You don’t know if it is a game or not unless you can and will read the code. In this case it is a game with a malicious payload. Code signing only can tell you who signed the code. Whether you can trust them or not and whether you can trust the CA that is vouching for the signature is another out of band question. People need to understand that I can code sign malicious code, the signer does not vet the safety of the code, it only tells you I signed it. Whether that means you can actually hold me responsible is another story. I might be offshore and using a completely fabricated identity, it is up to the end user to decide if the CA is confirming my identity properly.

With great power comes great responsibility, if you install anything with root permissions you are open to anything that happens. This is the dark side of truly open source code. It is up to you to decide how you feel about the person providing the code which requires researching their reputation in a loosely organized community, mostly based on other users’ experiences with the code. Most open source packages are installed based on other people’s opinions of their usefulness and integrity, someone has to try it first though.

It kind of works like this, my friend develops code, I trust him so I run it, I go online and tell others how useful it is and then the trust chain continues until it is widely accepted as safe. It is kind of like a community based CA based on a trust chain of users of the code.

As you correctly point to, our current web of trust can’t prevent malicious actions; instead, it raises the barrier of entry (with interpersonal keysigning happening IRL), disincentivizes malicious actions (obvious who to blame with interpersonally signed keys) and also helps us with damage control (key revocation + someone to blame and investigate into).

My mention of CAs was just an example of “how we use trust-based technologies already”, as far as I know, there’s no CA in the web of trust that the package-signing kind of people participate in. Perhaps, Debian-like package signing would be a better example to illustrate the paragraph.

> With great power comes great responsibility, if you install anything with root permissions you are open to anything that happens.

If you are alive, you are bound to die, but it’s generally accepted that we should manage our risks and live longer as a result. I concur with you on the “web of trust” summary, and I think it’s one of the cornerstones that we can and do build security practices on. Many distribution methods now require installs with root, and here’s one example of a possible future scenario: by making root-less packaging more convenient, and root-enabled packaging therefore less prevalent, we would turn requiring root permissions for a package into something unusual that is easier to avoid doing accidentally. Your web of trust summary is good, and I think that, even in that case, users deserve more protection than they currently get.

B^)

Why don’t they have multiple users and different permissions, isn’t this linux?

Noob question. Because it was on IRC and it looked like something fun and of course they run it instantly as root. Linux does not have viruses!!elf1

Funny! I suppose with a history of programming on BSD 3, 4, System V, Minix, Linux from the very beginning, Android, Busybox et al., that does make me a bit of a noob.

Therefore you are Brian Kernighan, and I claim my £5. :-)

You are a noob when I don’t agree with you. Synonym of “ur stupeed”

That would help, but when things are using stuff like cellular drivers and addressing the OS at a very low level, it is hard to create a permission that allows low level access but still blocks malicious code.

I wouldn’t expect a permission for lower-level access to block malicious code effectively, but such a permission should be harder to hand out for the user, in my view. Not technically unfeasible, but not as easy as UAC.

There is no reason why a driver needs to have “escalated” privileges. It only needs access to its hardware and a few select kernel calls. We need a fine-grained security model where each module can only access what they need. Better yet we need to assume that all code is potentially malicious and put firewalls everywhere in internal code. So what if it is slow, our machines are already really fast, we can sacrifice some cycles for security. After all we put locks on our physical doors and it slows us down but we put up with it.

GNU Hurd called, it wants its microkernel concept back

The article is written in a confusing way. Sounds like a .INSTALL bash script is executed as part of the package install? Seems a shame executing arbitrary shell scripts is a supported part of the package management system eh. Surely better to have some config file with a predefined set of operations allowed by the package manager, not “…and then execute whatever is in this bash script as root”.

That’s basically every repository-capable package format out there – for instance, Debian has postinstall, preinstall, prerm and postrm scripts. There’s some standardization going on, but not in a security direction – the repository-distributable kind of package formats have a strictly defined set of security protections, but those have to be coupled with the package being inside a repository (which enables protections like package signing and vetting by maintainers). Any “config file”-like security measures are (IMO mostly rightfully) delegated to package formats designed to be individually distributable from the beginning.

Is PinePhone a safe platform?

I don’t know what is meant by “safe” here.

But it is no better than any other platform that allows the user to run untrusted / unverified code.

My Debian install has the same problem — it can all be taken down by running untrusted code.

Perhaps untrusted apps should be installed using a non-privileged method — to prevent abuse.

E.g. installed into a user home directory (so that it does not require escalated privs).

Maybe society will figure out a new way to earn and demonstrate trust since that’s ultimately the core issue.

It’s more than just trust, security fails can happen from hardware problems. Look at the rowhammer issues, there is no trust problem but we still have a security failure.

“Is the X platform safe” is a reflexive question that some people will ask (sometimes openly and sometimes internally). To give an insight, a portion of people asking this question will be less security-savvy.

What you’re proposing sounds like one of the precautions we could take, and I imagine that the future world has us invent quite a few more, preventing users from having a new game do `rm -rf /*` on their devices.

I was made a little wary of Pine when they decided to uproot and move from California to China. Their stated reasons didn’t give me any additional confidence. I could have agreed with a need to be closer to their manufacturers, for example, but ironically they said something about avoiding government meddling.

Clearly they know nothing about China. It’s like saying I moved to Russia to get away from news about Putin. =D That definitely makes me wary too. I had considered them in the past, but maybe I’ll just keep looking elsewhere or keep building things myself.

Where was this mentioned? Pine is an international team, but the fulfillment is all out of China.

“user home directory” is not enough.

If an app can’t access your root but can access your home, you’re fucked anyway, just look at the xkcd in the article.

Perhaps there is a need for either a more stratified hierarchy of responsibilities, like a hypothetical “user’s root”, or vertical isolation of tasks, like Android does with its ephemeral users for each process.

Also, each resource needs to be described in terms of risks for the world (or various levels of containing environments of the system: household, organization, … e.g. anything making owner liable), the system, or the user if the resource would be destroyed, modified, used in certain manner, or conveyed.

It is a logic similar to Asimov’s Laws of Robotics.

Linux has no malware? Maybe technically depending how you define “malware”, and “Linux”.

If you mean malicious binaries acting as trojans, targeting desktop Linux, perhaps.

But if you look at stuff that’s been done in supply chain attacks, compromised libraries, etc., or attacks against Linux web servers to make them run malicious code, or the new 10.0-severity Java logging bug, I’d say that’s a misleading statement.

Reproducible builds [1] [2] is one important component which should be mentioned in this article’s context.

No single measure will cover all of this complex topic, and, at some level, the One Turtle Down There [3] is called trust.

I can’t vouch for each of the 2249 (`dpkg --get-selections | wc -l`) packages which make up my daily driver. I can only have a personal relationship with a few of those.

I trust that the Debian folks are doing The Right Thing.

[1] https://reproducible-builds.org/

[2] https://en.wikipedia.org/wiki/Reproducible_builds

[3] https://en.wikipedia.org/wiki/Turtles_all_the_way_down

Bootstrappable builds too. https://bootstrappable.org/ Guix has some futuretech in this area.

I think that future popular distributions should copy the read-only partition for system files that SteamOS will have, since hardening those files gets you a lot of security. And of course they should keep dev versions for really advanced users, as most of the current LiGNUx users are.

Nothing using Quectel will ever be safe. Outdated, broken Qualcomm Android kernels combined with some real ugly hacks incompetently layered on top. God that whole ecosystem is such a fucking trash fire.

Unknown binaries offered for free on the internet without source, and it turned out to be an evil plot to conquer the world. Shocker.

Who would’ve thought?!

Yeah, I am shocked.

On one hand, we have the quote about the source code of the “game” not being open,

on the other hand, we have another quote telling us that openness doesn’t mean secure.

Where do you see openness in a closed source game ⸮

(I got it that it was targeting an opensource project community etc, but this was too easy to mention, sorry not sorry)

Would sharing the source code even help in the malware situation? No! In fact, attaching a link to a source code repo would help [ubuntu] make the malware distribution more plausible. When you publish a package, even on supposedly reputable platforms, there’s rarely any checks on whether the code inside the package you upload matches the code in your repo.

–> 100% this, there is no way to prove that a given binary is actually matching a given piece of source code, unless you build it yourself from said source code.
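The “build it yourself” check can be sketched roughly as below. This is only a sketch and it rests on a big assumption: the build has to be reproducible (same source, same toolchain, bit-identical output), otherwise a mismatch proves nothing. The function names and file paths are illustrative, not part of any real tool.

```python
import hashlib


def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large binaries need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_local_build(distributed_path, rebuilt_path):
    """True only if the downloaded binary is byte-identical to our own build.

    Assumes a reproducible build; without one, honest binaries differ too.
    """
    return sha256_of(distributed_path) == sha256_of(rebuilt_path)
```

Usage would look like `matches_local_build("snake-downloaded.bin", "snake-rebuilt.bin")`, with both paths being placeholders for the shared binary and the one you compiled from the published source.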

There is nothing to do to help security.

If you add a constraining layer, some users won’t use it, and when it fails, the users using it will be really pissed off.

If you impose a developer certification, you will restrict how code spreads and put up an entry barrier for beginner programmers.

If you sandbox everything, you block the user from using his own hardware.

The only viable solution I see is to add some kind of “community mark and comment” to the packages (à la docker).

So, this Snake would still be available but flagged as “dangerous and destructive to the system”.

But if someone wants to install it, you have no right to prevent this user from doing so. Just inform him/her better.

You can even ask at the first usage of the repository manager what you want it to display (safe by community standard, dev, all, deprecated, etc.)

Well, that’s my two cents anyway.
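The “community mark and comment” idea above could look something like this in a repository front end. It is a rough sketch only: the `Package` type, the flag names and both helper functions are invented for illustration and do not describe any existing repository manager.

```python
from dataclasses import dataclass, field


@dataclass
class Package:
    name: str
    # Community-assigned marks, e.g. "destructive" or "deprecated" (made up).
    flags: set = field(default_factory=set)


def visible_packages(packages, hidden_flags):
    """Apply the user's display preference: hide packages with any hidden flag."""
    return [p for p in packages if not (p.flags & hidden_flags)]


def install_warning(package):
    """Inform rather than forbid: return a warning string, or None if unflagged."""
    if package.flags:
        marks = ", ".join(sorted(package.flags))
        return f"{package.name} is flagged by the community as: {marks}"
    return None


repo = [
    Package("snake", {"destructive"}),
    Package("tetris"),
]

# A user who asked to see only "safe by community standard" packages.
safe_view = visible_packages(repo, hidden_flags={"destructive", "deprecated"})
print([p.name for p in safe_view])
print(install_warning(repo[0]))
```

Note that the install itself is never blocked here; the flagged package just gets hidden from the default view and carries a warning for anyone who asks for it anyway.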

“What Do We Really Need?”

Easy, just wash everything with Virustotal.com before you install it. That way you (and Google) will know if the application is malware.

To be BRUTALLY honest with the article author…. That “share the .exe” being older than dirt is also why MALWARE is older than dirt. It’s why people get zapped and pwned ALL THE TIME.

QUIT EXCUSING IT. You should be ashamed of yourself.

Jeepers, I feel thoroughly ashamed of myself after reading this comment!

Seriously though, it’s not about excusing it. It’s about acknowledging the fact that sharing software on Windows is easy, whereas sharing software on Linux is hard. The Linux world will be only at a bigger disadvantage from making it harder than it already is. And with new (IMO very warranted) moves to make sharing software on Linux easier, we need to keep an eye out for security, lest we end up in the same place where Windows stands. Mind you, Windows is adding checks&balances to prevent even .exe software from fucking up your system, so it’s gaining even more ground in the “ease of SW distribution” field.

The hacker/terrorist should be hunted down and given what it deserves….. a nice long waterboarding session then drowning.