What’s better than a 100MHz scope? How about an optical one? Researchers at the University of Central Florida think that’s just the ticket, and they’ve built an oscilloscope that can measure the electric field of light. You can find the full paper online.

Reading the electric field of light is difficult with traditional tools because of the very high frequency involved. According to [Michael Chini], who worked on the new instrument, the oscilloscope can be as much as 10,000 times faster than a conventional one.
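To put "very high frequency" in numbers: the paper's fundamental pulse has a central wavelength of 3.4 µm. The short sketch below converts that wavelength into a carrier frequency (f = c/λ) and the duration of one optical cycle (T = λ/c). The helper names are made up for illustration; the only physics assumed is the vacuum speed of light.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def optical_frequency_hz(wavelength_m: float) -> float:
    """Carrier frequency of light: f = c / wavelength."""
    return C / wavelength_m

def period_s(wavelength_m: float) -> float:
    """Duration of one optical cycle: T = wavelength / c."""
    return wavelength_m / C

WAVELENGTH = 3.4e-6  # central wavelength of the paper's fundamental pulse, m

print(f"carrier frequency: {optical_frequency_hz(WAVELENGTH) / 1e12:.1f} THz")
print(f"one optical cycle: {period_s(WAVELENGTH) * 1e15:.2f} fs")
```

The result is a carrier in the tens of terahertz with a period of roughly 11 fs, far beyond what any electronic front end can follow directly.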

As you might expect, measuring a few cycles of light requires some special techniques. According to the paper:

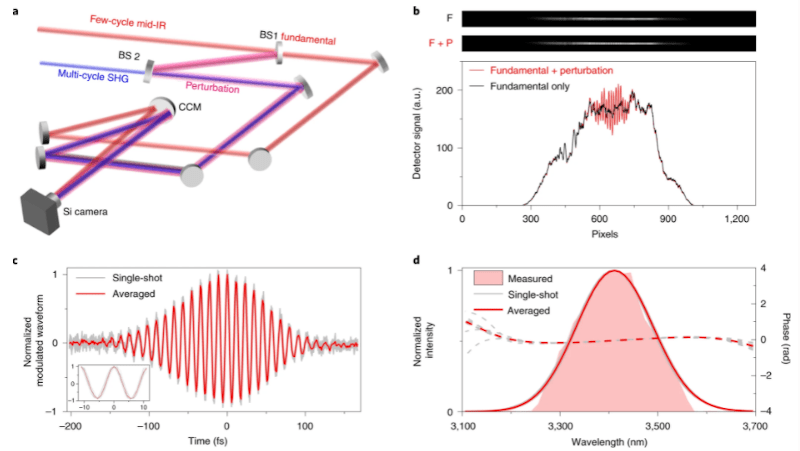

[A]n intense fundamental pulse with a central wavelength of 3.4 µm creates charge packets in the pixels of a silicon-based image sensor via multiphoton excitation, leading to detectable photocurrents. The probability of excitation is perturbed by the field of a weak perturbation pulse, leading to a modulation in the excitation probability and therefore in the magnitude of the detected photocurrent. We have previously shown that, for collinear fundamental and perturbation pulses, the dependence of the modulation in the excitation probability on the time delay between the two pulses encodes the time-varying electric-field waveform of the laser pulse. Here, by using a crossed-beam geometry with cylindrical focusing, we map the time delay onto a transverse spatial coordinate of the image sensor chip to achieve single-shot detection.

Did you get that? In other words, instead of measuring the light pulse directly, they measure the change it makes to the photocurrent produced by another, stronger pulse. We think…
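Here is a toy version of that idea, under loudly stated assumptions: the excitation rate is modeled as the instantaneous field raised to the power 2n (n = 4 photons, since a 3.4 µm photon carries about 0.36 eV and silicon's gap is about 1.1 eV), and both pulse shapes are hypothetical Gaussians. Delaying the strong pulse by τ and subtracting the unperturbed yield should give a signal that follows the weak field at t = τ. The last function is an idealized picture of the crossed-beam trick: two plane waves hitting the sensor at ±θ/2 from its normal arrive with a relative delay that grows linearly across the chip. The angle used is an assumption, not the paper's value, and none of this is the authors' actual model.

```python
import numpy as np

# Normalized units: time is measured in optical cycles of the fundamental (T = 1).
OMEGA = 2 * np.pi   # carrier angular frequency, rad per cycle
N_PHOTONS = 4       # assumption: ~4 photons of ~0.36 eV to cross silicon's ~1.1 eV gap
C = 299_792_458.0   # speed of light, m/s

def fundamental(t, sigma=0.6):
    """Strong few-cycle pulse: hypothetical Gaussian envelope with a cosine carrier."""
    return np.exp(-t**2 / (2 * sigma**2)) * np.cos(OMEGA * t)

def perturbation(t, amp=1e-3, sigma=2.0, phase=0.7):
    """Weak 'unknown' pulse whose field we want to recover (hypothetical shape)."""
    return amp * np.exp(-t**2 / (2 * sigma**2)) * np.cos(OMEGA * t + phase)

def excitation_yield(field, dt):
    """Toy model: excitation rate proportional to the instantaneous field to the power 2n."""
    return np.sum(field ** (2 * N_PHOTONS)) * dt

def delay_at_position(x_m, crossing_angle_deg):
    """Idealized crossed beams at +/- theta/2 from the sensor normal:
    relative arrival delay at transverse position x is 2*x*sin(theta/2)/c."""
    half_angle = np.deg2rad(crossing_angle_deg) / 2
    return 2 * x_m * np.sin(half_angle) / C

t = np.arange(-8.0, 8.0, 0.005)
dt = t[1] - t[0]
delays = np.arange(-4.0, 4.0, 0.02)

# Yield of the strong pulse alone, then the change caused by adding the weak pulse
# while the strong pulse is delayed by tau (single-shot: each tau is a sensor column).
baseline = excitation_yield(fundamental(t), dt)
modulation = np.array([
    excitation_yield(fundamental(t - tau) + perturbation(t), dt) - baseline
    for tau in delays
])

# The strong pulse acts as a short gate, so the modulation should track E_p(tau).
corr = np.corrcoef(modulation, perturbation(delays))[0, 1]
print(f"correlation of modulation with E_p(tau): {corr:.3f}")
print(f"delay across 1 mm at a 10 degree crossing: {delay_at_position(1e-3, 10) * 1e15:.0f} fs")
```

The high power 2n is what makes the gate shorter than an optical cycle; with a weaker nonlinearity the recovered waveform would be smeared out.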

Unless you’re moving high-speed data across fiber optics, we aren’t sure you really need this. However, the concept is intriguing, and the general idea isn’t new. For example, we’ve seen capacitance meters that measure the change in frequency caused by adding an unknown capacitor to an existing oscillator.
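The capacitance-meter trick is easy to sketch, assuming an ideal LC oscillator where the unknown capacitor is added in parallel with a known reference capacitor. Since f = 1/(2π√(LC)), the ratio of the two frequencies gives Cx = C_ref((f0/f1)² − 1), and the inductance cancels out. The component values below are hypothetical.

```python
import math

def lc_frequency(l_h: float, c_f: float) -> float:
    """Resonant frequency of an ideal LC tank: f = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2 * math.pi * math.sqrt(l_h * c_f))

def unknown_capacitance(c_ref_f: float, f0_hz: float, f1_hz: float) -> float:
    """Cx in parallel with C_ref shifts f0 down to f1: Cx = C_ref * ((f0/f1)**2 - 1).
    L drops out, so it never has to be known precisely."""
    return c_ref_f * ((f0_hz / f1_hz) ** 2 - 1.0)

# Hypothetical component values for illustration.
L = 100e-6      # 100 uH
C_REF = 1e-9    # 1 nF reference capacitor
C_X = 470e-12   # the "unknown" we pretend to measure

f0 = lc_frequency(L, C_REF)
f1 = lc_frequency(L, C_REF + C_X)
print(f"f0 = {f0 / 1e3:.1f} kHz, f1 = {f1 / 1e3:.1f} kHz")
print(f"recovered Cx = {unknown_capacitance(C_REF, f0, f1) * 1e12:.1f} pF")
```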

If you want something more conventional, maybe look at some popular scopemeters. Of course, something this fast might be able to apply time-domain reflectometry to fiber optics. Maybe.
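For a feel of why timing speed matters in fiber reflectometry: a reflection that returns after a round-trip time t sits at d = c·t/(2·n_g) down the fiber, and the same formula turns a timing resolution into the smallest resolvable spacing. The group index of 1.468 is an assumed typical value for standard single-mode fiber near 1550 nm; real instruments are also limited by pulse width and noise, which this sketch ignores.

```python
C = 299_792_458.0    # speed of light in vacuum, m/s
GROUP_INDEX = 1.468  # assumption: typical for standard single-mode fiber near 1550 nm

def distance_in_fiber_m(time_s: float, n_g: float = GROUP_INDEX) -> float:
    """Convert a round-trip time to a one-way distance: d = c * t / (2 * n_g)."""
    return C * time_s / (2 * n_g)

# Where is a reflection that comes back 10 microseconds after launch?
print(f"echo at 10 us -> {distance_in_fiber_m(10e-6):.1f} m down the fiber")

# The same formula gives the smallest resolvable spacing for a given timing resolution.
for resolution_s in (1e-9, 1e-12, 10e-15):
    print(f"{resolution_s:.0e} s timing -> {distance_in_fiber_m(resolution_s) * 1e6:.3g} um")
```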

Paywalled. Seriously, HaD. What’s the point of including a link if it’s useless to most of your readership?

(yeah, yeah, I know there are ways around it, including using my work account, but that’s not the point)

It’s funny, since the article is on Cornell University’s arXiv. Maybe not the final version, but it can still be found. However, don’t expect much useful detail in the article. Scientific articles usually don’t dive deep into how things are done; they are usually good only for a general overview of the techniques used, not for practical implementation.

https://arxiv.org/abs/2109.05609

The arXiv paper is almost word-for-word the same as the Nature version, and also includes the many pages of Supplementary Information referred to but not present in the Nature paper itself.

I’m not sure what “scientific articles” you’re reading: The whole point of a real scientific paper is to communicate enough information that other people can replicate the work. If you can’t infer how to do it from the paper and its references, then you need to learn more about the field first.

Wrong. Lots of papers in machine learning, for example, omit the information needed to reproduce the results, to keep a competitive advantage, and instead describe the general technique and focus on analyzing its performance.

The complaints aren’t new, and this isn’t the only field.

Practical details are often seen as unimportant, while they turn out to be quite relevant when replicating.

“The whole point of a real scientific paper is to communicate enough information that other people can replicate the work.”

The whole point is to bulwark publishing companies’ (and dubious professional societies’) bottom line.

https://tribunemag.co.uk/2021/08/we-cant-trust-the-market-with-scientific-knowledge

@Paul said: “Paywalled. Seriously, HaD. What’s the point of including a link if it’s useless to most of your readership?”

To drive traffic to Sci-Hub?

Such a ‘scope’ is big (it needs a lot of space on an optical table, including the laser, definitely more than 1 m²), and you need a temperature-stabilized lab around it, too. Cost: 0.5 to 1 M€ or M$ (depending on which side of the big lake you are on).

Similar techniques have been around for about 20 years (FROG, SPIDER, and all their derivatives):

https://en.wikipedia.org/wiki/Frequency-resolved_optical_gating

https://en.wikipedia.org/wiki/Spectral_phase_interferometry_for_direct_electric-field_reconstruction

These might help you understand the technique.
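As a taste of how FROG works, here is a minimal sketch of a second-harmonic-generation (SHG) FROG trace, I(ω, τ) = |∫ E(t) E(t − τ) e^(−iωt) dt|², computed with an FFT for integer-sample delays. The chirped Gaussian pulse is hypothetical and in arbitrary units, and this only builds the trace; recovering the field from it needs a separate phase-retrieval step not shown here.

```python
import numpy as np

def shg_frog_trace(field, max_shift):
    """SHG FROG: I(w, tau) = |FT_t[E(t) E(t - tau)]|**2 for integer-sample delays.

    field: complex envelope on a uniform time grid, assumed to sit well inside the
    grid because np.roll wraps around. Row k of the result is delay k - max_shift.
    """
    rows = []
    for shift in range(-max_shift, max_shift + 1):
        gated = field * np.roll(field, shift)  # E(t) * E(t - tau)
        spectrum = np.fft.fftshift(np.fft.fft(gated))
        rows.append(np.abs(spectrum) ** 2)
    return np.array(rows)

# Hypothetical linearly chirped Gaussian pulse, arbitrary units.
t = np.linspace(-20, 20, 512)
field = np.exp(-t**2 / (2 * 2.0**2)) * np.exp(1j * 0.1 * t**2)
trace = shg_frog_trace(field, max_shift=60)

# SHG FROG traces are symmetric in delay, so the sign of the chirp cannot be read
# from the trace alone (a known ambiguity of this FROG variant).
print(np.allclose(trace, trace[::-1]))
```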

Yes, and we have used interferometric autocorrelation for decades for optical pulse characterization, with either second-harmonic detection or two-photon detection. I have used two-photon detectors with autocorrelation for 12 fs pulses. I’ve never tried attosecond pulses personally, as the required bandwidth is normally only produced with high-harmonic generation, and detection over such a large bandwidth (down into the vacuum UV range) is tricky.
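Interferometric autocorrelation with a two-photon detector can be sketched as G(τ) = ∫ |E(t) + E(t − τ)|⁴ dt, with E the complex field. A well-known sanity check is the 8:1 ratio between zero delay (|2E|⁴ = 16|E|⁴) and fully separated pulses (2|E|⁴). The 12 fs pulse width echoes the comment above, but the 800 nm carrier and Gaussian shape are assumptions for illustration.

```python
import numpy as np

def interferometric_autocorrelation(field, dt, max_shift):
    """Two-photon interferometric autocorrelation for integer-sample delays:
    G(tau) = integral of |E(t) + E(t - tau)|**4 dt, with E the complex field."""
    return np.array([
        np.sum(np.abs(field + np.roll(field, shift)) ** 4) * dt
        for shift in range(-max_shift, max_shift + 1)
    ])

# Hypothetical 12 fs (intensity FWHM) Gaussian pulse at an assumed 800 nm carrier.
dt = 0.05                                  # fs
t = np.arange(-100.0, 100.0, dt)           # fs
fwhm = 12.0                                # fs
carrier = 2 * np.pi * 299.792458 / 800.0   # rad/fs (c = 299.792458 nm/fs)
field = np.exp(-2 * np.log(2) * t**2 / fwhm**2) * np.exp(1j * carrier * t)

g = interferometric_autocorrelation(field, dt, max_shift=1600)  # delays up to +/-80 fs
ratio = g[1600] / g[-1]  # zero delay versus well-separated pulses
print(f"peak-to-background ratio: {ratio:.2f}")
```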

I work for an ultrafast laser manufacturer.

No worries, as the technology advances it will get smaller and cheaper soon.

What is genuinely novel about this paper is that it’s a single-shot technique, not reconstruction of the waveform from stepping a delay line over many identical pulses. Of course in the paper they do average their “single-shot” measurement to improve SNR, so…
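The averaging remark can be made concrete. If every shot records the same waveform plus independent noise, averaging N shots lowers the noise RMS by roughly √N, so 100 shots buy about a tenfold improvement. This assumes zero-mean Gaussian noise and a perfectly repeatable waveform; the waveform and shot count are hypothetical, not taken from the paper.

```python
import numpy as np

def noisy_shots(signal, n_shots, noise_rms, generator):
    """Repeat the same waveform n_shots times with independent additive Gaussian noise."""
    return signal + generator.normal(0.0, noise_rms, size=(n_shots, signal.size))

rng = np.random.default_rng(0)
x = np.linspace(-3, 3, 2000)
signal = np.exp(-x**2) * np.cos(2 * np.pi * x)  # hypothetical stand-in waveform
shots = noisy_shots(signal, n_shots=100, noise_rms=1.0, generator=rng)

single_error = np.std(shots[0] - signal)
averaged_error = np.std(shots.mean(axis=0) - signal)
print(f"noise reduction from averaging 100 shots: {single_error / averaged_error:.1f}x")
```

The √N gain only holds if the pulses really are identical from shot to shot; a single-shot method is valuable precisely when that assumption breaks down.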

I can’t think of too many applications for this technique except it could be useful in quantum optics experiments working with squeezed light. But I’m sure more clever people will find out what this is good for. When a new tool is invented, new ideas almost always follow!

Just get yourself a good scope with gigahertz bandwidth, lol. It will cost less, lol.

My next O-scope might be 1 more than my wife will tolerate!

B^)