Building a complete operating system by compiling its source code is not something for the faint-hearted; a modern Linux or BSD distribution contains thousands of packages with millions of lines of code, all of which need to be processed in the right order and the result stored in the proper place. For all but the most hardcore Gentoo devotees, it’s way easier to get pre-compiled binaries, but obviously someone must have run the entire compilation process at some point.

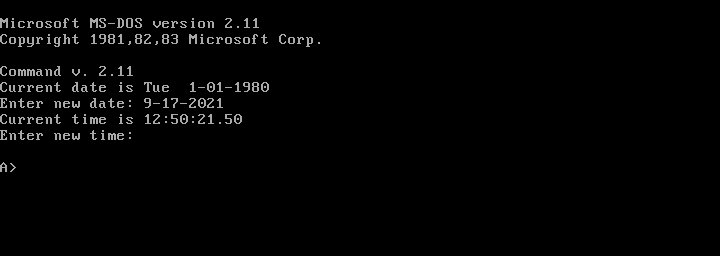

What’s true for modern OSes also holds for ancient software such as MS-DOS. When Microsoft released the source code for several DOS versions a couple of years ago, many people pored over the code to look for weird comments and undocumented features, but few actually tried to compile the whole package. But [Michal Necasek] over at the OS/2 Museum didn’t shy away from that challenge, and documented the entirely-not-straightforward process of compiling DOS 2.11 from source.

The first problem was figuring out which version had been made available: although the Computer History Museum labelled the package simply as “MS-DOS 2.0”, it actually contained a mix of OEM binaries from version 2.0, source code from version 2.11 and some other stuff left from the development process. The OEM binaries are mostly finished executables, but also contain basic source code for some system components, allowing computer manufacturers to tailor those components to their specific hardware platform.

Compiling the source code was not trivial either. [Michal] was determined to use period-correct tools and examined the behaviour of about a dozen versions of MASM, the assembler likely to have been used by Microsoft in the early 1980s. As it turned out, version 1.25 from 1983 produced code that most closely matched the object code found in existing binaries, and even then some pieces of source code required slight modifications to build correctly. [Michal]’s blog post also goes into extensive detail on the subtle differences between Microsoft-style and IBM-style DOS, which go deeper than just the names of system files (MSDOS.SYS versus IBMDOS.COM).

The end result of this exercise is a modified DOS 2.11 source package that actually compiles to a working set of binaries, unlike the original. And although this does not generate any new code, since binaries of DOS 2.11 have long been available, it does provide a fascinating look into software development practices in an age when even the basic components of the PC platform were not fully standardized. And don’t forget that even today some people still like to develop new DOS software.

Was it just me or do others with a long memory think that the ideas for MS-DOS were lifted from DEC’s RT-11?

Yeah and Windows NT smells a lot like VMS when you peel back the covers. Microsoft has always suffered from a lack of innovation, they copied relentlessly from Digital and poached many of their best engineers because Gates and Allen had no talent for system design.

Look up the name “David Cutler”

One of the engineers that worked on VMS and TR-11 while at DEC, then moved to Microsoft to lead work on Windows NT and XP.

He’s certain to not be the only one from those days with fingers in much of early computing, just the only name I remember. So it’s certainly no surprise that there are so many commonalities.

(As a side note, I just learned Mr Cutler still works at MS, is on the Xbox team, and had a huge role in its OS too)

Cutler never worked on RT-11 (or TR-11, which doesn’t exist). His PDP11 work at DEC was on RSX.

Yes, the TR was a typo of RT, and I could have sworn he did, but I don’t remember why I thought that. Wikipedia says you are right, so I stand corrected.

Cutler also designed VAXELN, the VAX real-time OS, mainly for the rtVAX models.

The more you read about Cutler’s work, the more you realize, this is one brilliant guy.

I corresponded with Dave Cutler during my time at Microsoft (2008-2010). He was the original designer of both VMS and Windows NT. In case you were wondering what NT stood for, I wondered the same thing, and someone told me that the initials “WNT” were actually derived by adding one letter to each letter in VMS. I don’t know if that’s true, but it is a cool story.

This is a great thread! I’m one of those who spent many months reverse engineering DOS’s Undocumented Calls.

Anyway, due to this thread I did some Googling around and came up with a pretty interesting article. The author, Mark Russinovich, is also a big low-level tech at MS:

https://www.itprotoday.com/compute-engines/windows-nt-and-vms-rest-story

Enjoy!

The ideas for DOS were lifted from CP/M…the ideas for which were lifted from RT-11.

Almost. Gary Kildall, the creator of CP/M, had experience on a PDP-10. And so was influenced by TOPS-10, which was derived from an earlier OS. RT-11 was a later OS by DEC and used conventions from TOPS-10. In some sense, both RT-11 and CP/M were similar, but only in a superficial sense. Similar names for similar utilities, like the garbage can called *PIP. Not to mention, the 3 character file extension names and the limited character set. Since the PDP-10 was an 18 bit machine, it could pack 3 6 bit characters into an 18 bit word. RT-11 continued that convention because it used RAD-50 encoding to pack 3 characters into a 16 bit word. The internals were very different, however. RT-11 was/is a real time system and CP/M isn’t. TOPS-10 was a mainframe system and had very different characteristics.
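The RAD-50 packing mentioned above can be sketched in a few lines of Python. This is only an illustration, not DEC’s code: the function names are made up, and the 40-character table assumes the PDP-11 variant of the character set, with the normally unused code 29 shown as “%”. Three characters are treated as digits in base 40 (octal 50, hence the name), and because 40 × 40 × 40 = 64000 is less than 65536, the result always fits in a 16-bit word.

```python
# Assumed PDP-11 RAD-50 table: the index of each character is its code (0..39).
# Code 29 is normally unused; "%" is a placeholder chosen for this sketch.
RAD50_CHARS = " ABCDEFGHIJKLMNOPQRSTUVWXYZ$.%0123456789"


def rad50_encode(text):
    """Pack up to three characters into one 16-bit word (hypothetical helper)."""
    if len(text) > 3:
        raise ValueError("RAD-50 packs at most three characters per word")
    word = 0
    # Short strings are padded with spaces (code 0) on the right.
    for ch in text.upper().ljust(3):
        # str.index raises ValueError for characters outside the table.
        word = word * 40 + RAD50_CHARS.index(ch)
    return word


def rad50_decode(word):
    """Unpack one 16-bit word back into three characters (hypothetical helper)."""
    if not 0 <= word < 40 ** 3:
        raise ValueError("not a valid RAD-50 word")
    chars = []
    for _ in range(3):
        word, code = divmod(word, 40)
        chars.append(RAD50_CHARS[code])
    # The last character was extracted first, so reverse the list.
    return "".join(reversed(chars))


if __name__ == "__main__":
    packed = rad50_encode("PIP")
    print(packed, rad50_decode(packed))
```

With this scheme a six-character file name plus a three-character extension needs just three 16-bit words, which is why the limited character set was worth it on small machines.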

*PIP was a garbage can because it had a lot of functions. PIP stood for Peripheral Interchange Program and was tasked with things like directory listings, listing the contents of a text file and lots of other things. Those functions were controlled by a large set of switches. One good thing that PC/MS-DOS did was make those separate programs with separate names. Funny what over a decade of Moore’s Law meant for available memory.

Sorry about my ASD kicking in.

Don’t forget OS/8 which was the DOS for the PDP-8 which formed the foundation for all of these, also there was a DOS-11 which was the base for RT-11 and other DEC OSes and was part of the DEC test suite of programs for the PDP-11. You would boot up DOS-11 to get the assembler to build early UNIX or to copy your new proper OS from tape to disk if you didn’t have anything else to do so yet.

Remember ‘ed’? It’s a text editor that has so little memory that you need to manually ask the editor to page in a block of lines, modify them, and then write that block of lines. Then you do the same with the next block of lines.

I was so happy when I finally got hold of WordStar. Excellent program editor too (well, IMO). Still have the CP/M 2.2 Sysgen disk and password hanging around somewhere in the world. Original IBM format 8″, I think.

Well, ed later got a gui. It’s called vi

Are u thinking of Edwin?

PIP came mainly from RSX-11, I think, which Cutler had also been involved in. He also designed VAXELN, the real-time OS (RT-11 replacement for VAX), mainly for the rtVAX models.

No, it was ex that got a UI (by Bill Joy) and became vi.

ed was left by the wayside when ex came along.

Except, sed (stream mode ed) remained/remains useful.

The PDP-10 was actually a 36-bit machine, having two 18-bit “halfwords” per 36-bit word. I’m old enough to have actually programmed a PDP-10, and as I recall, it had a very nice symmetrical instruction set. The two 18-bit halfwords could each address all of the 256K words of memory that the machine could hold, so it was well suited for LISP programming, which uses a lot of pointers.
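To make the halfword layout concrete, here is a small Python sketch. It is not PDP-10 code, and the names are invented for illustration; it only shows how two 18-bit values share one 36-bit word, and why an 18-bit halfword is exactly enough to address 256K words. A LISP cell with two pointers fits in a single word this way.

```python
HALF_BITS = 18
HALF_MASK = (1 << HALF_BITS) - 1      # 0o777777, the largest 18-bit value

# An 18-bit address can select 2**18 distinct words, i.e. 256K.
ADDRESSABLE_WORDS = 1 << HALF_BITS


def pack_word(left, right):
    """Combine two 18-bit halfwords into one 36-bit word (hypothetical helper)."""
    if not (0 <= left <= HALF_MASK and 0 <= right <= HALF_MASK):
        raise ValueError("each halfword must fit in 18 bits")
    return (left << HALF_BITS) | right


def unpack_word(word):
    """Split a 36-bit word into its (left, right) halfwords."""
    return (word >> HALF_BITS) & HALF_MASK, word & HALF_MASK


if __name__ == "__main__":
    # Two pointers in one word, as in a LISP cons cell.
    cell = pack_word(0o123456, 0o654321)
    print(oct(cell), [oct(h) for h in unpack_word(cell)])
```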

Some of my memories of using old Intel but mostly Microsoft software tools in the MS-DOS and 16-bit Windows era, pre-880386 and above, are of all the memory management related problems. Some builds needed a lot of lower memory since they would only run in the lower 1 MB address space. TSRs had to be removed. It needed the “right” video card and sometimes motherboard to run. MS C V6 would “silently” disable some optimizations if there wasn’t enough memory. And on, and on, and…
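The 1 MB ceiling mentioned above comes from how the 8086 forms addresses in real mode: a 16-bit segment is shifted left by four bits and added to a 16-bit offset, giving a 20-bit physical address. The Python sketch below only illustrates that arithmetic; the function name is made up, and the wrap-around at 20 bits is the original 8086 behaviour (later CPUs can behave differently when the A20 line is enabled).

```python
ADDRESS_BITS = 20
ADDRESS_MASK = (1 << ADDRESS_BITS) - 1   # 0xFFFFF, the top of the 1 MB space


def linear_address(segment, offset):
    """Real-mode 8086 address: segment * 16 + offset, wrapped to 20 bits."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset are 16-bit values")
    return ((segment << 4) + offset) & ADDRESS_MASK


if __name__ == "__main__":
    # Many segment:offset pairs alias the same physical byte.
    print(hex(linear_address(0x1234, 0x5678)))
    print(hex(linear_address(0x1000, 0x79B8)))
    # One offset covers only 64 KB, so larger objects need segment changes.
    print(linear_address(0x2000, 0xFFFF) - linear_address(0x2000, 0x0000) + 1)
```

Because one offset spans only 65536 bytes, anything bigger had to be handled by juggling segments, which is where much of the era’s memory-model pain came from.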

Turbo Pascal was the development environment of choice back then, even Microsoft used it because their C compiler was so terrible.

I’m pretty sure MS never released any Turbo Pascal stuff. Microsoft released their first Pascal compiler in 1980, while Turbo Pascal came out in 1983. Nick Wirth’s Pascal language disallowed forward references, because it was designed for one-pass compilation. It used nested procedures like PL/M (for which I have near 0 experience!). It’s amazing how long it took manufacturers to figure out that what makes anything popular is low price with decent functionality.

The “first” MS C compiler was a licensed version of Lattice C (I think I still have some old 5″ floppies of that). Later MS was able to make their own version after hiring a lot of talent to get it done. My first MS C (version ?) in 1985 cost something like $395 back when that was a boatload of money (COLA of 3.1, so about $1200 now).

“It’s amazing how long it took manufacturers to figure out that what makes anything popular is low price with decent functionality.”

I think Ford learned that lesson with the Model T.

B^)

“It’s amazing how long it took manufacturers to figure out that what makes anything popular is low price with decent functionality.”

Huh? That sounds as if people were actually paying for their software. I was under the impression that popular things like the latest DOS, Norton Commander, XTree Gold, WordStar, MS Word, 1-2-3, dBase and Turbo Pascal were usually “borrowed” back in the 80s.. :)

Well, those were the days. I remember when I got pissed off with MS-DOS 3.2, went ahead and got an old book from a second-hand store which detailed the 8086 microprocessor and had a BIOS listing and interrupts as well. It was like a treasure to me.

Used TCC from Turbo C and wrote a program intermixing assembler directives, threw away the C libraries, wrote my own libraries and, using BIOS interrupts, wrote a small OS for doing memory management and disk operations, creating my own FAT design, and threw in a word processor, a graphics editor and a LOGO interpreter, all written in C and assembly.

The whole thing, compiled and linked and converted to bin format (anybody remember exe2bin?), came to 8k. Burnt it into EPROM and put it on the motherboard and had a working machine which I played on..

One of my best creations till date as I learnt huge loads in doing it.

After that did not write in C again as it used to look boring. And C++ is for lazy people, in my perception and I am 100% wrong as there are some great programmers around who code in OOP though for a long time my response used to be OOPS. :D

Those were beautiful days when we used to judge a programmer’s caliber by how good the program was functionally and how little memory it used.

30 years down the line, AM DISAPPOINTED!!

The IT industry has taken a million steps backward in my opinion. Today my laptop has 32GB RAM whereas my PC – XT on which I used to program used to have 128 MB of HARD Disk and 1 MB of RAM and I used to think myself rich.

The programs I see today, hell in those days, I would have thrown the program and the programmer out on grounds of inefficient programming and wastage of resources.

I miss those days when performance (overall) was the only criterion.

It was called Quick Pascal, and yes there was even a professional one. It even supports OS/2.

Microsoft was born a language company, although that C compiler was something they bought.

I have a package of “OS2 for Windows 3.1”.

(Unopened) Never got around to trying it out..

Worse than you might realize. Before Microsoft got into operating systems they were compiler and interpreter providers. Somewhere I have a copy of bascom (basic compiler) and fortran77 for CP/M

Wow! I still think Turbo Pascal 5.5 was the best development tool I ever used. For its day, nothing MS could provide came close. As for the CP/M discussion, Epson came out with an outstanding version of it called TP/M for their QX series of computers. That added paged memory to allow programs to utilize 4 blocks of 64k RAM. That was a joy to play with back then!

God I loved that environment, automated just about everything in my job using DOS and TP. All so new and fun, programming just feels like drudgery these days

8080

8088

80286

80386

80486

No 880386

Probably a typo, one 8 too many. Then there are peripheral chips and other oddballs like the 80385.

A thought towards BSD /”thousands of packages”/ for FreeBSD:

When you install it, you are asked whether to install the “sources”, which leads to the sources being extracted from an archive (src.txz) into the /usr/src folder. Those are the sources for your whole base system, including ls, cp, etc.

and for every command there are subfolders named “/usr/src/bin/ls” or “/usr/src/usr.bin/awk” (yes the sub-folder is named usr-dot-bin instead of adding another sub-folder)

Mostly you will find a makefile named “Makefile”, a “.1” troff file/manpage and the C sources/header files for the program in question, and the parent folders also have a makefile to make everything under the current directory.

The sources are also divided into different dirs by their licenses accordingly, as the FreeBSD base system strives to be BSD-Licensed but in fact borrows for example dtrace which is under CDDL and till some time ago gnu-tar

When you have found “/usr/src/sys”, that is the source for the kernel, and the release tools are to be found under “/usr/src/release”.

This is the base system, and the other programs you install are handled by the “pkg” tool / ports install/registration.

And if you ask yourself why I do prefer FreeBSD over Linux .. it is because of that structural approach, which I really like after having dug myself through LFS.

there have been so many changes in the past 40 years. i remember how euphoric i was when i could simply call malloc(x) with x > 65536, and not have to worry about far pointers or EMS paging or what-not. i could go on. so many little revolutions. but when i read this article, i took all those for granted and just one step stood out to me: source management!

i remember it well…i used to make my changes on the only copy of the source code i had. once i made a change, not only did i create something new, but i destroyed what was old! if i upgraded something about my build environment that required changes to my source code, the version that was built against the old environment is gone forever. and that build environment, it might have one-off hacks that can never be replicated again! all development took place only at the head, there was no propagating a fix back as an update to an older released version. even in big budget products, the occasion of the release of version 2.0 often meant the complete loss of version 1.0 forever. god forbid you have two developers working on the same module and they have to do a merge!

i remember when i was exposed to rcs, i thought “this is pretty neat”. and git is so modern! but imagining life before this tool is quite the head trip

That takes me back a bit. I don’t recall RCS, but I do VCS and ClearCase and Microsoft Visual Source Safe, which was awful compared to VCS (exclusive lock on checked out files anyone?). I remember when Subversion went self-hosting and broke their repository. I still prefer Subversion over Mercurial or Git because it supports directories as first-class revision elements.

Some decades ago building a minimalist Linux was as easy as making a DOS floppy.

Apart from the kernel (in the extreme case on its own disk, which would then ask for the 2nd one), only some ‘/dev’ entries and a static shell renamed to ‘init’ were needed. XIAFS could reserve the space for the kernel when formatting a medium, and with other FSes it was only a bit more juggling to get all that on 1 floppy.

Not surprisingly a website whicn is obviouly not in this country serves out abandoned software. Amongst its collection is what it calls the OEM kit for DOS3.3. And Lee, they glommed ideas from Digital Research who created CP/M-86. As someone else once said “it’s complcated.”

“obviously”, silly keyboard, still doesn’t like being walked over by cats.

What is the OEM kit for DOS3.3?

Just like today, OEM “kits” are what computer sellers (e.g. Dell, HP) use to customize for their hardware (and sometimes, software extras).

Yes, all true.. MS acts like a franchise company, selling access to the core, and allowing custom printing.

MS did have a brain fart when the Win95 Beta was released to us early adopters.

The boot floppy didn’t have a “Path Command” pointing to the CD.

After I installed 95 from the floppies, I figured out why the CD crashed the install.

Fun times..

Not too far from the Win11 requirements: I was obliged to buy a new system to support the Win 95 software.

Ancient software???? Come on now, I was in my first year of college in ’83 and I don’t think I am ancient :) …. Not yet anyway….

Above mentioned Turbo Pascal. That was a boon for me in college. I could write school projects with it at home on my DEC Rainbow running CPM/86 and then ‘upload’ to the VAX when done (at 300, then 1200 baud). No fighting for ‘terminals’ at the college. Plus I could, of course, create my own projects for fun.

Interesting article. Brings back a lot of good memories.

We’re ancient perhaps, when it comes to the fields of computing, not sure. 🤷♂️

1983 was years before my time. I had my dated but beloved 286 PC running MS-DOS 6.2 and Win 3.x when I was little. And a Sharp MZ series PC with datasette slightly before that..

Anyway, I’d like to think that 10 years in computing equals 100 years in human society. Because, that would explain a lot as to why old computing conventions start to crumble from time to time..

Anyway, you’re likely not old as a person if your mind is still fresh and open. 🙂

I have long forgotten the names of the variables, but buried in DOS 2 COMMAND.COM were both a hook for reading CP/M format 8″ floppies and that UNIX multi-user instance counter variable used to track how many users were using a program right now.

I don’t know the specifics, but none of the rest of command.com was capable of multi-user or multitasking.

You could replace command.com with your own shell program quite easily. It wasn’t actually required unless you wanted a DOS prompt.

I used to use 4DOS as the shell replacement.

Just for laughs, I once made IBM Basica the dos shell. Although using the shell and system command was a bit of a problem.

How much of Quick and Dirty DOS is in that source code release?

Does anyone remember BOOT.SYS? It used to display a menu out of the CONFIG.SYS file to let you boot up different configurations (e.g. memory managers like QEMM or 386MAX) without having to edit/swap the CONFIG.SYS file and reboot.

I reverse engineered the DOS bootstrap code using a hardware debugger and created BOOT.SYS, because we were tired of maintaining a number of boot floppies in a computer lab at an educational institution. This proved so useful that I started distributing it as shareware and I made a decent living with it for five years, until MS introduced its own bare-bones menu system. Ultimately, the world switched over to Windoze and the need for having multiple CONFIG.SYS variations disappeared.

I also hacked Turbo Pascal so that it would print out the source code and the result of the run on a single sheet of paper, for documenting student assignments. This made it much harder to cheat… :-)

At my school, I fixed each computer with a removable hard drive cage, and each student had their own hard drive.

Solved a lot of errors..

Without the internet you had to rely on magazine articles. I also had the 8086 Databook from Intel, which was awesome, and later in the PC’s life came a book called PC Intern, which was the icing on the cake for PC hackers and showed you how to call just about everything in the BIOS using C, Pascal and Basic.

I still have some of my ancient processor manuals, including the 1984 32000 series Databook from National Semiconductor, which I used when writing my own assembler for the 32081 math coprocessor. I needed that to write an APL math library for the 68000 for a client who was selling 68000 add-in boards for the PC. Why not just use the 68881 math coprocessor instead? Because that cost $500 or more while the 32081 was something like $25.