It’s normal for a computer in 2022 to come with a fully-featured sound card containing a complete synthesizer as well as high-quality PCM sound recording and playback. It’s called a sound card after the way the hardware first appeared in the world of PCs, but it’s now considered so essential that it’s built into most mainboards. There was a time when computers boasted considerably less impressive sound hardware, and among the chorus of SIDs and AY chips of the era, perhaps the least well-featured was the original Sinclair ZX Spectrum. Its one-bit sound, a single line on an I/O port, is the subject of a thorough investigation from [Forgotten Computer]. It’s a long video which we’ve placed below the break, but for those with an interest in 8-bit music it should make for a fascinating watch.

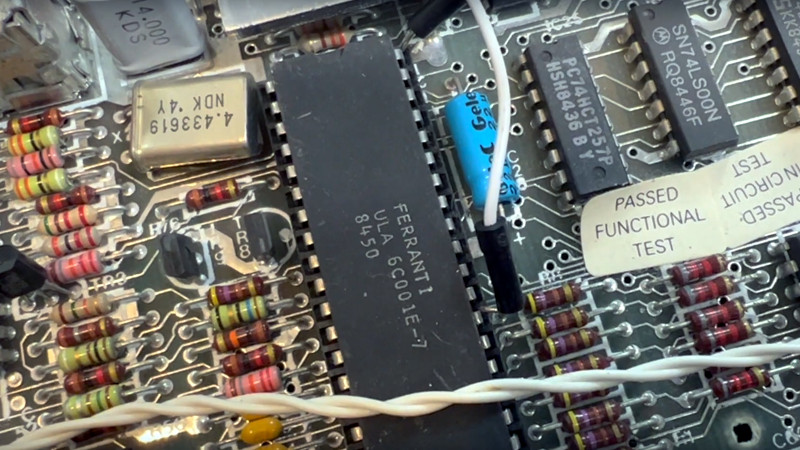

For Sir Clive Sinclair the 1-bit audio must have been welcome, as it removed the need for an expensive sound chip and kept the Spectrum at its low price point, but on the face of it there was little more it could do than create simple beeps using Sinclair BASIC’s built-in BEEP command. The video takes an in-depth look at how interleaving and PWM could be used to create much more complex sounds, such as the illusion of multiple voices and even sampled sounds. In particular, his technique of comparing the audio output with the corresponding pin on the Sinclair ULA shows the effect of the machine’s simple low-pass filter, though the music often pushed so close to the limits of the interface that aliasing is very obvious.
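To make the interleaving idea concrete, here is a minimal Python simulation, not Spectrum code. It assumes an idealized output bit and an arbitrary tick rate, and the function names (`square_bit`, `interleave_voices`, `box_average`) are hypothetical. It shows one simple scheme, giving two square-wave voices alternate ticks on a single bit; the developers in the video may well have used other variants.

```python
def square_bit(freq_hz, t):
    """Ideal square wave reduced to one bit: 1 in the first half of each cycle, else 0."""
    return 1 if (t * freq_hz) % 1.0 < 0.5 else 0


def interleave_voices(freqs, tick_rate, n_ticks):
    """Time-division interleaving: tick i carries voice i % len(freqs).

    Each voice only gets every len(freqs)-th tick, but the output is still a
    single bit per tick, so it can drive one port line."""
    out = []
    for i in range(n_ticks):
        t = i / tick_rate
        out.append(square_bit(freqs[i % len(freqs)], t))
    return out


def box_average(bits, width):
    """Average consecutive blocks of `width` ticks.

    A crude stand-in for the analog low-pass smoothing after the output pin:
    two interleaved voices give levels of 0.0, 0.5 or 1.0 instead of just 0 or 1."""
    return [sum(bits[i:i + width]) / width
            for i in range(0, len(bits) - width + 1, width)]


# Usage: voice 0 is a 1 Hz square, voice 1 is held high (0 Hz), 4 ticks per second.
bits = interleave_voices([1.0, 0.0], tick_rate=4, n_ticks=4)
levels = box_average(bits, width=2)
```

Averaging pairs of ticks plays the role of the analog filter: where both voices are high the output sits at 1.0, and where only one is high it sits at 0.5, which is how a single bit can suggest two voices at once.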

As he demonstrates the ingenious techniques that game and demo developers used to wring sound from such limited hardware, sometimes enough to compete with far more sophisticated machines and often while their code was also driving whatever was on the screen, it’s difficult not to come away with immense respect for their skills. If you’ve ever experimented with computer audio, you should try hardware this simple for yourself.

1-bit forum: http://randomflux.info/1bit/

Utz and Shiru are absolute gurus!!

This man knows what he’s talking about. It’s so good to hear from someone who has completely different abilities. I’m not good with music.

I wrote sound programs (on the Z80) in an era a bit before this, but it was much the same: sending pseudo-audio off to a 1-bit output, well, the microphone output. For us, though, it was the very early days of having access to an (unfortunately) tightly constrained GPIO, and it had lots of analogue filtering due to its purpose as a cassette interface.

Systems with a basic speaker had much the same challenges: if you wanted to produce a melody interspersed with other elements of gameplay, it was difficult.

You had to write a software scheduler to switch between the sub-elements of the game and the sound code. Obviously the sound routines had priority much of the time.
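Here is a rough sketch of such a scheduler, simulated in Python rather than written in Z80 assembly. Everything in it is an assumption for illustration: time is counted in abstract ticks, the game is pre-split into fixed-cost chunks, and `run_scheduler` is a hypothetical name. The sound deadline always wins, and a game chunk only runs if it can finish before the next speaker toggle is due.

```python
from collections import deque


def run_scheduler(half_period, game_chunks, total_ticks):
    """Simulate a cooperative sound/game scheduler on one CPU with no interrupts.

    half_period: ticks between speaker toggles (the sound deadline always wins)
    game_chunks: costs in ticks of small, non-interruptible pieces of game logic;
                 each should be well under half_period or it may never get a slot
    Returns (toggle_times, number_of_chunks_finished)."""
    pending = deque(game_chunks)
    now = 0
    next_toggle = half_period
    toggles = []
    finished = 0
    while now < total_ticks:
        if now >= next_toggle:
            toggles.append(now)          # flip the speaker bit, right on time
            next_toggle += half_period
        elif pending and now + pending[0] <= next_toggle:
            now += pending.popleft()     # a game chunk that fits before the deadline
            finished += 1
            continue
        now += 1                         # the toggle or an idle wait costs one tick
    return toggles, finished


toggles, done = run_scheduler(half_period=100, game_chunks=[30] * 10, total_ticks=1000)
```

With a 100-tick half period and ten 30-tick chunks, the toggles land exactly on ticks 100, 200, and so on up to 900, while all ten chunks still complete, three per gap.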

So it’s so good to hear from someone who appreciates and understands how these old sounds were made. With 1s and 0s, lol. Oh, and I’m getting older now; the beam is catching up.

Pin Pulse Modulation? Is that aka Pulse Frequency Modulation, or Pulse Density Modulation?

Do modern on-board sound chips actually include a synthesizer? I just assumed that all sound nowadays was produced by playing audio files.

With a modern sound card like the one on the motherboard of a home PC today, the sound card just takes a value many times a second saying how far in or out the speaker cone should be at that moment. This is about as general as you can possibly get: with a sufficiently fast sample rate and a sufficiently precise set of speaker positions, you can make basically whatever sounds you want. You’re just telling the speaker exactly what to do “by hand.” This is still a synthesizer in the sense that it generates sound through electrical circuitry, but not one with any particular character or set of sounds you’d expect to hear from it. A raw (uncompressed PCM) audio file like a typical WAV is just a long list of speaker cone positions, each for a tiny slice of time like 1/48,000th of a second; for stereo audio there are two positions per time slice, one for each channel. The bit depth gives the number of available speaker positions, so a 24-bit WAV allows for 2^24, or about 16.8 million, different positions, which sounds effectively analog to the human ear.[*]
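The “long list of speaker positions” idea can be sketched with Python’s standard `wave` module. This is a minimal example under assumed parameters: it writes 16-bit rather than 24-bit samples (simpler to pack with `struct`), uses a 48 kHz stereo 440 Hz tone, and keeps the file in memory; `sine_pcm_wav` is a hypothetical helper name.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 48_000  # speaker positions per second, per channel
BIT_DEPTH = 16        # signed 16-bit samples: 2**16 = 65,536 possible positions


def sine_pcm_wav(freq_hz=440.0, seconds=0.01, amplitude=0.5):
    """Return the bytes of a stereo 16-bit PCM WAV file holding a sine tone."""
    max_val = 2 ** (BIT_DEPTH - 1) - 1          # 32767, the most positive position
    n = round(SAMPLE_RATE * seconds)
    frames = bytearray()
    for i in range(n):
        pos = amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
        sample = round(pos * max_val)
        # One frame = one sample per channel (left, right), little-endian.
        frames += struct.pack("<hh", sample, sample)
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(2)
        w.setsampwidth(BIT_DEPTH // 8)
        w.setframerate(SAMPLE_RATE)
        w.writeframes(bytes(frames))
    return buf.getvalue()


data = sine_pcm_wav()
```

Each frame holds one 16-bit number per channel, so 10 ms at 48 kHz is 480 frames, or 1,920 bytes of sample data after the header.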

The technical term for this synthesis technique is pulse code modulation, or PCM, because each speaker position comes out as a very short pulse with a certain sign and amplitude. It can be demanding for the CPU: without hardware that buffers samples and plays them out on its own (as modern sound cards do, typically via DMA), the CPU has to send a new pulse or two to the speakers tens of thousands of times a second with very precise timing, and on top of that do whatever calculations are needed to figure out what the next pulse(s) should be. For a long time PCM was the “holy grail” of PC audio, and many people found ways to mock it up to varying degrees of fidelity in the ’80s and early ’90s using square wave generators and such; this article and video show one corner of that pursuit. Demoscene hackers still enjoy finding ways to approximate PCM on old hardware so that they can play samples.
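One way to fake PCM on a square-wave or 1-bit output is pulse width modulation: each sample becomes a fixed-length frame in which the bit stays high for a time proportional to the sample value. This Python sketch assumes unsigned 8-bit samples and a default frame of 32 ticks; the function names are hypothetical, and real 1-bit sample players were hand-timed assembly with their own trade-offs.

```python
def pcm_to_pwm(samples, ticks_per_sample=32):
    """Turn unsigned 8-bit PCM samples (0-255) into a 1-bit pulse train.

    Each sample gets a fixed frame of `ticks_per_sample` ticks, and the bit is
    held high for a share of the frame proportional to the sample value."""
    bits = []
    for s in samples:
        if not 0 <= s <= 255:
            raise ValueError("expected unsigned 8-bit samples")
        high = round(s * ticks_per_sample / 255)
        bits.extend([1] * high + [0] * (ticks_per_sample - high))
    return bits


def frame_levels(bits, ticks_per_sample=32):
    """Average each frame back to a 0-255 level, like an ideal low-pass filter."""
    return [round(255 * sum(bits[i:i + ticks_per_sample]) / ticks_per_sample)
            for i in range(0, len(bits), ticks_per_sample)]


bits = pcm_to_pwm([0, 128, 255], ticks_per_sample=4)
```

The trade-off lives in `ticks_per_sample`: more ticks per frame give finer amplitude steps, but the bit must then be toggled that much faster to keep the same sample rate.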

As for sound nowadays being produced just by playing audio files, you can also generate sound algorithmically, either in realtime or rendered to an audio file for playback later. When you play a game in an emulator, listen to chiptune music in native formats with specialized players, or make music with synthesizer plugins or by programming, the CPU is doing the calculations to generate each pulse (sample) and then sending those samples to the speakers. Modern CPUs are amazingly fast, so it’s no trouble for them to reproduce the behavior of old sound hardware entirely in software and play the results via PCM at the same time, or even to run synthesis algorithms in realtime, such as physical modeling, that would have been totally impractical on older computers.
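As an illustration of reproducing old sound hardware in software, here is a minimal Python emulation of a generic divide-by-N square-wave tone generator, loosely in the spirit of chip tone channels. The class name, the clock value, and the rule “flip the output every `period` clocks” are assumptions for this sketch, not a model of any specific chip. The `render` method turns the emulated chip’s state into PCM samples at the output sample rate.

```python
class SquareToneGenerator:
    """Hypothetical divide-by-N square-wave generator emulated in software.

    The output flips every `period` input clocks, so the tone frequency is
    clock_hz / (2 * period)."""

    def __init__(self, clock_hz, period):
        if period < 1:
            raise ValueError("period must be >= 1")
        self.clock_hz = clock_hz
        self.period = period
        self.counter = 0
        self.output = 1

    def clock(self):
        """Advance the emulated chip by one input clock."""
        self.counter += 1
        if self.counter >= self.period:
            self.counter = 0
            self.output ^= 1

    def render(self, sample_rate, n_samples, amplitude=0.5):
        """Run the chip and return float PCM samples of +/- amplitude.

        Clocks per output sample are accumulated fractionally, so the tone
        stays in tune even when clock_hz / sample_rate is not an integer."""
        samples = []
        step = self.clock_hz / sample_rate
        acc = 0.0
        for _ in range(n_samples):
            acc += step
            while acc >= 1.0:
                self.clock()
                acc -= 1.0
            samples.append(amplitude if self.output else -amplitude)
        return samples


gen = SquareToneGenerator(clock_hz=8_000, period=4)  # 8000 / (2 * 4) = 1000 Hz
pcm = gen.render(sample_rate=8_000, n_samples=16)
```

This simply point-samples the emulated output, which aliases; real emulators usually average or band-limit the chip output between samples to reduce that.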

[*] The Spectrum’s “1-bit” sound interface actually describes three different speaker positions (out, in, and center), because the single on/off bit is toggled at a configurable frequency; on the Spectrum that frequency comes from the CPU’s own timing loops (BEEP works this way) rather than from a hardware tone generator. That timing is not 1-bit, so I think the term “1-bit” is somewhat misleading, since the toggle rate is essential for techniques like pulse width modulation. In any case, it doesn’t refer to the sound’s bit depth like “24-bit” in the context of a WAV file or a modern sound card, /or/ to what people mean when they say something like “8-bit music,” which generally just refers to the data word size of the CPUs in the computers the music was made on (like the C64) and says little about the music itself.

You seem to know a lot, Zoë Sparks.

By chance, would you know if the AY-3-8912 produces “analog sound”?

What I call “analog sound” is when we don’t try to emulate a waveform with lots of pulses.

For example, to me a PCM sine wave is not “analog”, because the sine wave we hear is actually built from many discrete voltage levels (e.g. 44,100 times per second it chooses one of 65,536 voltages), and then filtered on purpose so that it looks less like a staircase. So it’s an emulation of a sine wave.

PWM isn’t what I call “analog” either: in a sine wave made by PWM, each point of the sine wave is the average value of an “ON/OFF” cycle with a varying on-time (charging a capacitor, for example: if the cycle is 50% OFF and 50% ON, the capacitor charges to half the maximum voltage).
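The capacitor-averaging effect described here can be checked numerically. This Python sketch assumes an ideal 5 V PWM source feeding a simple RC low-pass (10 kΩ and 1 µF, so RC = 10 ms) at a hypothetical 10 kHz PWM rate; `rc_filter_pwm` is an illustrative name, and real output stages are messier.

```python
import math


def rc_filter_pwm(duty, v_high=5.0, pwm_hz=10_000, r_ohms=10_000,
                  c_farads=1e-6, cycles=1000, steps_per_cycle=100):
    """Simulate a PWM signal charging a capacitor through a resistor.

    At each small time step dt, the capacitor voltage moves toward the current
    input voltage by the RC step-response factor 1 - exp(-dt / RC).
    Returns the capacitor voltage at the end of the last PWM cycle."""
    dt = 1.0 / (pwm_hz * steps_per_cycle)
    k = 1.0 - math.exp(-dt / (r_ohms * c_farads))
    high_steps = round(duty * steps_per_cycle)   # on-time within each cycle
    v_cap = 0.0
    for _ in range(cycles):
        for step in range(steps_per_cycle):
            v_in = v_high if step < high_steps else 0.0
            v_cap += k * (v_in - v_cap)
    return v_cap
```

With a 50% duty cycle the capacitor settles near 2.5 V, half the maximum, with a small ripple because the filter’s time constant is much longer than one PWM cycle.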

Is there any computer that produces “natural” square waves, triangle waves, or noise?

I heard that’s the case for the NES and the Game Boy, but I don’t know whether it’s the case for AY-3-8910-based devices. I read the datasheet, but I have trouble understanding how it works.

Depends whom you ask. I consider Pin Pulse Modulation to be equivalent to PFM.
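For comparison with PWM (fixed period, variable pulse width), pulse-frequency modulation keeps the pulse width fixed and varies how often pulses occur. This Python sketch assumes a phase-accumulator pulse generator and a `level` between 0 and 1; `pfm` is a hypothetical name, and it illustrates generic PFM, not any particular Spectrum routine.

```python
def pfm(level, n_ticks, pulse_width=1):
    """Pulse-frequency modulation on a 1-bit line.

    Pulses have a fixed width; `level` (0.0-1.0) sets how often they occur.
    A phase accumulator starts a pulse each time it wraps past 1.0, so the
    pulse rate, and therefore the average output, is proportional to `level`."""
    if not 0.0 <= level <= 1.0:
        raise ValueError("level must be between 0 and 1")
    rate = level / pulse_width   # pulses per tick, so the duty is about `level`
    phase = 0.0
    remaining = 0                # ticks left in the current pulse
    bits = []
    for _ in range(n_ticks):
        phase += rate
        if phase >= 1.0 and remaining == 0:
            phase -= 1.0
            remaining = pulse_width
        bits.append(1 if remaining > 0 else 0)
        if remaining:
            remaining -= 1
    return bits


bits = pfm(0.25, 1000)
```

The density of ones, and therefore the filtered output level, tracks `level`; at 0.25 a one-tick pulse appears every fourth tick.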

Modern sound chips do include a synthesizer in a sense, but not like those you might find in old computers from the ’80s or whatnot. Instead, tens of thousands of times a second, they take a number describing how far in or out the speaker cone should be at that moment and send an electrical signal to the speaker that puts the cone in that position. (For stereo they take two numbers, one for each ear.) This is still sound synthesis in the sense that it uses electrical circuitry to produce sound, but it’s about as general a synthesis method as you could ask for: if you take a speaker position frequently enough (the sample rate) and allow a sufficiently precise statement of that position (the bit depth), you can reproduce analog audio indistinguishably.

An uncompressed sound file like a WAV is just a long list of speaker positions (samples), with each sample occupying a tiny slice of time (like 1/48,000th of a second for a file with a 48 kHz sample rate). A common bit depth like 24-bit allows for 2^24, or about 16.8 million, different positions, fine enough to exceed the limits of human perception.[*]
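The bit-depth arithmetic can be illustrated with a small quantizer. This Python sketch assumes samples normalized to [-1.0, 1.0] and evenly spaced levels, which is a simplification: real integer formats are two’s complement, with one more negative value than positive. The helper names are hypothetical.

```python
def num_levels(bits):
    """Number of distinct speaker positions a given bit depth can express."""
    return 2 ** bits


def quantize(x, bits):
    """Snap x in [-1.0, 1.0] to the nearest of 2**bits evenly spaced levels."""
    levels = num_levels(bits)
    step = 2.0 / (levels - 1)
    index = round((x + 1.0) / step)
    index = max(0, min(levels - 1, index))   # clip out-of-range input
    return -1.0 + index * step


def max_quantization_error(bits):
    """Worst-case rounding error: half of one step between adjacent levels."""
    return (2.0 / (num_levels(bits) - 1)) / 2
```

With 1 bit, 0.3 snaps all the way to 1.0; with 24 bits the worst-case error is about 6 × 10^-8 on this [-1, 1] scale.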

The technical term for this synthesis technique is pulse code modulation, or PCM, because each speaker position corresponds to a short audio pulse with a certain sign and amplitude. It can be a lot of work for the CPU if it has to feed the speakers directly: it needs to send a new pulse or two at a very high frequency while also calculating what the next pulses should be. (Modern sound cards ease this by playing samples out of a buffer on their own.) PCM was the “holy grail” of PC audio back in the ’80s and early ’90s, and a lot of people did their best to mock it up using square wave generators and the like; this article and video show one corner of that pursuit. Demoscene hackers still have fun trying to approximate PCM on old hardware in order to play samples.

As for all sound nowadays being produced by playing audio files, you can also generate sound algorithmically and send it right to the speakers in realtime. That’s usually what’s happening when you play an old game in an emulator, for instance: the emulator is imitating the behavior of the old sound hardware in its code and sending the resulting samples to the speakers as they come. You can also write code to procedurally generate sound and render it to an audio file for playback later if you want. From the computer’s perspective, it’s all the same; it just needs samples at the sample rate of the sound card, and it doesn’t care if they came from an audio file or a realtime program or over the network or anything.
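To illustrate that the computer doesn’t care where samples come from, here is a Python sketch comparing a realtime-style source, which hands over a buffer of samples each time the sound card asks for more, with an offline render of the same tone. `make_tone_source` and `render_offline` are hypothetical names; real audio APIs use callbacks or blocking writes with their own buffer formats.

```python
import math


def make_tone_source(freq_hz, sample_rate):
    """Hypothetical realtime source: returns a function that produces the next
    n samples each time it is called, remembering its position between calls."""
    position = 0

    def fill(n):
        nonlocal position
        start = position
        position += n
        return [math.sin(2 * math.pi * freq_hz * (start + i) / sample_rate)
                for i in range(n)]

    return fill


def render_offline(freq_hz, sample_rate, total):
    """Render the same tone in one go, as if writing it to a file for later playback."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(total)]


source = make_tone_source(440, 48_000)
streamed = source(256) + source(256) + source(512)
```

Because the source keeps its position between calls, buffers of 256, 256, and 512 samples join up into exactly the same 1,024 samples as the one-shot render.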

[*] The Spectrum’s “1-bit” audio actually describes three different speaker positions (in, out, and center), because the single on/off bit is toggled at a configurable frequency, which on the Spectrum is set by the CPU’s own timing loops rather than by a hardware tone generator. That timing is not 1-bit, so I think the term “1-bit” is somewhat misleading, as the toggle rate is essential for techniques like pulse width modulation. In any case, it’s not a bit depth measurement like “24-bit” in the case of a WAV file or a sound card, nor is it the same as when people say “8-bit music,” which just refers to the data word size of the CPU in the computer the music is played on (like the C64 or whatever) and doesn’t say much about the music itself.