Vizy is a Linux-based “AI camera” built on the Raspberry Pi 4 that uses machine learning and machine vision to pull off some neat tricks, with a design centered on hackability. I found it ridiculously simple to get up and running, and it was just as easy to make changes of my own and start getting ideas.

I was running pre-installed examples written in Python within minutes, and editing that very same code in about 30 seconds more. Even better, I did it all without installing a development environment, or even leaving my web browser, for that matter. I have to say, it made for a very hacker-friendly experience.

Vizy comes from the folks at Charmed Labs; this isn’t their first stab at smart cameras, and it shows. They also created the Pixy and Pixy 2 cameras, of which I happen to own several. I have always devoured anything that makes machine vision more accessible and easier to integrate into projects, so when Charmed Labs kindly offered to send me one of their newest devices, I was eager to see what was new.

I found Vizy to be a highly polished platform with a number of truly useful hardware and software features, and a focus on accessibility and ease of use that I really hope to see more of in future embedded products. Let’s take a closer look.

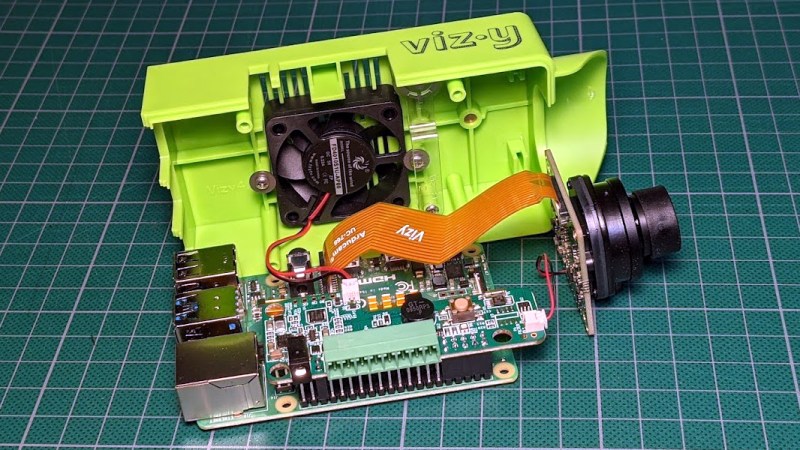

Looking Inside

Vizy is based on the Raspberry Pi 4, which sets it somewhat apart from most other embedded machine vision platforms. As on many of those platforms, all of Vizy’s code and vision processing runs locally. However, running on a Raspberry Pi 4 also means having access to a familiar Linux environment, which brings a few benefits we’ll explore in a moment.

Inside the case is tucked a Raspberry Pi 4, a fan, the lens assembly and camera (which uses the same Sony IMX477 sensor as the Raspberry Pi High Quality camera), and a small power and I/O management board attached to the top of the Pi’s 40-pin GPIO header. This board handles powering on and off, controls the switchable IR filter, accepts 12 V DC input, provides feedback with a beeper and RGB LED, and has an I/O header with screw terminals for easy interfacing to other devices.

Vizy can almost be thought of as a camera-shaped enclosure for a Raspberry Pi, since it provides full access to all of the Raspberry Pi 4’s ports, which all work as one would expect. One can plug in a monitor and keyboard and see a Linux desktop environment, and adding a feature like cellular wireless connectivity is as simple as plugging in and configuring a USB cellular modem. Interfacing to other systems or hardware — an expected task for a smart camera — becomes easier as a result of being able to use familiar interfaces and methods.

Hacker-Friendly Features

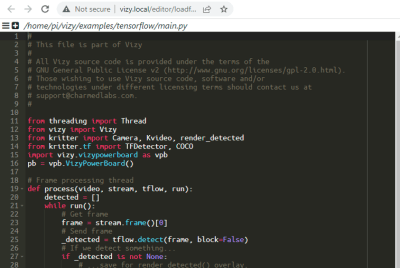

One of the things I liked most about exploring Vizy was how quickly I started modifying sample code without even having to leave my web browser, thanks to the built-in web terminal interfaces. Examples and applications are all written in Python, and while it’s certainly possible to use whatever method one wishes to edit Python code and push changes to the device, it’s also trivially simple to launch an editor in a new browser tab.

Here are some of the more interesting features I found in Vizy, each of them having something useful to offer. Their utility is enhanced by excellent documentation.

Hardware Features

Software-controlled, switchable IR filter that is independent of the lens itself. An IR filter is built into most lenses because blocking infrared light gives more accurate colors in ordinary daylight photos. However, there are times when going without an IR filter is desirable (a camera tends to see better at night without one, for example). Vizy allows enabling or disabling the IR filter with a simple software command.
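Because the filter is switched in software, an application can decide for itself when to drop it. The sketch below is one hypothetical way to automate that: it watches average frame brightness and uses two thresholds (hysteresis) so the filter doesn’t flap back and forth at dusk. The class name, the 0 to 255 brightness scale, and the threshold values are all assumptions for illustration, not part of Vizy’s API; the actual filter command would come from Vizy’s documentation.

```python
class IrFilterController:
    """Day/night IR-filter decision with hysteresis (hypothetical sketch).

    `brightness` is assumed to be the mean pixel value of a frame (0-255).
    The default thresholds are illustrative guesses, not values from Vizy.
    This class only decides the state; it does not touch any hardware.
    """

    def __init__(self, night_below=40.0, day_above=70.0, filter_on=True):
        if night_below >= day_above:
            raise ValueError("night_below must be less than day_above")
        self.night_below = night_below
        self.day_above = day_above
        self.filter_on = filter_on  # True = IR filter in place (daytime mode)

    def update(self, brightness):
        """Return the desired filter state for one brightness reading."""
        if self.filter_on and brightness < self.night_below:
            # Dark: remove the filter so infrared light reaches the sensor.
            self.filter_on = False
        elif not self.filter_on and brightness > self.day_above:
            # Bright again: restore the filter for accurate daytime color.
            self.filter_on = True
        # Readings between the two thresholds leave the state unchanged.
        return self.filter_on
```

In a capture loop, one would feed each frame’s mean brightness to `update()` and pass the result to whatever call Vizy actually provides for the filter. The gap between the thresholds matters: removing the filter lets more light reach the sensor, so the image brightens, and a single threshold could switch the filter straight back.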

Lens mount is both M12 and C/CS compatible. Most cameras accept one type of lens or the other, but Vizy allows using either (though it is recommended to use lenses without IR filters, because Vizy provides its own.)

I/O plug with screw terminals provides a way for the camera to directly interface to other hardware and devices. The pins support robust digital input and output, including serial communication, and software-switchable high-current 5 V and 12 V outputs are available to control external devices (more details on the pinout are here).

The usual camera fittings are present, such as a tripod mount and a mounting shoe for camera accessories, and an optional outdoor enclosure is available.

All the usual Raspberry Pi interfaces are exposed which means that Vizy doesn’t get in the way of anything a Raspberry Pi would normally be able to do. It’s even possible to plug in a keyboard and monitor (or connect via VNC, for that matter) and work on Vizy from a normal Linux desktop environment.

Software Features

Simple setup. It takes virtually no time at all to get up and running, or to configure the device to connect to a local network. Every part of Vizy’s functionality is accessible via a web browser.

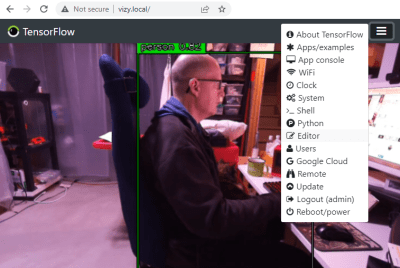

Built-in applications and examples are easy to modify. Two applications and a number of examples come pre-installed and ready to run: Birdfeeder automatically detects and identifies different species of birds, and MotionScope detects moving objects, measures the acceleration and velocity of each, and presents the data as interactive graphs. Examples include things like TensorFlow object detection, which runs locally and provides a simple framework for projects.
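MotionScope’s own implementation isn’t described here, but the basic idea of getting velocity and acceleration from a tracked object can be sketched with finite differences: difference successive positions over time to get velocity, then difference the velocities to get acceleration. This minimal illustration assumes each track is a list of `(t, x, y)` samples in seconds and pixels, so results come out in pixels per second and pixels per second squared unless positions are calibrated to real units; `estimate_motion` is a hypothetical name.

```python
from typing import List, Tuple

Sample = Tuple[float, float, float]    # (time in seconds, x, y) of one tracked object
Estimate = Tuple[float, float, float]  # (time in seconds, x component, y component)


def _differentiate(series: List[Estimate]) -> List[Estimate]:
    """Finite-difference derivative of a timestamped 2-D series.

    Each estimate is placed at the midpoint of the interval it spans,
    which keeps it centered in time.
    """
    out = []
    for (t0, x0, y0), (t1, x1, y1) in zip(series, series[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        out.append(((t0 + t1) / 2, (x1 - x0) / dt, (y1 - y0) / dt))
    return out


def estimate_motion(samples: List[Sample]):
    """Return (velocities, accelerations) for one object's track."""
    velocities = _differentiate(samples)        # first difference of position
    accelerations = _differentiate(velocities)  # first difference of velocity
    return velocities, accelerations
```

For a track following x = 2t, y = t², `estimate_motion([(0, 0, 0), (1, 2, 1), (2, 4, 4), (3, 6, 9)])` gives a constant horizontal velocity of 2 and a constant vertical acceleration of 2, as expected. Real detections are noisy and differentiation amplifies noise, so a practical tool would likely smooth positions over several frames first.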

Development can be done entirely in the browser, and any example or application can be launched in a Python editor in a new browser tab with a couple of clicks, with no need for a separate development environment (although Vizy also permits SMB/CIFS-based file sharing on the local network.)

Remote web sharing, for access from outside one’s network, is a handy feature that creates a custom URL through which one may remotely access the device. A generated URL is valid for only an hour, but its expiry won’t terminate remote sessions that are already established; the URL simply stops being valid. All of the usual features are accessible through web sharing, including web-based terminal windows and file editing, and the system handles simultaneous access by multiple users gracefully.
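The expiry rule described above (the link stops working, but sessions already opened through it carry on) can be modeled in a few lines. This is a hypothetical sketch of that rule only, not Vizy’s implementation; `ShareLink`, its methods, and the timestamps-in-seconds convention are assumptions.

```python
from dataclasses import dataclass, field
from typing import Set

LINK_LIFETIME_S = 60 * 60  # a shared URL admits new visitors for one hour


@dataclass
class ShareLink:
    """Hypothetical model of a time-limited share URL (not Vizy's code)."""

    created_at: float  # e.g. time.time() when the URL was generated
    sessions: Set[str] = field(default_factory=set)

    def is_valid(self, now: float) -> bool:
        """The URL itself is usable only within the lifetime window."""
        return now - self.created_at < LINK_LIFETIME_S

    def join(self, session_id: str, now: float) -> bool:
        """Admit a new session only while the URL is still valid."""
        if not self.is_valid(now):
            return False
        self.sessions.add(session_id)
        return True

    def is_active(self, session_id: str) -> bool:
        """Expiry never evicts a session that joined in time."""
        return session_id in self.sessions
```

Keeping the validity check in `join()` only, and never consulting it in `is_active()`, is what gives the “link expires, sessions survive” behavior.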

Powering the Camera

Vizy is nominally powered by the included 12 V wall adapter, but there are several other options for powering the device, which gives the typical hacker some flexibility. For example, it’s possible to power the device by applying 5 V to the USB-C connector, although doing so means the 12 V output on the I/O connector won’t be functional. Speaking of which, that 12 V output can also function as an input, allowing one to power the camera from an external 12 V source applied to the appropriate screw terminals. Power over Ethernet (PoE) is also an option.

Power consumption reflects the device’s Raspberry Pi 4 internals: around 3 W to 5 W, depending on what it is doing. I measured between 500 mA and 600 mA at 5 V when idle, jumping to around 1 A while actively streaming the results of TensorFlow object detection in the camera view.
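The watt figures follow from multiplying the measured currents by the 5 V supply voltage, using $P = VI$:

$$
\begin{aligned}
P_{\text{idle}} &= 5\,\text{V} \times (0.5 \text{ to } 0.6)\,\text{A} = 2.5 \text{ to } 3.0\,\text{W} \\
P_{\text{streaming}} &\approx 5\,\text{V} \times 1\,\text{A} = 5\,\text{W}
\end{aligned}
$$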

In-Browser… Everything

It’s one thing to be able to view live video or change hardware parameters from within a browser, but what’s even better is being able to edit Python code directly from a browser tab, complete with application console output. It’s a slick system that really makes modifying or writing code for the camera much more accessible. Need to create new files, or even open a terminal window to the Pi itself? That can be launched in a new tab, as well.

Of course, one can use whatever method one wishes to develop on the device. File sharing, SSH, and remote desktop (via VNC) are all options, as is simply plugging in a keyboard and monitor.

Loving This Direction

I was up and running in no time with Vizy, and the default application is a bird feeder watcher that detects birds, identifies their species, and uploads their pictures to a Google photo album. It’s capable of more than that, however. Want an idea of what goes into developing your own application? Here’s a tutorial on rolling your own pet companion, complete with treat dispenser.

Vizy comes with a number of useful examples that are ready to modify, and development doesn’t need anything more than a web browser. This helps make it more accessible while at the same time offering the average hacker a running start at implementing things like object detection in a project. In fact, thanks to the pre-installed TensorFlow examples, Vizy is only a couple of lines of code away from being a functional Cat Detector like this one.
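As a rough idea of what those couple of lines might look like: if the detector returns a list of labeled, scored detections, turning it into a cat detector is mostly a filter. The dict layout with `label` and `score` keys and the 0.5 default threshold are assumptions; the TensorFlow example on Vizy may structure its results differently. It also assumes the model’s label set includes “cat”, as models trained on the COCO dataset do.

```python
from typing import Dict, List


def find_cats(detections: List[Dict], min_score: float = 0.5) -> List[Dict]:
    """Keep only confident 'cat' detections.

    Each detection is assumed (hypothetically) to be a dict with a 'label'
    string and a 'score' in [0, 1]; adapt the keys to whatever the
    object-detection example actually returns.
    """
    return [d for d in detections
            if d["label"].lower() == "cat" and d["score"] >= min_score]
```

A non-empty result could then trigger whatever the project needs, such as saving the frame or sounding Vizy’s beeper.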

Vizy has a level of polish and set of features that I really hope to see more of in future products like this. Does a device like this give you any ideas for a new project, or perhaps breathe life into an old one? We definitely want to hear about it, so let us know in the comments.

This inspires me to pick up where I left off with my CV project.

It seems like computer vision + AI might be at a place where it could watch my shop with me puttering around in it, and send an alert if I am using a tool and fall down, or scream and grab my hand, or do something else that doesn’t match normal patterns. Perhaps it could even snip a clip asking if I’m OK (yes = train toward fewer alerts, no = offer to call for help), and send it to a family member or buddy if I don’t respond. There may also be a place for watching for unfamiliar people in the shop and monitoring them more closely, to make sure kids aren’t engaging in risky behavior while dad’s out of town or something.

Nobody wants to just hang out while I use tools with associated risk, and nobody wants to just stream my work either. Enough of us do risky work alone on a regular basis that there might even be a place for a co-op or collaborative of people who are willing to keep an eye out for each other.

I like where the Vizy project is going, and it might totally be worth investing in the product to get to the part where I can work on the interesting CV bits of recording snippets of non-standard behavior and doing a check-in before asking family to review to make sure I don’t need help.

Obviously tuning it to not pester me while I’m working is important, and not rat me out to my family if I’m really getting into the music, but there are some professional outfits that run factory floor monitors. So I think it is doable… I think…right?

Great idea! And it could eventually expand to all sorts of related cases. It might be challenging to generate the true positives (fake injuries?) for the training set, and it will take LOTS of data to build a pure anomaly detector that doesn’t squawk at every 99.97th image. I am interested in helping out with the CV and ML bits if you set up a collaborative project.

Do you think it would be a good idea to implement a Telegram bot on it?

This looks awesome. I have a particular application in mind, which I’ve not seen solved (at least not in the cost-effective, hackable consumer market). In a nutshell, it is nighttime motion detection. Sounds simple enough 😁. It needs an IR camera, of course, and most I’ve tried have an IR source. Alas, these invariably attract insects, moths in particular, which confound motion detectors. More to the point, they trigger detections.

I want to come back in the morning and review detections. It’s no good to me if I have hours of recordings to sift through.

Main applications are rat monitoring, human monitoring and wildlife monitoring. Main use currently is moth monitoring 😉.

With PoE you could set the IR sources apart from the camera, and then the moths will be fluttering around the emitters instead. Then set the minimum detection size to that of a small cat, and that should take care of it.

Have a look at the Frigate project; it does exactly what you want (event monitoring driven by AI detection).

It would be nice to set this up to identify any given “Karen”, i.e., anyone pointing a phone or camera device at my house, and send out an audio response over a loudspeaker informing them that images of my house are copyrighted. 😋

Like the Eiffel Tower – https://www.rd.com/article/eiffel-tower-illegal-photos/

I would need a Vizy plugin for matching images of fastener hardware to ASME B18 PIN.

Thanks for the article.

As soon as you mentioned the birdbath project, I immediately wondered about weather rating – can you speak to that briefly?

Exterior/exposed terminal blocks would suggest not at all waterproof, but thought I’d ask.

Thank you.

You need the $60 optional outdoor enclosure. From their website it looks like a pretty nice option. If only I had money…

——Burton

FWIW, the “Mourning Doves” identified by their Bird application are actually White-winged Doves (_slightly_ smarter cousins). But that’s a training set problem, and they’ve told me they are planning to release a rewrite anyway.

This software sounds amazing. I’m assuming it’s not available by itself and only comes with this specific hardware?

Interesting. I have been looking at various options for taking a sequence of timed photographs. They make cameras for that purpose, but they tend to be bulky or expensive or both. I wound up ordering an old Nexus 6 phone to use with the ‘Open Camera’ application, but this looks like it could be a better option. I assume that there is a Python API for the camera functions (never assume). Is there a PDF document available for the API?